How the SIBUR Innovation Laboratory is organized and why it is needed

A startup or a small company can innovate quickly and flexibly. But what if you are an industrial giant with more than 25 factories across the country, established processes, and budgeting built around continuous production, where every second is accounted for and there is no time to experiment? How do you implement bold ideas under such conditions?

Nevertheless, we were among the first to apply AI in industry and to digitize processes, and we continue to adopt modern technologies and carry out bold projects. Today, SIBUR's portfolio includes more than 30 implemented AI-based cases. More than 200 hypotheses are being worked on, and this number is constantly growing.

To develop and implement innovations, SIBUR has an Innovation Laboratory. For AI-based developments, we organized a separate AI Laboratory. It has no test tubes or lab coats, as many might imagine. Instead, it has computers and data scientists who test hypotheses, evaluate their potential, create and train models, and do many other interesting things.

Structure and Tasks of the Laboratory

Tasks of the Laboratory:

scouting solutions for the needs of functional divisions within SIBUR;

piloting external digital solutions;

monitoring and analyzing technological trends;

opening new research directions;

interacting with the innovation infrastructure (development institutes, innovative companies, accelerators, and venture funds).

We created a separate Laboratory for artificial intelligence because we had accumulated a large pool of noteworthy AI hypotheses. If another direction attracts as much interest and as many hypotheses, a Laboratory can be opened for it as well. In other words, we are open not only to AI but also to other innovations.

Besides AI, we are also interested in other modern trends. For example, we are looking at quantum computing and working on hypotheses for applying it to the company's tasks. When quantum computing capacity becomes available in Russia, we will already understand its potential for our processes.

Why SIBUR Needs an Innovation Lab

An important question: why do we actually need a whole Lab? SIBUR opens projects based on economic feasibility. All projects undergo strict screening of costs and economic effect, and no one will launch a project just because the idea sounds cool but lacks specifics.

Therefore, every idea needs to be validated and evaluated. But for many ideas it is impossible to say in advance what the economic effect will be, or whether there will be one at all. There is also great uncertainty about whether the idea can be implemented.

For such "projects" that have not yet become projects and exist at the level of hypotheses, a Lab was created where we test these hypotheses.

The Lab is a place where we can make mistakes. Within a project, there is no room for mistakes: once a project is opened, it must succeed. Within the Lab, a hypothesis is allowed to fall short of expectations.

In the Lab, we can test hypotheses with little effort and a small budget. Large projects are always expensive. Here, a few specialists can build an MVP and find out how viable the idea is. If the hypothesis passes evaluation and testing, it moves on to become an implementation project.

Where Hypotheses Come From and How We Evaluate Them

Requests to consider AI hypotheses often come to the Lab from colleagues in different departments.

As of the end of December 2024, we have more than 200 hypotheses in progress. And each of them is evaluated according to several criteria:

The availability of data in the company to preliminarily evaluate the hypothesis. If the data is available, we can take it, analyze it, and move on to the next stage of evaluation.

The economic effect for the company. Of course, we cannot fully calculate the economic effect at the initial stages, but we can estimate its scale: for example, whether a successful outcome would bring about 10 or 100 million rubles a year.

After the preliminary evaluation, hypotheses are either rejected or taken into work. Hypotheses that have passed the screening are elaborated. We look at how relevant and in demand they are in the company to determine priority.

For example, if some chatbot helps automate the work of one person, the priority will be low. But if it is a tool that helps a hundred people in the company, the priority will be higher. And if this tool also has a potential economic effect of 100 million rubles, of course, priority will be given to it.
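The screening and prioritization described above can be sketched roughly as follows. This is a minimal illustration, not the Lab's actual scoring method: the `Hypothesis` fields, the `screen` and `prioritize` functions, and the rule "sort by estimated effect, then by number of people helped" are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    name: str
    data_available: bool   # is there data to evaluate the idea at all?
    effect_mln_rub: float  # rough yearly effect, order of magnitude only
    users_helped: int      # how many employees would benefit


def screen(hypotheses):
    """Reject hypotheses that have no data or no expected effect."""
    return [h for h in hypotheses if h.data_available and h.effect_mln_rub > 0]


def prioritize(hypotheses):
    """Order by estimated effect first, then by number of people helped."""
    return sorted(
        hypotheses,
        key=lambda h: (h.effect_mln_rub, h.users_helped),
        reverse=True,
    )


# Hypothetical candidates mirroring the examples in the text.
candidates = [
    Hypothesis("chatbot for one employee", True, 1, 1),
    Hypothesis("tool for a hundred employees", True, 1, 100),
    Hypothesis("tool with a large effect", True, 100, 100),
    Hypothesis("idea without data", False, 100, 100),
]

for h in prioritize(screen(candidates)):
    print(h.name)
```

In this sketch the idea without data is rejected at screening, and the tool that helps a hundred people and promises the larger effect ends up first.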

Trendy LLM and classic ML

All hypotheses developed in the AI Laboratory can be divided into two categories, in which we apply either classic Machine Learning (ML) or Large Language Models (LLM).

The difference between the two areas is that LLMs are a new, promising technology with great potential, while classic ML has been with us for a long time. Since digitalization began at SIBUR, many projects have been implemented. Over seven years they have brought the company more than 50 billion rubles (as of the end of 2024), and half of this comes from ML projects, which is a very impressive figure. This does not mean that the potential of LLMs is less significant; it simply has not been revealed yet.

Typical LLM tasks include searching the knowledge base to produce summaries and conclusions, and gathering information for writing articles. In general, this is everything that produces a result in the form of text.

In classic ML, a significant block of tasks is related to our R&D: experiments in petrochemistry and polymer labeling. In other words, ML is used to solve applied tasks.

Examples of ML tasks:

Polymer labeling for anti-counterfeiting.

Digital modeling of polymer structures for solving applied problems of synthesis and polyolefin processing.

Infrastructure and security for AI projects

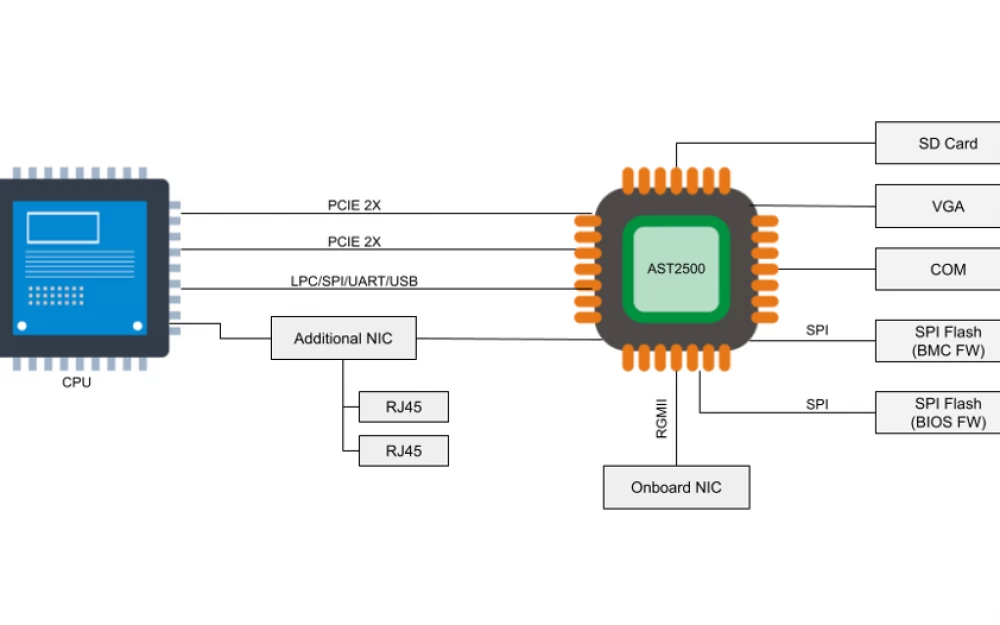

To solve problems in classical ML, we use our own infrastructure.

In working with LLM to test hypotheses, we use various open-source models, as well as Sber's GigaChat. Some hypotheses have already been confirmed and are being prepared for launch in projects in the coming year.

An important issue when training language models in external computing environments is security. To eliminate risks, we anonymize data as thoroughly as possible: all references to real names are removed.
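As a rough illustration of name anonymization, here is a minimal sketch. It assumes a list of known names is available in advance and swaps each one for a placeholder, which keeps the text readable; the function `anonymize` and the `[PERSON_n]` placeholder format are invented for this example and do not describe SIBUR's actual pipeline.

```python
import re


def anonymize(text, names):
    """Replace each known name in `text` with a stable placeholder."""
    if not names:
        return text
    # One placeholder per name, numbered in the order the names were given.
    placeholders = {name: f"[PERSON_{i}]" for i, name in enumerate(names, start=1)}
    # Try longer names first so "Ivan Petrov" wins over "Ivan".
    longest_first = sorted(names, key=len, reverse=True)
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(n) for n in longest_first) + r")\b"
    )
    return pattern.sub(lambda m: placeholders[m.group(0)], text)


print(anonymize("Ivan Petrov approved the request; Ivan signed it.",
                ["Ivan", "Ivan Petrov"]))
```

A fixed list of names only covers names that are known ahead of time and spelled exactly as listed, so a real setup would likely need more than this.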

In the near future, we are going to deploy our own infrastructure with sufficient computing power to implement projects using large language models in the internal information environment.

Promising hypotheses in work

Let's talk a little about what the AI Laboratory is currently working on.

Pipeline analysis — ML

One of the notable cases currently in progress is the detection of defects on pipelines using drones. Drones fly along the pipes and shoot video in the infrared spectrum. This video stream is analyzed by ML algorithms, and if visual anomalies or signs of damage are detected, the program notifies engineers.

The implementation of this case will save man-hours, increase monitoring efficiency, and also automate the process of collecting and processing relevant data.

Test generation — LLM

Another case is related to occupational safety and staff training. To check qualifications or how well a training course has been absorbed, employees take tests. The tests need to be updated constantly because cheat sheets appear, people cheat, and the whole scheme breaks down.

It is laborious to come up with new tests each time. Therefore, we are now testing a model that, based on all the knowledge and regulations that we have, will generate questions and answers to test employees' knowledge.

This is a very relevant story for factories. And not only for factories.

Text generation — LLM

There is also a case with a model that generates texts from our knowledge base in Confluence. The implementation of this hypothesis will allow us to write press releases, articles, presentation plans, and so on.

What you are reading now could have been generated by artificial intelligence, and we would not have to spend time on calls, discussions, iterations. We would just look at it, tweak it a bit, approve it, and post it on tekkix. Super.

But for now, it's still in the works.

Not everything works out

Not all hypotheses can be realized. If you count how many hypotheses turn out to be unsuccessful for one reason or another, it is about half. But that's not bad. That's the point of the Laboratory: you can make mistakes here. If everything worked out right away, why would we need the Laboratory? We could just start the project right away.

For example, there was an interesting hypothesis about procurement. The task was to predict the volume of purchase requests for various products so that we could buy in advance and reduce the risk of production downtime. We made good progress, but we could not build models with the required forecast quality.

For the model to work, it needs the most complete data possible, in which patterns are linked to events; this is what makes forecasting possible. But completeness cannot always be ensured because of the human factor, and the data can also be incorrect.

One of the most common reasons hypotheses fail is inconsistent or insufficient data that does not take all factors into account. SIBUR is actively working on this problem, and we recently published articles about how we replaced our Data Quality stack and how it works.
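A quick way to see whether data is complete enough to try a hypothesis is to measure how often each field is actually filled in. The sketch below is a generic illustration and not part of SIBUR's Data Quality stack; the `completeness` function and the sample purchase-request records are hypothetical.

```python
def completeness(records, fields):
    """Share of records with a non-empty value, per field (0.0 to 1.0)."""
    total = len(records)
    if total == 0:
        return {f: 0.0 for f in fields}
    return {
        f: sum(1 for r in records if r.get(f) not in (None, "")) / total
        for f in fields
    }


# Hypothetical purchase requests with gaps left by manual entry.
requests = [
    {"item": "valve", "qty": 3},
    {"item": "", "qty": 5},
    {"item": "pump"},
    {"item": "seal", "qty": None},
]

print(completeness(requests, ["item", "qty"]))
```

A check like this only shows gaps; it cannot tell whether the values that are present are correct.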

In upcoming articles, we will tell you more about our AI projects. If you have questions or want to share your opinion, write in the comments and we will discuss.
