ChatGPT is still out of reach: what’s happening in the AI market by mid-2025?

Hello! This is Andrey from the channel Runaway Neural Network, and today I suggest we take a look at the AI market. 2025 is hitting the halfway mark, so it’s a good time to see how the standings of the major developers have changed. But first, let’s check out two illustrations. The first is the AI ranking from LMArena.

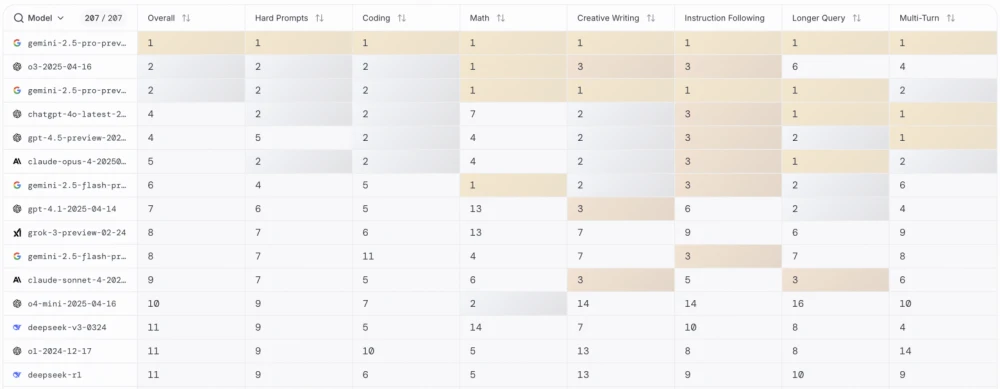

Let me say right away: I can't name an AI ranking that is perfect, but LMArena, in my opinion, comes the closest. The site runs blind comparisons: the user submits a prompt, sees "anonymous" responses from two models, and picks the better one. This keeps the ranking as close as possible to practical AI usage. It clearly shows that the leaders are ChatGPT (OpenAI) and Gemini (Google), followed at a slight distance by Claude (Anthropic) and Grok 3 (xAI), with DeepSeek lagging further behind. We'll look at the models in that order, but first, one more image. This one is from SimilarWeb and estimates how traffic is distributed among AI services.

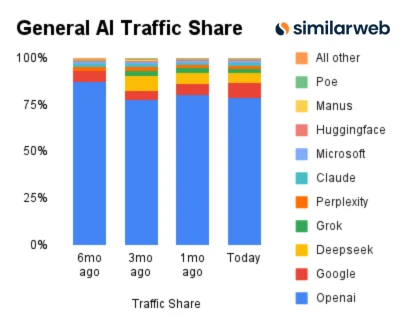

Keep in mind that SimilarWeb’s data is an estimate and does not include, for example, the use of Google models inside the company’s search engine or in the Android operating system. Still, the picture is impressive: nearly 80% of traffic goes to ChatGPT. Early entry into the AI market turned out to be a huge advantage for OpenAI: among my acquaintances, ChatGPT is still synonymous with “artificial intelligence.” So that’s where we’ll start. One note: below I review the models from the perspective of someone willing to pay for one or two basic $20 subscriptions. That is closer to how most people actually use AI than working through the API (my apologies to those who do it that way).

ChatGPT (OpenAI)

Despite some isolated missteps, like the expensive and slow GPT-4.5, the company’s models remain among the strongest. GPT-4o is a great chatbot for daily questions, quick web searches, and general conversation. The reasoning models o3 and o4-mini are meant for coding, solving difficult problems, and deeper web research. I’ll also note that ChatGPT generates images very well, and starting with the Plus subscription it includes Codex, a web agent for programmers (built on the codex-1 model) that can work with a GitHub repository. Some may also find the Deep Research feature handy: the model spends 10-30 minutes searching the Internet and returns with a detailed report.

There are downsides too. In recent months, OpenAI has churned out a huge number of models (in addition to those above, there are GPT-4.5 and GPT-4.1), and choosing among them is not always obvious even for an experienced user. On top of that, many models in the $20 Plus subscription have strict usage limits: the powerful o3 is capped at just 200 queries per week, and GPT-4.5 at only 10 queries. This should change with the launch of GPT-5, which is expected to act as a “router” that analyzes the user’s prompt and decides which model to use. But for now it is what it is.

And the biggest problem for OpenAI: the days when the company was the undisputed market leader, rolling out nearly every AI breakthrough first, are over. It now has at least one serious competitor.

Gemini (Google)

Google-owned DeepMind is one of the veterans of AI development. In 2016, its AlphaGo system defeated Lee Sedol, an 18-time world Go champion, and the later AlphaFold algorithm predicted the three-dimensional structure of about 98% of the proteins in the human proteome, effectively solving the long-standing "protein folding problem." But at the beginning of the year, Google wasn’t exactly shining in the market for commercial models.

Everything changed at the end of March with the release of the first Gemini 2.5 Pro version: the new reasoning model immediately took top spots in most rankings. A faster 2.5 Flash followed, perfect for everyday questions and chat. From my own experience, I’ll add another surprising thing: Google's models are now less censored than others, and often take on prompts that get refusals from ChatGPT and Claude.

Google’s arsenal also includes pretty decent image generation with Imagen 4, as well as Veo 3, which many consider the best video creation system. The company is constantly experimenting with new things: there’s the AI video editor Flow; NotebookLM, a very interesting app for creating summaries; and Jules, the company’s own coding agent. And Gemini’s killer feature is the gigantic 1 million token context window, available even in the $20 Pro subscription. Next to that, the 32,000 tokens in ChatGPT Plus look modest, and OpenAI really needs to catch up here.

I have mixed feelings about the Gemini Pro subscription. On the one hand, it offers some of the best value on the market. For example, 2.5 Pro is limited to 100 requests per day, which is far more than the 200 requests per week you get with the competing o3 in ChatGPT. The subscription also includes AI features in Gmail and Google Docs, 2 terabytes of cloud storage, access to Jules, extra features in NotebookLM, and Veo 3 Fast, the faster version of the video model. There’s also Google AI Studio, where you can currently use the company’s models for free, in exchange for data that Google may use to further train its AI.
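For anyone who wants to check the arithmetic behind these comparisons, here is a minimal Python sketch. It uses only figures quoted in this article (100 requests per day for Gemini 2.5 Pro, 200 per week for o3, and context windows of 1 million versus 32,000 tokens). The variable and function names are made up for illustration, and the weekly figure assumes the daily limit is used up every single day, so treat it as an upper bound.

```python
# Figures quoted in this article (mid-2025, $20 plans).
# All names here are illustrative, not part of any official API.
DAYS_PER_WEEK = 7

gemini_25_pro_per_day = 100          # Gemini 2.5 Pro, requests per day
o3_per_week = 200                    # o3 in ChatGPT Plus, requests per week

gemini_context_tokens = 1_000_000    # Gemini Pro subscription
chatgpt_plus_context_tokens = 32_000 # ChatGPT Plus


def weekly_quota(per_day: int) -> int:
    """Upper bound on weekly requests if the daily limit is exhausted every day."""
    return per_day * DAYS_PER_WEEK


gemini_per_week = weekly_quota(gemini_25_pro_per_day)
quota_ratio = gemini_per_week / o3_per_week
context_ratio = gemini_context_tokens / chatgpt_plus_context_tokens

print(f"Gemini 2.5 Pro: up to {gemini_per_week} requests per week")
print(f"Weekly quota vs o3: {quota_ratio:.1f}x")
print(f"Context window vs ChatGPT Plus: {context_ratio:.2f}x")
```

The limits themselves change often, so swap in current numbers before relying on the ratios.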

On the other hand, Gemini’s services are much rougher than ChatGPT’s. The web interface and mobile apps have lots of bugs, are rarely updated, and lack many important features, like full-fledged chat memory. Interestingly, the Google AI Studio team is developing its “free” project faster: it’s not uncommon for features to show up there before they reach paying subscribers. If it weren’t for the absence of a mobile app, I’d switch to AI Studio.

Claude (Anthropic)

When Google and OpenAI started rolling out product updates at breakneck speed in the spring, there were concerns that Anthropic just wouldn’t keep up and would drop out of the race. But no: the company recently presented the new Claude Sonnet 4 (faster and cheaper) and Claude Opus 4 (smarter), rocketing back to the top of the rankings. Claude models are traditionally beloved by those who use AI for programming, not just for code quality but also for their “aesthetic sense,” as much as that can apply to AI. Ask different AI models to create a website, and Claude’s version is likely to be the best one.

I’d also mention the quality of Claude’s web version and apps, which is even better than ChatGPT’s. I’m especially impressed by the Artifacts mode, where you can immediately see how, for example, a presentation or a simple website would look.

The biggest downside is the dynamic usage limits. Even with a Pro subscription, you never know exactly how many messages you have left—the limit resets every 5 hours and can vary in the morning and evening, depending on server load. If you use Claude for work, there’s always a risk of being left without your assistant at the most critical moment. I’ve already mentioned above that I don’t find working with the API very convenient, but in Claude’s case, this might actually be the preferable option.

Grok 3 (xAI)

Elon Musk’s xAI quickly burst onto the AI scene, building the Colossus supercomputer in record time and training the Grok 3 model, which, at launch in mid-February, became one of the top-rated models. But four months is a long time, and Grok 3 has already lost a lot of ground. Also, while this AI was very lively in conversation at first, xAI recently (for some reason) changed its system prompt, making the model more boring (I explained separately how to restore the old prompt and use it in Gemini, too).

Elon Musk announced Grok 3.5 back in April, boasting that it would be the first AI capable of reasoning from first principles, that is, of tackling problems for which no solution exists online. So far there has been no way to verify this: the release of Grok 3.5 keeps getting delayed because, according to Musk, the model needs more training.

I worked a lot with Grok 3 almost immediately after launch, and I really like this model, which makes me pretty confident Grok 3.5 will be a strong AI, too. But right now, Grok 3 isn’t the best choice: it already lags behind competitors, and for some reason a SuperGrok subscription costs $30 instead of the usual $20. Still, I’ll mention the voice mode, which I like more than the competition’s and which is available even on the free plan. Grok 3 also isn’t harshly censored: it is about on par with Gemini, which I praised earlier.

DeepSeek R1/V3

DeepSeek R1 made a big splash in January: the Chinese company released an AI on the level of the latest ChatGPT models, but free and open-source. After that, everyone expected a miracle in the form of DeepSeek R2, which would leave the competition in the dust. In reality, though, the company released only a minor update to DeepSeek R1 at the end of May, just slightly improving its ranking.

Still, even in this state, DeepSeek R1 remains the best AI for those who just want to “download and have it work”—no fuss with subscriptions or other barriers. In January–February, DeepSeek R1’s free access led to major availability issues, but I haven’t noticed that lately.

Keep in mind that DeepSeek R1 is a bare-bones AI with minimal extra features. There’s no image generation, no voice mode, no analog of Artifacts, or anything else like that. So the model is good for getting started, and once you know what you need, you’ll understand which competitor to switch to.

...and all the rest

A few words about the models that didn’t get their own section:

The Llama 4 family from Meta turned out to be a total flop: the models were tuned for good-looking benchmark numbers. Almost no one mentions them anymore, and according to some reports, Zuckerberg is assembling a new team to develop AI.

Perplexity remains a good choice for web search, but the search functions of the companies listed above have improved so much that I almost don't use it anymore.

Midjourney was recently updated to version 7, which is very good, but the situation is the same: decent image generation is now available in ChatGPT and Gemini, so there is little point in a separate subscription.

P.S. You can support me by subscribing to the channel "Runaway Neural Network", where I talk about AI from a creative perspective.