Overcoming Turbulence in AI Security

Hello, this is Elena Kuznetsova, an automation specialist at Sherpa Robotics. Today I am sharing my translation of an article about security in the field of artificial intelligence. Applying old security models to the new challenges of artificial intelligence turns enterprises into laboratories for studying the unpredictability of turbulence.

The famous German physicist Werner Heisenberg allegedly said on his deathbed: "When I meet God, I will ask him two questions: why relativity theory and why turbulence? I am confident that he will answer the first question."

This quote is often mistakenly attributed to Albert Einstein, but what matters here is not the authorship but the idea itself: scientists feel uncomfortable trying to model systems whose behavior cannot be accurately predicted. Turbulence is one of the hardest phenomena in hydrodynamics to predict and to describe mathematically.

Today we find ourselves in a similar situation with security in the age of artificial intelligence. The volume and speed of code generation create loads that the old software security models were not built to handle. We are faced with a phenomenon similar to turbulence in fluid dynamics, where familiar methods no longer work.

How turbulence destroys models

Let's start with a basic concept of hydrodynamics: laminar flow, which describes the orderly movement of fluid through a pipe. The term comes from the Latin lamina, "layer"; think of the layers in laminate or in a croissant. Fluid particles slide past each other in ordered layers. In this regime there is no mixing between layers: the water in the center of the pipe moves faster and the water near the walls moves slower, forming a perfectly predictable picture.

Under ideal conditions, this flow can be modeled using a simple curve that accurately relates pressure and velocity.
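For reference, the "simple curve" can be written down explicitly. The article does not name it, so treat the following as an assumption about which model is meant: the textbook Hagen-Poiseuille result for steady, incompressible flow of a Newtonian fluid through a straight circular pipe. Here R is the pipe radius, L its length, mu the dynamic viscosity, Delta p the pressure drop along the pipe, u(r) the velocity at distance r from the axis, and Q the volumetric flow rate.

```latex
u(r) = \frac{\Delta p}{4 \mu L}\left(R^{2} - r^{2}\right),
\qquad
u_{\max} = u(0) = \frac{\Delta p \, R^{2}}{4 \mu L},
\qquad
Q = \int_{0}^{R} u(r)\, 2\pi r \, dr = \frac{\pi R^{4} \, \Delta p}{8 \mu L}
```

The velocity profile is a parabola: fastest on the axis and zero at the wall, which matches the picture of layers sliding past each other.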

Laminar flow is a kind of ideal that is easy to model and predict. However, the real world is not always so simple. Once you increase the flow rate, change the pipe diameter, or decrease the viscosity, the system leaves its predictable state. Turbulence begins.

Turbulence is when familiar patterns break down. Water stops moving in layers, particles start moving between streams, and the behavior of the fluid becomes unpredictable. Although theoretically scientists can try to model such situations using computer simulations, it is very difficult to explain why a particular process occurs.

Turbulence is an example of a system whose behavior is difficult to predict at the level of an individual particle. However, using methods such as linear regression, it is possible to create models that describe general trends given the input parameters. The only question is what assumptions underlie these models.
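A minimal sketch of that idea, under stated assumptions. The data below is synthetic and purely illustrative, not measured: it is generated from the Blasius correlation for turbulent flow in smooth pipes, f = 0.316 * Re^(-0.25), where Re is the dimensionless Reynolds number and f the friction factor, with a small fixed perturbation added. The correlation, the helper names, and the numbers are not from the article. A straight line is fitted in log-log space, so the regression recovers the overall trend without saying anything about individual particles.

```python
import math


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic, illustrative data (assumption): Blasius friction factors
# multiplied by a small fixed perturbation to mimic measurement scatter.
reynolds = [5_000, 10_000, 20_000, 40_000, 80_000]
perturbation = [1.02, 0.98, 1.01, 0.99, 1.00]
friction = [0.316 * re ** -0.25 * p for re, p in zip(reynolds, perturbation)]

# Fit log(f) against log(Re): a power law becomes a straight line.
slope, intercept = fit_line(
    [math.log(re) for re in reynolds],
    [math.log(f) for f in friction],
)


def predict_friction(re):
    """Predict the friction factor from the fitted trend line."""
    return math.exp(intercept + slope * math.log(re))


print(f"fitted exponent: {slope:.3f}")
print(f"predicted friction factor at Re=30000: {predict_friction(30_000):.4f}")
```

The fitted exponent lands close to the -0.25 used to generate the data. The model is only as good as its assumptions: it is fitted on turbulent-range values and says nothing reliable outside them.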

Software security in a transitional flow state

Today, many developers use AI tools for faster code development, but few use AI to review change requests and the code their team writes. It's like moving from laminar flow to turbulence.

Laminar flow is the state assumed by most of the models we use to write and secure software. Developers write code, conduct reviews, and check changes at the level of individual lines and pull requests to find defects and vulnerabilities.

In open source projects, there is a list of maintainers who review code from contributors. Vulnerability researchers are constantly finding new issues, which receive unique CVE identifiers and are tracked in tables. All this is easy to understand and model.

There is a principle: all models are wrong, but some are useful. The point is that these models and approaches work when you clearly understand what phase (mode) you are in and know how to build such models correctly.

But if developers use AI to create an ever-increasing amount of code without applying the same tools to review it, we start to overload the system: the "pipeline" cannot handle this volume, and previous assumptions begin to break down.

In hydrodynamics, the transition from one state to another is called transitional flow. This is the most difficult type of system to model. The transition does not occur at one precise point; it can only be estimated using empirical limits and coefficients, and even then it requires a lot of engineering experience. It is important to understand in which mode the system operates: laminar or turbulent.
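The usual coefficient for this estimate is the Reynolds number, Re = rho * v * D / mu, which the article does not name. The sketch below is a rough illustration under assumptions: the thresholds of about 2300 and 4000 are commonly quoted rules of thumb for flow in a circular pipe, not sharp physical limits, and the water properties are approximate values at room temperature.

```python
def reynolds_number(density, velocity, diameter, viscosity):
    """Dimensionless Reynolds number Re = rho * v * D / mu (SI units)."""
    return density * velocity * diameter / viscosity


def flow_regime(re, laminar_max=2300.0, turbulent_min=4000.0):
    """Classify pipe flow using rule-of-thumb thresholds (assumed values)."""
    if re < laminar_max:
        return "laminar"
    if re > turbulent_min:
        return "turbulent"
    return "transitional"


# Approximate properties of water near 20 degrees C (assumed values).
WATER_DENSITY = 998.0      # kg/m^3
WATER_VISCOSITY = 1.0e-3   # Pa*s
PIPE_DIAMETER = 0.02       # m, a hypothetical 2 cm pipe

for velocity in (0.05, 0.15, 1.0):  # m/s
    re = reynolds_number(WATER_DENSITY, velocity, PIPE_DIAMETER, WATER_VISCOSITY)
    print(f"v = {velocity} m/s, Re = {re:.0f}, regime: {flow_regime(re)}")
```

With these inputs the three velocities fall into the laminar, transitional, and turbulent bands respectively. Raising the velocity or diameter, or lowering the viscosity, pushes Re up and the flow toward turbulence.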

Artificial Intelligence and Security

When a pipe bursts, you can clearly observe the transition from laminar flow to turbulence. At first the water flows smoothly, but a moment later the pipe ruptures with a bang. This is the moment when the system collapses under the load.

Today we are on the verge of a similar transition in the field of artificial intelligence security. Not all is lost, but it is time to rethink our approach. We can still achieve good results and protect systems, but our existing security models are clearly not coping with the new volume and speed of change that AI is causing.

As the role of artificial intelligence in software development grows, the scale of its use will only increase. Developers are writing more and more code with AI systems. And any code, as we know, contains bugs, some of which can significantly affect security. The more code is created, the more vulnerabilities appear in the system.

Security researchers are becoming more productive in finding known vulnerabilities. If they start finding more vulnerabilities than teams can process and fix, it leads to a loss of trust in systems and their security.

At the same time, AI systems are being deployed in production environments at an unprecedented rate, often with access to sensitive data. This makes safe deployment even harder, because the stakes are higher. Companies are striving to integrate AI into their infrastructure faster than anything I have seen in my entire career.

Instead of observing a predictable and understandable process, like laminar flow, we have to work with a messy, turbulent system in which we cannot understand or control every component.

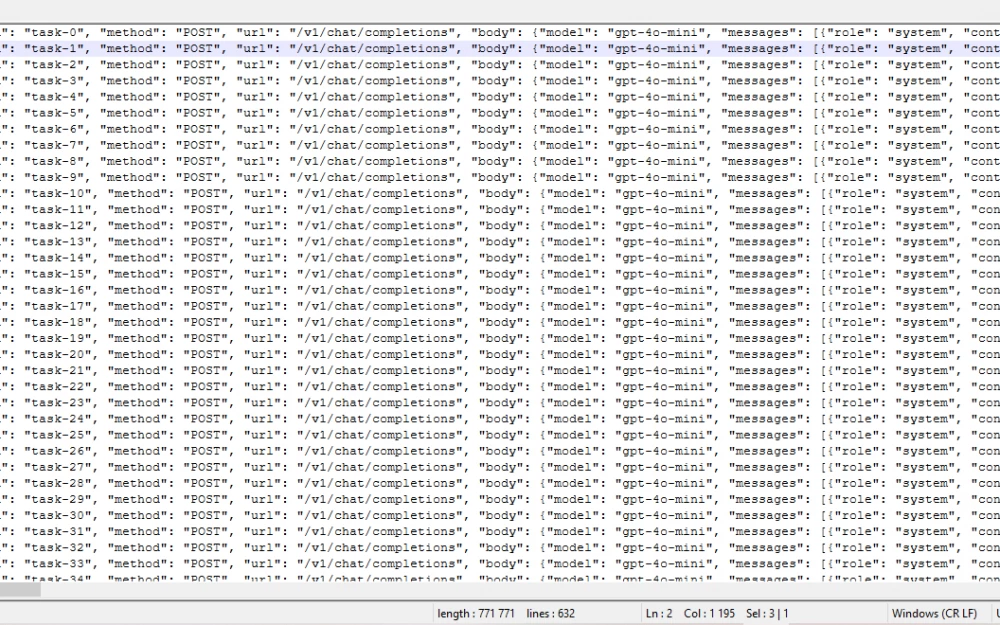

We are forced to adopt new methods. Developers need not only to write code faster but also to identify and fix vulnerabilities quickly enough to keep up with the pace of development. Security teams need to identify and handle new vulnerabilities faster than today's approach of tables and lists allows.

Simple arithmetic is not enough here. We need to change the very way of thinking and move to probabilistic or statistical methods.
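One way to picture the shift, as a rough sketch and not a method proposed by the article: instead of tracking every finding by name, estimate for each one a probability of exploitation and an impact, then spend limited fixing capacity where the expected loss is highest. The `Finding` class, its field names, and all the numbers below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    identifier: str
    exploit_probability: float  # estimated chance of exploitation, 0..1
    impact: float               # relative cost if exploited, arbitrary units

    @property
    def expected_loss(self):
        return self.exploit_probability * self.impact


def prioritize(findings, capacity):
    """Return the `capacity` findings with the highest expected loss."""
    ranked = sorted(findings, key=lambda f: f.expected_loss, reverse=True)
    return ranked[:capacity]


def residual_risk(findings, fixed):
    """Sum of expected loss over the findings that were not fixed."""
    fixed_ids = {f.identifier for f in fixed}
    return sum(f.expected_loss for f in findings if f.identifier not in fixed_ids)


# Hypothetical findings with made-up estimates, for illustration only.
findings = [
    Finding("finding-a", exploit_probability=0.02, impact=100.0),
    Finding("finding-b", exploit_probability=0.60, impact=10.0),
    Finding("finding-c", exploit_probability=0.30, impact=50.0),
    Finding("finding-d", exploit_probability=0.01, impact=20.0),
]

to_fix = prioritize(findings, capacity=2)
print([f.identifier for f in to_fix])
print(f"residual expected loss: {residual_risk(findings, to_fix):.1f}")
```

The team accepts that some findings stay unfixed and manages the aggregate risk. The hard part in practice is producing the probability and impact estimates, which this sketch simply takes as given.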

We need to start treating systems as "cattle" rather than "pets". This is an analogy often used in the context of infrastructure: if you have only a few servers, you can address each one by name and monitor each one individually. But if the number of such systems increases 20 times, it will be impossible to do so.

Growth of attacks on AI systems

This transition is already beginning, but it is not yet evenly distributed.

Developers can no longer keep up with the code review process because a significant portion of it comes from sources they do not trust, and finding vulnerabilities in such code is becoming increasingly difficult.

But attackers understand that attacking systems is precisely the area where large language models can show their advantages. Mistakes cost attackers almost nothing: they can repeat their attempts endlessly, while defenders must always be flawless. This imbalance gives security teams the same feeling physicists have when working with turbulent systems.

What should we do in such conditions? We need to get used to working in a situation where there are too many vulnerabilities to give them unique names, where we cannot rely on people to catch all security defects during code review.

We have already walked this path

This AI gold rush is not the first time the industry has had to master a new format and learn to trust the security of software components created by other people. We have been dealing with this successfully for several decades, for example with open source.

The infrastructure needed to run AI workloads largely coincides with that used for other purposes: essentially, it is Kubernetes with the addition of CUDA.

Many of us still remember how difficult it was to implement secure multi-tenant applications on Kubernetes, and securing AI workloads is no more complicated than that.

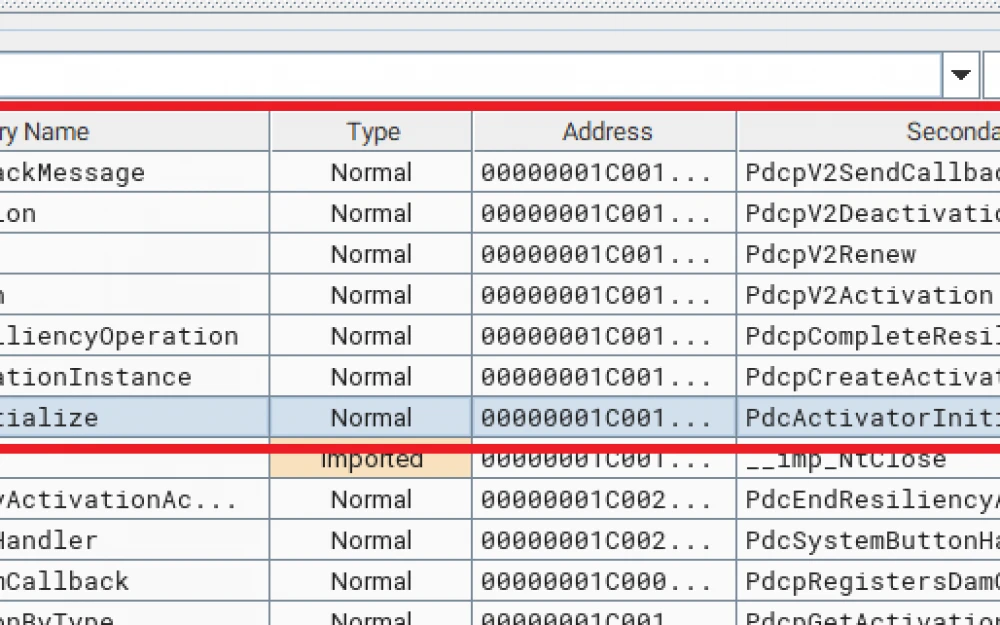

Working with the source code of AI toolkits shows that we are in an extremely fast-changing, turbulent security environment. For example, PyTorch builds only work with specific versions of CUDA, which in turn require older versions of the GCC/Clang compilers. Building packages can take several hours, even on powerful systems.

And one more alarming detail: the state of supply chains. Most links to low-level libraries lead to random forums and repositories where verifying the authenticity of information is often impossible. If a vulnerability appeared in one of these libraries, the consequences could be catastrophic.
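The most basic building block for this kind of verification is comparing a downloaded artifact against a digest pinned from a trusted channel. The sketch below uses only Python's standard library; the function names and sample bytes are hypothetical. Note its limit: a matching digest only shows that the bytes are the ones that were pinned, not who published them, which is what signatures are for.

```python
import hashlib
import hmac


def sha256_of(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a lowercase hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_pinned_digest(data: bytes, pinned_hex: str) -> bool:
    """Check `data` against a pinned digest using a constant-time comparison."""
    return hmac.compare_digest(sha256_of(data), pinned_hex.lower())


# Hypothetical artifact contents. In practice the pinned digest would come
# from a trusted source such as a lock file, not be computed on the spot.
artifact = b"example library contents"
pinned = sha256_of(artifact)

tampered = artifact + b"!"

print(matches_pinned_digest(artifact, pinned))   # the original bytes match
print(matches_pinned_digest(tampered, pinned))   # any change breaks the match
```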

In addition, many of these libraries process untrusted, raw data for training, which further complicates the security situation.

Despite all this, it is worth remaining optimistic in the long term. AI can become an important tool for processing noise in large volumes of data and identifying important signals. But, as we have learned over decades of working with open source, it is necessary to start with a reliable security foundation and build everything correctly from the very beginning.

Chainguard, for example, has introduced a set of AI images that covers the entire AI workflow, from development to data storage in vector databases. By addressing the AI supply chain at the raw material level, including software signatures, software bills of materials (SBOMs), and CVE fixes, we are widening the "pipes" and creating guardrails for large-scale ambitions in AI system development.

Ultimately, the security of AI systems will depend on how quickly and effectively we adapt our approaches to this new world.
