
AI Security with a French Flavor or an Analysis of Securing Artificial Intelligence from ETSI. Part 1

Artificial intelligence technologies are developing rapidly, but with new opportunities come new risks. Prompt injections, abuse of agent tools, vulnerabilities in the orchestration of complex systems—the range of threats to AI is increasing. While the US and China compete in the efficiency and quality of generative models, Europeans are adopting AI security standards. A couple of years ago, the European Telecommunications Standards Institute created an AI protection committee to develop a comprehensive set of AI security standards. The committee’s working group has produced a wealth of reports, now totaling as many as 10 artifacts. In this first part, we delve into ETSI SAI’s initial AI security reports.

My name is Evgeny Kokuykin. We are developing HiveTrace, a product for protecting genAI systems, and studying new types of attacks on generative systems in the ITMO laboratory. A couple of weeks ago, a colleague from the OWASP working group announced the release of report TS 104 223 "Baseline Cyber‑Security Requirements", and a closer look showed that the ETSI SAI publications of the last two years needed to be systematized. In this article, we’ll try to extract what’s useful in practice and discard what has already become outdated over the past few months and is hardly applicable now.

ETSI is an independent nonprofit organization, recognized by the EU as one of three official European standardization bodies alongside CEN and CENELEC. They regularly issue global standards for telecom, support European regulation, and include leading industry companies (for example, Rostelecom was once a participant). ETSI’s work directly affects the governance of large organizations that do business in the EU and interacts with other organizations for releasing standards and regulations.

Everyone has seen the CE mark on toys, which the manufacturer applies to declare that a product conforms to EU requirements. Roughly speaking, ETSI is one of just three organizations that write the standards behind such marking, though of course for telecom rather than toys.

The ETSI technical committee SAI (Securing Artificial Intelligence) develops specifications that: 1) protect AI components from attacks; 2) reduce the likelihood of threats in AI systems; 3) use AI to strengthen cyber protection; 4) consider the social and ethical aspects of security.

ETSI SAI currently has 10 AI-related documents available; the full list is below.

| Date and Version | Document | Brief Content |
| --- | --- | --- |
| July 2024 V1.1.1 | TR 104 066 «Security Testing of AI» | Fuzzing, adversarial attack methods, and coverage evaluation for testing AI components |
| July 2024 V1.2.1 | TR 104 222 «Mitigation Strategy Report» | Catalog of protective measures against poisoning, backdoor, evasion, and others |
| January 2025 V1.1.1 | TR 104 221 «Problem Statement» | Basic justification of AI system risks at all stages of the lifecycle |
| January 2025 V1.1.1 | TR 104 048 «Data Supply Chain Security» | Data integrity and mechanisms to preserve it in agent chains |
| March 2025 V1.1.1 | TS 104 224 «Explicability & Transparency» | Static and runtime explainability templates, logging requirements |
| March 2025 V1.1.1 | TR 104 030 «Critical Security Controls for AI Sector» | Adaptation of CIS Controls to the AI landscape |
| March 2025 V1.1.1 | TS 104 050 «AI Threat Ontology» | Taxonomy of attack targets and threat surfaces |
| April 2025 V1.1.1 | TS 104 223 «Baseline Cyber-Security Requirements» | 13 baseline principles (Design → EOL) and ≈80 provisions for models and systems |
| May 2025 V1.1.1 | TR 104 065 «AI Act mapping & gap analysis» | Mapping of EU AI Act articles with ETSI work plan |
| May 2025 V1.1.1 | TR 104 128 «Guide to Cyber-Security for AI Systems» | Practical guide for implementing TS 104 223 requirements |

As the table shows, the documents were released not all at once but in waves, which will matter when we start analyzing them in more detail.

First wave (07-2024) — methodology: testing and countermeasure catalog.

Second wave (01-2025) — foundation: problem formulation and data supply chain security.

Third wave (03-2025) — thematic specifications for threats, transparency, and controls.

Fourth wave (04-2025) — unified baseline requirements for implementation.

Fifth wave (05-2025) — alignment with legislation (AI Act) and guide for practical implementation.

Thus, the historical sequence shows a gradual shift from describing threats and tests → to process requirements → to regulatory mapping and implementation guidance in agent AI systems.

Let's dive into the documents.

TR 104 066 «Security Testing of AI»

The document is focused on predictive ML models. It describes fuzzing, differential testing, and a whole range of adversarial algorithms, but does not mention LLMs, prompt injection, jailbreaks, or other attacks specific to genAI. Gradient-based attacks can be transferred to GenAI, but they are not currently the main attack tool.

When I started reading this guide, I expected to find ideas for specific attacks for LLAMATOR here, but there are no practical "recipes" with ready-made code in the document. All techniques are presented as formulas, pseudocode, and comparative efficiency tables.

Basically, this is a reference book on fundamental neural network robustness testing techniques, so if you’re interested in red teaming, you’re better off using the OWASP red team guide (link).
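To give a feel for what differential testing means in practice, here is a minimal sketch. It is not taken from TR 104 066: the two toy classifiers `model_a` and `model_b`, their weights, and the helper `differential_test` are all hypothetical and stand in for two implementations of the same model that are expected to agree.

```python
import random
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]
Classifier = Callable[[Vector], int]


def model_a(x: Vector) -> int:
    """Hypothetical reference model: a fixed linear threshold."""
    return int(0.8 * x[0] + 0.2 * x[1] > 0.5)


def model_b(x: Vector) -> int:
    """Hypothetical second implementation with slightly different weights."""
    return int(0.75 * x[0] + 0.25 * x[1] > 0.5)


def differential_test(
    f: Classifier, g: Classifier, n_cases: int = 1000, dim: int = 2, seed: int = 0
) -> List[Tuple[float, ...]]:
    """Feed the same random inputs to both models and collect disagreements."""
    rng = random.Random(seed)
    disagreements = []
    for _ in range(n_cases):
        x = tuple(rng.random() for _ in range(dim))
        if f(x) != g(x):
            disagreements.append(x)
    return disagreements


found = differential_test(model_a, model_b)
print(f"{len(found)} disagreements out of 1000 random inputs")
```

Every input in `found` is a candidate for closer inspection: one of the two models is wrong there, or the specification is ambiguous. Real test suites replace the uniform random sampling with fuzzing or adversarial search, which is the part the report covers in formulas.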

Next up is TR 104 222 “Mitigation Strategy Report”

This document features a taxonomy of attacks on ML models (CV, NLP, audio). There’s no direct mention of LLMs, even though the document was published in 2024. The first version was prepared back in 2021, and apparently the authors just wanted to finish what they started without touching on language models.

Below is an example of a taxonomy of attacks; for this article, I've abbreviated it a bit, but you can get the idea.

| Attack type | Examples of defense measures recommended in ETSI TR 104 222 |
| --- | --- |
| Poisoning (data poisoning) | • Dataset sanitization • Blocking suspicious samples before training |
| Backdoor (inserting during training) | • Dataset sanitization • Trigger detection • Detection of backdoor behavior |
| Evasion (bypass at inference stage) | • Input data preprocessing • Model hardening • Model robustness assessment • Detection of adversarial examples |
| Model stealing | • Limiting the number of API requests • Model output obfuscation • Model fingerprinting |
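Of the measures in the table, limiting the number of API requests is the easiest to illustrate. Below is a minimal sketch of a per-client sliding-window limiter; the class name `SlidingWindowLimiter`, the client identifier, and the limits are assumptions made for illustration, since the report itself gives no implementation.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client_id: str, now: Optional[float] = None) -> bool:
        # `now` can be injected for testing; otherwise use a monotonic clock.
        now = time.monotonic() if now is None else now
        history = self._history[client_id]
        # Drop timestamps that have fallen out of the window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_requests:
            return False
        history.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60.0)
decisions = [limiter.allow("client-42", now=t) for t in (0.0, 1.0, 2.0, 3.0, 61.0)]
print(decisions)  # [True, True, True, False, True]
```

A limiter like this only slows down model extraction. It raises the cost of collecting enough query/response pairs, which is why the table pairs it with output obfuscation and fingerprinting.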

The bottom line of the document: the authors acknowledge that the material is again academic; you won’t find any specific recommendations or calls to action here, which is a shame—the title was promising.

Two documents after a long pause: TR 104 221 “Problem Statement” and TR 104 048 “Data Supply Chain Security”

The first describes threats to machine learning systems along two axes: lifecycle stage, and type of attack/misuse. To the issues from the table above, two new classes are added:

Improper use of artificial intelligence:

- ad-blocker bypass
- malware code obfuscation
- deepfake content
- imitation of handwriting or voice
- fake conversations, etc.

And systemic/process risks:

- bias
- ethical flaws
- lack of explainability
- software/hardware vulnerabilities

This doesn’t rise to the level of a full threat model; the document is short, more like a memo for internal corporate mailing.

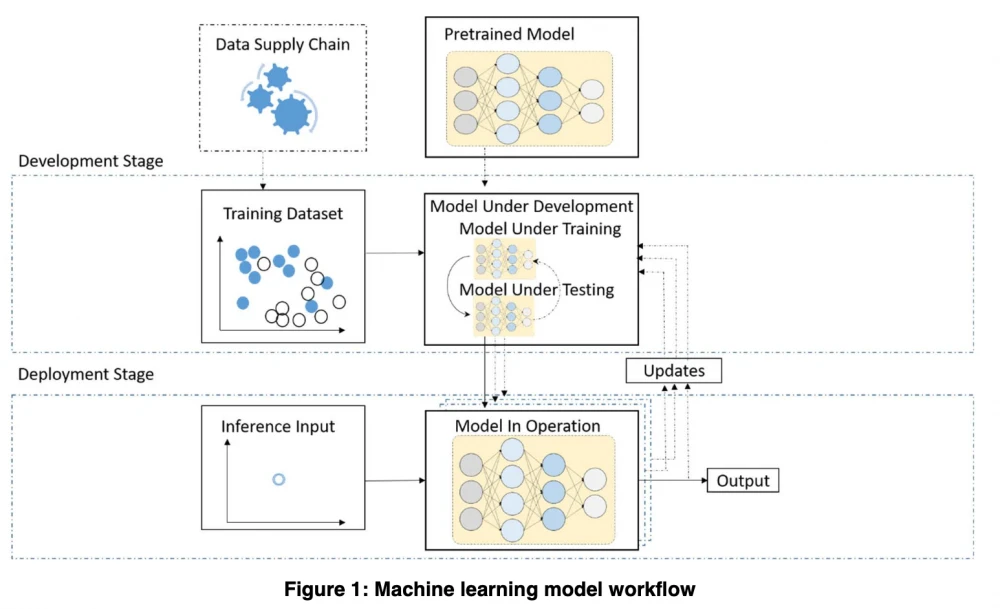

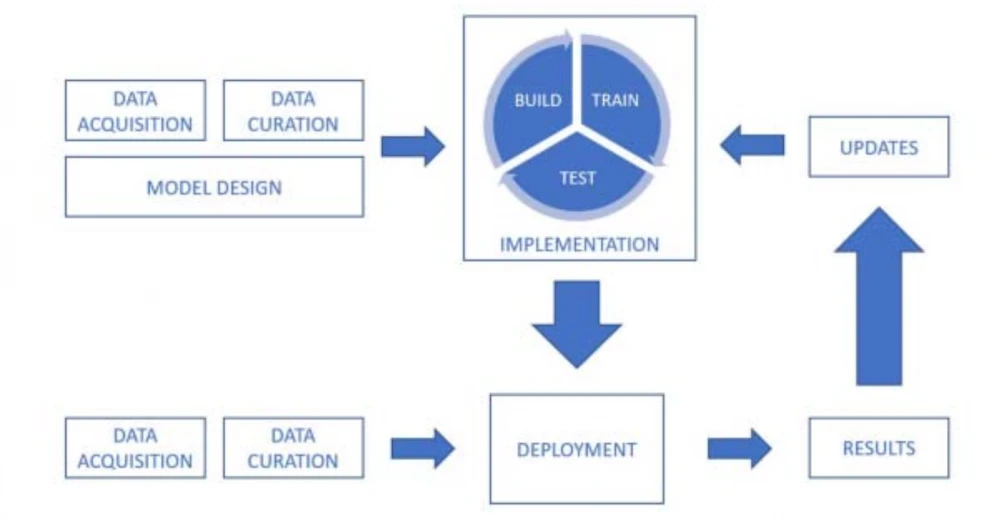

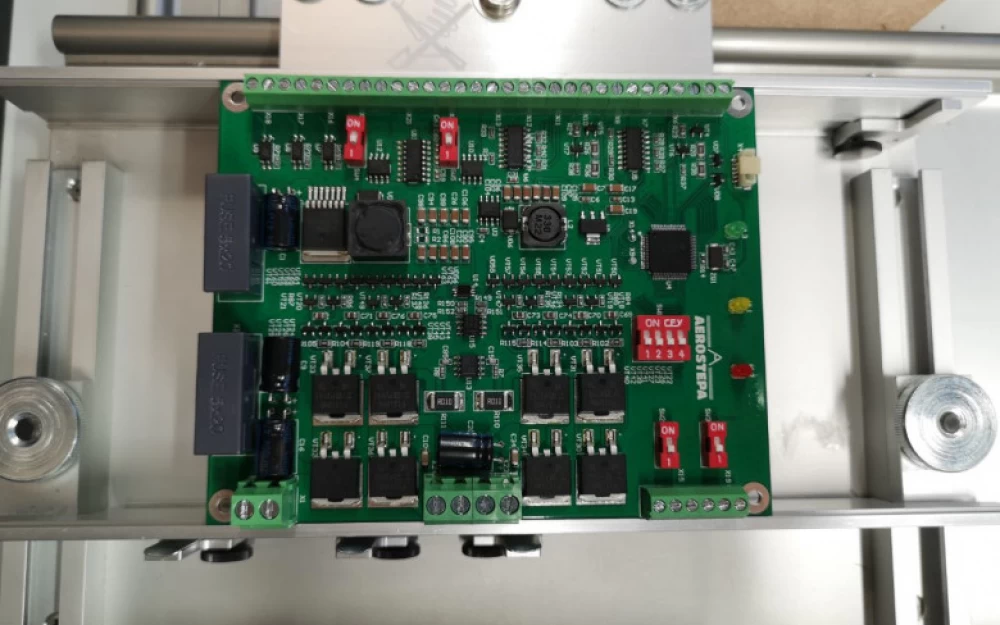

The second document once again touches on dataset topics and protection against various types of data poisoning and training pipeline compromises. Below is an illustration of the training pipeline that the document revolves around.

At the end of the document, recommendations are given for protecting the supply chain for models:

- Using hash sums to protect and verify data integrity.
- Further training of models only on verified or trusted data.
- Following standard cybersecurity recommendations, such as the principle of least privilege when accessing data and the supply chain.
- Logging at all stages of processing and deployment, including model telemetry collection.
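The hash-sum recommendation above can be sketched as a small manifest check. This is an illustration under stated assumptions, not code from TR 104 048: the helper names `sha256_of`, `build_manifest`, and `verify_manifest`, and the CSV file names, are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> Dict[str, str]:
    """Map every file under data_dir (by relative path) to its SHA-256 digest."""
    return {
        p.relative_to(data_dir).as_posix(): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def verify_manifest(data_dir: Path, manifest: Dict[str, str]) -> List[str]:
    """Return relative paths that are missing, modified, or unexpected."""
    current = build_manifest(data_dir)
    problems = [name for name, digest in manifest.items() if current.get(name) != digest]
    problems += [name for name in current if name not in manifest]
    return sorted(problems)


# Demo on a throwaway directory: record a manifest, then tamper with one label.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train.csv").write_text("text,label\nhello,0\n")
    (root / "eval.csv").write_text("text,label\nbye,1\n")
    manifest = build_manifest(root)
    clean_report = verify_manifest(root, manifest)
    (root / "train.csv").write_text("text,label\nhello,1\n")
    tampered_report = verify_manifest(root, manifest)

print(clean_report, tampered_report)  # [] ['train.csv']
```

The manifest itself has to be stored somewhere the training pipeline cannot write to, otherwise an attacker who can poison the data can also regenerate the hashes.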

TS 104 224 “Explicability & Transparency”

A document that immerses the reader in the following concepts:

Transparency — the system is “open for inspection,” there are “no hidden parts”; any operation can be externally audited

Explicability — the ability to show how exactly the system came to the result (“showing step-by-step how the task was solved”)

To ensure transparency of AI systems, the authors propose the following actions:

| Measure | Proposed actions |
| --- | --- |
| Static Explicability – AI system passport | • Describe each AI system in terms of: system purpose, data sources, their role, methods for evaluating data quality, responsible person. • Answer questions: where is the data from? how was authenticity verified? what measures against bias? |
| Run‑Time Explicability (RTE‑service) | • Collect information about every model decision: event time, software and hardware versions, system performance metrics • Define which events to log, so as not to overload the system (§ 6.4). |
| XAI methods – explainability techniques that help understand decision rationale | • Use XAI to search for false patterns and check user trust in the system. |
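The first two rows can be pictured as two record types: a static passport filled in once per system, and a run-time record written per decision. The sketch below is only an illustration. The class and field names (`SystemPassport`, `DecisionRecord`, and so on) and the sample values are assumptions, not the templates defined in TS 104 224.

```python
import json
import platform
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass
class SystemPassport:
    """Static explicability: filled in once per AI system (hypothetical fields)."""
    purpose: str
    data_sources: List[str]
    data_quality_checks: List[str]
    bias_measures: List[str]
    responsible_person: str


@dataclass
class DecisionRecord:
    """Run-time explicability: one record per model decision (hypothetical fields)."""
    model_version: str
    decision: str
    metrics: Dict[str, float]
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())
    software: str = field(default_factory=platform.python_version)
    hardware: str = field(default_factory=platform.machine)


def to_log_line(record: DecisionRecord) -> str:
    """Serialize a decision record as one JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


passport = SystemPassport(
    purpose="Ticket triage",
    data_sources=["internal helpdesk archive"],
    data_quality_checks=["deduplication", "label spot checks"],
    bias_measures=["per-language accuracy review"],
    responsible_person="ml-owner@example.com",
)
record = DecisionRecord(model_version="1.0.0", decision="route_to_billing", metrics={"latency_ms": 12.5})
line = to_log_line(record)
print(line)
```

Deciding which decisions produce a `DecisionRecord` at all is the hard part in production: logging every inference of a high-traffic model can cost more than the model itself, which is the overload concern the table refers to.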

TR 104 030 “Critical Security Controls for AI Sector”

Essentially, TR 104 030 answers a single question: do the controls listed in the older ETSI TR 103 305 checklist need to change when applied to AI systems? Some of the security controls from the original source are listed below:

| Action | Reference to original TR 103 305 |
| --- | --- |
| Implement a full inventory of assets: models, datasets, pipelines | CSC 1 (“Inventory and Control of Enterprise Assets”) and CSC 2 (“Inventory and Control of Software Assets”) |
| Include continuous vulnerability management for models and their environments | CSC 7 (“Continuous Vulnerability Management”) |
| Set up logging and monitoring of models/agents’ activities | CSC 8 (“Audit Log Management”) + facilitation methods from TR 103 305-4 |
| Conduct testing and “purple-team” exercises against LLMs/agents | CSC 18 (“Penetration Testing”) together with MITRE ATLAS for AI attack scenarios |
| Ensure control of personal data in training, test, and inference datasets | CSC 5 (“Account Management”) and privacy recommendations from TR 103 305-5 |

The report confirms the applicability of the existing Critical Security Controls methodology and does not introduce new methodologies.

Here we will pause our review of the ETSI Securing Artificial Intelligence document package. Many of the documents do not have an explicit focus on LLM issues and are intended for working with classic ML models. In the next part, we'll look at the latest documents from May, which are related to the newly enacted EU AI Act and already touch on the challenges of LLM-based and agent systems.

P.S. I often write about AI Security in my Telegram channel https://t.me/kokuykin, subscribe so you don't miss the next part.
