Detecting DGA Domains, or a Test Task for an ML Engineer Intern Position

In this article, we will look at a simple task that one company uses as a test assignment for ML engineer intern candidates.

It involves detecting DGA domains, a task that can be solved with basic machine learning tools. We will show how to handle it using the simplest methods. Knowledge of complex algorithms is important, but to demonstrate your skills it matters much more to understand basic concepts and be able to apply them in practice.

DGA (Domain Generation Algorithm) is an algorithm that automatically generates domain names. Attackers often use it to bypass blocklists and keep malware in contact with its command-and-control servers.
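To make the idea concrete, here is a minimal toy sketch of how such an algorithm can work. It is not taken from any real malware family: the function name `toy_dga`, the seed string, and the hashing scheme are all assumptions chosen for illustration. The point is only that two parties sharing a seed and a date can compute the same list of names independently.

```python
import hashlib
from datetime import date


def toy_dga(seed, day, count=5, tld=".com"):
    """Derive `count` pseudo-random domain names from a shared seed and a date.

    Hypothetical illustration only; real DGAs differ in their details.
    """
    domains = []
    for index in range(count):
        # Both sides hash the same inputs, so they compute the same names.
        material = f"{seed}-{day.isoformat()}-{index}".encode()
        digest = hashlib.sha256(material).hexdigest()
        # Map each of the first 12 hex digits to one of the letters a..p.
        label = "".join(chr(ord("a") + int(ch, 16)) for ch in digest[:12])
        domains.append(label + tld)
    return domains


print(toy_dga("example-seed", date(2024, 1, 1)))
```

Names produced this way have no dictionary words or natural syllables, which is the property the classifier in this article relies on.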

The technical task included test data for which predictions needed to be made, and validation data on which metrics needed to be demonstrated in the format:

True Positive (TP):

False Positive (FP):

False Negative (FN):

True Negative (TN):

Accuracy:

Precision:

Recall:

F1 Score:
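A report in this format can be produced with a few lines of plain Python. The sketch below is one possible way to do it, assuming labels are encoded as 1 for DGA and 0 for legitimate domains; the helper names `confusion_counts` and `format_report` are made up for this example.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, FN, TN for binary labels (1 = DGA, 0 = legitimate)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def format_report(tp, fp, fn, tn):
    """Build the report lines; ratios fall back to 0.0 when undefined."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return [
        f"True Positive (TP): {tp}",
        f"False Positive (FP): {fp}",
        f"False Negative (FN): {fn}",
        f"True Negative (TN): {tn}",
        f"Accuracy: {accuracy:.4f}",
        f"Precision: {precision:.4f}",
        f"Recall: {recall:.4f}",
        f"F1 Score: {f1:.4f}",
    ]


y_true = [1, 1, 0, 0, 1]
y_pred = [1, 0, 0, 1, 1]
print("\n".join(format_report(*confusion_counts(y_true, y_pred))))
```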

Sometimes companies do not provide training data and want to assess how well you can find solutions on your own. This includes:

Understanding the problem: Clear formulation of the task.

Methodology: Development of an action plan and selection of methods.

Critical thinking: Data analysis and hypothesis generation.

Practical skills: Application of basic machine learning concepts.

It is important to demonstrate initiative and the ability to work with limited information. In our case, the domains of existing companies can be found on Kaggle, and we will need to generate non-existent domains ourselves.

High-quality, diverse data lets algorithms identify patterns and make accurate predictions; without good data, good results in machine learning are out of reach. So the first step is to build a high-quality training set:

Write functions to generate random strings and domain names. The `generate_random_string` function generates a string of a given length with letters and, optionally, digits. The `generate_domain_names` function creates a list of domain names with various patterns.

```python
import random
import string


def generate_random_string(length, use_digits=True):
    """
    Generates a random string of a given length, including letters and optionally digits.

    :param length: Length of the string
    :param use_digits: Whether to include digits in the string
    :return: Random string
    """
    characters = string.ascii_lowercase
    if use_digits:
        characters += string.digits
    return ''.join(random.choice(characters) for _ in range(length))


def generate_domain_names(count):
    """
    Generates a list of domain names with various patterns and TLDs.

    :param count: Number of domain names to generate
    :return: List of generated domain names
    """
    tlds = ['.com', '.ru', '.net', '.org', '.de', '.edu', '.gov', '.io', '.shop', '.co',
            '.nl', '.fr', '.space', '.online', '.top', '.info']

    def generate_domain_name():
        tld = random.choice(tlds)
        # Each pattern is a zero-argument callable that builds one domain label
        patterns = [
            lambda: generate_random_string(random.randint(5, 10), use_digits=False) + '-' +
                    generate_random_string(random.randint(5, 10), use_digits=False),
            lambda: generate_random_string(random.randint(8, 12), use_digits=False),
            lambda: generate_random_string(random.randint(5, 7), use_digits=False) + '-' +
                    generate_random_string(random.randint(2, 4), use_digits=True),
            lambda: generate_random_string(random.randint(4, 6), use_digits=False) +
                    generate_random_string(random.randint(3, 5), use_digits=False),
            lambda: generate_random_string(random.randint(3, 5), use_digits=False) + '-' +
                    generate_random_string(random.randint(3, 5), use_digits=False),
        ]
        domain_pattern = random.choice(patterns)
        return domain_pattern() + tld

    domain_list = [generate_domain_name() for _ in range(count)]
    return domain_list
```

The code loads three CSV files, drops the rank column from the top-1m list, and adds an `is_dga` column with a value of 0. It then generates 1 million DGA domain names, combines them with `part_df`, and shuffles the resulting DataFrame.

```python
import logging

import pandas as pd

logging.basicConfig(level=logging.INFO)

try:
    logging.info('Loading data')
    # The original column names '1' and 'google.com' show that the file has
    # no header row, so name the columns explicitly to keep the first domain.
    part_df = pd.read_csv('top-1m.csv', header=None, names=['rank', 'domain'])
    df_val = pd.read_csv('val_df.csv')
    df_test = pd.read_csv('test_df.csv')
    logging.info('Data successfully loaded.')
except Exception as e:
    logging.error(f'Error loading data: {e}')
    raise  # nothing below can run without the data

logging.info('Processing data')
part_df = part_df.drop('rank', axis=1)
part_df['is_dga'] = 0

generated_domains = generate_domain_names(1000000)
part_df_dga = pd.DataFrame({
    'domain': generated_domains,
    'is_dga': [1] * len(generated_domains)
})

df = pd.concat([part_df, part_df_dga], ignore_index=True)
df = df.sample(frac=1).reset_index(drop=True)
```

Exclude domains that also appear in the validation and test sets, then balance the classes by selecting 500,000 examples for each class. The final balanced set is shuffled and the indices are reset.

```python
# Exclude domains from validation and test sets
train_set = set(df.domain.tolist())
val_set = set(df_val.domain.tolist())
test_set = set(df_test.domain.tolist())
intersection_val = train_set.intersection(val_set)
intersection_test = train_set.intersection(test_set)

if intersection_val or intersection_test:
    df = df[~df['domain'].isin(intersection_val | intersection_test)]

# Balance classes to have the same number of examples
logging.info('Balancing classes')
df_train_0 = df[df['is_dga'] == 0]
df_train_1 = df[df['is_dga'] == 1]

num_samples_per_class = 500000
df_train_0_sampled = df_train_0.sample(n=num_samples_per_class, random_state=42)
df_train_1_sampled = df_train_1.sample(n=num_samples_per_class, random_state=42)

df_balanced = pd.concat([df_train_0_sampled, df_train_1_sampled])
df_train = df_balanced.sample(frac=1, random_state=42).reset_index(drop=True)
```

Create and train a model using a pipeline that includes vectorization with `TfidfVectorizer` and logistic regression. After training, the model is saved to the file `model_pipeline.pkl`.

```python
import joblib
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline

logging.info('Creating and training model')
# n_grams is the tokenizer function shown later in the article;
# it must be defined before this code runs.
model_pipeline = Pipeline([
    ("vectorizer", TfidfVectorizer(tokenizer=n_grams, token_pattern=None)),
    ("model", LogisticRegression(solver='saga', n_jobs=-1, random_state=12345))
])
model_pipeline.fit(df_train['domain'], df_train['is_dga'])

logging.info('Saving model')
joblib_file = "model_pipeline.pkl"
joblib.dump(model_pipeline, joblib_file)
logging.info(f'Model saved to {joblib_file}')
```

The whole task boils down to breaking domains into N-grams and vectorizing them with TF-IDF. An N-gram is a sequence of N elements (words or characters) in a text; here we take character N-grams of a single domain name to capture its syllable-like fragments. TF-IDF (Term Frequency-Inverse Document Frequency) is a method for assessing how important a term is in one document relative to the other documents in the collection.

Thus, by combining N-grams and TF-IDF, we can effectively analyze domains and identify their key characteristics. Consider two existing domains, texosmotr-auto.ru and pokerdomru.ru, split into 4-grams with the top-level domain (.ru) ignored:

For `texosmotr-auto.ru`: "texo", "exos", "xosm", "osmo", "smot", "motr", "otr-", "tr-a", "r-au", "-aut", "auto"

For `pokerdomru.ru`: "poke", "oker", "kerd", "erdo", "rdom", "domr", "omru"
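The 4-gram lists for these two domains can be reproduced with a short sliding-window helper. This is a minimal sketch; the name `char_ngrams` is introduced here for illustration only. A label of length L yields L - n + 1 n-grams, so the 14-character label gives 11 and the 10-character label gives 7.

```python
def char_ngrams(text, n):
    """Return all contiguous character n-grams of `text`, left to right."""
    return [text[i:i + n] for i in range(len(text) - n + 1)]


for domain in ("texosmotr-auto.ru", "pokerdomru.ru"):
    label = domain.rsplit(".", 1)[0]  # drop the top-level domain
    print(label, char_ngrams(label, 4))
```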

We considered 4-grams, but is it necessary to use a single fixed N for all domains? Of course not. For each domain, we create 3-grams, 4-grams, and 5-grams to capture linguistic patterns and structural features at several scales. This captures more context and raises the chance of finding distinctive characteristics that are useful for classification.

Code for creating 3-grams, 4-grams, and 5-grams for a domain:

```python
def n_grams(domain):
    """
    Generates n-grams for a domain name.

    :param domain: Domain name
    :return: List of n-grams
    """
    grams_list = []
    # Sizes of n-grams
    sizes = [3, 4, 5]
    # Keep only the part before the first dot (drops the TLD)
    domain = domain.split('.')[0]
    for size in sizes:
        # Slide a window of `size` characters over the label
        for i in range(len(domain) - size + 1):
            grams_list.append(domain[i:i + size])
    return grams_list
```

All obtained N-grams need to be vectorized, and the aforementioned TF-IDF method will help us with this. This approach allows us to assess the importance of each N-gram in the context of domains by transforming textual data into numerical form. Vectorization using TF-IDF takes into account the frequency of N-grams in each domain and their rarity in the overall set.
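To show what the weighting does, here is a minimal pure-Python sketch of the textbook TF-IDF formula applied to character 3-grams. The helper names `char_ngrams` and `tfidf` and the three sample labels are assumptions made for this example. Note that `TfidfVectorizer` by default uses a smoothed IDF and L2-normalizes each row, so its numbers will differ from this sketch, although the ranking idea is the same.

```python
import math
from collections import Counter


def char_ngrams(text, sizes=(3, 4, 5)):
    """Character n-grams of every requested size."""
    return [text[i:i + n] for n in sizes for i in range(len(text) - n + 1)]


def tfidf(documents):
    """Textbook TF-IDF: tf = count / len(doc), idf = ln(N / df).

    `documents` is a list of token lists; returns one {token: weight}
    dict per document.
    """
    n_docs = len(documents)
    # Document frequency: in how many documents each token occurs.
    doc_freq = Counter(token for doc in documents for token in set(doc))
    weights = []
    for doc in documents:
        counts = Counter(doc)
        weights.append({
            token: (count / len(doc)) * math.log(n_docs / doc_freq[token])
            for token, count in counts.items()
        })
    return weights


labels = ["pokerdom", "pokerstars", "xkqzvbnw"]
docs = [char_ngrams(label, sizes=(3,)) for label in labels]
weights = tfidf(docs)
# "pok" occurs in two of the three labels, "dom" in only one,
# so "dom" receives the higher weight within the first label.
print(weights[0]["pok"], weights[0]["dom"])
```

N-grams shared by many domains are down-weighted, while rare ones stand out; this is what lets a linear model separate natural-looking fragments from random ones.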

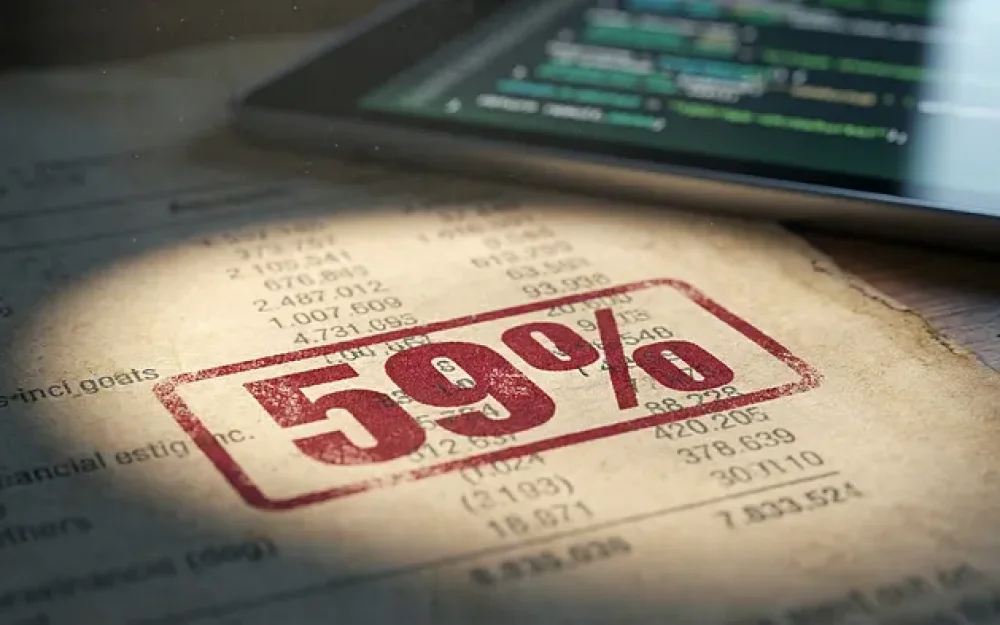

The final step is to train our model. You can try other algorithms to improve your metrics, but I chose classic logistic regression (LR) because it is simple to implement, easy to interpret, and often gives good results. For example, I obtained the following metrics on the validation data set:

True Positive (TP): 4605

False Positive (FP): 479

False Negative (FN): 413

True Negative (TN): 4503

Accuracy: 0.9108

Precision: 0.9058

Recall: 0.9177

F1 Score: 0.9117

Thus, understanding basic concepts such as N-grams and TF-IDF will open up opportunities for you to solve applied problems and allow you to confidently present yourself during internships. These skills will become a strong foundation for your professional growth in the field of machine learning and data analysis.

PS: The code submitted for review to the company that provided this test assignment is located here.
