- AI

- A

How we create Visionatrix: simplifying ComfyUI

We would like to share our experience in developing Visionatrix — an add-on to ComfyUI, which we are creating together, and what comes out of it

We have been reading tekkix for over ten years, but we never got around to writing an article. Finally, we decided to talk about our project, which may be of interest to the developer community.

We will immediately stipulate that the article will not explain what ComfyUI is and its features. It is assumed that you are already familiar with this tool.

If not, you can go to sections 2-3 of the article, where we talk about the development process in a short time, having very limited time (we work full-time, and Visionatrix is our hobby project).

What this article includes:

Description of ComfyUI problems and why we decided to create Visionatrix

How we approached development and what we did first

What came out in the end

We hope you find it interesting.

Here is a link to the project on GitHub

ComfyUI Problems

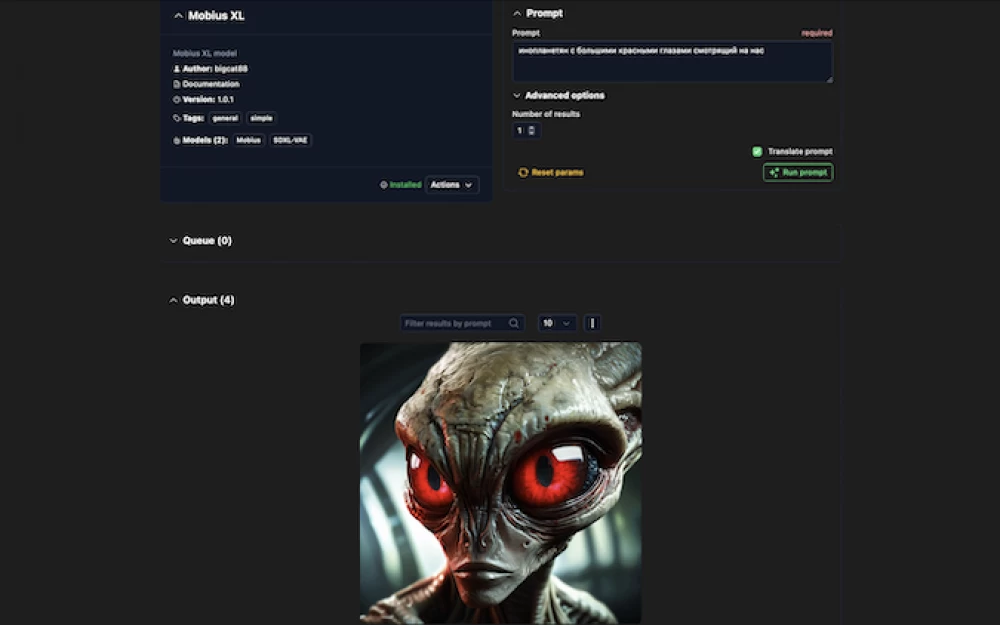

The ComfyUI interface is designed for creating workflows, not for use

An average person who is not a programmer finds it quite difficult to understand what KSampler is, how CFG parameters and latent noise values affect the result, and other nuances of diffusion.

We wanted to create something that an ordinary person familiar with a computer but not familiar with the intricacies of diffusion could use.

Someone might say that there is A1111, but we wanted an even simpler alternative, and for some reasons decided to base it on ComfyUI, as it has a large community of workflow developers.

We also didn't want the user to see many input blocks requiring detailed study when opening the program. Understanding that it is difficult for an ordinary user to understand complex settings and blocks, we decided to provide the ability to flexibly manage which parameters to display in the interface and which to hide.

Since the ComfyUI backend can only execute workflows in the API format, which does not save many metadata from the original workflow, we had to write our own small optional nodes. Our Python backend parses them and generates a dynamic UI based on them.

We also left the option not to use special nodes, but to specify all the information in the "names" of the nodes so that the backend could understand what to give to the UI. The format is approximately as follows:

input;display name;flag1;flag2;...

For example:

input;Photo of a person;optional;advanced;order=2

And based on this, the backend generates a list of input parameters for the workflow that the UI should display. Of course, you can connect any other UI; we understand that ours may not seem ideal to someone, and we try to make everything modular.

Our interface, based on the optional flags (meaning that the parameter for the workflow can be set or not set) and advanced, shows whether the parameter will be hidden by default or not, so that workflows do not overload the interface.

An ordinary user just wants to generate an image based on a prompt or input and does not want to adjust the number of steps in diffusion, right?

The parser was written fairly quickly and is quite simple; we tried not to complicate the project and not to add unnecessary things, resulting in the following options for input parameters:

input- the keyword by which the backend understands that this is an input parameterdisplay name- the name of the parameter in the UIoptional- whether the parameter is optional, for validation on the UI side that all required parameters are filled inadvanced- a flag determining whether to hide the parameter by defaultorder=1- the order of display in the UIcustom_id=custom_name- the custom id of the input param, by which it will be passed to the backend

Results of workflows in ComfyUI

Here we encountered another problem: most nodes have outputs, but they do not need to be displayed, as they are more often used for debugging the workflow process.

Example: the workflow internally refers to VLM to get a description of the input image, then you do something with this text in the workflow and use the "ShowText" node to display the result.

We have not completely solved this problem, but it is on the TODO list for the near future.

To solve the problem of displaying unnecessary output data and make the interface more user-friendly, we decided that the results of the SaveImage class, as well as VHS_VideoCombine for video support, will always be displayed in the UI. This allows the user to focus only on key results and reduces information overload.

When there is time, we plan to add a debug flag for workflows. If it is passed at the time of task creation, we will collect all outputs and display them in the UI as a separate dropdown list — this will be useful for debugging.

At the moment, we have simply added the ability to download the generated ComfyUI workflow from the UI, it can always be loaded into ComfyUI and see what is not working.

Generation history and support for multiple users

ComfyUI does not properly support generation history and working with multiple users, and this was very important for us.

It is convenient when there is one powerful computer in the family, you run the software on it, and each family member uses their account without interfering with each other.

Workflow installation

There are big problems with this in ComfyUI. Most often you see an excellent workflow on openart.ai, but even after spending 30 minutes installing everything you need, you cannot run it, because you need to install 20 nodes and get 5 new models from somewhere.

In addition, today the workflow installation may work, and tomorrow it may stop, because, for example, the model used in it was deleted.

We partially solved this problem by creating a temporary catalog of models in JSON format and wrote GitHub CI to check the availability of models (this often happens).

It looks like soon the ComfyUI frontend will support specifying hashes in nodes for loading models, and then we will get rid of our catalog, as we will be able to find the model on CivitAI by hash and write a parser for HuggingFace by name and hash comparison.

Also, a new version of ComfyUI with support for ComfyUI registry for nodes will be released soon. However, installing nodes will not become much easier and will not solve the problem of Python packages with incompatible dependencies, but overall the situation will improve slightly.

At Visionatrix, we created a "small workflow store" using GitHub Pages and branch versioning. Workflows also have a version and extended metadata.

It turned out to be simple and effective: no need to rent anything, everything is stored in the repository and assembled on GitHub CI.

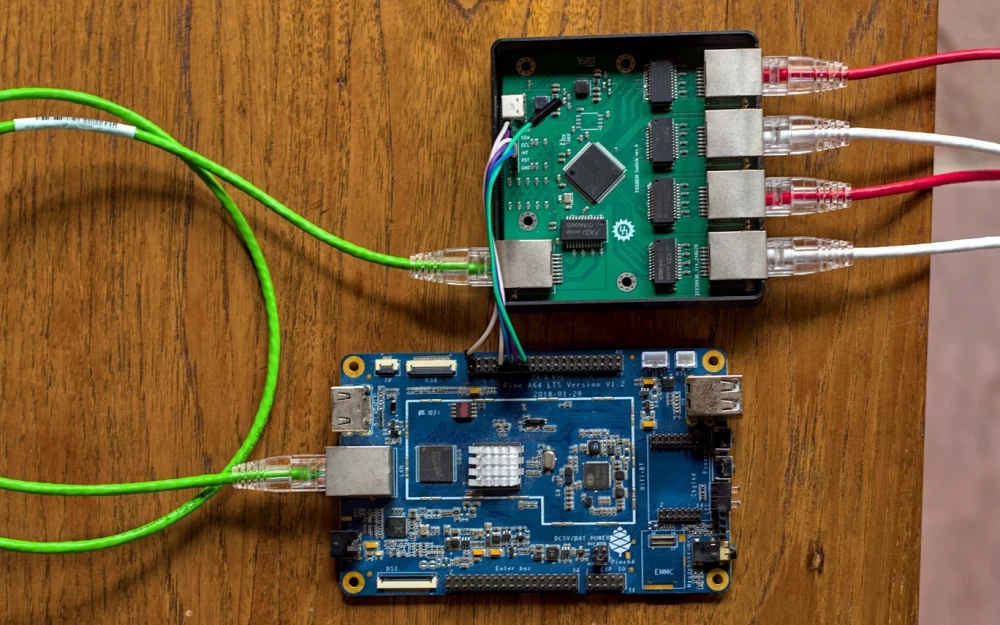

Ease of installation and scaling

Our main requirement was that everything should be easy to install and use.

It should also be easy to scale the solution.

Therefore, it was decided to make everything comprehensive: one-click installation for home use uses SQLite, but PostgreSQL is supported for scaling if necessary.

One installation can work in standard mode, including both the server part and the part executing workflows (in ComfyUI these parts are inseparable). This can also be easily changed by connecting one instance to another, where the connected one becomes an external task executor working in worker mode.

We may tell you later how we implemented all this if anyone is interested. Perhaps it will be enough for you that in our free time we write and "generate" a semblance of documentation: https://visionatrix.github.io/VixFlowsDocs/

Why semblance? Because the project is developing rapidly, ComfyUI is developing in parallel, and ComfyUI developers do not particularly inform about future changes, so you need to be prepared for sudden unexpected changes.

We also see how quickly and dynamically the field of generative AI is developing in general, and if you are limited in time, it is better to focus on those parts that will not change in the near future.

How we approached development and what we did first

Using LLM to assist in development

I will immediately clarify: the author of this article has been programming for more than 10 years, started programming at school in assembler and Delphi, and then wrote in C/C++ for a long time.

The idea of Visionatrix was born at the beginning of 2024, when ChatGPT-4 was already available.

My colleague and I both work full-time in the field of open-source and AI integrations, without touching diffusion and ComfyUI. After an 8-hour workday, there is little strength left, and we manage to allocate 2-4 hours a day for the hobby project. Sometimes we manage to find some time on weekends, but it is still not enough for large projects.

Given the limited time and desire to speed up development, we decided to use generative AI to write backend code. The main goal was for ChatGPT/Claude to work effectively with the most popular Python libraries, such as httpx, FastAPI, Pydantic, and SQLAlchemy.

It was also important for the project to support both synchronous and asynchronous modes. Those familiar with Python understand that this creates serious difficulties, as the need to support both modes significantly increases the complexity and volume of code.

Why is synchrony needed in addition to asynchrony? Because in the future, with the popularization of Python version 3.13+, synchronous projects may get a second life; after all, true multithreading is multithreading.

One person wrote the backend part, and the other mainly worked on the UI and testing. AI was mainly used for the backend so far.

The entire project was divided into files that are convenient to "feed" to the AI, namely:

Database:

database.pydb_queries.pydb_queries_async.py

Pydantic models were moved to a separate file pydantic_models.py — ChatGPT loves to receive them for context.

All the logic for working with the database, relationships between tasks, child tasks (yes, we did that too; it's convenient when the result of a task created from the current one can be seen without leaving the current workflow page), and everything else was also moved to separate files:

tasks_engine.pytasks_engine_async.py

This allowed us not to write functions for working with the database ourselves; we only needed to write the first 4-5 functions, and then, when requesting functionality expansion, write something like:

"Please, based on the files database.py, pydantic_models.py, db_queries.py, tasks_engine.py, add the ability for tasks to have priority, add or expand existing synchronous functions for this. Keep the writing style as in the original files.

pydantic_models.py:

file content

db_queries.py:

file content"

And then a separate request, but without pydantic_models.py and database.py, just drop the files tasks_engine_async.py and db_queries_async.py into the same chat and write: "now do the same, but for asynchronous implementations".

Although with ChatGPT-4, a lot had to be manually corrected, starting from the beginning of summer it became quite smarter and began to produce much higher quality code, requiring fewer corrections.

This autumn, with this approach, it allowed, spending much less effort, to expand new functionality, adding different things, even after work, tired, lying on the couch, as you just ask for what you need, and then, if necessary, ask to redo it.

Now, when o1-preview is available, the situation has simultaneously become both better and worse. How so?

Requests for o1-preview are much more difficult to compose, but with a good prompt, it produces much better code than ChatGPT-4o — but the results are much harder to evaluate, as the code generated by o1-preview is more complex, resembling code quickly written by a human and then immediately rewritten with optimizations.

But overall, the approach remained the same: clear separation by functionality files, to make it as convenient as possible to provide the code to AI and then evaluate it.

Also, LLM loves it when the code is maximally typed, and this allows errors to be caught faster using ruff or pylint utilities.

After all, pre-commit in Python is what we love about this language, when many errors can be caught without even running the code.

Using LLM for writing tests

Initially, we were not going to write tests at all, as this is a hobby project and there was no time for them.

But then the situation changed a bit, as, firstly, we wanted to measure performance between PyTorch versions, ComfyUI itself, the parameters with which all this can be run, and also test it all on different hardware.

Initially, we did this manually a couple of times, but after a few attempts, it became clear that this could not continue.

This was already this fall, during the times of ChatGPT-4o and o1-preview.

We had files with backend sources, openapi.json, access to LLM, and an incredible desire to have tests...

We gave all this to the LLM, and after explaining the situation, we got what we expected — almost working benchmarking code on the first try.

For those interested, almost the entire file was written by ChatGPT:

https://github.com/Visionatrix/VixFlowsDocs/blob/main/scripts/benchmarks/benchmark.py

What interesting things were discovered in the process of writing?

It became clear that we needed more than 5 requests to the LLM to generate working code.

Maybe it's due to the shortcomings of the LLM? Perhaps, but maybe the reason is that the backend documentation (openapi.json) and the backend algorithms themselves are not good enough.

The LLM can be imagined as a developer who entered the repository and tried to use the project's API. He doesn't succeed on the first, second, or third attempt, and he moves on to find software that is easier to integrate with.

And this is an indicator of how easy it will be for other developers to use your product.

Although the tests were eventually written correctly with the help of AI, we rented a couple of instances with different video cards like 4090, A100, H100 and ran them in draft. To write them, we had to explain to the LLM that to create a task from the ComfyUI workflow, you need to pass all the arguments and all the files sequentially as parameters in the form, which endpoint to call to get the task status, how to get the results.

This all turned out to be quite non-obvious the first time for LLM (and, apparently, will be non-obvious for a human as well) and requires further improvements and refinements.

The main thing that was found out: how well the project and its OpenAPI documentation are structured can be checked using LLM by asking it to write a script for tests or just a UI on Gradio.

The fewer questions you have to answer for AI, the better you did, and this will also reduce the time in the future for project support, as you will be able to outsource more tasks to juniors AI and speed up the development process, freeing yourself from routine.

What was the result

We created a solution that is easily installed on both Linux and macOS, and also works on Windows.

Uses a database to save the history of tasks that the user creates from workflows.

Supports working with SQLite and PostgreSQL databases.

Supports connecting one instance to another instance, installed both locally and remotely.

Since all this is done on ComfyUI, most of the ComfyUI features are supported.

Implemented prompt translation support using Gemini and Ollama.

A small benchmarking script has been written, which is also used for tests.

A small script for GitHub has been written, which checks if everything is in order with the models used in the workflows.

What is missing in the project?

Prompt expansion: for example, the drawing model does not know who Geralt of Rivia is; the LLM should transform and simplify the prompt to get a better result.

A more convenient API for creating tasks, as well as OpenAPI specifications for each flow — this will make it much easier to write integrations for the project, as well as create demos based on Gradio with a single request to the LLM.

Automatic generation of documentation and examples for workflows, using the same LLM.

Development of a universal input method in chat format, when based on some result you ask to redo what you do not like, possibly with words, possibly by selecting objects, after which the LLM itself should choose which workflows to use to achieve the result (maximum ease of use, as much as possible when you initially do not know what you want).

Conclusion

The development of Visionatrix has been an interesting experience for us in creating an add-on over ComfyUI, aimed at simplifying use and expanding capabilities for ordinary users. We continue to actively develop the project and will be happy if it interests the community.

If you have ideas, suggestions, or want to contribute, we welcome your participation. The project is open to contributors, and any help is welcome. Also, if you liked the project, don't forget to give it a ⭐️ on GitHub — we will be very pleased.

If you are interested in any of the topics discussed in the article, let us know in the comments.

Write comment