Neural networks, scammers, and "mammoths": how artificial intelligence is changing cyber fraud

Remember how, as children, we were taught not to talk to strangers? In 2024 this advice has taken on a new meaning: now you cannot even be sure that you are talking to someone you know rather than to their neural-network double.

According to The Wall Street Journal, the number of deepfake scams in fintech grew by 700% in 2023 compared with 2022. Fraudsters are also making greater use of chatbots, which extends their reach.

We invited Alexey Malakhov, editor of the "Financial Security" section at T—J and author and host of the "Scheme" podcast, to discuss how AI is changing the cybercriminal's arsenal.

The expert talks about new fraud schemes, large-scale neural-network scams, and a future in the spirit of Cyberpunk 2077. We also discuss the outlook for identity verification technologies in a world where "trust but verify" has turned into "don't trust, and double-check."

Alexey Malakhov

Knows everything about how not to become a "mammoth" (Russian scammer slang for a victim)

I first encountered neural networks in 2013, at university. In the lab, my classmates and I played with a simple network that we trained to recognize handwritten digits. At the time I thought it was a fun toy that would hardly find any serious use.

Ten years later, my colleagues from the "Scheme" podcast and I experimented with making a neural-network copy of my voice. We had a whole finished season of the podcast, which meant hours of studio recordings to train the model on. After eight hours of fine-tuning, the network started speaking Russian, albeit with a strong English accent and creepy machine intonation.

"Well, that settles it: nobody will be fooled by this anytime soon," I thought, and I was wrong again. Six months on, neural networks are already successfully stealing identities and scamming people, and I devote entire podcast episodes to such schemes.

How fraudsters use neural networks

I'll start with the key question: have new types of scams emerged along with neural networks? Probably not, but neural networks have upgraded the old ones. They have made it possible:

to automate fraud;

to increase the scale, and with it the profitability, of old schemes that used to be unprofitable;

to increase the realism and persuasiveness of deception.

Chatbots handle the automation and scaling. The simplest way to use them for fraud is to have a large language model write "Nigerian prince" letters: give the model some context, think through the prompt, and in a moment you have a personalized letter for hooking a trusting "client."

Such tricks are just the beginning, though. While less experienced scammers churn out ineffective "chain letters," technically savvy fraudsters deploy bots that do almost all the dirty work for them.

Automation of deception using chatbots

Chatbots fit into many schemes. For example, the victim receives an SMS containing the password to an account on a crypto exchange. It looks as if someone simply entered the wrong phone number when registering. The victim follows the link, enters the password, and sees a tidy sum in the account. Delighted by this "luck," he asks support to withdraw the money. The support chatbot replies that he first needs to send some ether to verify the wallet. Of course, the exchange account turns out to be fake, and the crypto goes straight to the scammers' wallet.

Another common scenario involves fake discount groups.

Potential victims receive messages like "Get 5,000 rubles to spend at Wildberries for subscribing to us on WhatsApp." The person joins the group, and then a bot asks for their phone number and a "confirmation code to receive the certificate." Chasing the discount, the victim does not even notice that they are actually entering a two-factor authentication code, and as a result their WhatsApp account is hijacked.

Bots are also used to automate romance and dating scams. A classic example: a pretty girl on a dating site tells a guy in chat that she lives somewhere in London and knows a banker who is ready to invest in cryptocurrency, which will supposedly double your money in a week.

Or, even simpler: a beauty invites you on a date, sends a link to a cinema website, and asks you to buy a ticket for the seat next to hers. But there is no ticket, and no cinema either. If the victim complains to the fake support service, he is asked to pay again and promised a refund later.

Such conversations used to be run by people; today chatbots increasingly handle them, which greatly widens the scam "sales funnel." A flesh-and-blood scammer can chat with a couple of potential victims at once, while a bot can chat with thousands.

Automation does cost scammers some credibility, though. Rigidly scripted bots get lost if you stray far from their scenario, and LLM agents can be thrown off by the simplest prompt engineering.

Fraud with deepfakes

Another new and extremely dangerous tool in the cybercriminal arsenal is the deepfake. The victims of deepfake schemes include not only individuals but entire corporations.

Mass deception

Some of the most high-profile cases involve copies of celebrities. Public figures constantly appear in the news, on podcasts, and on shows, so scammers have plenty of voice recordings and videos from which to recreate someone else's likeness.

In 2023, for example, my colleagues and I prepared a podcast episode about how the identity of the well-known entrepreneur Dmitry Matskevich was stolen.

One morning he was flooded with questions by email and in messengers: people wanted to know whether they should invest in his new project. Astonished, Dmitry could not understand what was going on. Then someone sent him a link to a lengthy video in which he "personally" presented a smart algorithm that supposedly returned 360% a year. The scammers had taken a recording of a real talk by Dmitry, made a deepfake from it, and used it to lure people willing to invest their hard-earned money. It all looked pretty cringeworthy, yet many fell for it.

Such fakes are getting harder and harder to spot. Recently I was in Kazakhstan and saw with my own eyes a fake video of the country's president. In it, the head of state announced that every citizen was entitled to a share of the gas revenues. The link under the video led to a fake site where you had to pay a "tax" to receive the payout. The scheme itself is as old as the hills, but the president's voice and appearance were copied very convincingly.

Not long ago, a livestream of a conference supposedly featuring Elon Musk even reached the top of YouTube. Strolling casually across the stage, he talked about launching a new cryptocurrency project. Viewers were invited to scan a QR code, go to a website, and send bitcoin; the neural-network Musk promised to send back double the amount, plus a gift. YouTube's moderators were too slow: by the time the video was taken down, it had spent several hours at the top, and the scammers' wallet had collected a considerable sum.

Classic scam with a pinch of deepfake

Individuals are usually attacked with deepfakes of relatives, friends, and acquaintances. Scams like "urgently lend me some money until tomorrow, just send it to this card, mine is blocked" have been around since the days of ICQ. You would think nobody falls for a 30-year-old trick anymore, but when the victim gets a voice message or even a video from someone they know, anxiously asking for help, their hand reaches for the wallet.

In our newsroom we like to show one example: a girl recorded herself on camera reading Tatiana's letter to Onegin, and scammers re-dubbed the clip so that she appeared to be asking for 50,000 rubles to be sent to a card number.

In another popular scheme, a fake friend sends a video or voice message asking you to vote for him in a contest, along with a link to a phishing site. There, the victim is asked to log in via a messenger, which of course means entering a login and password or a confirmation code. Voilà! The scammer now has access to the victim's chats.

Attacks on companies

By deceiving a single employee, scammers can do serious harm to an entire company. In one case, an employee of a large firm was invited to a video call with several dozen deepfakes of his colleagues. Most of them were there just to fill the room and stayed silent, but some joined the conversation and discussed work matters. At some point, the deepfake director casually told the employee to transfer 25 million dollars to an account at a Hong Kong bank, and he did.

The battle of shield and sword, or how not to become a "mammoth"

To counter such attacks, companies need to update their organizational culture. It is important to move away from "management by phone call" and to build processes in which staff do not just silently carry out orders but can verify that they are genuine and, most importantly, are neither too shy nor too lazy to do so.
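What "verifying that an order is genuine" can mean in practice is sketched below in Python, as an illustration only: it assumes a company keeps a contact directory that is maintained independently of any incoming request, requires a callback to the number in that directory (never to contact details supplied during the call), and demands a second approver for large transfers. The directory, threshold, and function names are all hypothetical, not a standard or any particular company's process.

```python
from dataclasses import dataclass
from typing import Optional, Set

# Hypothetical contact directory, maintained independently of any incoming
# request (e.g. by HR or IT). Names and numbers are illustrative only.
TRUSTED_DIRECTORY = {
    "director": "+1-555-0100",
    "cfo": "+1-555-0101",
}

# Hypothetical amount at or above which a second approver is required.
DUAL_APPROVAL_THRESHOLD = 10_000


@dataclass
class PaymentRequest:
    requester: str  # who the request claims to come from, e.g. "director"
    amount: float


def can_execute(request: PaymentRequest,
                confirmed_via: Optional[str],
                approvers: Set[str]) -> bool:
    """Allow a transfer only if it passes out-of-band verification."""
    trusted_number = TRUSTED_DIRECTORY.get(request.requester)
    if trusted_number is None:
        return False  # unknown requester: refuse
    # The confirming call must go to the number from the directory, never to
    # contact details supplied during the call or in the message itself.
    if confirmed_via != trusted_number:
        return False
    # Large transfers also need at least one approver besides the requester.
    if request.amount >= DUAL_APPROVAL_THRESHOLD and not (approvers - {request.requester}):
        return False
    return True
```

Under these assumptions, a "director" on a video call asking for 25 million dollars is blocked unless the employee calls the director back on the directory number and another manager signs off; a convincing face on screen is never enough on its own.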

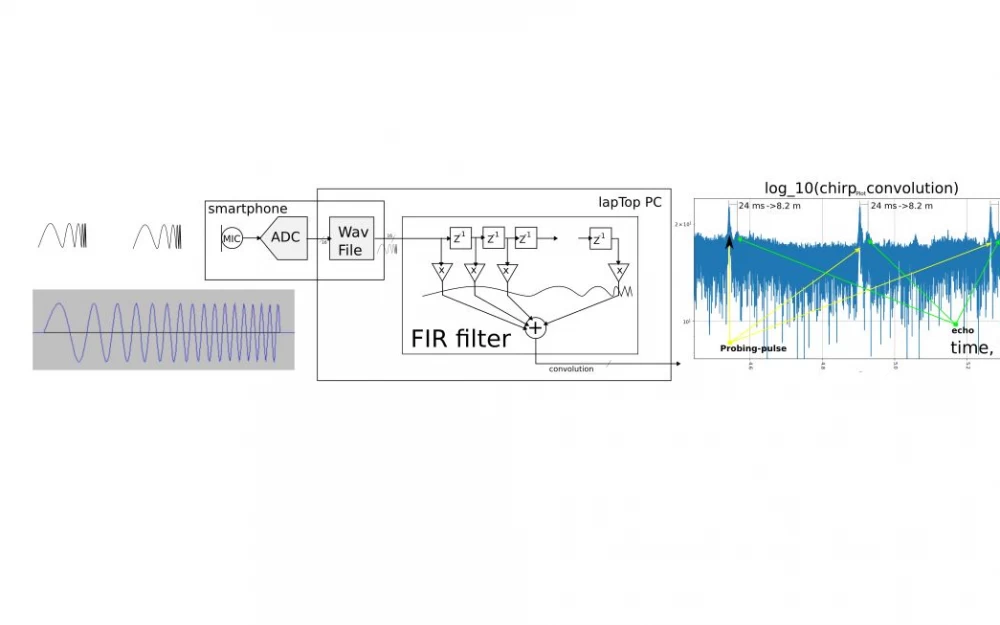

There are technological solutions as well, and banks currently lead the way. To combat deepfakes, financial institutions use Liveness technology, which checks whether a voice and an image come from a live person.

The algorithms pick up what the human ear cannot: unnatural sine and cosine components and repeating sound patterns. For video, they check how a person moves, smiles, and expresses emotion, look for suspicious artifacts, glare, and blur, and evaluate thousands of other parameters. So far, even the most realistic deepfakes fail to fool banking systems.
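Banks do not publish how their liveness checks work, so the following is only a toy illustration of one idea mentioned above: spotting a sound pattern that repeats too exactly, as a looped or spliced fragment would. It computes normalized autocorrelation over a range of lags in plain Python; the function name and lag range are hypothetical, and real systems combine many learned features rather than relying on a single statistic like this.

```python
import math
import random


def max_normalized_autocorrelation(signal, min_lag, max_lag):
    """Return the highest normalized autocorrelation over lags min_lag..max_lag.

    A value near 1.0 means some stretch of the signal repeats almost exactly
    after that lag; natural speech rarely repeats so precisely.
    min_lag must be at least 1.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the constant (DC) offset
    best = 0.0
    for lag in range(min_lag, min(max_lag, n - 1) + 1):
        a = x[:-lag]  # the signal
        b = x[lag:]   # the same signal shifted by `lag` samples
        num = sum(p * q for p, q in zip(a, b))
        den = math.sqrt(sum(p * p for p in a) * sum(q * q for q in b))
        if den > 0:
            best = max(best, num / den)
    return best


if __name__ == "__main__":
    rng = random.Random(0)
    chunk = [rng.gauss(0, 1) for _ in range(200)]
    looped = chunk * 5                                 # one fragment repeated five times
    noise = [rng.gauss(0, 1) for _ in range(1000)]     # no exact repetition
    print(max_normalized_autocorrelation(looped, 50, 400))
    print(max_normalized_autocorrelation(noise, 50, 400))
```

The looped signal scores close to 1.0 at a lag of 200 samples, while random noise stays near zero. Real speech sits in between, which is exactly why production systems cannot rely on such a simple threshold.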

Unfortunately, such tools are not available to every organization, and that is unlikely to change in the foreseeable future. Meanwhile, fraudsters will certainly keep honing their skills on companies and ordinary people alike. Ordinary people are likely to become the main casualties of this eternal struggle between the "shield and the sword," and they can rely only on their own vigilance.

I talk a lot with people who are far removed from information security and simply go about their lives. When I tell them about neural networks, they say, "Wow, cool!" Most understand that someone's face can be stolen, but they think such risks do not concern them, as if fraudsters were only interested in celebrities and nobody would bother faking "ordinary mortals." Misconceptions like that can be very costly.

I think we will all have to become a little paranoid in the near future. The standard precautions (never telling anyone your card number, passwords, and so on) are no longer enough. In a world of neural-network deepfakes, the golden rule of spy movies applies: trust no one.

So it is worth agreeing on a code phrase with your loved ones in case of emergencies. When you hear, say, "code: pink monkey" (don't ask), you will know the message is genuine. Alternatively, get in touch through a different channel, especially if the voice message or video insists that you "under no circumstances call."

And remember: neural networks still make mistakes, so watch and listen to videos carefully. If a person speaks too monotonously, rarely blinks, shows no emotion or, on the contrary, overacts, that is a reason to be wary. A blurry, pixelated, or tightly cropped picture, or differences in skin tone between the face, neck, and hands, can also give a deepfake away.

A Cyberpunk-style future

But all these spy games only postpone the inevitable. Bots already generate the lion's share of internet traffic, and now they have taken up content too. If we do not find a fast and reliable way to tell neural networks from humans, we may well end up in a Cyberpunk 2077-style reality where the internet has become unusable. The difference is that there it was taken over by rogue AIs, while here it will be flooded by LLMs. You go online, and there is not a single human, only fake news and a whole horde of bots trying to deceive you.

I know many people on tekkix dislike this topic. Merely mentioning passport-based internet access or blogger registration can get you pelted with tomatoes, and not without reason. In theory, states have the resources to monitor the authenticity of content and accounts centrally, but quis custodiet ipsos custodes (who will guard the guards themselves)?

Intervention by "Big Brother" will meet strong resistance from many users. Roskomnadzor (RKN), the Russian media regulator, now requires everyone with more than 10,000 subscribers to register in its system. But people do not see this as a way to verify authors and protect subscribers; given the regulator's reputation, they see it as a tool for control and fines. To be honest, I am not sure myself that anyone at RKN plans to use this registry to protect against fakes.

Meanwhile, fake profiles and neural-network doubles of celebrities and bloggers are already being created en masse. These accounts post photos for months, sell advertising, and promote various scams, and then it turns out that everything in the profile was generated.

The good news is that solving this problem does not necessarily require internet regulators. For example, content generated by neural networks could be labeled: OpenAI worked out how to do this in practice long ago, but is in no hurry to roll it out. Then again, even if every commercial neural network started labeling its output, nothing would stop scammers from training their own models to work without labels, and all the companies' efforts would be wasted.
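OpenAI has not published its labeling method, so here is a swapped-in, simplified version of a publicly described technique instead: the "green list" text watermark proposed by Kirchenbauer et al. (2023). The generating model secretly favors tokens that a keyed hash of the previous token marks as "green," and the detector counts green tokens and computes a z-score. This sketch assumes a per-token hash test rather than the paper's vocabulary partition, and `toy_generate`, the key, and `gamma` are hypothetical stand-ins for a real model and its parameters.

```python
import hashlib
import math
import random


def is_green(prev_token, token, key, gamma=0.5):
    """Pseudo-randomly mark `token` as green, seeded by the previous token and a secret key."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    # Map the first 8 bytes of the hash to [0, 1); about a fraction gamma of pairs is green.
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma


def watermark_z_score(tokens, key, gamma=0.5):
    """How many standard deviations the green-token count lies above what unlabeled text would give."""
    t = len(tokens) - 1  # number of scored (previous, current) token pairs
    if t <= 0:
        return 0.0
    green = sum(is_green(p, c, key, gamma) for p, c in zip(tokens, tokens[1:]))
    return (green - gamma * t) / math.sqrt(t * gamma * (1 - gamma))


def toy_generate(vocab, length, key, rng, watermark=True, gamma=0.5):
    """Stand-in for a language model: picks each word from 8 random candidates,
    preferring green ones when watermarking is on."""
    tokens = [rng.choice(vocab)]
    for _ in range(length):
        candidates = rng.sample(vocab, 8)
        if watermark:
            green = [w for w in candidates if is_green(tokens[-1], w, key, gamma)]
            candidates = green or candidates  # fall back if no candidate is green
        tokens.append(rng.choice(candidates))
    return tokens
```

Text produced with the watermark on scores far above zero when checked with the right key, while text from a "model" that skips the watermark scores like ordinary text. That is exactly the author's point: the detector only catches output from models that cooperate.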

If labeling does not work, companies with a direct stake in content authenticity may take on the job of catching fakes. The Content Authenticity Initiative, a coalition founded by Adobe, has already made some progress here, but it is too early to judge how effective such alliances really are.

Whether to hand control over online fakes to corporations is an open question, but that scenario looks at least no worse than one where you can only get online through Gosuslugi, the Russian government services portal. I do not rule out that there is a more sensible way out, one that does not force us to sacrifice something and choose between two evils.

What do you think? Let's discuss in the comments what the best solution to this problem might be.
