
6 internal factors affecting the efficiency of facial recognition from video cameras

Hello everyone! In the previous article, I described how external factors affect the speed and accuracy of facial recognition on video streams. Today we will look at the equally important internal factors: the system architecture and the correct choice and configuration of equipment.

First, a little about how the tests were conducted:

The tests ran from November 2023 to July 2024 in three cities (St. Petersburg, Moscow, and Chelyabinsk), which let us take different climatic conditions and seasonal changes into account.

We used cameras with different resolutions and viewing angles, and tested different installation heights (2-4 m) and installation locations (e.g., traffic light poles and public transport stops).

In total, out of roughly 5,500 faces of passers-by, 1,056 identification attempts were made against a database of 528,000 faces.

(More details about the test conditions).

And now to the results:

Internal factors:

1. Network bandwidth

Degree of influence — low (4 losses in 1056 attempts).

The existing urban infrastructure may not be able to carry the main video streams (the ones with the best quality and resolution) from a large number of cameras to the data center. The result is dropped frames and short video freezes. In some cases we lost not just single frames but a person's entire pass through the camera's field of view.

2. Equipment stability

Degree of influence — significant (11 losses in 1056 attempts).

On its way from the camera to the video analytics servers, the stream passes through a chain of devices: the camera itself, the PoE switch, the switches on the route to the data center, the video recording server, the video analytics server, the face vector storage server, and the identification results collection server. Any of this hardware can fail at the very moment when the person we are looking for is in the camera's field of view and looking at it.

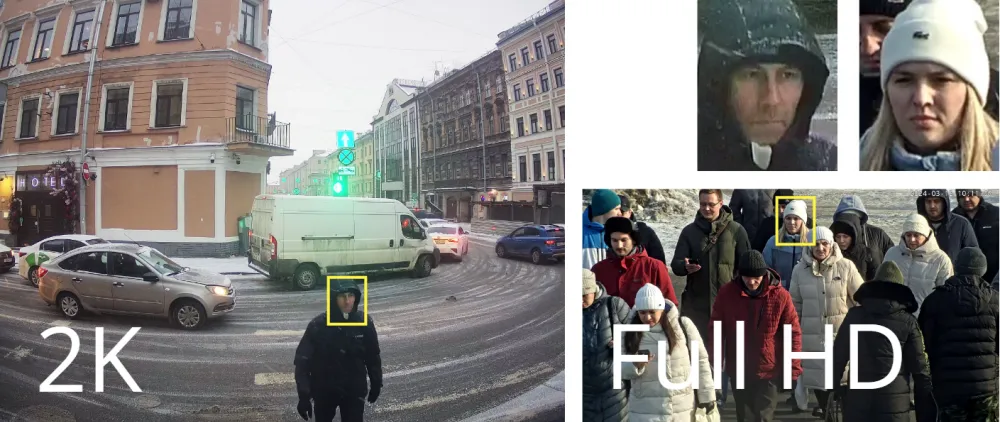
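To see why a long device chain matters, here is a minimal sketch of the end-to-end availability of devices connected in series. It assumes that failures are independent and that every device has the same availability of 0.999; both are illustrative assumptions, not values measured in the tests.

```python
from math import prod

# Hypothetical per-device availability (fraction of time the device works).
# Illustrative values only, not measurements from the tests.
CHAIN = {
    "camera": 0.999,
    "PoE switch": 0.999,
    "switches to the data center": 0.999,
    "video recording server": 0.999,
    "video analytics server": 0.999,
    "face vector storage server": 0.999,
    "results collection server": 0.999,
}


def chain_availability(availabilities):
    """Availability of a series chain: every device must work at the same time.

    Assumes device failures are independent of each other.
    """
    return prod(availabilities)


total = chain_availability(CHAIN.values())
print(f"End-to-end availability: {total:.4f}")
```

Under these assumptions the whole chain is available noticeably less often than any single device, so every extra hop between the camera and the analytics server adds to the risk of missing a face.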

3. Camera image resolution

Degree of influence — significant (22 losses in 1056 attempts).

The higher the camera resolution, the greater the temptation to cover the largest possible area with it. As a result, faces become smaller relative to the frame, distortion appears toward the edges of the frame, and overall face quality drops. Higher resolution also raises the cost of the camera itself and adds infrastructure costs for delivering the stream to the data center and for disk storage.

4. Camera sensor (matrix) quality

Degree of influence — high (27 losses in 1056 attempts).

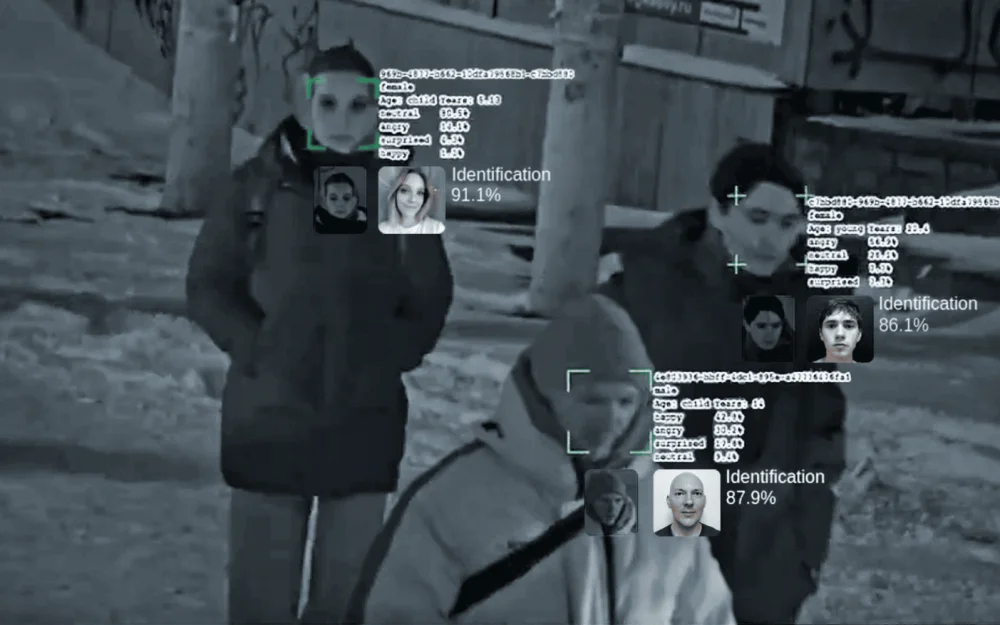

While a person moves through the camera's field of view, the system tracks their face and looks for the best image based on tilt and turn angles, blur, distance between the eyes, lighting, and so on. It analyzes 15 to 30 frames per second over several seconds. Budget cameras produce low-quality images from the sensor (matrix), with noise and interference. Quality assessment algorithms may discard such images, and a more suitable frame may never appear.

Moreover, the sensors of cheaper cameras may fail earlier by "burning out" in the sun, which adds further noise and blur to the image and makes the camera useless for face recognition.
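The best-frame selection described above can be sketched roughly as follows. This is not the scoring used in our system: the `FaceFrame` fields, the hard limits, and the equal weighting are hypothetical, chosen only to show how noisy frames get rejected and how a track can end up with no usable frame at all.

```python
from dataclasses import dataclass


@dataclass
class FaceFrame:
    yaw_deg: float          # head turn left/right
    pitch_deg: float        # head tilt up/down
    blur: float             # 0.0 = sharp, 1.0 = fully blurred (includes noise)
    eye_distance_px: float  # distance between the eyes in pixels
    brightness: float       # 0.0 = black, 1.0 = overexposed


# Hypothetical hard limits; a real system would tune these.
MAX_ANGLE_DEG = 30.0
MAX_BLUR = 0.5
MIN_EYE_DISTANCE_PX = 40.0


def quality(frame):
    """Return a score in [0, 1], or None if the frame fails a hard limit."""
    worst_angle = max(abs(frame.yaw_deg), abs(frame.pitch_deg))
    if worst_angle > MAX_ANGLE_DEG:
        return None
    if frame.blur > MAX_BLUR or frame.eye_distance_px < MIN_EYE_DISTANCE_PX:
        return None
    angle_score = 1.0 - worst_angle / MAX_ANGLE_DEG
    sharpness_score = 1.0 - frame.blur
    size_score = min(frame.eye_distance_px / (2 * MIN_EYE_DISTANCE_PX), 1.0)
    light_score = 1.0 - abs(frame.brightness - 0.5) * 2
    return (angle_score + sharpness_score + size_score + light_score) / 4


def best_frame(track):
    """Pick the highest-scoring frame of a track, or None if all were rejected."""
    scored = [(quality(f), f) for f in track]
    usable = [(q, f) for q, f in scored if q is not None]
    if not usable:
        return None
    return max(usable, key=lambda pair: pair[0])[1]
```

With a noisy sensor, every frame of a track can exceed the blur limit, so `best_frame` returns `None` and the passer-by is never submitted for identification.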

5. Video analytics server performance (overload >80%)

Degree of influence — high.

The higher the stream resolution, the more people in the frame, and the more streams being processed, the higher the load on the servers. To avoid crashing at peak load, the system does not stop working but starts discarding some of the frames submitted for analysis. This keeps it operational, but frames with good face angles may simply be excluded from the analysis. The table below shows that, past a certain point, adding video streams to a server lowers the processing rate from 25 to 17 FPS (frames per second), and the total number of identifications falls from 235 to 196.
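The frame-discarding behavior can be illustrated with a coarse simulation. It is a sketch only: the `FrameBuffer` class, the buffer capacity, and the drop-oldest policy are assumptions, and the 25 and 17 FPS figures are borrowed from the paragraph above simply as an example of input rate versus processing capacity.

```python
from collections import deque


class FrameBuffer:
    """Bounded buffer that drops the oldest frame when full (load shedding)."""

    def __init__(self, capacity):
        self.frames = deque()
        self.capacity = capacity
        self.dropped = 0

    def push(self, frame):
        if len(self.frames) >= self.capacity:
            self.frames.popleft()  # discard the oldest frame instead of crashing
            self.dropped += 1
        self.frames.append(frame)

    def pop(self):
        return self.frames.popleft() if self.frames else None


def simulate(incoming_fps, processing_fps, seconds, capacity=50):
    """Second-by-second simulation: frames arrive, then the server processes."""
    buffer = FrameBuffer(capacity)
    processed = 0
    for second in range(seconds):
        for index in range(incoming_fps):
            buffer.push((second, index))
        for _ in range(processing_fps):
            if buffer.pop() is not None:
                processed += 1
    return processed, buffer.dropped


processed, dropped = simulate(incoming_fps=25, processing_fps=17, seconds=10)
print(f"processed={processed}, dropped={dropped}")
```

Once the buffer fills up, the server keeps running but a steady share of frames never reaches the analysis stage, and any of them could have been the one with the best face angle. In relative terms, the figures quoted above are a 32% drop in FPS and a drop of about 17% in identifications.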

6. Quality of reference photos in the database

Degree of influence — high (31 false positives out of 1056 attempts).

The face vector used for recognition on video is built from the reference photo in the database. When that photo is of low quality, the result is a large number of false positives.
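For easier comparison, the counts reported above can be converted into shares of the 1,056 identification attempts. The short script below only does that arithmetic; factor 5 is left out because no count was given for it.

```python
ATTEMPTS = 1056

# Counts reported above for each factor (factor 5 has no count).
losses = {
    "Network bandwidth": 4,
    "Equipment stability": 11,
    "Camera image resolution": 22,
    "Camera sensor quality": 27,
    "Reference photo quality (false positives)": 31,
}

rates = {name: count / ATTEMPTS for name, count in losses.items()}

for name, rate in rates.items():
    print(f"{name}: {rate:.2%}")
# Network bandwidth: 0.38%
# Equipment stability: 1.04%
# Camera image resolution: 2.08%
# Camera sensor quality: 2.56%
# Reference photo quality (false positives): 2.94%
```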

How to manage internal factors?

High camera resolution is not that important. It is better to choose a specialized long-focus camera with a lower output resolution that still produces large faces in the frame. This reduces the requirements for network bandwidth, disk space for video storage, and video analytics server capacity.
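The trade-off can be checked with simple geometry. The sketch below uses a pinhole-camera approximation and ignores lens distortion; the face width of 0.16 m, the 15 m distance, and both camera configurations are assumed values for illustration, not the cameras used in the tests.

```python
import math

FACE_WIDTH_M = 0.16  # assumed average face width


def face_width_px(h_resolution_px, h_fov_deg, distance_m, face_width_m=FACE_WIDTH_M):
    """Approximate width of a face in pixels at a given distance.

    The scene width covered by the frame at that distance is
    2 * d * tan(FOV / 2); the face takes its proportional share of the
    horizontal resolution.
    """
    scene_width_m = 2 * distance_m * math.tan(math.radians(h_fov_deg) / 2)
    return h_resolution_px * face_width_m / scene_width_m


# Hypothetical wide-angle 4K camera versus a long-focus Full HD camera.
wide = face_width_px(h_resolution_px=3840, h_fov_deg=90, distance_m=15)
tele = face_width_px(h_resolution_px=1920, h_fov_deg=20, distance_m=15)
print(f"wide-angle 4K: {wide:.0f} px, long-focus Full HD: {tele:.0f} px")
```

In this example the lower-resolution long-focus camera yields a face almost three times wider in pixels than the wide-angle 4K camera, while producing a stream with a quarter of the pixels.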

It is better to process video at the edge (directly at the intersections where the cameras are installed) on specialized edge devices than to send a "rich stream" to the data center. This lowers the risk of losing identifications to transmission failures and cuts the cost of building and maintaining communication lines and switching equipment, as well as the cost of storing video in the data center.
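A rough estimate shows the scale of the difference in uplink load. Every number here is a hypothetical assumption (camera count, main-stream bitrate, size of a face crop with metadata, faces per hour); substitute the values from your own project.

```python
# All values below are illustrative assumptions, not data from the tests.
CAMERAS = 200
MAIN_STREAM_MBPS = 8.0            # bitrate of one main video stream
FACE_CROP_KB = 30                 # one face crop plus metadata, in kilobytes
FACES_PER_CAMERA_PER_HOUR = 600


def central_uplink_mbps(cameras, stream_mbps):
    """Uplink needed when every camera sends its main stream to the data center."""
    return cameras * stream_mbps


def edge_uplink_mbps(cameras, crop_kb, faces_per_hour):
    """Uplink needed when edge devices send only face crops and metadata."""
    megabits_per_face = crop_kb * 8 / 1000  # kilobytes -> megabits
    per_camera_mbps = megabits_per_face * faces_per_hour / 3600
    return cameras * per_camera_mbps


central = central_uplink_mbps(CAMERAS, MAIN_STREAM_MBPS)
edge = edge_uplink_mbps(CAMERAS, FACE_CROP_KB, FACES_PER_CAMERA_PER_HOUR)
print(f"central processing: {central:.0f} Mbit/s, edge processing: {edge:.0f} Mbit/s")
```

The absolute figures depend entirely on the assumptions, but the gap of two orders of magnitude is typical of the comparison between shipping full video and shipping only detection results.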

Monitor the quality of the reference photos in the database; otherwise the likelihood of false identifications and misses increases.

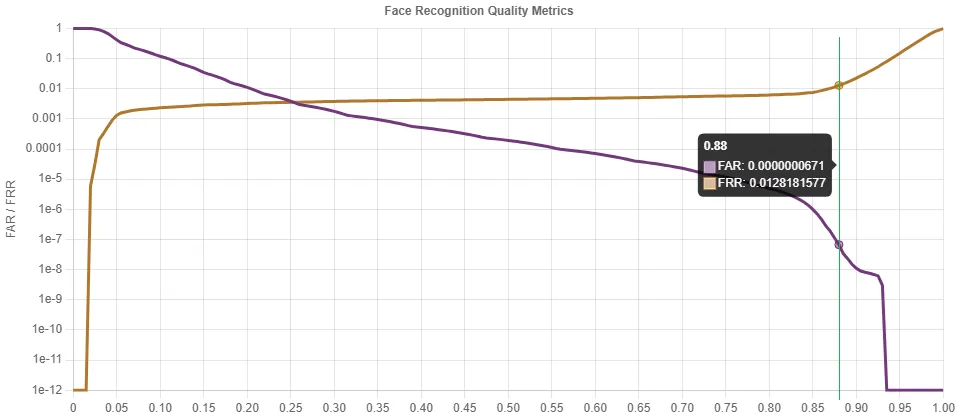

Security services do not have the resources to process false identifications. The principle "if this camera does not recognize a person, another one will" therefore makes it possible to raise the identification confidence threshold, which reduces the number of false positives and keeps the system from being discredited. The recommended threshold is chosen to limit false positives on databases of more than 500,000 faces.

To choose it, estimate how many people will pass in front of the camera per day and decide how many false positives the users (security staff, special services) are prepared to respond to. The FAR/FRR (False Acceptance Rate / False Rejection Rate) graph then lets you pick the optimal threshold. In our system, for example, this threshold is 87.6%.
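The threshold selection described above can be sketched as a search for the lowest threshold whose expected number of daily false alarms fits the operators' budget. The score lists are synthetic, and treating FAR as the per-passer-by probability of a false match against the whole database is an assumption of this sketch; it does not reproduce how the 87.6% value was obtained.

```python
def far_at(threshold, impostor_scores):
    """False Acceptance Rate: share of impostor scores at or above the threshold."""
    return sum(s >= threshold for s in impostor_scores) / len(impostor_scores)


def frr_at(threshold, genuine_scores):
    """False Rejection Rate: share of genuine scores below the threshold."""
    return sum(s < threshold for s in genuine_scores) / len(genuine_scores)


def pick_threshold(impostor_scores, genuine_scores, passers_per_day,
                   max_false_alarms_per_day, candidates):
    """Return (threshold, FAR, FRR) for the lowest threshold within the budget."""
    for threshold in sorted(candidates):
        far = far_at(threshold, impostor_scores)
        if far * passers_per_day <= max_false_alarms_per_day:
            return threshold, far, frr_at(threshold, genuine_scores)
    return None  # no candidate threshold satisfies the budget


# Synthetic similarity scores, for illustration only.
impostor = [0.41, 0.55, 0.62, 0.70, 0.74, 0.79, 0.83, 0.86, 0.88, 0.91]
genuine = [0.80, 0.85, 0.89, 0.90, 0.92, 0.93, 0.95, 0.96, 0.97, 0.99]

result = pick_threshold(impostor, genuine, passers_per_day=1000,
                        max_false_alarms_per_day=150,
                        candidates=[0.80, 0.85, 0.90, 0.95])
print(result)
```

Choosing the lowest threshold that fits the budget keeps FRR as small as possible for the accepted number of false alarms; a stricter budget pushes the threshold up and increases the share of missed identifications.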

Conclusion

To get the most out of a facial recognition system, you need to take a comprehensive approach to selecting and configuring all of its components, including the cameras, the video analytics server, and the data storage servers.

I hope these results and research materials help integrators of video surveillance systems with facial recognition avoid unnecessary errors in the design, installation, and operation of such systems.
