- AI

- A

What can the search for causal relationships in IT monitoring teach us?

The desire to understand why certain events occur is inherent in human nature. We constantly seek causal relationships to predict the future, make decisions, and improve our lives. But how does this desire manifest itself in the world of IT monitoring?

My name is Dmitry Litvinenko, I am a Data Scientist at the IT company ProofTech IT, and in this article, we will dive into the fascinating world of causality, exploring the parallels between how our brain establishes connections between events and how modern tools help monitoring specialists in this.

In the first part, we will discuss the main concepts, interesting examples from life and IT, and how exactly people and monitoring systems:

simplify this complex world of events;

build cause-and-effect relationships;

fall victim to false correlations.

Events and Their Abstraction

An event is any change in the state of the world or an individual system that we can observe or record. In everyday life, events can be a knock on the door, an app error on a smartphone, or the start of rain. In the IT world, events are considered log file entries, metric changes, trigger activations, and much more.

In Life

In life, we often observe sequences of events and try to find connections between them, but only when it is really important. And we do not look for connections with everything indiscriminately, but only with what is somehow related to our problem. "Somehow related to our problem" is actually quite difficult to determine.

Let's start with the fact that the world is very complex. The change in the state of every pedestrian on the street, every speck of dust, every molecule can be considered an event, and cause-and-effect relationships can be built at this level. The world is much more complex than us, and we cannot model it fully - we need to simplify. But how? After all, all objects are unique when examined in detail.

We introduce abstractions. Each word is, in essence, an abstraction. Sentences made up of many words are also abstract, but they better narrow down the field of what they can mean. There is no need to remember every specific fact that an object, being suspended in the air, then released, will fall. It is enough to formulate the rule "If an object is heavy, hangs in the air, nothing holds it, and I let it go, then it will fall" - and it will cover countless cases. For simplicity of narration, let it be like this: "the object stopped being supported" -> "the object falls" -> "the object fell".

What will this rule allow us to do?

Group.

Examples of groups: physics, movement in space, law of gravity, behavior of objects.

All these are tags that can be assigned to events, and by these tags, group them, determining the fact of "Some relation to our problem".

Predict.

"The object is falling", which means soon "the object will fall".

Look for the root cause.

"The object fell", which means "the object was falling".

Record anomalies.

"The object was falling" -> ..., but for some reason still did not fall - need to go check.

In IT monitoring

In IT monitoring, everything is also not simple. There are extremely many unique events, and building causal relationships among them is not only difficult but also not always useful. We need to group.

And here what?

Grouping (a smart word - clustering, combining objects into clusters).

Examples of groups: host "hosty-1", running on server "my_serv", using database "db-17"

Prediction.

There is "little memory left" on the server, which means it is likely that soon "the server will crash".

Root cause analysis.

"The server crashed", which means:

either "there was little memory left";

or someone "manually shut down the server";

or "changes were made to the application code";

or there was "unusually high request density".

Anomaly detection.

The number of requests to the "hosty-1" server suddenly increased threefold for no apparent reason, which may indicate a DDOS attack or a configuration error in the client sending the requests.

Why look for cause-and-effect relationships?

The question seems obvious. But the answer to it is more extensive than one might expect.

In life

For people, generally speaking, - to build a model of the world in their heads that would allow them to:

Predict.

Prediction helps to feel the future as the present, decide whether we like this present, and take actions to prevent an undesirable future.

When we think about an interview, imagining how we cannot answer some questions, we feel discomfort, letting the whole body know that something needs to be done now to correct this probable future. (Or actually not probable at all, but pessimistic, wound up by fears.)

Find root causes.

Often, it is impossible to directly correct the consequence - only by influencing the root cause can the consequences be changed. We narrow the field of potential root causes only to events related to the class of the problem. In the example below - software, internet, cellular communication. We also start the search with recent events.

The event that the taxi search application began to show an unforeseen error and refuse to call a car initiates the process of finding the root cause. Maybe the application itself is glitching, and it needs to be opened and closed? Maybe the connection is not catching? Ah, it seems I remember that about 3 hours ago I received a message from the mobile operator about an unsuccessful payment for the connection, which I only glanced at and immediately dismissed because I was focused on something else. The root cause of the problem is established and can be addressed.

As a bonus, notice anomalies.

Everything that does not fit into the working model of the world constitutes the unknown, and therefore - interesting, dangerous, and holds useful potential.

If someone in your environment behaves strangely, not fitting into your model, maybe they are hiding or plotting something, and it is worth checking.

In IT monitoring

In IT monitoring, the situation is similar. There is a complex world of actors using a large-scale system of many devices. We simplify it to a scheme, graph, or other object (for large systems, the main thing is not to a set of connections in the worker's brain), reflecting the system's operation. Likewise, this object will allow:

Forecasting.

If a chain of events repeats in the system, we can calculate how long the transitions between them take, how likely they are, and based on this, conclude at the beginning of such a chain what will follow and how soon it will follow.

Finding root causes.

When observing an error in a module, if it consists of a sometimes repeating chain (sometimes tree) of events, you can pay attention to the beginning of this chain (or the leaves of the tree). If there are no repeating chains, you can at least use the module's attributes: on which server it is deployed, which host it is accessed by, etc. Events with the same attributes will be the first to analyze.

Detecting anomalies.

Many parts of the IT system should work in a certain way, and changes in their behavior are undesirable. Therefore, if we could not explain the system's behavior over the past 10 minutes with our model, this may mean:

Something went wrong, and possibly soon we will learn about it in an event with the status WARNING, ERROR, or CRITICAL

The system topology has changed, and now the correct system model looks different

Correlation and causation

We often notice that some events happen simultaneously or follow one another. This makes us wonder: are these events related? Here, two concepts come into play — correlation and causation.

Correlation is a statistical relationship between two events. If two events occur together more often than by chance, we talk about correlation. However, this does not necessarily mean that one event causes the other.

Causality implies that one event is a condition for the occurrence of another event.

So it is important not to confuse correlation with causality! Both in life and in IT monitoring. How to build more truthful causal relationships will be discussed in the second part, but for now, let's deal with the concepts themselves.

In life

In life, we observe events, and if they are somewhat close (they can be abstractly grouped), two cases arise:

Events met nearby for the first time: in this case, we can resort to the existing model of the world in our head and see if we can use the causal relationships we have to build a probable path from one event to another.

Events met nearby for the second-third-tenth time, and the fact that we managed to predict B will finally catch our attention, and we will start thinking about why A -> B

In summer, ice cream consumption increases and the number of drownings simultaneously increases. There is a correlation between these events, but you may agree that eating ice cream is unlikely to increase the likelihood of drowning. Or does it...? Perhaps each ice cream eaten cools the body so much that the desire to dive into the water becomes irresistible. And with the growing number of ice creams eaten, a person loses the ability to adequately assess the depth and temperature of the water, which, in turn, leads to irreversible consequences.... Or the common cause of both is hot weather :)

In IT monitoring

In the IT world, the situation is similar.

You can look at the system behavior map and establish indirect causality.

You can with caution use statistics or a predictive machine learning model (the brain is limited in its ability to keep accurate statistics, so it uses the prediction method).

We can notice that the increase in server load coincides with the increase in application response time. The question arises: does the load cause the application to slow down, or are both of these events related to something else? If we mistakenly take correlation for causation, we may take the wrong actions. For example, start optimizing the application code when the problem is actually in the network.

How to distinguish correlation from causation?

Here we enter the territory of A/B tests, hypothesis testing, and the scientific method. We will list the basic approaches, but we will delve into this topic in the second part of the article, both for life and for IT monitoring.

Predictive ability

If knowledge of A allows you to confidently predict B, this is a plus for causation.

Experiment

If it is possible to directly cause event A and observe the occurrence of event B, this is a plus for causation.

Time distribution

If event B occurs at suspiciously normally distributed intervals after event A, this is a clear indication of at least indirect causation: either A -> B, or C -> A and C -> B.

Context analysis

If the correlating phenomena have sufficiently different contexts, this is a minus for direct causation. However, indirect (transitive) causation is still possible, we just haven't figured out how they are related yet.

False correlations

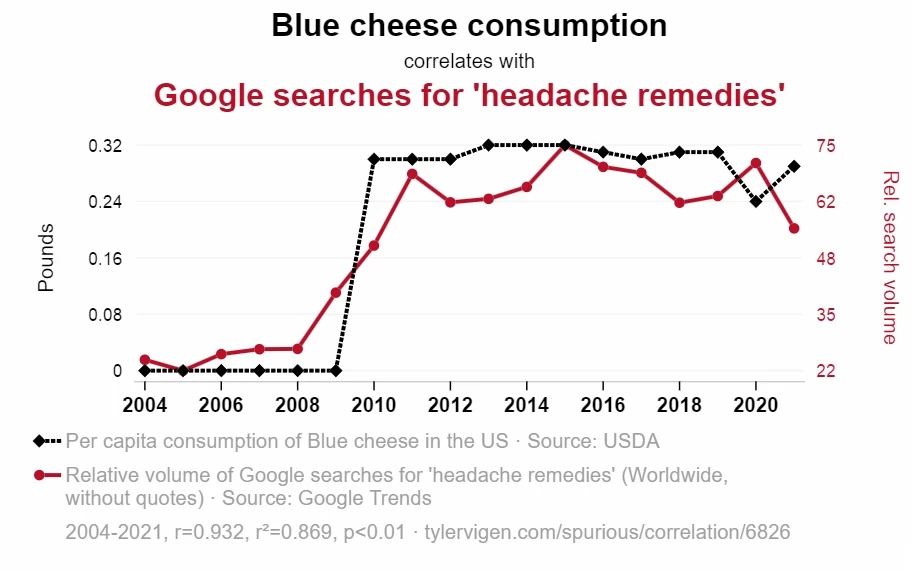

Consumption of blue cheese correlates with the number of searches for "headache remedies". r = 93.2%.

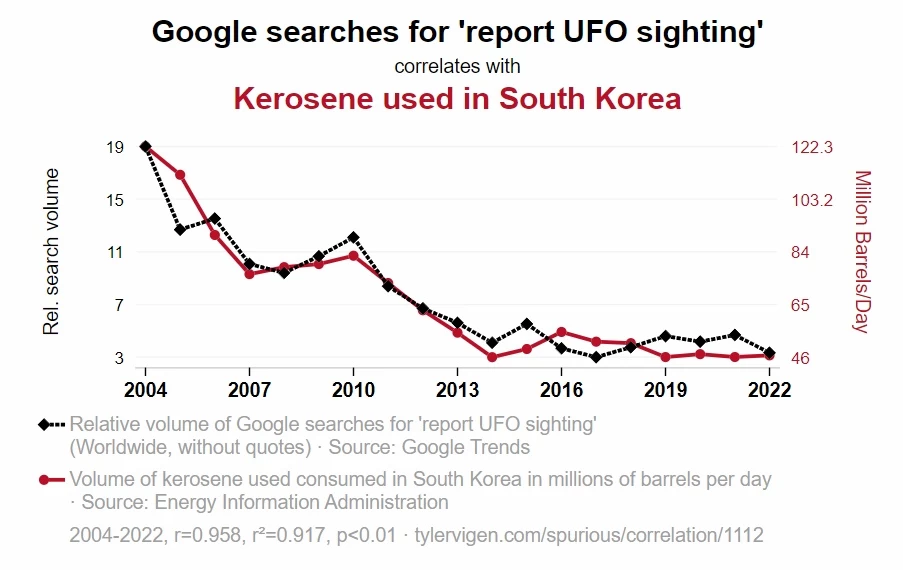

The number of Google searches about UFO sightings correlates with the volume of kerosene used in South Korea. r = 91.7%.

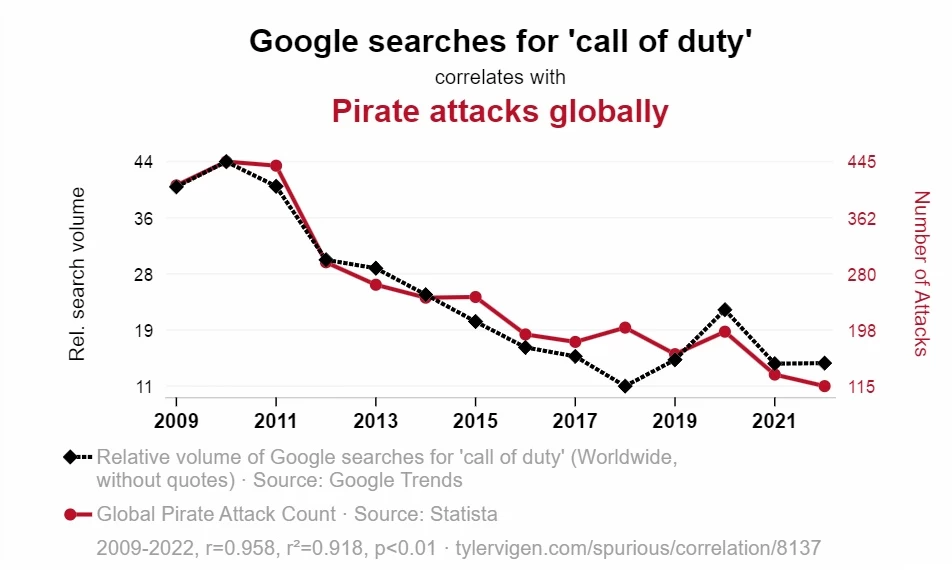

The number of Google searches for the game "call of duty" correlates with the number of pirate attacks in the world. r = 95.8%.

Obviously, there is no causal relationship between these events. There isn't even a common cause! It's just that if there is a lot of data, there will always be incredible coincidences among them - so incredible that two unrelated time series will have extremely high correlation.

This effect occurs when considering many different parameters in a sample. The smaller the sample and the more parameters measured, the more amazing "discoveries" can be made! In science, this is actively fought against, and the quality of scientific publications is determined, among other things, by the caution with which statistics are handled.

As they say, "There are three kinds of lies: lies, damned lies, and statistics."

What did we learn?

We learned the basis of our surprisingly effective orientation in the world of events!

It is important to group events by labeling them to facilitate filtering.

When solving an internet problem, consider events labeled: "electricity," "provider," "router" in recent times.

It is important to group events by meaning to simplify the world and derive abstractions.

The event "object fell" encompasses a lot of things.

It is important to build causal relationships between abstractions to:

Predict this world: "Object fell" -> "bang!";

Look for the root cause: "Bang!" - maybe some "object fell"

Find anomalies: "Sitting alone at home, ... -> "door slammed" - oh! scary! what slammed the door?"

And for all of the above to work, updating your map of causal relationships, it is important not to confuse correlation with causation! Events often happen simultaneously, but that doesn't mean one causes the other. The world is full of coincidences, and we must not forget that the only thing more incredible than the most incredible coincidences would be the absence of incredible coincidences!

The article is about the basics, but still, maybe you remembered relevant cases from life, funny mistakes, or just interesting insights?

Yes, the second part of the article will be out soon!

Write comment