A dedicated architecture for AI data centers: how Rubin surpasses Blackwell

NVIDIA recently announced that the release of the GB200 NVL72 supercomputer on the Blackwell architecture is delayed and that the GB300 superserver will be released in mid-2025. As a result, these devices will ship almost simultaneously with the Rubin architecture, even though the latter is positioned as next-gen. In this article, we look at these technologies and try to understand why the company is in such a hurry to release the new platform.

Why NVIDIA is in a hurry to develop Rubin

The Rubin architecture was presented at the Computex conference in 2024, together with the Blackwell version, which we covered in the blog in December. The technology is named after the astronomer Vera Rubin, whose observations provided some of the key evidence for the existence of dark matter. The architecture was supposed to appear in R100 (Rubin 100) series graphics processors and Vera central processors in 2026, but now this is expected earlier, in approximately mid-2025.

The reason for the rush is the rapid development of neural networks: AI models are becoming larger and more complex. Standard hardware does not provide the necessary computation and data transfer speeds, and it is not energy efficient either, which matters for large data centers with thousands of machines.

NVIDIA does not hide that it wants to lead the field of artificial intelligence technologies, so it focuses on performance, data transfer speed, and lower power consumption in its devices. As a result, the Rubin architecture should significantly accelerate both the training of neural networks and their response generation, and become a breakthrough in the AI market.

Features of the Rubin architecture

There is no detailed specification of the future devices yet, but NVIDIA CEO Jensen Huang disclosed some information about them at the Computex 2024 conference. Chips on the Rubin architecture will be manufactured by Taiwan Semiconductor Manufacturing Company (TSMC). The manufacturer will use a 3 nm process instead of the previous 5 nm one, which means the transistors will be packed more densely than in the previous generation.

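At the same time, the Rubin architecture will use a 4x reticle design (sometimes rendered as a "4x grid"), meaning the package spans roughly four times the area of a single lithography exposure. This should allow NVIDIA to achieve higher performance in Rubin-based devices with lower power consumption.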

NVIDIA does not plan to stop there: Taiwan Semiconductor Manufacturing (TSMC) reports that the next packages, expected by 2026, will have a 5.5x reticle (grid) size and carry 12 HBM memory stacks on a 100 × 100 mm substrate. The solution is expected to deliver 3.5 times more computing power than previous generations. By 2027, TSMC plans to introduce a CoWoS package with an 8x reticle size on a 120 × 120 mm substrate.
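To get a feel for what these multipliers mean in physical terms, the sketch below converts them into approximate areas. It assumes that one exposure field is 26 mm × 33 mm (858 mm²), a commonly quoted lithography limit that the article itself does not state; the function names are illustrative only, and the Blackwell multiplier is taken from the comparison table later in the article.

```python
# Rough package-area arithmetic for the "Nx" multipliers quoted in the text.
# ASSUMPTION: one exposure field is 26 mm x 33 mm (858 mm^2); this figure
# is not given in the article and is used here only for illustration.
RETICLE_MM2 = 26 * 33


def interposer_area_mm2(multiplier: float) -> float:
    """Approximate area covered by an 'Nx' design, in mm^2."""
    return multiplier * RETICLE_MM2


def substrate_fill(multiplier: float, side_mm: float) -> float:
    """Fraction of a square substrate taken up by that area."""
    return interposer_area_mm2(multiplier) / (side_mm * side_mm)


# (label, multiplier, substrate side in mm, or None if the text gives none)
designs = [
    ("Blackwell", 3.3, None),
    ("Rubin", 4.0, None),
    ("2026 package", 5.5, 100),
    ("2027 package", 8.0, 120),
]

for label, mult, side in designs:
    line = f"{label}: {mult}x -> about {interposer_area_mm2(mult):.0f} mm^2"
    if side is not None:
        share = substrate_fill(mult, side)
        line += f", {share:.0%} of a {side} x {side} mm substrate"
    print(line)
```

Under this assumption, both announced substrate sizes leave roughly half of the substrate area free for routing and other components, which is only an estimate and not a published figure.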

In large-scale computing environments such as AI clusters or supercomputers, high CPU and GPU performance is not the only important factor. Such systems are distributed: hundreds or even thousands of machines perform operations simultaneously. The bandwidth of the switches and adapters that carry data between different parts of the cluster is therefore just as crucial.

To increase speed, NVIDIA plans to upgrade the memory in next-gen chips to the HBM4 standard. This will raise device bandwidth to 3.6 TB/s (3600 GB/s), twice that of the previous generation.
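A doubling of bandwidth matters because response generation is often limited by how quickly model weights can be read, not by raw compute. The sketch below is a back-of-the-envelope upper bound, taking the bandwidth figure as 3.6 terabytes per second and 1.8 for the previous generation. The model size (70 billion parameters at 2 bytes each) is a hypothetical example and does not come from NVIDIA.

```python
# Upper bound on how many times per second the full set of model weights
# can be streamed from memory. Real throughput will be lower.
# ASSUMPTIONS: the model size and precision below are hypothetical.
HYPOTHETICAL_PARAMS_BILLION = 70
BYTES_PER_PARAM = 2  # e.g. 16-bit weights


def max_weight_passes_per_second(bandwidth_tb_s: float,
                                 params_billion: float,
                                 bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Bandwidth divided by model size: a ceiling, not a prediction."""
    model_bytes = params_billion * 1e9 * bytes_per_param
    return bandwidth_tb_s * 1e12 / model_bytes


for name, bandwidth in [("previous generation", 1.8), ("next generation", 3.6)]:
    rate = max_weight_passes_per_second(bandwidth, HYPOTHETICAL_PARAMS_BILLION)
    print(f"{name}: at most {rate:.1f} full weight reads per second")
```

Whatever model size is plugged in, the ratio between the two generations stays at 2, which is the only part of this estimate that follows directly from the figures in the text.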

Which Rubin-based solutions NVIDIA has announced

In total, NVIDIA announced six devices, including central and graphics processors, as well as peripherals. It is already known that the lineup will include:

Rubin R100. A graphics processor with 8 stacks of HBM4 memory. The developers emphasize that the GPU will be optimized for parallel computing when processing neural network queries.

Rubin Ultra. A flagship GPU with 12 stacks of HBM4 memory. The GPU will be compatible with the new Vera CPU, which will minimize latency in information exchange and reduce power consumption. The device will also be optimized for parallel computing.

Vera CPU. A next-generation CPU designed specifically for deep neural network computations. The solution is integrated with new communication technologies — NVLink 6 and ConnectX 9 SuperNIC. The Vera Rubin superchip, which combines the functions of both graphics and central processors, will be based on this device.

Sixth-generation NVLink Switch. An interconnect for linking GPUs into a single fabric. Bandwidth: 3600 GB/s. Allows combining up to 16 graphics processors within a single cluster.

CX9 SuperNIC 1600 Gb/s. A network adapter with a data transfer rate of 1600 Gb/s that will provide uninterrupted communication between advanced CPUs and GPUs. Today, the most modern data centers use ConnectX-8 SuperNIC devices with a bandwidth of 800 Gb/s.

X1600 IB/Ethernet Switch. A network switch that supports the InfiniBand (IB) and Ethernet standards. It is compatible with QSFP-DD1600 ports and allows connecting up to 128 devices. Bandwidth: 1600 Gb/s per port.
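The difference between adapter generations is easier to judge with a rough transfer-time estimate. The sketch below assumes the adapter figures are in gigabits per second (Gb/s), as is usual for network links, and uses the standard traffic formula for a ring all-reduce, in which each node sends 2 × (N − 1) / N times the payload. The payload size and node count are hypothetical, and latency and protocol overhead are ignored.

```python
# Idealized time for one ring all-reduce over a network link.
# ASSUMPTIONS: payload size and node count are hypothetical; latency,
# congestion, and protocol overhead are ignored.

def gbit_to_gbyte_per_s(gbit_per_s: float) -> float:
    """Convert gigabits per second to gigabytes per second."""
    return gbit_per_s / 8


def ring_allreduce_seconds(payload_gb: float, nodes: int,
                           link_gbit_per_s: float) -> float:
    """Each node sends 2 * (N - 1) / N times the payload over its link."""
    traffic_gb = 2 * (nodes - 1) / nodes * payload_gb
    return traffic_gb / gbit_to_gbyte_per_s(link_gbit_per_s)


PAYLOAD_GB = 10  # hypothetical gradient size
NODES = 16       # hypothetical number of participants

for name, speed in [("ConnectX-8, 800 Gb/s", 800), ("CX9, 1600 Gb/s", 1600)]:
    seconds = ring_allreduce_seconds(PAYLOAD_GB, NODES, speed)
    print(f"{name}: about {seconds * 1000:.1f} ms per all-reduce")
```

In this idealized model, doubling the link speed halves the exchange time; in a real cluster the gain would be smaller because fixed latencies do not shrink with bandwidth.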

For now, we can only discuss the future architecture based on the developer's statements and insider rumors: no chips or other devices built on this technology have been shown yet. Unfortunately, at the recent CES press conference, NVIDIA CEO Jensen Huang did not share details about Rubin. Overall, it seemed to us that the company has shifted its focus from data center equipment to platforms for robots and autonomous AI systems, including driverless vehicles.

Should we expect an AI breakthrough from NVIDIA

NVIDIA claims that Rubin will surpass Blackwell in all respects:

| Architecture | Rubin | Blackwell |
|---|---|---|
| Grid design | 4x | 3.3x |
| Memory standard | HBM4 | HBM3E |
| Bandwidth | 3600 GB/s | 1800 GB/s |
| Switching standards support | NVLink 6 | NVLink 5 |

All this should allow neural networks running on clusters built with the new hardware to train and generate responses faster.

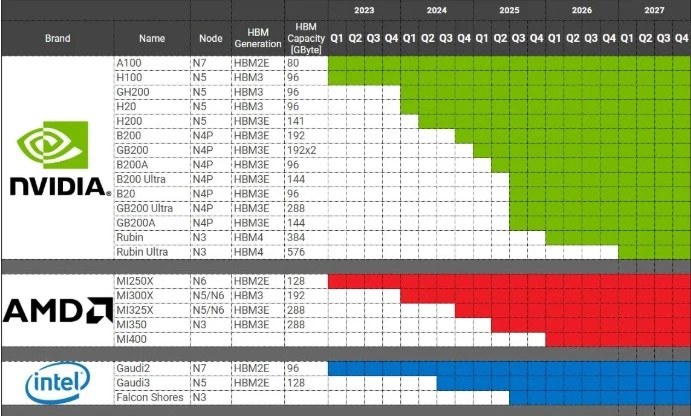

NVIDIA's competitors, AMD and Intel, are not standing still either. They are preparing to respond to GB300 and Rubin with new architectures and devices. Their upcoming solutions, MI350 and Falcon Shores, will be released in the second and third quarters of 2025. These are also based on the N3 process, but their memory is still a step behind NVIDIA's.

Although NVIDIA is ahead of its competitors in the number of solutions, it is too early to judge their quality. Things will become clear only after the new technologies are released and deployed in real data centers. We at mClouds will keep following the news and cover the most interesting developments in the blog. Subscribe to stay updated.
