Alive Photos: Creating a Private Steganographic Audio Player with Python and PyQt6

Hello, tekkix!

Have you ever wished your photos could tell stories? Not figuratively, but literally. And what if these stories were meant just for you? Imagine sending your friend what looks like an ordinary PNG file, but hidden inside is a personal audio greeting that no mailing service or messenger would ever see. Or keeping a digital photo diary, where every snapshot conceals a voice memo with your thoughts, safely tucked away from prying eyes.

This isn't magic; it's steganography. Today I'll talk about the ChameleonLab project, specifically about its unique feature: a steganographic image player. It's a desktop application that lets you not only hide audio files inside images, but also play them back just like you would in a regular player, opening up a new way to share information privately and creatively. The project already offers ready-made builds for Windows and macOS.

Why do this? Privacy and creativity

The idea of hiding one file inside another isn’t new. But most utilities are made for specialists. We focused on practical use for everyone.

Confidentiality comes first. In the digital age, it's hard to be sure that the data you send stays private. Our player offers a solution: you can send a photo over any open channel (email, social network), and only someone with the ChameleonLab app will discover and play the hidden audio message. It's a way to share private information without attracting suspicion.

"Living" photo albums: Save short voice notes or ambient sounds right inside your photos. A photo of your child with their first word, a concert snapshot with a bit of the performance, a birthday picture with greetings—all stored in a single PNG file, hidden from others.

Education and art: Imagine an interactive museum exhibit or an art lesson. Students open reproduction images on tablets, and each one "speaks" in the guide’s voice about its story, its artist, and its technique.

How it works: Diving into the code

At the core is the classic LSB (Least Significant Bit) steganography method. In short, you take the least significant bits of each color component (R, G, B) in every pixel and replace them with bits from your audio file. When only one or two bits per component are used, each value shifts by a tiny amount, so the changes are practically invisible to the human eye.
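To make the idea concrete, here is a minimal sketch of LSB embedding, not the project's actual implementation. It assumes one bit per channel value and a hypothetical 4-byte length header so the reader knows where the payload ends; the real format used by steganography_core is not shown in this article. The carrier is modelled as a flat sequence of channel bytes (R, G, B, R, G, B, ...), and the names hide_lsb and reveal_lsb are illustrative.

```python
HEADER_BYTES = 4  # assumed length prefix; not taken from the ChameleonLab source


def hide_lsb(carrier: bytes, payload: bytes) -> bytearray:
    """Write payload bits into the lowest bit of each carrier byte."""
    framed = len(payload).to_bytes(HEADER_BYTES, "big") + payload
    if len(framed) * 8 > len(carrier):
        raise ValueError("payload does not fit into the carrier")
    out = bytearray(carrier)
    for i, byte in enumerate(framed):
        for bit_pos in range(8):
            bit = (byte >> (7 - bit_pos)) & 1  # most significant bit first
            idx = i * 8 + bit_pos
            out[idx] = (out[idx] & 0xFE) | bit  # clear the LSB, then set it
    return out


def _read_bytes(carrier: bytes, start: int, count: int) -> bytes:
    """Rebuild `count` bytes from the LSBs beginning at carrier index `start`."""
    result = bytearray()
    for i in range(count):
        value = 0
        for bit_pos in range(8):
            value = (value << 1) | (carrier[start + i * 8 + bit_pos] & 1)
        result.append(value)
    return bytes(result)


def reveal_lsb(carrier: bytes) -> bytes:
    """Read the length header, then the payload it announces."""
    header_bits = HEADER_BYTES * 8
    if len(carrier) < header_bits:
        raise ValueError("carrier is too small to hold a header")
    length = int.from_bytes(_read_bytes(carrier, 0, HEADER_BYTES), "big")
    if header_bits + length * 8 > len(carrier):
        raise ValueError("no valid payload found")
    return _read_bytes(carrier, header_bits, length)
```

Each carrier byte changes by at most 1, which is why the picture looks the same after embedding. Using more bits per channel raises capacity at the cost of more visible changes.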

As a container, we use the PNG format because it compresses data losslessly. Using JPG for this is disastrous, since its lossy compression algorithms will destroy and distort the hidden information.

Our app has two key components: the Creator and the Player.

Step by step: Creating your first audio-photo

We’ve built a "Creator" tab into the player, making the process as simple as possible.

Choose the container image. Drag the file into the left window. Supported formats are PNG, JPG, and BMP; whatever the input format, the output is saved as PNG.

Choose the audio file. Drag the audio file (.mp3 or .wav) into the right window.

Check the capacity. The program automatically calculates whether the audio file will fit into the image. If it doesn't, you can check the "Automatically expand..." box, and the program will add black pixels at the bottom of the image to increase its capacity without distorting the original.

Create! Press the "Create and Save" button to get the hybrid PNG file.
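The capacity check in the third step comes down to simple arithmetic. The sketch below is our illustration under stated assumptions: it ignores any header overhead the real format may add, and the function names capacity_bytes and padded_height are hypothetical, not taken from the ChameleonLab source.

```python
def capacity_bytes(height: int, width: int, channels: int = 3, n_bits: int = 1) -> int:
    """Payload bytes an image can hold when n_bits are used per channel value."""
    return height * width * channels * n_bits // 8


def padded_height(payload_size: int, height: int, width: int,
                  channels: int = 3, n_bits: int = 1) -> int:
    """Rows the canvas needs so that payload_size bytes fit; never shrinks the image."""
    bits_per_row = width * channels * n_bits
    needed_bits = payload_size * 8
    needed_rows = (needed_bits + bits_per_row - 1) // bits_per_row  # integer ceiling
    return max(height, needed_rows)
```

For example, a 1920x1080 RGB image at one bit per channel holds 777,600 bytes, so a longer recording needs either more bits per channel or extra rows added at the bottom.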

Behind the scenes of the "Creator"

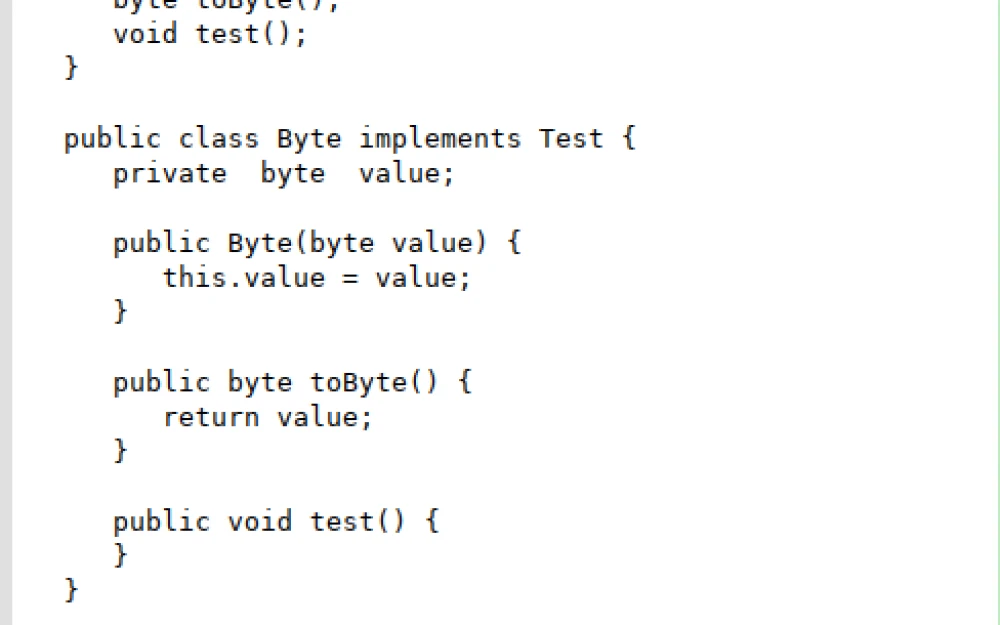

This process is managed by the background worker PlayerCreateWorker. The main task is handled in the function steganography_core.hide(). Before hiding the audio, we create the "payload" in a simple format:

[File name in UTF-8] + [Separator symbol '|'] + [Audio file bytes]

This allows us to know the original file name when extracting. The worker in the background thread does all the heavy lifting: expands the image (if needed), reads the audio file, and calls stego.hide() to bitwise embed the data into the pixels.
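The packaging format above can be sketched as a pair of helpers. This is an illustration of the described layout, with hypothetical function names (pack_payload, unpack_payload); it is not code from the project.

```python
from pathlib import Path

SEPARATOR = b"|"


def pack_payload(audio_path: str, audio_bytes: bytes) -> bytes:
    """Build [file name in UTF-8] + '|' + [audio file bytes]."""
    name = Path(audio_path).name
    return name.encode("utf-8") + SEPARATOR + audio_bytes


def unpack_payload(packaged: bytes) -> tuple[str, bytes]:
    """Split on the first separator only; the audio itself may contain '|' bytes."""
    name_bytes, sep, audio = packaged.partition(SEPARATOR)
    if not sep:
        # No separator at all: treat everything as audio with an unknown name
        return "", packaged
    return name_bytes.decode("utf-8", errors="replace"), audio
```

One caveat of this layout: a file name that itself contains the '|' character would be cut at the wrong place, so a length-prefixed name would be a more robust choice.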

Here’s the key part of the code from workers.py:

    # From file ui/workers.py

    class PlayerCreateWorker(QtCore.QObject):
        # ... signals ...

        def __init__(self, carrier_data, audio_path, n_bits, should_pad):
            # ...

        def run(self):
            try:
                # ... calculating the required size ...
                if required_size > capacity_bytes:
                    if not self.should_pad:
                        raise ValueError(t("embed_log_conclusion_fail"))

                    # Image canvas expansion logic
                    h, w, c = self.carrier_data.shape
                    # ... calculating new dimensions ...
                    new_h = math.ceil(required_pixels / w)
                    padded_image = np.zeros((new_h, w, c), dtype=np.uint8)
                    padded_image[0:h, :, :] = self.carrier_data
                    self.carrier_data = padded_image

                self.progress.emit(40)
                with open(self.audio_path, 'rb') as f:
                    audio_bytes = f.read()

                secret_filename = Path(self.audio_path).name
                packaged_data = secret_filename.encode('utf-8') + b'|' + audio_bytes

                self.progress.emit(60)
                output_payload = stego.hide(self.carrier_data, packaged_data, self.n_bits, is_encrypted=False)

                self.progress.emit(95)
                self.finished.emit(output_payload)
            except Exception as e:
                self.error.emit(str(e))

The heart of the player: How to extract and play the sound

Playback is the reverse process, and it also runs in a background thread so the interface doesn't freeze.

Instant preview: As soon as the user selects a track, we immediately load and display the image.

Extraction in the background: At the same time, PlayerRevealWorker starts. It opens the PNG, reads the LSB bits of the pixels, and reconstructs the hidden data package ([filename]|[audio]).

Playback: When the worker completes, it passes the extracted audio bytes to the main thread. We save these bytes to a temporary file on disk and pass it to the standard QMediaPlayer for playback.

Cleanup: The temporary file is automatically deleted after playback or when the program is closed.
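The playback and cleanup steps above rely on a temporary file. Here is a minimal sketch of that part using only the standard library; the helper names write_temp_audio and remove_temp_audio are hypothetical, and the suffix is an assumption (the project could derive it from the embedded file name).

```python
import os
import tempfile


def write_temp_audio(audio_bytes: bytes, suffix: str = ".mp3") -> str:
    """Save extracted audio to a temporary file and return its path."""
    fd, path = tempfile.mkstemp(suffix=suffix, prefix="stego_audio_")
    with os.fdopen(fd, "wb") as f:
        f.write(audio_bytes)
    # In a PyQt6 app the path would then typically be handed to the player,
    # e.g. player.setSource(QUrl.fromLocalFile(path)).
    return path


def remove_temp_audio(path: str) -> None:
    """Delete the temporary file; a file that is already gone is not an error."""
    try:
        os.remove(path)
    except FileNotFoundError:
        pass
```

Keeping the suffix matters because media backends often use the file extension as a hint for the container format. On Windows it is safer to stop the player before deleting the file, since an open file cannot be removed.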

Here's what the extraction worker looks like:

    # From ui/workers.py

    class PlayerRevealWorker(QtCore.QObject):
        finished = QtCore.pyqtSignal(bytes)  # audio_bytes
        error = QtCore.pyqtSignal(str)
        progress = QtCore.pyqtSignal(int)

        def __init__(self, image_path):
            super().__init__()
            self.image_path = image_path

        def run(self):
            try:
                self.progress.emit(20)
                carrier_data, _, _ = file_handlers.read_file(self.image_path)

                self.progress.emit(50)
                packaged_data, _, found = stego.reveal(carrier_data)

                self.progress.emit(90)
                if not found:
                    raise ValueError(t("player_error_no_audio"))

                try:
                    # Drop the "[file name]|" prefix; split on the first separator only
                    _, audio_bytes = packaged_data.split(b'|', 1)
                except ValueError:
                    # No separator found: treat the whole payload as audio
                    audio_bytes = packaged_data

                self.finished.emit(audio_bytes)
            except Exception as e:
                self.error.emit(str(e))

Difficulties and solutions

During development we ran into several classic problems:

Thread crashes: "QThread: Destroyed while thread is still running" is the headache of anyone working with multithreading in PyQt. We solved this by giving the QThread a parent widget (QtCore.QThread(self)). This ties the thread object's lifetime to the parent, so the garbage collector cannot delete it prematurely while the thread is still running.

UI freezing: Initially all operations were done on the main thread, which caused the app to freeze for a few seconds. Moving all heavy logic into worker classes (QObject) and running them via QThread completely solved this, making the UI responsive.

Conclusion

The ChameleonLab project and its image player are an example of how you can take a well-known technology and find a new, creative and, most importantly, private use for it. What we ended up with isn't just a utility, but an intuitive tool for creating a new kind of content, where every image has a second, hidden audio layer.

It's not just a creative tool for making "living" photos, but also a way to protect personal audio memories and messages in our excessively open digital world.

The ChameleonLab project is already available as ready-to-use builds for Windows and macOS, allowing anyone to try creating their own "living" and secret photos today.

We'll keep listening to your feedback and improving ChameleonLab. Thanks so much for your participation and support!

Download:

Download the latest Windows version: ChameleonLab 1.4.0.0

Download the latest macOS version: ChameleonLab 1.4.0.0

Our Telegram channel: t.me/ChameleonLab
