Camera without a lens: how Stable Diffusion captures reality

Finally, you can buy a camera without a lens, forget about setting the right ISO and shutter speed, and just watch the results.

Today the AI product market is flooded with copies of generative neural networks, and Telegram craftsmen are churning out thousands, if not tens of thousands, of bots wired to GPT. But among these "laziest" developments, this one stood out for its idea... If you google the device's name, you get about two pages of search results with news about a new camera that "generates reality".

The Paragraphica camera is a 2023 device from a Netherlands-based designer that generates "photographs" using artificial intelligence algorithms and location data...

But what's the catch?

A camera with a super-prompt and super NLP algorithm

When a photographer points the camera at an object or a specific place, it first reads the exact location data (latitude, longitude), and then matches it with various sources of information. For example, the camera can use information about weather conditions, time of day, landmarks, surroundings, and even historical or cultural data related to that place.

This data is then processed using machine learning algorithms that interpret it and turn it into a textual description of the scene. What makes the camera peculiar is that it does not capture images in the traditional sense, as conventional cameras with optical sensors do. And no, there is no lidar (laser scanner) here either...

Instead, it "reads" the surrounding world through the prism of data and turns this data into a textual description, an interpretation of what a photograph could have captured. This description is then used as a prompt for Stable Diffusion.

We get a metaphorical view of photography as a process not of capturing a visual image, but of creating a narrative based on objective and subjective data about the world. But that's okay, we got a little carried away with philosophical reasoning...

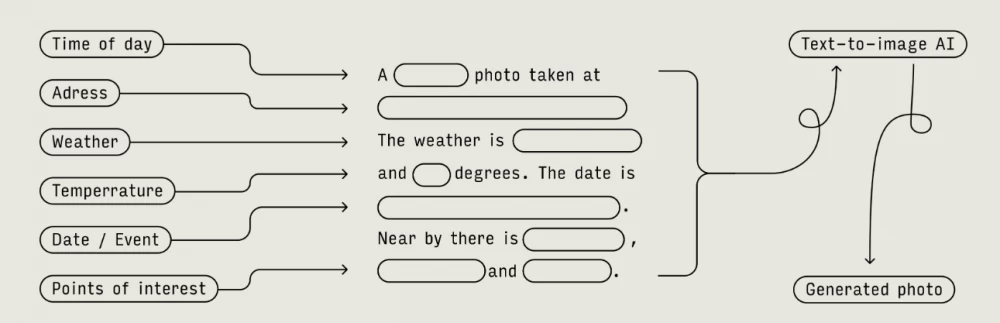

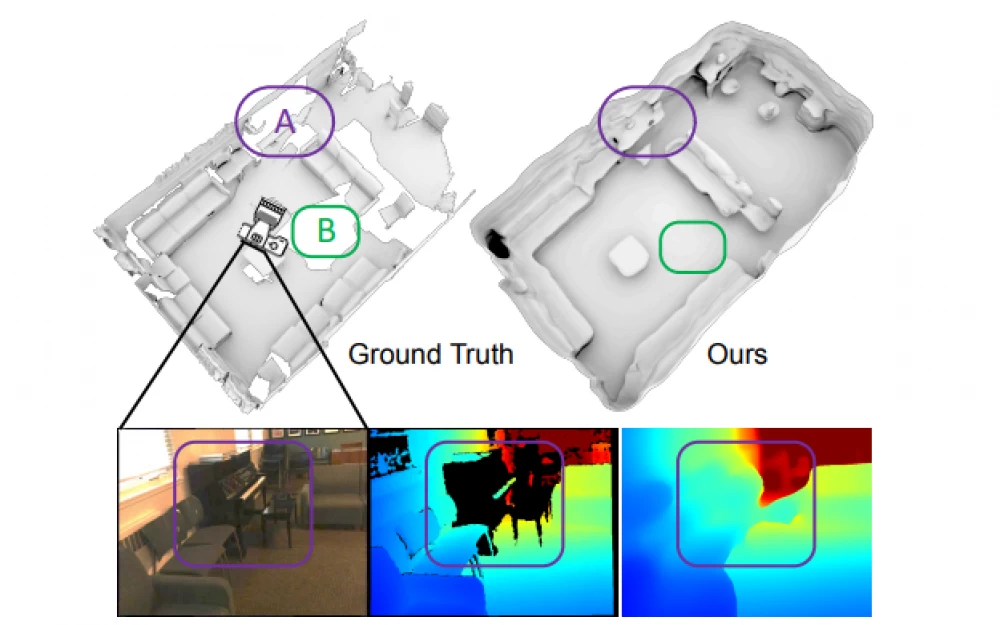

The camera connects through APIs to external data sources (meteorological services, mapping applications, or social networks) and works in real time. You can see it above. The author also published the Noodl node diagram on his personal website.

The diagram shows how data is obtained and processed, and how it flows between the various scripts and modules.

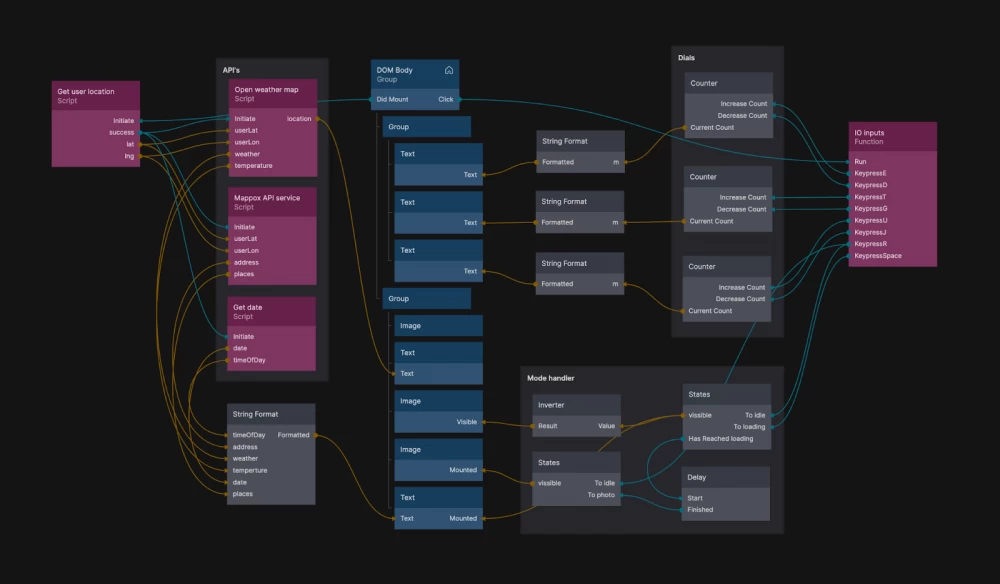

Get user location: The initial module is responsible for obtaining user location data (latitude and longitude). These are key input parameters that are then passed to other modules for processing.

APIs: Here we see several APIs that interact with the system:

Open Weather Map. Requests weather information based on latitude and longitude, returns data on weather conditions and temperature.

Mapbox API. Provides information about the exact address and nearby objects based on geolocation.

Get date. This module is responsible for obtaining the current date and time of day.

String Format. This block is responsible for formatting data such as time of day, address, weather, temperature, date, and nearby places. This data is structured in text format for further use.

DOM Body. The user interface section that responds to the "Click" event and updates the displayed information. It consists of text blocks and graphic elements (e.g., images) that are updated based on the received data.

Dials. Dials are counters that can be increased or decreased, changing certain parameters. For example, the user can influence scene parameters by controlling camera modes or other aspects.

Mode Handler. This module is responsible for managing interface states, such as switching between modes (turning on the camera, waiting, loading). It also manages the visibility of elements on the screen and transitions between different system states.

IO Inputs: This section handles keyboard input. The user can trigger actions with keys, such as starting the system (Run key) or issuing other commands with keys like D, T, G, and others.
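The Mode Handler described above can be pictured as a small state machine. The following is only a sketch under assumptions: the mode names come from the description (camera, waiting, loading), while the transition table, the screen element names, and the `ModeHandler` class are hypothetical and are not read off the author's diagram.

```python
from enum import Enum


class Mode(Enum):
    WAITING = "waiting"  # idle, nothing requested yet
    CAMERA = "camera"    # viewfinder with the live text description
    LOADING = "loading"  # prompt sent, image not back yet


# Allowed transitions (an assumption for illustration, not read off the diagram).
TRANSITIONS = {
    Mode.WAITING: {Mode.CAMERA},
    Mode.CAMERA: {Mode.LOADING, Mode.WAITING},
    Mode.LOADING: {Mode.CAMERA},
}

# Which hypothetical screen elements each mode shows.
VISIBLE = {
    Mode.WAITING: {"splash"},
    Mode.CAMERA: {"description_text", "dials"},
    Mode.LOADING: {"spinner"},
}


class ModeHandler:
    def __init__(self):
        self.mode = Mode.WAITING

    def switch(self, new_mode):
        """Move to new_mode, rejecting transitions the table does not allow."""
        if new_mode not in TRANSITIONS[self.mode]:
            raise ValueError(f"cannot go from {self.mode.name} to {new_mode.name}")
        self.mode = new_mode

    def visible_elements(self):
        """Return the set of element names that should be shown right now."""
        return VISIBLE[self.mode]


handler = ModeHandler()
handler.switch(Mode.CAMERA)   # e.g. the Run key was pressed
handler.switch(Mode.LOADING)  # e.g. the "Click" event fired
print(handler.mode.name, sorted(handler.visible_elements()))
```

Keeping the transitions in a table makes it easy to see which state changes are legal and to reject the rest in one place.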

To create a text description from the data obtained through Open Weather Map, the Mapbox API, and the current date module, the system goes through several stages of data processing and interpretation.

Throughout, a Raspberry Pi serves as the computing platform that runs these operations.

First, the Raspberry Pi (the author uses a Raspberry Pi 4) obtains the location coordinates and sends them to the Open Weather Map and Mapbox APIs, then receives the data they return.

Open Weather Map returns information about current weather conditions: temperature, humidity, cloudiness, and wind.
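As a rough illustration of this step, here is a minimal sketch of how such a request could be built and how the mentioned fields could be read from the response. It assumes the public OpenWeatherMap current-weather endpoint with metric units. The helper names `weather_url` and `summarize_weather` and the sample payload are made up for this example and are not the author's code.

```python
from urllib.parse import urlencode

OWM_ENDPOINT = "https://api.openweathermap.org/data/2.5/weather"


def weather_url(lat, lon, api_key):
    """Build a current-weather request URL for the given coordinates."""
    query = urlencode({"lat": lat, "lon": lon, "appid": api_key, "units": "metric"})
    return f"{OWM_ENDPOINT}?{query}"


def summarize_weather(payload):
    """Pick the fields the article mentions out of a decoded JSON response."""
    return {
        "conditions": payload["weather"][0]["description"],
        "temperature_c": payload["main"]["temp"],
        "humidity_pct": payload["main"]["humidity"],
        "cloudiness_pct": payload["clouds"]["all"],
        "wind_m_s": payload["wind"]["speed"],
    }


# Hand-written sample in the shape of a current-weather response (not real data).
sample = {
    "weather": [{"main": "Clear", "description": "clear sky"}],
    "main": {"temp": 25.0, "humidity": 40},
    "clouds": {"all": 0},
    "wind": {"speed": 3.1},
}

print(weather_url(52.37, 4.9, "YOUR_API_KEY"))
print(summarize_weather(sample))
```

The parsing is kept separate from the network call so it can be tried on a saved response without an API key.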

Mapbox, using geolocation, provides the exact address and information about nearby attractions, infrastructure objects, or other interesting places, such as parks or monuments.
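A reverse-geocoding lookup of this kind might look roughly like the sketch below. It assumes the Mapbox Geocoding v5 endpoint (`mapbox.places`), which takes longitude before latitude and returns a GeoJSON feature collection. The `types` filter, the helper names, and the sample payload are choices made for this example, not details confirmed by the project.

```python
from urllib.parse import urlencode

MAPBOX_ENDPOINT = "https://api.mapbox.com/geocoding/v5/mapbox.places"


def reverse_geocode_url(lat, lon, token):
    """Build a reverse-geocoding URL; note that longitude comes first."""
    query = urlencode({"access_token": token, "types": "address,poi"})
    return f"{MAPBOX_ENDPOINT}/{lon},{lat}.json?{query}"


def split_features(payload):
    """Return (address, nearby place names) from a decoded GeoJSON response."""
    address = None
    nearby = []
    for feature in payload.get("features", []):
        kinds = feature.get("place_type", [])
        if "address" in kinds and address is None:
            address = feature["place_name"]
        elif "poi" in kinds:
            nearby.append(feature["text"])
    return address, nearby


# Hand-written sample in the shape of a geocoding response (not real data).
sample = {
    "type": "FeatureCollection",
    "features": [
        {"place_type": ["poi"], "text": "Example Park",
         "place_name": "Example Park, Example City"},
        {"place_type": ["address"], "text": "Example Street",
         "place_name": "Example Street 35, Example City"},
    ],
}

print(reverse_geocode_url(52.37, 4.9, "YOUR_TOKEN"))
print(split_features(sample))
```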

The date module provides the current date and a time-of-day label (day, evening, or night).
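A date module like this can be very small. The sketch below is an assumption about how it could work: the hour boundaries for each label are arbitrary, and the function names are invented for illustration.

```python
from datetime import datetime


def time_of_day(moment):
    """Map an hour to a coarse label; the boundaries are arbitrary assumptions."""
    hour = moment.hour
    if 5 <= hour < 12:
        return "morning"
    if 12 <= hour < 18:
        return "day"
    if 18 <= hour < 22:
        return "evening"
    return "night"


def date_fields(moment):
    """Return the two values a prompt would need: a label and the date."""
    return {"time_of_day": time_of_day(moment), "date": moment.date().isoformat()}


print(date_fields(datetime(2023, 6, 1, 8, 30)))
print(date_fields(datetime.now()))
```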

After receiving this data, the Raspberry Pi passes it to an algorithm that quickly formats the prompt and sends it to Stable Diffusion.

For example, weather data can be transformed into a description like "Today is a clear sunny day with a temperature of 25°C," and information from the Mapbox API can complement this description with location details: "You are near Central Park."

Time of day data from the date module can be used to create phrases like "the morning light gently illuminates the surroundings." But in reality... there is no secret fine-tuned GPT here...

Why did this project get so hyped but fail to find angel investors?

At its core is a simple algorithm that requests data from different sources and simply throws it into Stable Diffusion. But how can that be? Surely at the foundation there must be a neural network that uses NLP to turn the data into a super-prompt, which in turn produces super photos incredibly close to reality?

Still, the first image above shows that the prompts are templated, which means there is no NLP neural network here. Roughly speaking, the data is simply substituted into a form that is sent to Stable Diffusion. So why do the photos turn out so close to reality? It's an illusion.
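To make "substituted into a form" concrete, here is a minimal sketch of templated prompt building. The template wording, the `build_prompt` helper, and the sample values are hypothetical and are not the author's actual prompt. The point is only that plain string formatting is enough, with no language model involved.

```python
PROMPT_TEMPLATE = (
    "A photo taken in the {time_of_day} at {address}. "
    "The weather is {weather} and {temperature} degrees. "
    "The date is {date}. Nearby there is {nearby}."
)


def build_prompt(time_of_day, address, weather, temperature, date, nearby):
    """Fill the fixed template with values collected from the data sources."""
    nearby_text = ", ".join(nearby) if nearby else "nothing notable"
    return PROMPT_TEMPLATE.format(
        time_of_day=time_of_day,
        address=address,
        weather=weather,
        temperature=temperature,
        date=date,
        nearby=nearby_text,
    )


prompt = build_prompt(
    time_of_day="morning",
    address="Central Park",
    weather="clear and sunny",
    temperature=25,
    date="2023-06-01",
    nearby=["a park", "a monument"],
)
print(prompt)
```

The resulting string is what would be passed to the image model as its text prompt. Every "photo" from the same place, weather, and hour starts from the same sentence.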

In fact, a prompt like "You are near Central Park Netherlands on Avenue Street 35 in the morning" does not point the AI to a specific place. It serves as a hook that steers the model toward certain kinds of streets, architecture, weather, and time of day, all of which remain very general parameters. The angle in degrees determines the position relative to the generated photo.

In a sense, the developer's small stroke of genius lies in a minimal prompt that yields the closest possible result even from the most generalized parameters.

Credit is also due to the developers of Stable Diffusion, and in particular to the giant training dataset, which probably contains enough images of all kinds of flora and fauna, as well as landmarks (not only of Venice, but also of the German town of Celle).

There was also a marketing move here: the project literally promises photos taken from an unreachable reality, while in practice it is just a set of data tied to a single geolocation, which brings us closer to a specific place only in general terms.

The project probably failed not because of this approach, but because of the artifacts and inaccuracies that show up in the very first crash test: try generating the Kurchatov monument in Chelyabinsk at an angle of 15 degrees with a ZhiznMart in the neighboring building... Sometimes this generalization really does produce results very similar to a live photograph, and sometimes it looks blatantly fake.

Nevertheless, the author really did come close to his source of inspiration, or more precisely to its picture of the world: the view of the star-nosed mole, which assembles a generalized world from a limited set of tactile information.

The star-nosed mole lives and hunts underground, where light is useless. Consequently, it has evolved to perceive the world through its finger-like nasal appendages, which gives it an unusual and intelligent way of "seeing". This amazing animal became an ideal metaphor and inspiration for how nearly impossible it is for humans to empathize with other intelligent beings and imagine how they perceive the world.

As a result, the project turned out to be less a profitable, technologically simple startup than a genuine photo project and a postmodern statement...
