I was a designer for 6 years, creating images for news, and then neural networks arrived

In 2022, I was just a designer in the PR department — designing social media, creating images for news. I thought I'd be making posts my whole life.

This story for my blog was told by Alexey Perminov

Now I work fully with external clients of our company. In addition to graphic design for SMM, I now do interfaces, 3D, and motion design.

In this article, I’ll talk about how neural networks turned me from a narrow SMM specialist into a multi-skilled creator, show real cases, and share working techniques I use every day.

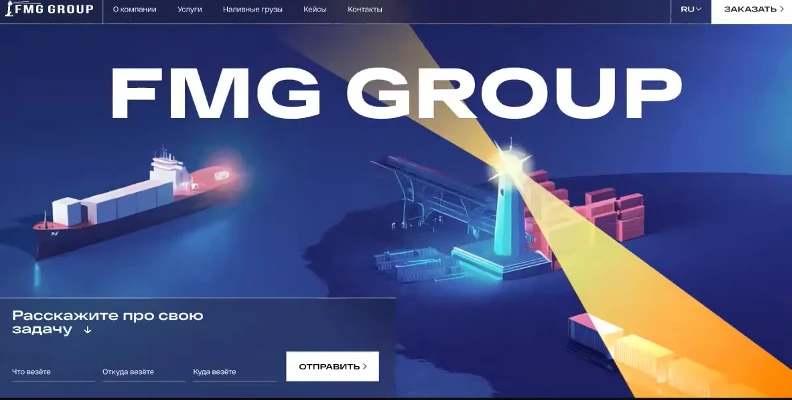

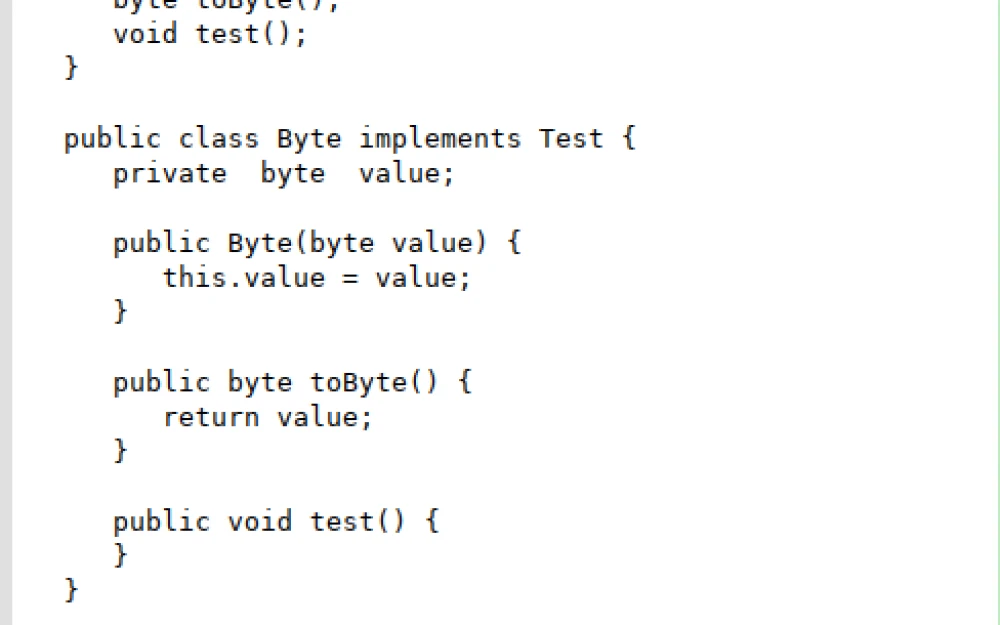

How we created the concept of a freight transport website

Task: In the concept for a freight transport company, show that we can deliver absolutely anything. Even an AI startup with Indians — we’ll get it where it needs to go.

Stage 1: From idea to visual

Recently we got a client — a freight transport company with a lighthouse in their logo. They wanted an original website with a fun idea. During a call, the concept came up: a lighthouse-rocket. You scroll through the site, see the lighthouse everywhere, and in the end it turns out to be a rocket.

We went to GPT, because it understands prompts well and generates quickly, and created a lighthouse-rocket to show the client the concept:

I described the transformation of the lighthouse into a rocket and added a dramatic reveal, a plot-twist visual. In other words, I explained the storyline and mood to the neural network: not just a static image of a lighthouse-rocket, but the exact moment of transformation with a cinematic surprise effect.

The neural network had to convey the emotion of astonishment and the dynamics of transformation, not just draw a hybrid lighthouse-rocket.

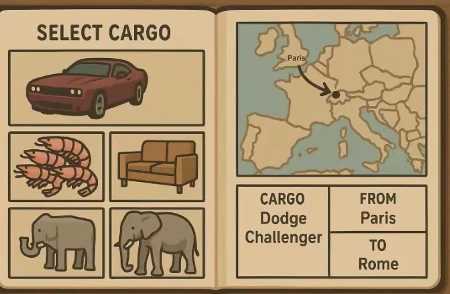

There was also a request for a mini-game — I sent a Flappy Bird reference and said: replace the bird with a ship, add a sea background:

The client wanted interactive elements on the site, so we suggested a game where you fill in a cargo boarding pass — here we’re sending a Dodge from Paris to Rome. Neural networks aren’t great with geography, but for a concept that’s not so important:

Additionally, the clients wanted storytelling.

The main thing is, I never told the neural network that it was for a website concept. We had the idea in our heads and I simply asked it to generate images. I focused only on the visual result.

We got 10–12 conceptual images in different styles. We showed them to the client — they liked everything. We chose the lighthouse-rocket as the main concept. True, we later had to tone it down — the clients realized we were getting too absurd. Final result — 8 stylistically consistent slides of the concept for 3D implementation.

Before, the process was: concept → illustrator → art director → revisions → me → 3D artist. Now I did everything myself and the art director approved it. Instead of a week working with an illustrator, we had a ready concept in a day. We shortened approval chains and saved budget.

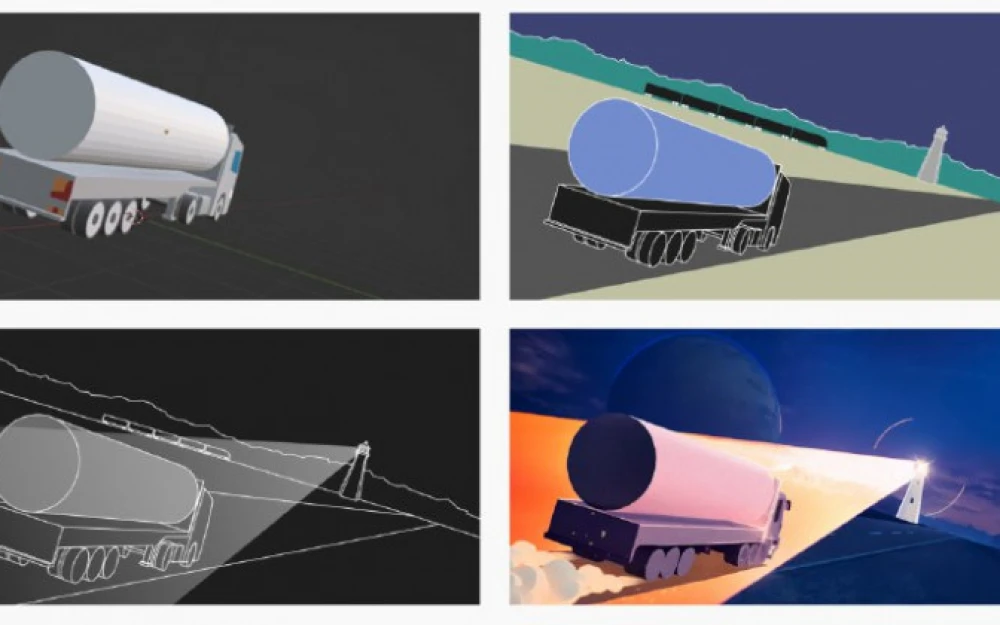

Stage 2: Creating sketches by hand

After the client approved the basic lighthouse-rocket idea, it was necessary to create a detailed storyboard of the entire website. I did it, the art director approved it, but at the 3D stage we brought in our 3D and VFX specialist so that she could help with shaders and scene optimization.

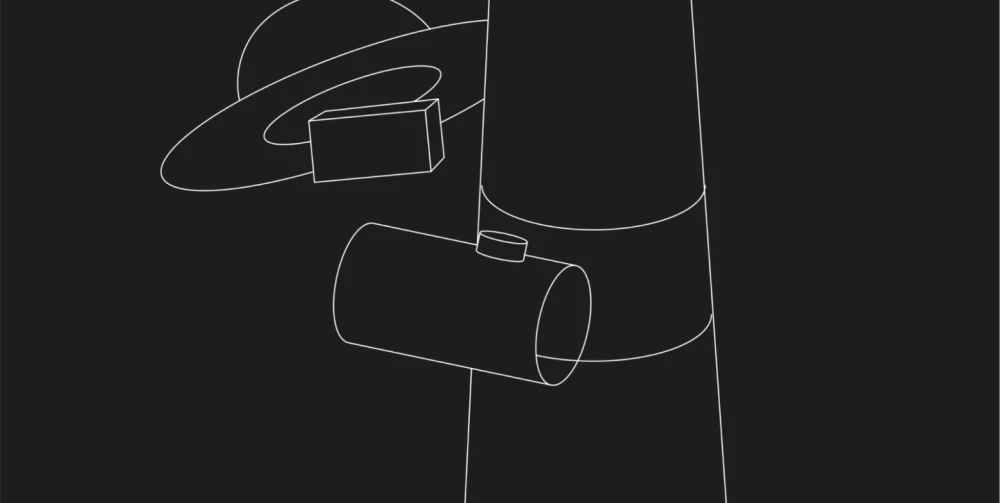

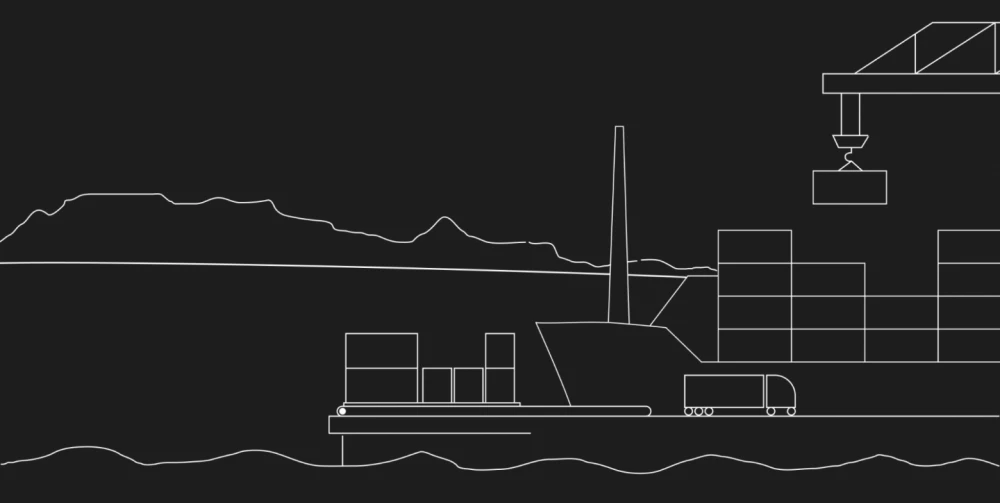

I started making more detailed drafts and sketches, beginning with outlines in Figma.

It’s funny how my inability to draw by hand is compensated by my 3D skills: I block out a basic scene in Blender, trace the outlines by hand, and end up with a nice 2D sketch. This hybrid approach gives you technically correct perspective and composition even without academic drawing skills.

Workflow scheme:

Blender — creating a basic 3D scene with correct proportions

Manual outlining — turning 3D into a 2D sketch with the necessary details

I created rough outlines as a storyboard of what would happen on each scroll — essentially, frame-by-frame animation of the future site, where scrolling would gradually transform the lighthouse into a rocket.
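The scroll-driven storyboard idea can be sketched in a few lines. This is only an illustration, not the code of the actual site: the function name `storyboard_position` and the assumption that scroll progress is normalized to the range 0.0 to 1.0 are hypothetical, and the default of 8 frames simply mirrors the number of concept slides.

```python
from typing import Tuple


def storyboard_position(scroll: float, frame_count: int = 8) -> Tuple[int, float]:
    """Map scroll progress (0.0 to 1.0) to a storyboard frame and a blend factor.

    Returns (index, blend): `index` is the frame currently shown, and `blend`
    says how far (0.0 to 1.0) the transition toward frame `index + 1` has gone.
    """
    if frame_count < 2:
        raise ValueError("need at least two frames to transition between")
    # Clamp so overscroll at either end of the page stays on the first or last frame.
    scroll = min(max(scroll, 0.0), 1.0)
    scaled = scroll * (frame_count - 1)
    # The last transition runs from frame_count - 2 to frame_count - 1.
    index = min(int(scaled), frame_count - 2)
    return index, scaled - index


# Halfway down the page with 8 frames: between frame 3 and frame 4.
index, blend = storyboard_position(0.5)
```

Each storyboard sketch then corresponds to one `index`, and `blend` is the in-between state the 3D scene has to animate.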

These were extremely simple sketches: sticks instead of people, rectangles instead of buildings, circles instead of ships. The goal was to show composition and movement, not beauty. These outlines would become the basis for a neural network, which would turn them into stylized concepts.

The first problem appeared right away — when I sent the simple outlines to the neural network, it didn’t understand what was depicted.

So sometimes I had to make more detailed sketches and collages so the neural net would better understand where each object was. Like these:

As a result, we got detailed sketches with clearly readable objects, ready to be fed into the neural network.

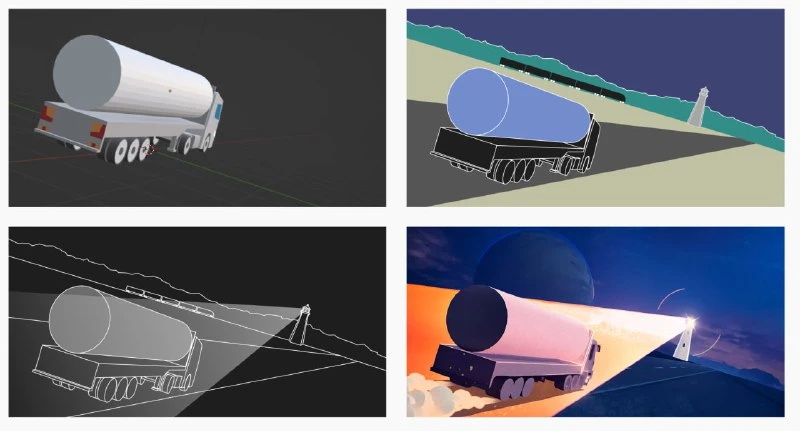

Stage 3: Stylization

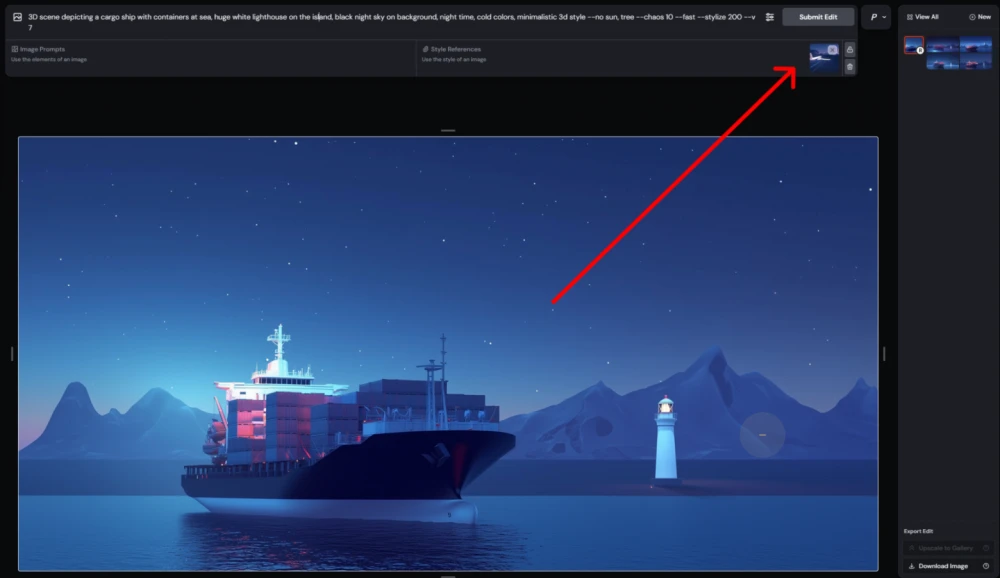

Generation

I sent these exact images to Midjourney, which has a retexturing function. Essentially, it keeps the contours of the source picture but overlays an artistic style and whatever you write in the prompt.

The algorithm is simple:

Upload the outline from Figma

Turn on retexturing mode

Write a prompt: "cinematic lighting, blue and orange color scheme, industrial maritime atmosphere"

The neural net keeps my composition but makes everything look beautiful

For the following images, I used the first one as a style reference so the neural network would take the colors and style from it. It didn’t always work, and even when it did, not always on the first try. But overall the approach works quite well: you generate one image first, then base all the rest on it.
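One simple way to keep a series consistent is to reuse the exact same style phrase in every prompt. Below is a minimal sketch of that idea, assuming the prompts are assembled as plain text before being pasted into the generator. The helper `build_prompts` and the two scene descriptions are hypothetical; only the style phrase comes from the workflow above.

```python
# Style phrase taken from the retexturing step; everything else is illustrative.
STYLE = "cinematic lighting, blue and orange color scheme, industrial maritime atmosphere"


def build_prompts(scene_descriptions, style=STYLE):
    """Append the same style phrase to every scene description."""
    prompts = []
    for scene in scene_descriptions:
        # Drop stray whitespace and trailing punctuation so the join stays clean.
        scene = scene.strip().rstrip(",.")
        prompts.append(f"{scene}, {style}")
    return prompts


# Hypothetical scene descriptions, one per storyboard slide.
scenes = [
    "lighthouse on a rocky coast at dusk",
    "cargo ship entering the harbor",
]
for prompt in build_prompts(scenes):
    print(prompt)
```

This does not replace the style reference image, it only removes one source of drift: accidentally wording the style differently from slide to slide.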

I had to switch between tools right in the middle of the process. Some slides were done in Stable Diffusion, which gives more control over stylization when Midjourney drifts into excessive detail or cartoonishness.

Stable Diffusion was developed by programmers for programmers, while Midjourney was made by programmers for designers. Midjourney is more artistic, with better aesthetic taste, and more casual: perfect when you need beautiful, pleasant, harmonious sketches. If someone has never worked with neural networks and asks which one to try, I always say: go to Midjourney.

Stable Diffusion is a more advanced tool. It is harder to install, but it runs locally on your own computer and doesn’t depend on servers. It is also more controllable: it follows the prompt more closely and has more functions for controlling generation. For example, you can install a plugin that lets you set the pose a character will stand in. Midjourney doesn’t have this. Midjourney’s retexturing function appeared recently, maybe six months to a year ago, and it doesn’t always work, plus you can’t fine-tune it.

We wanted the concepts to be as close as possible to the final result so as not to confuse the client, so it was important to convey the final look accurately. Midjourney didn’t manage it: it either drew too much detail or went cartoonish. So some slides had to be done in Stable Diffusion. The approach is the same: you give it a sketch, and ControlNet keeps the contours of the objects while recoloring them and applying the style.

Stable Diffusion is also very handy when you need detailing or refinement. If a Midjourney image looks good but some parts need to be blurred or re-generated, I go to Stable Diffusion.

So the strategy is: start in Midjourney for overall aesthetics, but if the client isn’t happy with the result — switch to Stable Diffusion for precise adjustments.

That way, for each slide we determined the optimal tool, producing stylized concepts with preserved composition.

For video content I use a different workflow:

Midjourney + Recraft — generating key frames

Photoshop — element compositing

Immersity (formerly Leiapix) — creating a pseudo-3D effect for depth

Hailuo (Minimax) and klingAI — animating static images

After Effects — final assembly with sound and effects

This pipeline allows creating dynamic concept presentations that impress clients much more than static slides.

Stage 4: Refinement and post-processing

There was a lot of work with color correction.

Combinations of blue and yellow-orange didn’t work — the neural network either made it too yellow, too orange, or colored the wrong elements.

So, a lot of work in Photoshop was focused on color. Selective correction, masks, fine-tuning the balance. It took as long as the generation process itself, but without this, the concepts would have looked like a student's craft.
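To make "selective correction" concrete, here is a small sketch of the underlying idea: change only the pixels whose hue falls in a chosen range and leave everything else alone. It is an illustration in plain Python, not what Photoshop does internally, and the function name `shift_hue_range` and the sample hue values are assumptions chosen for the example.

```python
import colorsys


def shift_hue_range(pixels, hue_min, hue_max, hue_shift):
    """Shift the hue of pixels whose hue lies within [hue_min, hue_max] degrees.

    `pixels` is a list of (r, g, b) tuples with 0-255 channels. Pixels outside
    the range are returned unchanged, which is roughly what a color mask does.
    """
    result = []
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        if hue_min <= h * 360 <= hue_max:
            # Move the hue, wrapping around the color wheel if needed.
            h = ((h * 360 + hue_shift) % 360) / 360
            r, g, b = (round(c * 255) for c in colorsys.hsv_to_rgb(h, s, v))
        result.append((r, g, b))
    return result


# Pull yellows (hue around 60 degrees) toward orange, leave blues untouched.
corrected = shift_hue_range([(255, 255, 0), (0, 0, 255)], 40, 70, -20)
```

In a real image the range and shift would be tuned by eye, and the mask would usually be feathered instead of being a hard cutoff.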

We got a series of 8 stylistically unified slides with a single color palette in the client's corporate colors, cinematic lighting with proper shadows, preserved composition from the initial sketches, and a technical yet not cold style.

Previously, such a series of concepts would have taken 2-3 weeks of an illustrator’s work plus several rounds of approvals. Now I had finished concepts after 4 days of work.

But this is definitely not a magic button: half the time was spent on iterations and color correction. If I had been a former illustrator and could have fixed things faster by hand, the "traditional art + neural networks" combination would have made it possible to do this in a day in Photoshop. It’s not so much a time saving as a saving of human resources and approval headaches.

Stage 5: 3D Implementation

After the concepts were approved, we moved to 3D—completely manual work in Blender. The only thing done through AI was generating textures for the mountains in the background, which were later used in the 3D model.

GPT helps write scripts for Blender and After Effects: you describe the task and get automation code (Python for Blender, JavaScript-based scripts for After Effects). This is about the technical side, not the visual one.
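For a sense of scale, this is the kind of small Blender script such a request might produce. It is a hypothetical example, not one of the scripts from this project: it places a row of placeholder cubes for blocking out a scene. `bpy.ops.mesh.primitive_cube_add` is part of Blender's Python API (2.8 and later), while the helper names and default values are assumptions. The `bpy` import is guarded so the position math can also be run outside Blender.

```python
try:
    import bpy  # only available inside Blender's bundled Python
except ImportError:
    bpy = None


def blockout_positions(count, spacing=3.0):
    """Return evenly spaced (x, y, z) locations along the X axis, centered on the origin."""
    offset = (count - 1) * spacing / 2
    return [(i * spacing - offset, 0.0, 0.0) for i in range(count)]


def add_blockout_cubes(count, spacing=3.0, size=1.0):
    """Add one placeholder cube per position; only computes positions outside Blender."""
    positions = blockout_positions(count, spacing)
    if bpy is not None:
        for location in positions:
            bpy.ops.mesh.primitive_cube_add(size=size, location=location)
    return positions


# Run from Blender's Scripting tab to drop five cubes into the current scene.
add_blockout_cubes(5)
```

Scripts like this save clicks on repetitive setup, and the modeling itself stays manual.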

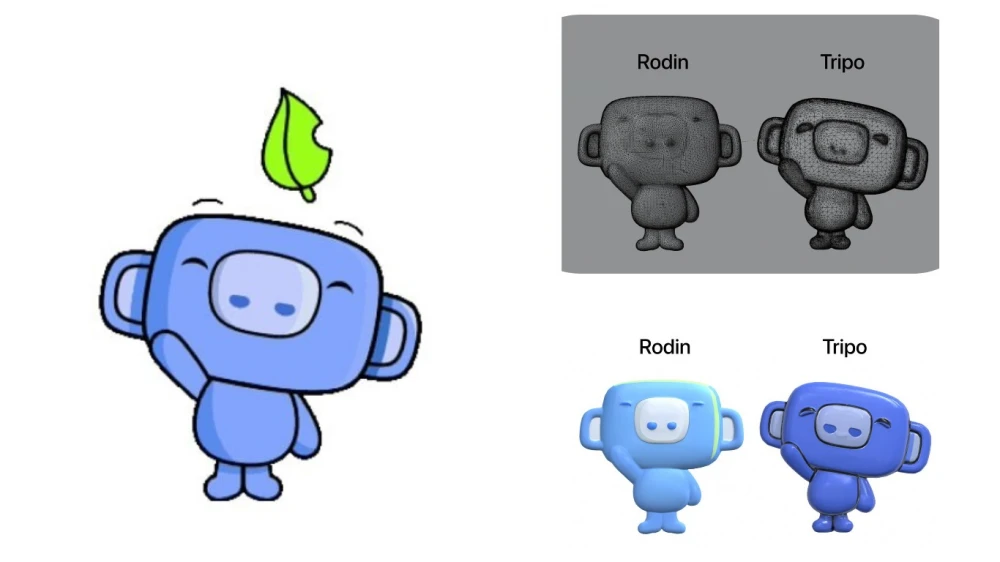

I occasionally test new 3D neural networks—Luma AI, Rodin, Tripo.

My 3D skills didn’t develop thanks to neural networks; AI just accelerated some processes. Previously, I spent hours searching for ready-made materials; now I generate the texture I need in minutes.

Otherwise, everything is done manually. But I keep an eye on how generative 3D AI is developing: I understand that it is the future, just not here yet.

Emotional Truth of Working with Neural Networks

First, honestly about the pain. How does working with neural networks differ from working with other tools? Is it fascinating? Quite. Is it faster? It varies. Is it emotionally hard? Absolutely.

How does working with traditional tools like Photoshop, After Effects, and Blender go? There is a task you haven’t solved before. You have no experience or skills, but you need to solve it. You go watch tutorial videos on YouTube, read articles, and spend several exhausting hours experimenting on your own, discovering new features along the way.

And after spending enough time, you finally solve the task—"Phew! Cool! I’m a 3D wolf and Motion ninja, I’m super cool, I’m satisfied with myself and with the new experience and skills I’ve gained."

How does the process work in Midjourney? You sit down and write prompts. A lot of prompts. Long prompts, short prompts. If the prompts don’t work, there’s no clear, objective reason why. Either the neural network doesn’t have enough capabilities, or you’re just dumb and writing the prompt wrong, or maybe the randomness isn’t on your side today.

You can’t go learn it on YouTube, and all those Telegram channels about “prompt engineering” that supposedly know all the secrets and are about to share them are garbage that either doesn’t work in reality or works randomly.

And here you sit, not knowing who to be angry at: yourself or the robot? And what happens when the task is solved? You feel nothing, except perhaps the desire to never again solve tasks with neural networks, because you still haven’t figured out whether you are a cool specialist who managed to find the right prompt, or whether the robot’s neurotransmitters glitched and it decided to do you a favor and give you what you wanted.

But there’s another side. Thanks to neural networks I now make concepts, got into 3D, and into motion. AI gives more versatility, more branches as a specialist. Before, if you needed concepts, you went to an artist. Now I can do it myself.

Main takeaway: AI is like switching from horses to cars, not the end of transportation

AI doesn’t destroy the design industry — it offers to switch from horses to cars. Transportation hasn’t gone anywhere, it still exists, it’s just that everyone switched from horses to cars and now it’s faster. In the same way AI doesn’t replace a designer, but expands their capabilities, allowing one specialist to handle the tasks of an entire team while maintaining quality and creative control.

Neural networks gave me more versatility. I used to be a designer in a PR department working on internal tasks, but thanks to neural networks I now make concepts, got into 3D, and into Motion. I feel my importance — I’ve got the muscle not only to make interfaces, but also to generate beauty in neural networks.

But working with AI requires a mindful approach. Two key principles:

1. Always ask “why?”

Rule: if it can be solved without neural networks, let’s solve it without them. Use a neural network only if it’s faster, cheaper, or the result will be more beautiful and better.

2. It’s never a magic button

People unfamiliar with neural networks often see beautiful pictures and think — you gave a prompt to the neural net, pressed one button, and it generated something. Indeed, with cats and nice portraits, neural networks really can manage with one click. But if you’re making something complex — like a website concept — you spend a week just making the concepts, and only then move on to 3D. It’s not a magic button, and you’re not going to finish it in two or three hours.

AI in design is not a revolution, it’s an evolution. And like any evolution, it takes time, patience, and understanding that a tool always remains a tool. What matters is who’s controlling it.

In the Telegram channel I’ve posted a complete cheat sheet of eight neural networks for designers. Subscribe to not miss new articles!
