
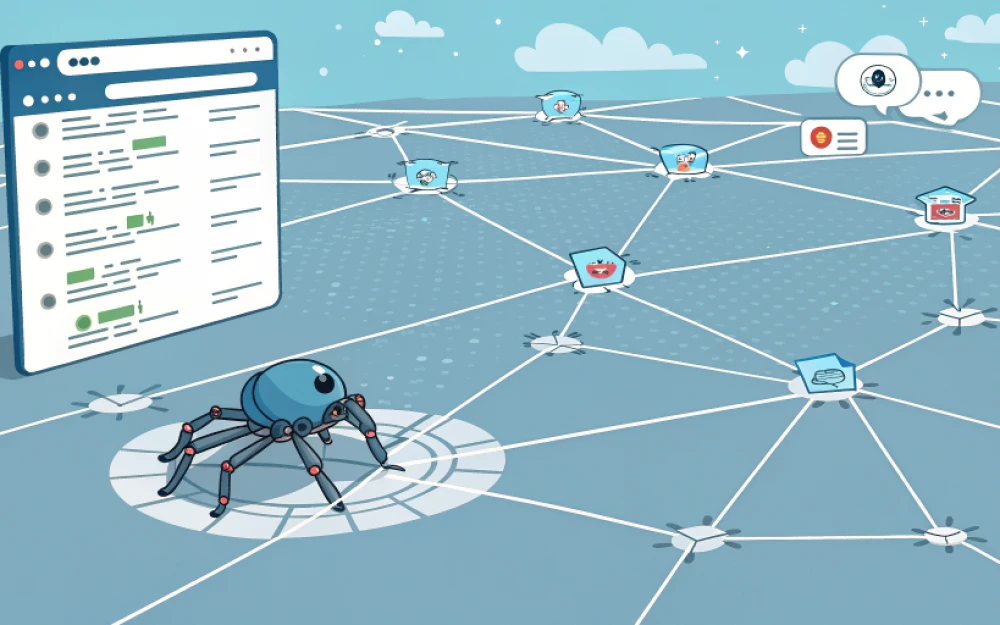

Parsing Telegram channels, groups, and chats with LLM processing

Hello everyone! You have probably experienced this: you open a Telegram chat and there are thousands of new messages from the past day. Somewhere in this "hodgepodge" is the reply to your question or a discussion of the topic you need. Or perhaps you need to track certain messages for business purposes.

Of course, you can spend a lot of time searching manually, but it's much more interesting to teach a userbot to parse the chat history and create a convenient database for semantic search.

This is where the combination of the Qdrant vector database and an LLM comes into play:

The userbot collects messages and converts them into embeddings.

Qdrant stores these vectors and retrieves only the closest fragments upon request.

The LLM receives exactly these pieces and formulates the final answer in human language.

If any of the terms are unclear, we recommend reviewing our previous articles on working with Qdrant. Links to them will be provided at the end of the article.

In this article, we will walk through building such an application, from basic chat parsing to semantic search with an LLM.

How does parsing Telegram through a bot work?

When we say "semantic search," it's important to understand that this is not magic, but a very specific chain of steps.

Let's go through everything step by step:

1. Collecting messages

The first stage is the userbot (for example, one built on Telethon). It connects to your Telegram account and "listens" to the selected chats. As soon as a new message arrives, the bot saves it. If desired, you can also load the old history, but most of the time it's enough to process new incoming messages.

Why a userbot? A regular bot has no access to the chat history: it only sees messages as they are written, in real time. A userbot is essentially you in the form of a program, so it has access to all the messages your account can see.

2. Converting text to vectors (embeddings)

For the computer to "understand the meaning" of messages, the text needs to be transformed into a numerical representation.

This representation is called an embedding: a set of numbers (a vector) that describes the meaning of the words rather than their form.

Example:

"Error during payment" and "Payment not going through" — different sets of words, but their vectors will be close to each other because the meaning is the same.

But "Today the weather was nice" will be far away, because the theme is different.
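In the practical part, the collection is created with Distance.COSINE, so "close" means high cosine similarity. The minimal plain-Python sketch below shows that measure. The three-dimensional vectors are invented for illustration and are not real model output. The e5-small model used later produces 384-dimensional vectors.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, ~0.0 = unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors made up for illustration; a real model would compute them from the text
payment_error = [0.9, 0.1, 0.0]   # "Error during payment"
payment_failed = [0.8, 0.2, 0.1]  # "Payment not going through"
nice_weather = [0.0, 0.1, 0.95]   # "Today the weather was nice"

print(round(cosine_similarity(payment_error, payment_failed), 3))
print(round(cosine_similarity(payment_error, nice_weather), 3))
```

The two payment messages score close to 1, while the weather message scores close to 0.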

There are many embedding models available now, from OpenAI's text-embedding-3-small to fully local ones such as e5-base and mxbai-embed-large.

3. Storing in Qdrant

All the received vectors are stored in the Qdrant vector database. It's specifically designed for this kind of data: it quickly finds the closest vectors among millions of records.

Each message is stored in the database together with metadata that we choose ourselves:

the message id;

the text itself;

the author (can be anonymized);

the date and time.
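Here is a minimal sketch of how such a payload could be assembled, assuming the author should be anonymized. The helper name build_payload, the field names, and the salted SHA-256 hashing are illustrative choices, not part of the article's code.

```python
import hashlib
from datetime import datetime, timezone


def build_payload(message_id: int, text: str, author: str, date: datetime,
                  anonymize: bool = True, salt: str = "change-me") -> dict:
    """Hypothetical helper: metadata stored next to a message vector in Qdrant."""
    if anonymize:
        # A salted hash keeps "same author -> same token" without storing the real name
        author = hashlib.sha256(f"{salt}:{author}".encode()).hexdigest()[:12]
    return {
        "message_id": message_id,
        "text": text,
        "author": author,
        "date": date.isoformat(),
    }


payload = build_payload(
    42, "Red attracts attention better", "user4",
    datetime(2024, 5, 1, 12, 50, tzinfo=timezone.utc),
)
print(payload)
```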

This means that at any moment we can ask "Give me the parts of the chat most similar to this message," and Qdrant will find them in milliseconds.

4. User query

Now imagine that you want to know: "What colors were discussed yesterday?"

Your question is turned into a vector in the same way and sent to Qdrant.

The database searches for the most semantically similar chat fragments and returns them (for example, 5–10 pieces).
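The "most semantically similar fragments" are the stored vectors with the highest similarity to the question vector. The exact, brute-force search below only illustrates that idea on toy data. Qdrant itself uses an approximate HNSW index so it does not have to compare the query with every stored point. The function top_k and the sample ids are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k(query: list[float], points: dict[str, list[float]], k: int = 5) -> list[tuple[str, float]]:
    """Exact nearest-neighbour search: score every stored vector and keep the k best."""
    scored = [(pid, cosine(query, vec)) for pid, vec in points.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy "collection": point id -> vector (values invented for illustration)
stored = {
    "msg-1": [0.9, 0.1, 0.0],
    "msg-2": [0.1, 0.9, 0.1],
    "msg-3": [0.8, 0.3, 0.1],
}
print(top_k([1.0, 0.2, 0.0], stored, k=2))
```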

5. Processing in LLM

Now the large language model (LLM) comes in.

We insert the retrieved fragments into the prompt:

Question: "What colors were discussed yesterday?"

Chat context:

1) [12:30] user1: My favorite color is black

2) [12:35] user2: I’m thinking of repainting the site in blue, it’ll look fresher

3) [12:40] user3: I’m for green, it looks calmer

4) [12:50] user4: Red attracts attention better

...

Formulate the answer briefly and to the point.
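Building such a prompt from the retrieved fragments is plain string formatting. The sketch below assumes each fragment comes back as a (time, author, text) tuple. build_prompt is a hypothetical helper, and its layout simply mirrors the example above.

```python
def build_prompt(question: str, fragments: list[tuple[str, str, str]]) -> str:
    """Assemble the LLM prompt from (time, author, text) tuples returned by the search step."""
    lines = [f'Question: "{question}"', "", "Chat context:"]
    for i, (ts, author, text) in enumerate(fragments, start=1):
        lines.append(f"{i}) [{ts}] {author}: {text}")
    lines += ["", "Formulate the answer briefly and to the point."]
    return "\n".join(lines)


prompt = build_prompt(
    "What colors were discussed yesterday?",
    [("12:30", "user1", "My favorite color is black"),
     ("12:40", "user3", "I'm for green, it looks calmer")],
)
print(prompt)
```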

The LLM sees only this small piece of context, not the entire chat, and formulates the result:

“Yesterday several colors were discussed: black, blue, green, and red.”

6. What we get as a result

No endless scrolling.

The answer arrives in a human-readable form.

If desired, you can attach links to the original messages so the context can be checked, but for now this is enough.

This way, the LLM is never overwhelmed by thousands of messages: it works only with the sample that Qdrant has already selected.
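If you do want links to the original messages, Telegram's public link formats are enough:

- https://t.me/<username>/<message_id> for public chats;
- https://t.me/c/<id>/<message_id> for private supergroups and channels, where <id> is the chat id without the -100 prefix that Telethon uses.

The sketch below assumes chat_id and message_id are stored in the payload. message_link is a hypothetical helper. Basic groups and private dialogs have no such links.

```python
from typing import Optional


def message_link(chat_id: int, message_id: int, username: Optional[str] = None) -> str:
    """Hypothetical helper: build a t.me link to a message.

    Public chats: https://t.me/<username>/<message_id>.
    Private supergroups/channels: https://t.me/c/<id>/<message_id>, where <id> is
    the chat id with Telethon's "-100" prefix removed.
    """
    if username:
        return f"https://t.me/{username}/{message_id}"
    marked = str(chat_id)
    if not marked.startswith("-100"):
        raise ValueError("t.me/c/ links only work for supergroups and channels")
    return f"https://t.me/c/{marked[4:]}/{message_id}"


print(message_link(-1001234567890, 42))
```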

Additionally: can you get banned for this?

Technically, Telegram does not approve of userbots, since they access an account through an unofficial client. In practice, however, the risk is low if you only read history and store messages. Telethon and Pyrogram are widely used for exactly this. Bans are more often issued for spam or aggressive activity. For peace of mind, you can create a separate account for the userbot so you don't risk your main one.

Practical part: building an MVP of a Telegram parser bot

To avoid getting bogged down in fine-tuning, we’ll build a minimal working example:

the userbot reads messages from the chat;

turns them into embeddings;

saves them in Qdrant;

then, on request, retrieves the relevant pieces and passes them to the LLM.

The application consists of two independent modules, each with its own job. The first, bot.py, collects all new incoming messages. The second, search.py, searches through them.

1. Dependencies

In requirements.txt, add:

```
telethon==1.36.0
qdrant-client==1.12.1
httpx==0.27.2
python-dotenv==1.0.1
sentence-transformers==5.1.0
```

2. Environment variables

Store all important and secret data, such as the Telegram API credentials, the LLM API key, and the Qdrant password, in environment variables. In Amvera, where we will deploy our service, this is the “Variables” tab.

For this project we use the following variables:

```
API_ID=123456
API_HASH=your_api_hash
# The Telethon session file is saved here
SESSION_PATH=/data/tg.session
QDRANT_URL=http://localhost:6333
QDRANT_COLLECTION=chat_messages
LLM_BASE_URL=https://kong-proxy.yc.amvera.ru/api/v1/models/llama
LLM_API_KEY=key
LLM_MODEL=llama70b
PYTHONUNBUFFERED=1
```

You can track the up-to-date API methods in the Swagger documentation.
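If API_ID is not set, int(os.getenv("API_ID")) fails with an unhelpful TypeError. The small sketch below reads the same variables, with the same defaults as bot.py, and fails early with a clear message. Settings and load_settings are hypothetical names, and only the Telegram and Qdrant settings are shown.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    api_id: int
    api_hash: str
    qdrant_url: str
    collection: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read the required variables and raise a readable error if any are missing."""
    missing = [name for name in ("API_ID", "API_HASH") if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return Settings(
        api_id=int(env["API_ID"]),
        api_hash=env["API_HASH"],
        qdrant_url=env.get("QDRANT_URL", "http://localhost:6333"),
        collection=env.get("QDRANT_COLLECTION", "chat_messages"),
    )
```

Passing a plain dict instead of os.environ also makes the function easy to test.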

2.1. How to get API_ID and API_HASH for a userbot

For a userbot to connect to your Telegram account, you need special keys:

API_ID

API_HASH

You can get them on the official Telegram developer website:

Log in to my.telegram.org with your Telegram account.

Select API development tools.

Enter the application name (anything, for example chat-search) and a short name, and fill in the rest of the form.

After saving, your API_ID and API_HASH will appear.

3. Userbot on Telethon

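```python
import asyncio
import os
import uuid

from dotenv import load_dotenv
from telethon import TelegramClient, events
from qdrant_client import QdrantClient
from qdrant_client.models import VectorParams, Distance, PointStruct
from sentence_transformers import SentenceTransformer

load_dotenv()

API_ID = int(os.getenv("API_ID"))
API_HASH = os.getenv("API_HASH")
SESSION_PATH = os.getenv("SESSION_PATH", "session")
QDRANT_URL = os.getenv("QDRANT_URL", "http://localhost:6333")
COLLECTION = os.getenv("QDRANT_COLLECTION", "chat_messages")

client = TelegramClient(SESSION_PATH, API_ID, API_HASH)
qdrant = QdrantClient(url=QDRANT_URL)
# intfloat/e5-small produces 384-dimensional vectors
embedder = SentenceTransformer("intfloat/e5-small")

# Create the collection once; the vector size must match the embedding model
if not qdrant.collection_exists(COLLECTION):
    qdrant.create_collection(
        collection_name=COLLECTION,
        vectors_config=VectorParams(size=384, distance=Distance.COSINE),
    )


def point_id(chat_id: int, message_id: int) -> str:
    # Message ids are only unique within a chat, so derive a stable UUID
    # from (chat_id, message_id) to avoid overwriting points from other chats
    return str(uuid.uuid5(uuid.NAMESPACE_URL, f"tg:{chat_id}:{message_id}"))


async def embed_text(text: str) -> list[float]:
    # e5 models expect the "passage: " prefix for stored texts;
    # encoding is CPU-bound, so run it in a thread to keep the event loop responsive
    vector = await asyncio.to_thread(
        embedder.encode, f"passage: {text}", normalize_embeddings=True
    )
    return vector.tolist()


@client.on(events.NewMessage)
async def handler(event):
    # It's recommended to add a condition to check the chat,
    # e.g. events.NewMessage(chats=[...]). In this implementation all messages are saved
    text = event.message.message
    if not text:
        return  # skip media without a caption, service messages, etc.

    emb = await embed_text(text)
    point = PointStruct(
        id=point_id(event.chat_id, event.message.id),
        vector=emb,
        payload={
            "text": text,
            "chat_id": event.chat_id,
            "message_id": event.message.id,
            "sender_id": event.sender_id,
            "date": event.message.date.isoformat(),
        },
    )
    qdrant.upsert(collection_name=COLLECTION, points=[point])
    print(f"Saved message: {text[:50]}...")


async def main():
    # On the first run, Telethon asks for your phone number and login code
    await client.start()
    print("Userbot started")
    await client.run_until_disconnected()


if __name__ == "__main__":
    asyncio.run(main())
```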
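The first step mentions that old history can also be loaded. The minimal sketch below assumes a connected Telethon TelegramClient, whose iter_messages method walks a chat's history from the newest message backwards. backfill and its save callback are hypothetical names. save could, for example, embed and upsert a message the same way the handler in bot.py does.

```python
from typing import Any, Awaitable, Callable


async def backfill(
    client: Any,
    chat: Any,
    save: Callable[[Any], Awaitable[None]],
    limit: int = 1000,
) -> int:
    """Scan up to `limit` past messages of `chat`, pass those with text to `save`,
    and return how many were saved.

    `client` is meant to be a connected telethon.TelegramClient; it is typed as Any
    so the logic can also be exercised with a stand-in object.
    """
    saved = 0
    # iter_messages yields messages from the newest one backwards
    async for message in client.iter_messages(chat, limit=limit):
        text = getattr(message, "message", None)
        if not text:  # media without a caption, service messages, etc.
            continue
        await save(message)
        saved += 1
    return saved
```

Inside main(), after client.start(), this could be called as await backfill(client, chat, save) for each chat you want to index.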

4. Semantic search + LLM answer

In a separate file search.py:

```python
import asyncio
import os

import httpx
from dotenv import load_dotenv
from qdrant_client import QdrantClient
from sentence_transformers import SentenceTransformer

load_dotenv()

QDRANT_URL = os.getenv("QDRANT_URL", "http://localhost:6333")
QDRANT_COLLECTION = os.getenv("QDRANT_COLLECTION", "chat_messages")
LLM_BASE_URL = os.getenv("LLM_BASE_URL")  # e.g.: https://kong-proxy.yc.amvera.ru/api/v1/models/llama
LLM_API_KEY = os.getenv("LLM_API_KEY")
LLM_MODEL = os.getenv("LLM_MODEL", "llama8b")

qdrant = QdrantClient(url=QDRANT_URL)
# Must be the same model as in bot.py, otherwise the vectors are not comparable
embedder = SentenceTransformer("intfloat/e5-small")


def embed_text(text: str) -> list[float]:
    # e5 models expect the "query: " prefix for search queries
    return embedder.encode(f"query: {text}", normalize_embeddings=True).tolist()


async def llm_answer(question: str, context: str) -> str:
    # Request/response format of the LLM API used in this article;
    # check the provider's Swagger docs for the current schema
    headers = {
        "X-Auth-Token": f"Bearer {LLM_API_KEY}",
        "Content-Type": "application/json",
    }
    payload = {
        "model": LLM_MODEL,
        "messages": [
            {"role": "system", "text": "You help find important information in the chat. Respond briefly and to the point. In Russian."},
            {"role": "user", "text": f"Question: {question}\nContext:\n{context}"},
        ],
    }
    async with httpx.AsyncClient(timeout=120.0) as http:
        r = await http.post(LLM_BASE_URL, headers=headers, json=payload)
        r.raise_for_status()
        data = r.json()
    return data["choices"][0]["message"]["text"]


async def search(question: str) -> None:
    vec = embed_text(question)
    # query_points is the universal query API in qdrant-client 1.10+
    results = qdrant.query_points(
        collection_name=QDRANT_COLLECTION, query=vec, limit=5
    ).points
    context = "\n".join(f"{i}) {p.payload['text']}" for i, p in enumerate(results, start=1))
    answer = await llm_answer(question, context)
    print("Question:", question)
    print("Answer:", answer)


if __name__ == "__main__":
    q = input("Enter your question: ")
    asyncio.run(search(q))
```

Example of input and output data:

Question: "What colors were discussed yesterday?"

Context from chat:

1) [12:30] user1: My favorite color is black

2) [12:35] user2: I'm thinking of repainting the site in blue, it will feel fresher

3) [12:40] user3: I vote for green, it looks calmer

4) [12:50] user4: Red attracts more attention

...

Formulate the answer briefly and to the point.

5. How it works together

Run python bot.py: the userbot listens to the chat and saves all new messages to Qdrant.

Run python search.py: enter a question and get an answer from the LLM.

You will also need to deploy the database (Qdrant in our case) and connect the LLM via its API.

Example:

Question: What colors were discussed yesterday?

Answer: Yesterday several colors were discussed: black, blue, green and red.

This is just an example of parsing Telegram channels, groups, and chats via a bot

We showed the simplest pipeline that can be built with Python, Qdrant, and LLaMA. This code works, but it is more of an educational skeleton than a production-ready application.

Why:

each project has a different scale (from a small group to a corporate chat with hundreds of thousands of messages);

different privacy requirements (sometimes you can store the entire history, sometimes you need to anonymize authors or remove personal data);

different embedding models and LLMs produce different results (some work better for short messages, others for long dialogues).

So don’t treat this example as a finished solution. It’s much more useful to take this template and adapt it to your own case.

Relevant articles

Your own ChatGPT on documents: building RAG from scratch.

All about Qdrant. Overview of the vector database.

Universal website parsing in Python: requests vs headless, tokens, cookies, proxies, and IP rotation.
