- AI

- A

Evaluation of LLM: metrics, frameworks, and best practices

Jensen Huang in his speech at the "Data+AI" summit said: "Generative AI is everywhere, in every industry. If there is no generative AI in your industry yet, then you just haven't been paying attention to it."

However, widespread adoption does not mean that these models are flawless. In real business cases, models often fail to achieve their goals and need refinement. This is where LLM evaluations come in: they help ensure that models are reliable, accurate, and meet business preferences.

In this article, we will delve into why LLM evaluation is critical, and look at metrics, frameworks, tools, and challenges of LLM evaluation. We will also share some reliable strategies we have developed while working with our clients, as well as best practices.

What is LLM evaluation?

LLM evaluation is the process of testing and measuring how well large language models perform in real-world situations. When testing these models, we observe how well they understand and answer questions, how smoothly and clearly they generate text, and whether their answers make sense in context. This step is very important because it helps us identify any issues and improve the model, ensuring that it can effectively and reliably handle tasks.

Why do you need to evaluate LLM?

Everything is simple: to ensure that the model meets the task and its requirements. LLM evaluation guarantees that it understands and responds accurately, correctly processes various types of information, and communicates in a safe, understandable, and effective manner. LLM evaluation allows us to fine-tune the model based on real feedback, improving its performance and reliability. By conducting thorough evaluations, we ensure that LLM can fully meet the needs of its users, whether it is answering questions, providing recommendations, or creating content.

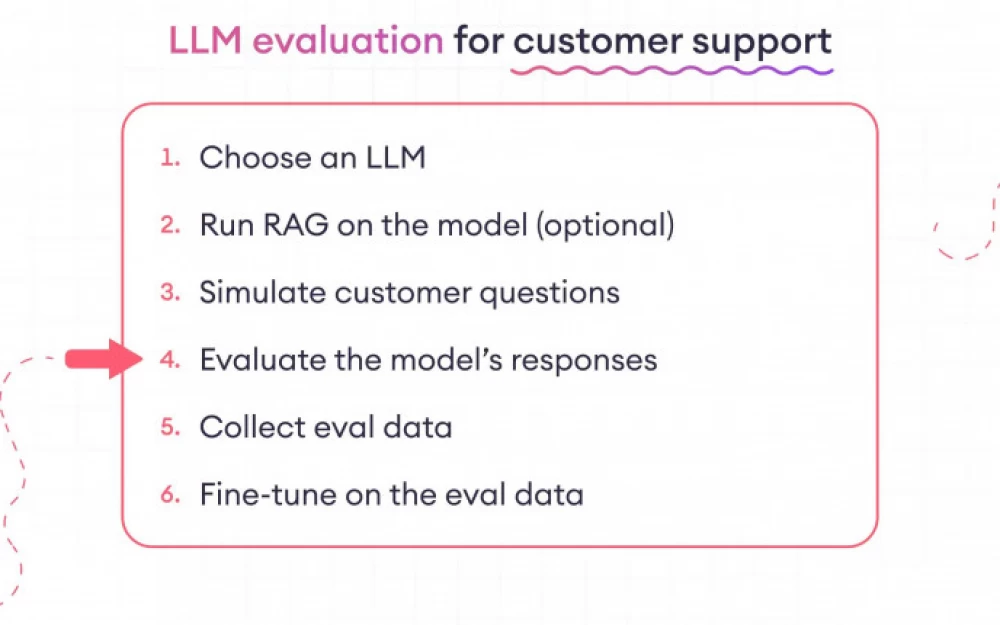

Suppose you use LLM in the customer support service of an online store. Here's how you can evaluate it:

You would start by configuring the LLM to respond to common customer queries, such as order status, product information, and return policy. Then you would run simulations using various real customer questions to see how the LLM handles them. For example, you might ask: "What is the return policy for an opened item?" or "Can I change the shipping address after placing an order?"

During the evaluation, you would check whether the LLM's responses are accurate, understandable, and helpful. Does it fully understand the questions? Does it provide complete and reliable information? If a customer asks something complex or ambiguous, does the LLM ask clarifying questions or make hasty conclusions? Does it give toxic or harmful answers?

As you collect data from these simulations, you also create a valuable dataset. You can then use this data to fine-tune LLM and RLHF to improve model performance.

This cycle of continuous testing, data collection, and improvements helps the model perform better. It ensures that the model can truly assist real customers, enhancing their experience and making work more efficient.

The Importance of Individual LLM Assessments

Individual assessments are key because they ensure that the models truly meet the needs of customers. You start by identifying the unique challenges and goals of the industry. Then you create test scenarios that reflect the real tasks the model will face, whether it's answering customer support questions, analyzing data, or writing content that elicits the desired response.

You also need to ensure that your models can responsibly handle sensitive topics such as toxic and harmful content. This is crucial for ensuring safe and positive interactions.

This approach not only tests whether the model works well overall, but also whether it works well for its specific task in real business conditions. This way, you ensure that your models truly help customers achieve their goals.

LLM Model Assessments and LLM System Assessments

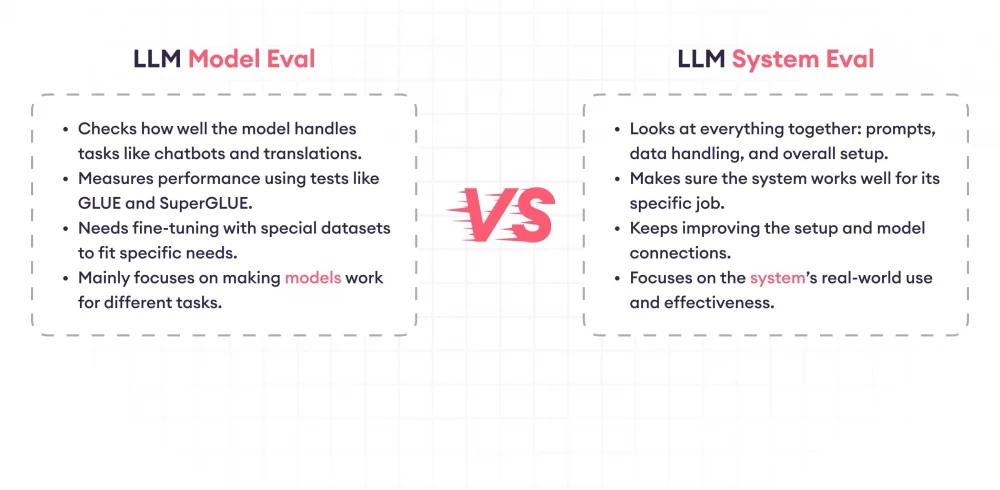

When we talk about evaluating large language models, it's important to understand that there is a difference between evaluating an individual LLM and testing the performance of an entire system that uses LLM.

Modern LLMs handle various tasks such as chatbots, named entity recognition (NER), text generation, summarization, question answering, sentiment analysis, translation, and more. These models are often tested based on standard benchmarks such as GLUE, SuperGLUE, HellaSwag, TruthfulQA, and MMLU, using well-known metrics.

However, these LLMs may not fully meet your specific needs right after installation. Sometimes we need to fine-tune the LLM with a unique dataset created specifically for our particular application. Evaluating these adjusted models—or models that use methods like Retrieval-Augmented Generation (RAG)—usually means comparing them to a known accurate dataset to see how they perform.

But remember: ensuring that the LLM works properly depends not only on the model itself but also on how we set everything up. This includes choosing the right prompt templates, setting up effective data retrieval systems, and, if necessary, adjusting the model architecture. Selecting the right components and evaluating the entire system can be a challenging task, but it is crucial to ensure that the LLM delivers the desired results.

LLM Evaluation Metrics

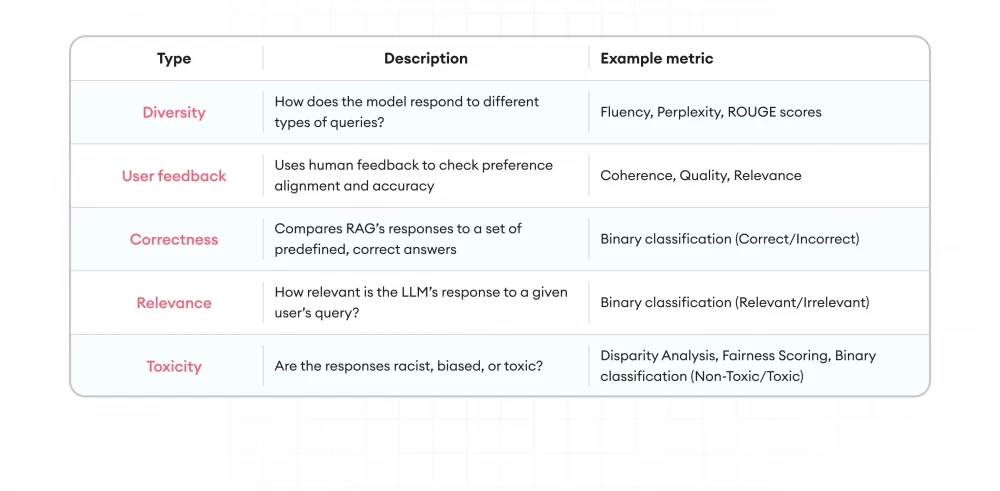

There are several LLM evaluation metrics that practitioners use to measure how well the model performs.

Dilemma

The dilemma measures how well the model predicts a text sample. A lower score means better performance. It calculates the exponential dependence of the average logarithmic likelihood of the sample:

Perplexity=exp(−1N∑logP(xi))

Perplexity=exp(−N1∑logP(xi))

where NN is the number of words, and P(xi)P(xi) is the probability assigned by the model to the i-th word.

Despite its usefulness, the dilemma does not tell us about the quality or coherence of the text, and it can be influenced by how the text is tokenized.

BLEU Score

The BLEU score was originally used for machine translation and is now also used for evaluating text generation. It compares the model's output with reference texts by considering n-gram overlap.

Scores range from 0 to 1, with higher scores indicating better alignment. However, BLEU can be misleading when evaluating creative or diverse texts.

ROUGE

ROUGE is great for evaluating summaries. It measures how much the content generated by the model overlaps with reference summaries using n-grams, sequences, and word pairs.

F1 Score

The F1 score is used for classification and question-answering tasks. It balances precision (relevance of the model's answers) and recall (completeness of relevant answers):

F1=2×(precision×recall) precision+recall

F1= precision+recall2×(precision×recall)

It ranges from 0 to 1, where 1 indicates perfect accuracy.

METEOR

METEOR takes into account not only exact matches but also synonyms and paraphrases, aiming to better align with human judgment.

BERTScore

BERTScore evaluates texts by comparing the similarity of contextual embeddings from models like BERT, paying more attention to meaning than exact word matching.

Levenshtein distance, or edit distance, measures the minimum number of single-character edits (insertions, deletions, or substitutions) required to change one string into another. This metric is valuable for:

Evaluating text similarity in generation tasks.

Assessing spelling correction and OCR post-processing.

Supplementing other metrics in machine translation evaluation.

The normalized version (from 0 to 1) allows for comparing texts of different lengths. Despite its simplicity and intuitiveness, it does not account for semantic similarity, making it most effective when used alongside other evaluation metrics.

Human Evaluation

Despite the rise of automated metrics, human evaluation remains important. Its methods include using Likert scales to assess fluency and relevance, A/B testing of different model outputs, and expert reviews for specialized domains.

Task-Specific Metrics

For tasks like dialogue systems, metrics may include engagement levels and task completion rates. For code generation, it is necessary to see how often the code compiles or passes tests.

Robustness and Fairness

It is important to check how models respond to unexpected inputs and assess the presence of biased or harmful outputs.

Efficiency Metrics

As models evolve, the importance of measuring their efficiency in terms of speed, memory usage, and energy consumption grows.

AI Evaluates AI

As AI becomes more advanced, we begin to use one AI to evaluate another. This method is fast and allows for processing huge volumes of data without fatigue. Additionally, AI can identify complex patterns that humans might overlook, offering a detailed performance analysis.

However, this evaluation is not perfect. AI evaluators can be biased, sometimes favoring certain responses or missing subtle context that a human might catch. There is also the risk of an "echo chamber," where AI evaluators favor responses similar to those they are programmed to recognize, potentially overlooking unique or creative answers.

Another issue is that AI often cannot explain its evaluations well. It can rate responses but may not offer the in-depth feedback that a human could provide, which can be like receiving a grade without an explanation of why.

Many researchers believe that a combination of AI and human evaluation works best. AI processes most of the data, while humans add the necessary context and understanding.

Top 10 Frameworks and Tools for LLM Evaluation

Practical frameworks and tools can be found online that can be used to create an evaluation dataset.

SuperAnnotate

SuperAnnotate helps companies create their datasets for evaluation and fine-tuning to improve model performance. Its fully customizable editor allows you to create datasets for any use case in any industry.

Amazon Bedrock

Amazon's entry into the LLM market - Amazon Bedrock - also includes evaluation capabilities. It will be especially useful if you are deploying models on AWS. SuperAnnotate integrates with Bedrock, allowing you to create data pipelines using the SuperAnnotate editor and fine-tune models from Bedrock.

Nvidia Nemo

Nvidia Nemo is a cloud microservice designed for automatic testing of both modern foundation and custom models. It evaluates them using various benchmarks, including academic sources, customer applications, or using LLM as judges.

Azure AI Studio

Azure AI Studio from Microsoft provides a full suite of tools for evaluating LLM, including built-in metrics and customizable evaluation flows. It will be especially useful if you are already working in the Azure ecosystem.

Prompt Flow

Prompt Flow is another Microsoft tool that allows you to create and evaluate complex LLM workflows. It is great for testing multi-step processes and prompt iteration.

Weights & biases

W&B, known for its experiment tracking capabilities, has also started evaluating LLM. It is a good choice if you want to support model training and evaluation in one place.

LangSmith

LangSmith, developed by Anthropic, offers a range of evaluation tools specifically designed for language models. It is particularly strong in areas such as bias detection and safety testing.

TruLens

TruLens is an open-source framework that focuses on transparency and interpretability of LLM evaluation. It is a good choice if you need to explain your model's decision-making process.

Vertex AI Studio

Vertex AI Studio from Google also includes evaluation tools for LLM. It is well integrated with other Google Cloud services, making it a natural choice for teams already using GCP.

DeepEval

Deep Eval is an open-source library that offers a wide range of evaluation metrics and is designed for easy integration into existing machine learning pipelines.

Parea AI

Parea AI focuses on providing detailed analytics and understanding of LLM performance. It is particularly strong in areas such as conversation analysis and user feedback integration.

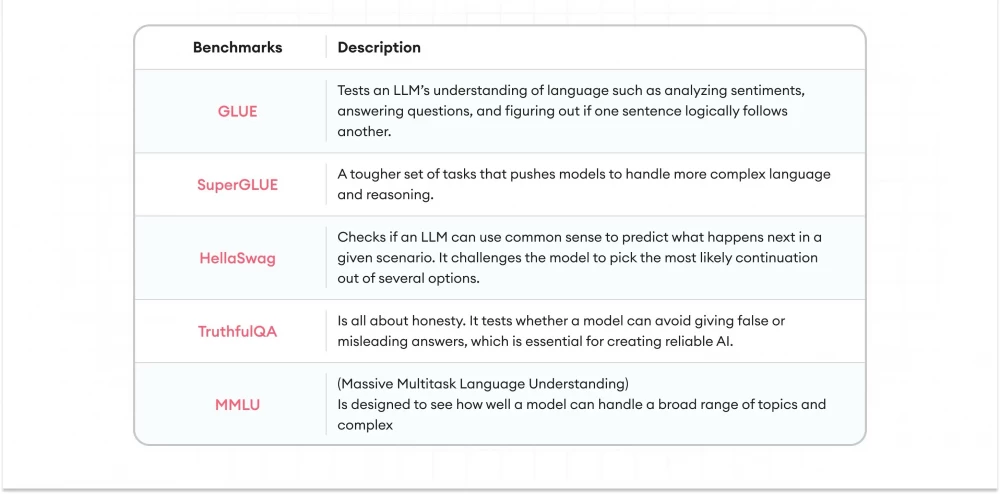

LLM Model Evaluation Benchmarks

To test how language models handle various tasks, researchers and developers use a set of standard tests. Below are some of the main benchmarks they use:

GLUE (General Language Understanding Evaluation)

GLUE tests the language understanding of LLM with nine different tasks, such as sentiment analysis, question answering, and determining whether one sentence logically follows another. It provides a single score that summarizes the model's performance across all these tasks, making it easier to compare different models.

SuperGLUE

As models began to surpass human results on GLUE, the SuperGLUE benchmark was introduced. It is a more challenging set of tasks that forces models to handle more complex language and reasoning.

HellaSwag

HellaSwag tests whether LLM can use common sense to predict what will happen next in a given scenario. It challenges the model to choose the most likely continuation from several options.

TruthfulQA

TruthfulQA is about honesty. This benchmark tests whether the model can avoid false or misleading answers, which is crucial for creating reliable AI.

MMLU (Massive Multitask Language Understanding)

MMLU is extensive and covers everything from science and math to art. It contains over 15,000 questions across 57 different tasks. It is designed to assess how well the model can handle a wide range of topics and complex reasoning.

Other benchmarks

There are also other tests, such as:

ARC (AI2 Reasoning Challenge): focuses on scientific reasoning.

BIG-bench: a collaborative project with a variety of different tasks.

LAMBADA: tests how well models can guess the last word in a paragraph.

SQuAD (Stanford Question Answering Dataset): measures reading comprehension and the ability to answer questions.

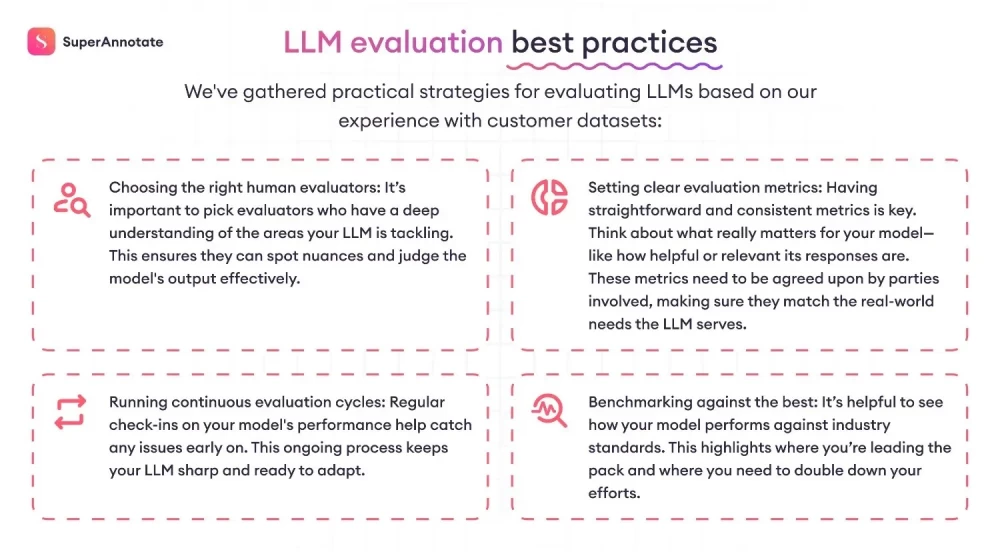

Best Practices for Evaluating LLM

Julia MacDonald shared her thoughts on the practical side of evaluating LLM: "Creating an evaluation framework that is thorough and generalizable, yet simple and free from contradictions, is key to the success of any evaluation project."

Her perspective highlights the importance of creating a solid foundation for evaluation. Based on our experience working with client datasets, we have developed several practical strategies:

Choosing the right human evaluators: it is important to select evaluators who have a deep understanding of the areas in which your LLM is involved. This ensures that they can notice nuances and effectively evaluate the model's outputs.

Setting clear evaluation metrics: having simple and consistent metrics is a key factor. Consider what really matters for your model, such as how useful or relevant its responses are. These metrics should be agreed upon by stakeholders, ensuring they align with the real needs that the LLM serves.

Conducting continuous evaluation cycles: regular performance checks of your model help identify any issues at an early stage. This continuous process allows your LLM to stay in shape and be ready to adapt.

Benchmarking against the best: it is extremely useful to know how your model compares to industry standards, which will help you understand where you are leading and where you need to double your efforts. Choosing the right people to help create the evaluation dataset is key; we will discuss this in the next section.

Challenges of LLM Evaluation

Evaluating large language models can be challenging for several reasons.

Training data overlap

You cannot be sure that the model has not seen the test data before. When training LLMs on large datasets, there is always a risk that some test questions may have been part of their training (overfitting). This can make the model appear better than it actually is.

Metrics are too general

We often do not have good ways to measure LLM performance across different demographic groups, cultures, and languages. They also mostly focus on accuracy and relevance and ignore other important factors such as novelty or diversity. This makes it difficult to ensure fairness and inclusiveness of models in terms of their capabilities.

Adversarial attacks

LLM can be deceived by carefully crafted input designed to make it fail or behave unpredictably. Identifying and defending against these adversarial attacks using methods like "red teaming" is a growing concern in the field of evaluation.

Benchmarks are not designed for real-world situations

For many tasks, we do not have enough high-quality, human-created reference data to compare LLM results. This limits our ability to accurately assess performance in certain areas.

Inconsistent performance

LLMs can be both successful and unsuccessful. One minute they write like professionals, the next they make silly mistakes. These performance ups and downs make it difficult to judge how good they really are overall.

Too good to measure

Sometimes LLMs create text that is as good as or better than what humans write. When this happens, our usual ways of evaluating them fall short. How can you assess something that is already top-notch?

Missing the mark

Even when an LLM provides factually correct information, it can completely miss the necessary context or tone. Imagine asking for advice and getting a response that is technically correct but utterly useless for your situation.

Narrow focus of testing

Many researchers are keen on refining the model itself and forget about improving the ways to test it. This can lead to the use of overly simple metrics that do not tell the whole story of what LLM can actually do.

Problems of Human Judgment

Involving people in evaluating LLM is valuable, but it has its own problems. This process is subjective, can be biased, and conducting it on a large scale is expensive. Moreover, different people can have very different opinions on the same result.

Blind Spots of AI Evaluator

When we use other AI models to evaluate LLM, we may encounter some strange biases. These biases can distort the results in a predictable way, making our assessments less reliable. Automated evaluations are not as objective as we think. We need to be aware of the "blind spots" to get an objective picture of how LLM actually works.

In Conclusion

In short, the evaluation of large language models is necessary if we want to fully understand and improve their capabilities. This understanding helps us not only solve current problems but also develop more reliable and efficient AI applications. As we move forward, focusing on improving evaluation methods will play a crucial role in ensuring the accurate and ethical operation of AI tools in various conditions. These ongoing efforts will help pave the way for AI that truly benefits society, making each stage of evaluation a significant step towards a future where AI and humans collaborate seamlessly.

Write comment