Does Vision Llama understand impressionists?

Hello everyone, my name is Arseniy, I am a Data Scientist at Raft, and today I will tell you about Visual Language Models.

Large language models have already become part of our lives: we use them to simplify everyday routine and to solve business problems. Recently, a new generation of vision transformer models has been released that significantly simplifies image analysis, regardless of the field the images come from.

The September release of Llama-3.2-11b was particularly notable, not only because it is the first vision model from Llama, but also because it was accompanied by a whole family of models, including smaller ones with 1B and 3B parameters. And as you know, smaller means more usable.

This month, many cool reviews of Llama-3.2-11b have already appeared, for example the Amazon blog and another no less detailed review. From these reviews, the following can be concluded:

Llama is capable of analyzing economic reports, X-rays, complex compositions, graphs, and plant diseases, as well as recognizing text. In general, the model seems incredibly cool.

However, I decided to check how well the model can perceive and evaluate art.

To make it more interesting, I chose a few more Vision Transformer models for comparison: Qwen2-VL-7B, LLaVa-NeXT (or LLaVa-1.6) and LLaVa-1.5.

About the data

I used the WikiArt dataset. It contains 81,444 artworks by various artists from WikiArt.org. The dataset includes class labels for each image:

artist — 129 artist classes, including the "Unknown Artist" class;

genre — 11 genre classes, including the "Unknown Genre" class;

style — 27 style classes.

On WikiArt.org, genres and styles are classified according to the depicted themes and objects:

Most of the paintings in the dataset are in the styles of Impressionism and Realism – 29.5% and 18.9% of all records, respectively, and the predominant genres are landscape and portrait – 23.2% and 15.1% of records. The dataset is quite large — about 35 GB of data, so I chose only a small part for the study: 1130 records.

To simplify the task, the models predict only the genre of the painting, with the target expressed as a number.

Experiments

Now let's move on to the experiments. I used various prompting techniques: zero-shot, Chain-of-Thought (CoT), and system prompts. For a more accurate solution, I could have tried prompt tuning, a method in which the most significant prompt tokens are learned during fine-tuning, but my task was to compare the models “out of the box”.

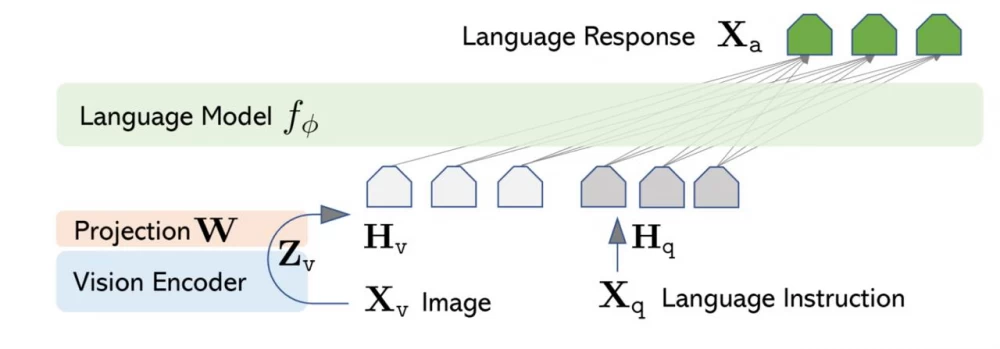

LLaVa models

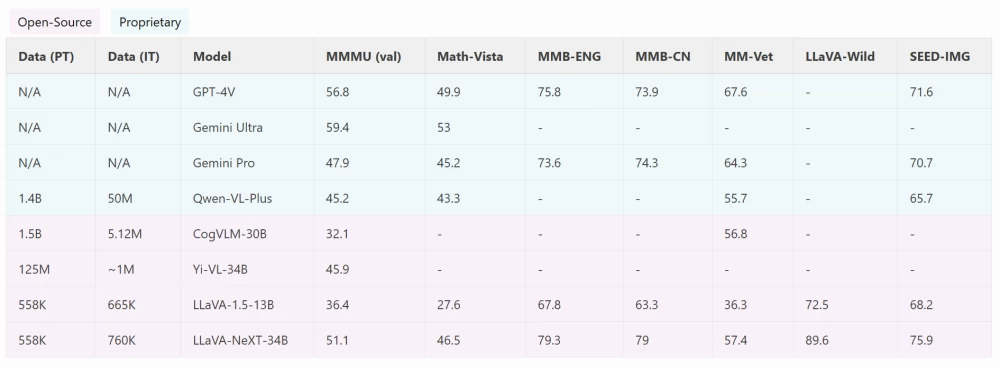

LLaVa-1.5 fits even in Colab on a T4 GPU, taking about 14 GB with weights in torch.float16. The newer LLaVa-NeXT-7b (LLaVa-1.6) in torch.float16 takes about 16 GB on an RTX 4090.

The new LLaVa model has significant technical differences. LLaVa-1.5 uses the Vicuna-7B language model together with the CLIP ViT-L/14 visual encoder, while LLaVa-1.6 brings several improvements. The key one is the new language model, Mistral-7B, which gives the model better world knowledge and logical reasoning. The input image resolution was also increased 4 times, which lets the model capture more visual detail. Overall, the model retains the minimalist design and data processing efficiency of LLaVa-1.5. The larger LLaVa-NeXT-34B outperforms Gemini Pro and Qwen-VL-Plus on MMB-ENG and SEED-IMG.
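The 14 GB figure is close to what a back-of-the-envelope estimate gives, since a 16-bit format stores each parameter in 2 bytes. The sketch below is only that arithmetic. It assumes the nominal parameter counts from the model names and ignores the vision encoder, activations, and the KV cache, which is one reason measured usage comes out higher. The helper name `weights_gb` is hypothetical.

```python
def weights_gb(params_billion: float, bytes_per_param: int = 2) -> float:
    """Approximate size of the model weights in GB (10^9 bytes).

    bytes_per_param=2 corresponds to 16-bit formats (float16 / bfloat16).
    The factor of 10^9 parameters cancels against 10^9 bytes per GB.
    """
    return params_billion * bytes_per_param


# Nominal sizes taken from the model names (an approximation, not a measurement).
for name, size_b in [("LLaVa-1.5-7b", 7), ("LLaVa-NeXT-7b", 7)]:
    print(f"{name}: ~{weights_gb(size_b):.0f} GB of weights in 16-bit precision")
```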

For classifying paintings by genre, I wrote a prompt that passes the artist and the style of the painting to the model. It also lists the 11 genres in the format "number: genre" (variable genres_str). Example: 0: Portrait, 1: Landscape, 2: Still life,...

Prompt:

"There are 11 possible genres: {genres_str}. What is the genre of painting by {artist} in style {style}? Choose from the given list. If you do not know the genre exactly, choose '10: Unknown Genre'. Say only the number of genre, do not output anything else."

This instruction gave the models a clear structure, setting specific parameters (author, style) and requiring a strict response in the genre number format. This approach allowed the model not only to analyze visual data but also to rely on its initial knowledge of artists and styles.
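A minimal sketch of how such a prompt could be assembled. The template text is the prompt quoted above; the helper names and the sample genre mapping are hypothetical, and the mapping is deliberately incomplete because the article shows only an illustrative fragment of the label list.

```python
from typing import Mapping

PROMPT_TEMPLATE = (
    "There are 11 possible genres: {genres_str}. "
    "What is the genre of painting by {artist} in style {style}? "
    "Choose from the given list. If you do not know the genre exactly, "
    "choose '10: Unknown Genre'. "
    "Say only the number of genre, do not output anything else."
)


def build_genres_str(genres: Mapping[int, str]) -> str:
    """Render the label mapping as '0: Portrait, 1: Landscape, ...'."""
    return ", ".join(f"{idx}: {name}" for idx, name in sorted(genres.items()))


def build_prompt(genres: Mapping[int, str], artist: str, style: str) -> str:
    """Fill the template with the genre list and the painting metadata."""
    return PROMPT_TEMPLATE.format(
        genres_str=build_genres_str(genres), artist=artist, style=style
    )


# Placeholder mapping for illustration only; the real run would pass all 11 labels.
sample_genres = {0: "Portrait", 1: "Landscape", 2: "Still life", 10: "Unknown Genre"}
print(build_prompt(sample_genres, artist="Claude Monet", style="Impressionism"))
```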

As a result, for llava-1.5-7b the accuracy was very low – 28.1%, but for the new version the figures were comparatively high – 50.3%.

Additionally, I modified the prompt to make the models perform a "Chain-of-Thought". With this prompting technique, the model analyzes the picture "out loud", i.e., step by step explains its thoughts before making a final decision. However, this did not increase accuracy — the results remained at the same level, and I did not continue to reinvent the wheel.

Llama-3.2-11B

What about the recently released Llama? It fit easily on the RTX 4090 with weights in torch.bfloat16, taking up about 21 GB in total. I used the same prompt with the artist, the style, and the instruction to choose a genre. Inference is quite fast: 462 tokens per second. Then I decided to add system prompts to push the model toward more expert behavior.

System prompts:

"You are an art history and painting styles expert."

"You are an art expert. You know all artwork genres, styles, and artists and carefully adjust your knowledge thinking step by step."

"You are an expert in art history and painting styles. You are engaged in a discussion with a user who is asking for your expert opinion on the genre classification of famous paintings."

However, these system prompts did not improve the model's analysis. Accuracy fluctuated in the range of 45–49%. Perhaps the model was overstrained or started to "think", which hindered its performance.
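For reference, a sketch of how the system-prompt variants could be paired with the user prompt. It assumes the common role/content chat-message convention; how the image is attached depends on the inference library and is not shown. The name `build_messages` is hypothetical.

```python
from typing import Dict, List

SYSTEM_PROMPTS = [
    "You are an art history and painting styles expert.",
    "You are an art expert. You know all artwork genres, styles, and artists "
    "and carefully adjust your knowledge thinking step by step.",
    "You are an expert in art history and painting styles. You are engaged in "
    "a discussion with a user who is asking for your expert opinion on the "
    "genre classification of famous paintings.",
]


def build_messages(system_prompt: str, user_prompt: str) -> List[Dict[str, str]]:
    """Return a two-message conversation: system instruction, then the question."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


# One conversation per system-prompt variant, so each can be evaluated separately.
user_prompt = "What is the genre of this painting?"  # placeholder question
variants = [build_messages(sp, user_prompt) for sp in SYSTEM_PROMPTS]
print(len(variants), "prompt variants prepared")
```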

Then I decided to encourage the model by offering it a tip, and slightly rephrased the prompt:

Prompt v2.0:

"The task is to classify an image into one of the following 11 genres: {genres_str}.\nYou are provided with the name of the artist: and the painting style of the image.\nArtist: {artist}\nStyle: {style}\nUse all your knowledge and given information to determine the correct genre. Choose the genre from the list by providing only the corresponding number. Say ONLY the number of the genre and do not say anything else. THIS IS VEY IMPORTANT FOR MY CAREER I WILL TIP YOU 1000000000$ for the correct answer."

It is worth noting that Llama 3.2 follows instructions much more obediently and is easier to prompt. LLaVa models sometimes produced invalid outputs, replying with text and reasoning instead of the answer number; Llama 3.2 did not have this issue.
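A strict parser makes this difference measurable. The sketch below accepts a reply only if it is a bare genre number and counts everything else as a wrong answer. That scoring rule is an assumption, since the article does not say how malformed outputs were handled, and the function names are hypothetical.

```python
import re
from typing import Optional, Sequence


def parse_genre(output: str, n_genres: int = 11) -> Optional[int]:
    """Return the genre number if the reply is just a valid number, else None."""
    match = re.fullmatch(r"\s*(\d{1,2})\s*\.?\s*", output)
    if match is None:
        return None  # free text or reasoning instead of a number
    value = int(match.group(1))
    return value if 0 <= value < n_genres else None


def accuracy(outputs: Sequence[str], targets: Sequence[int]) -> float:
    """Share of replies whose parsed number equals the target label."""
    correct = sum(parse_genre(o) == t for o, t in zip(outputs, targets))
    return correct / len(targets)


replies = ["4", " 10. ", "The genre of this painting is landscape."]
print([parse_genre(r) for r in replies])
```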

Llama 3.2 outputs answers in the correct format and reaches an accuracy of 50.2%. That is far above LLaVa-1.5 (28.1%) and essentially on par with LLaVa-NeXT (50.3%).

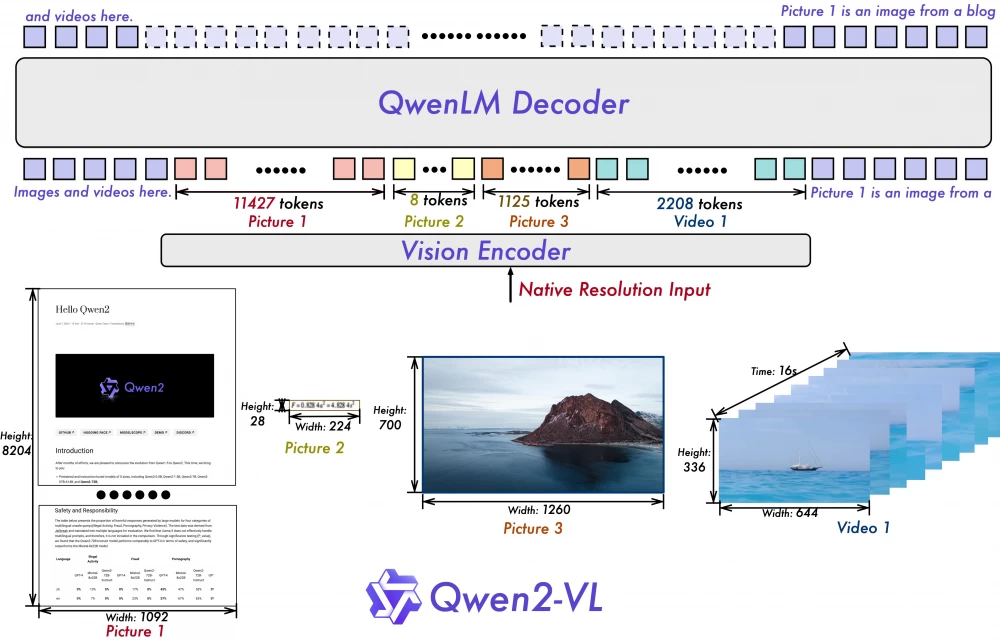

Qwen2-VL-7B

Qwen2-VL-7B completes the quartet of models. It caused the most trouble: getting it to run on the card took a long time. The model itself fits easily on the RTX 4090 in torch.bfloat16 and takes up 17 GB. However, as soon as I fed it an image, the memory ran out. It turned out that the paintings from the dataset had to be resized, since some were about 4000×3072 pixels, and an image of that size immediately consumed the remaining GPU memory.

The largest size that fit on the GPU was 1600×900; 1920×1080 did not fit. So I had to resize the images of the paintings before feeding them to the model.

I started again with the simplest prompt, where the model only needs to output the genre number. On images resized to 1600×900, the run took 45 minutes. I then reduced the size further: quality did not drop, and the run on 512×512 images took 16 minutes. The resulting accuracy is 60.2%.
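The resize step can be sketched as a small helper that computes the target size. This is an assumption about how the resizing might be done: it preserves the aspect ratio and never upscales, neither of which the article specifies. The function name `fit_within` and its defaults are hypothetical.

```python
from typing import Tuple


def fit_within(
    width: int, height: int, max_width: int = 1600, max_height: int = 900
) -> Tuple[int, int]:
    """Scale (width, height) down so it fits the limits, keeping the aspect ratio.

    Images that already fit are returned unchanged (scale is capped at 1.0).
    """
    scale = min(max_width / width, max_height / height, 1.0)
    return max(1, int(width * scale)), max(1, int(height * scale))


# One of the large paintings mentioned above.
print(fit_within(4000, 3072))  # (1171, 900)
```

The resulting tuple can be passed to an image library's resize call, for example Pillow's `Image.resize`.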

Prompt v3.0:

"There are 11 possible genres: {genres_str}. What is the genre of painting by {artist}? Choose from a list. Say only the number of genre, do not output anything else."

Having obtained such results with the zero-shot technique, I was sure that allowing the model to think before answering would definitely improve accuracy. I again applied the Chain-of-Thought technique.

Prompt v4.0:

"You are an art expert. The task is to classify an image into one of the following 11 genres: {genres_str}. You are provided with the name of the artist and the painting style of the image.\nArtist: {artist}\nStyle: {style}\nUse all your knowledge, thought, and given information to determine the most appropriate genre.\n"Thoughts: (Provide chain of thought here)"\n"Answer: (Provide the genre number here)"

Contrary to my expectations, accuracy with CoT was 59.9%. This cannot be called an improvement over the previous result; the difference is within the margin of error. In practice, the two techniques perform identically, except that the first prompt is easier to write.
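To put the 0.3-point gap between 60.2% and 59.9% in context, here is a rough estimate of the sampling noise. It assumes both runs covered all 1130 records and treats each prediction as an independent trial (a simple binomial model, not something the article computes). Under these assumptions the standard error comes to roughly 1.5 percentage points, several times larger than the gap.

```python
import math


def accuracy_standard_error(accuracy: float, n_samples: int) -> float:
    """Standard error of an accuracy estimate under a binomial model."""
    return math.sqrt(accuracy * (1.0 - accuracy) / n_samples)


n = 1130  # size of the evaluation subset
zero_shot, cot = 0.602, 0.599

se = accuracy_standard_error(zero_shot, n)
print(f"standard error of accuracy: {se * 100:.2f} percentage points")
print(f"zero-shot vs CoT gap:       {(zero_shot - cot) * 100:.2f} percentage points")
```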

What pleased me was that the model strictly followed the instructions. Each request took about 1.4 seconds, which gave a total experiment time of 35 minutes, with responses up to 512 tokens long. CoT showed how the model made decisions on simpler prompts under the hood, bringing its thoughts into the response. This shows that the model really has the potential for in-depth analysis, but CoT does not always improve the answers.

Example of model output for a painting by Claude Monet:

Thoughts: The painting features a cathedral, which is a common subject in cityscapes. The style is Impressionism, which is known for its focus on capturing the effects of light and atmosphere. The use of light and color to create a sense of movement and mood is characteristic of Impressionist art.

Answer: 1
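With this output format, the genre number has to be pulled out of the free-text reply before it can be scored. A minimal sketch, assuming the model keeps the "Answer:" marker from the prompt; taking the last match is a design choice in case the marker shows up in the reasoning too. The function name is hypothetical.

```python
import re
from typing import Optional

ANSWER_RE = re.compile(r"Answer:\s*(\d{1,2})\b")


def parse_cot_answer(output: str) -> Optional[int]:
    """Return the number after the last 'Answer:' marker, or None if absent."""
    matches = ANSWER_RE.findall(output)
    return int(matches[-1]) if matches else None


reply = (
    "Thoughts: The painting features a cathedral, which is a common subject "
    "in cityscapes. The style is Impressionism.\n\n"
    "Answer: 1"
)
print(parse_cot_answer(reply))  # 1
```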

What conclusions?

Models are far from being art experts, but their potential is enormous. New VLMs handle a huge range of tasks that are relevant to business. Based on the results, if computing resources are limited, you can take llava-1.5-7b: it fits without quantization on a fairly basic T4 card. For better results, choose newer models. LLaVa-NeXT-7b, Llama-3.2-11b, and Qwen2-VL-7B performed much better on my task of predicting the genre of a painting. The results and our internal experiments with other tasks show that Qwen2-VL-7B is also good at recognizing Russian text in PDF documents, but more on that another time!

P.S. You can see examples of model runs here.
