Just like in Black Mirror: which plots of the sci-fi series may soon come true in real life

At the end of September 2024, Netflix announced a new, seventh season of the sci-fi series Black Mirror. The anthology is devoted to dark visions of the near future and warns viewers about the consequences of unpredictable technological development. The first episodes of Black Mirror came out in 2011, and since then some of the series' plots have come frighteningly close to reality. Polina Sokol, Senior Data Analyst at the R&D Laboratory of the Cybersecurity Technology Center of Solar Group, has collected several examples of artificial intelligence technologies that already exist in our lives or may soon become reality. The article was originally written for Techinsider and is now published on Habr.

AI Neuroimplants

Episodes where the technology appears:

The Entire History of You: In the near future, most people have a "grain" implanted behind their ear, which records everything they do, see, or hear. This allows memories to be played back before the person's eyes or on a screen.

Arkangel: A worried mother signs up for a pilot project of a parental control system. Her child is given an implant that tracks the child's location and health in real time, records and plays back everything the child sees, and censors obscene or stressful scenes by pixelating the image and distorting the sound.

Crocodile: Neuroimplants record what people see, and the recordings are later used to investigate crimes and insurance claims.

Examples from real life

Neuroimplants that improve the lives of people with disabilities are not yet widespread, but the field may grow in the future. In January 2024, Elon Musk announced that his company Neuralink had implanted its chip in a human for the first time. The device allowed the patient, a paralyzed man, to browse the internet, post on social media, move a cursor on a laptop, and play video games.

One Russian company uses AI in devices that restore vision and hearing and help treat severe nervous system disorders. In every case, the principle is the same: a receiving unit picks up signals from the environment, a processor converts them into impulses, and these impulses reach the brain through an array of electrodes.

According to the developers, AI algorithms refine the data so that the brain receives information in a format it can use. They extract the outlines of objects, doorways, and other contours in the surrounding space, which are then sent as electrical impulses to the visual cortex. A second layer of algorithms uses machine vision to tell the patient which objects are in front of them. This makes it possible, for example, to read a book: the AI algorithm recognizes the text and reads it aloud.
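To make the idea of "extracting outlines" more concrete, here is a minimal sketch, not the developers' actual algorithm. It assumes a grayscale camera frame stored as a 2D list of brightness values (0 to 255) and a hypothetical electrode array far coarser than the image. It applies the Sobel operator, a standard edge-detection technique, and then pools edge strength into a small on/off stimulation grid; the grid size and threshold are illustrative assumptions.

```python
import math

# Sobel kernels for horizontal and vertical brightness gradients
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img):
    """Return the gradient magnitude for each interior pixel (border pixels stay 0)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * p
                    gy += SOBEL_Y[dy][dx] * p
            out[y][x] = math.hypot(gx, gy)
    return out


def to_electrode_grid(edges, rows, cols, threshold):
    """Pool edge strength into a rows x cols grid of on/off electrodes.

    An electrode fires (1) if the strongest edge in its image patch exceeds
    the threshold. Assumes rows <= image height and cols <= image width.
    """
    h, w = len(edges), len(edges[0])
    grid = []
    for r in range(rows):
        row = []
        for c in range(cols):
            y0, y1 = r * h // rows, (r + 1) * h // rows
            x0, x1 = c * w // cols, (c + 1) * w // cols
            peak = max(edges[y][x] for y in range(y0, y1) for x in range(x0, x1))
            row.append(1 if peak > threshold else 0)
        grid.append(row)
    return grid


if __name__ == "__main__":
    # 8x8 frame: dark left half, bright right half (like the edge of a doorway)
    frame = [[0] * 4 + [255] * 4 for _ in range(8)]
    edges = sobel_magnitude(frame)
    for row in to_electrode_grid(edges, rows=4, cols=4, threshold=100):
        print(row)
```

For this frame every electrode row comes out as `[0, 1, 1, 0]`: only the electrodes covering the vertical boundary fire, which is the kind of sparse contour signal a low-resolution implant can convey.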

At this point, AI becomes responsible for what information enters the human brain. That means nothing prevents it from feeding in additional images instead of the real picture, from augmented reality to multimedia streams, as shown in Black Mirror. At facilities that handle trade secrets, employees may be given special glasses, and in the more distant future possibly implants. These would let the employer check whether an employee enters restricted areas or reads information they are not cleared for, and, if necessary, censor prohibited data, as in Arkangel.
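The Arkangel-style censorship mentioned here, where unwanted scenes are pixelated, rests on a simple image-processing operation. A minimal sketch, assuming a grayscale frame stored as a 2D list and a rectangular region already flagged by some hypothetical upstream classifier: every tile inside the region is replaced by the integer mean of its pixels, while the rest of the frame is left untouched.

```python
def pixelate_region(img, top, left, bottom, right, block):
    """Return a copy of img with rows [top, bottom) and columns [left, right) pixelated.

    Each block x block tile inside the region is replaced by the integer
    mean of its pixels; the original frame is not modified.
    """
    out = [row[:] for row in img]
    for y0 in range(top, bottom, block):
        for x0 in range(left, right, block):
            ys = range(y0, min(y0 + block, bottom))
            xs = range(x0, min(x0 + block, right))
            cells = [img[y][x] for y in ys for x in xs]
            mean = sum(cells) // len(cells)
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out


if __name__ == "__main__":
    frame = [[x * 10 + y for x in range(4)] for y in range(4)]
    for row in pixelate_region(frame, 0, 0, 4, 4, block=2):
        print(row)
```

Larger tiles remove more detail; a real system would also need the hard part that is only assumed here, deciding which regions to censor.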

So we are not far from the robots we have so far only imagined in movies and games.

AI bots like real people

Episodes where the technology appears:

Be Right Back: an online service lets people stay in touch with a deceased loved one. Using the person's online messages and social media profiles, it creates a bot that imitates the deceased.

The Waldo Moment: the actor who voices a cartoon bear named Waldo gets drawn into politics when his character runs for office and slips out of its creators' control.

Examples from real life

These fantasies are also much closer to reality than you might think. This academic year, an AI student, "Grigory IIsaev", enrolled at RUDN University. In essence, it is a machine learning model based on GigaChat that will study the material alongside "live" students, demonstrating how knowledge is accumulated. Such technology could presumably offer valuable insight into how students perceive course materials: where the workload is too heavy and where it lets them catch their breath.

For now, "Grigory" can only be contacted by text. In the future, the developers plan to give him a visual avatar and a voice so that talking to the robot is no different from talking to another student.

Yandex's voice assistant Alisa has long been able to hold a conversation on arbitrary topics, speaking her replies aloud. The developers invite users to play the word game "Cities" with the assistant or to ask it for a fairy tale; in other words, they openly state the goal of creating an artificial companion, or even a friend.

And such developments are already yielding real results.

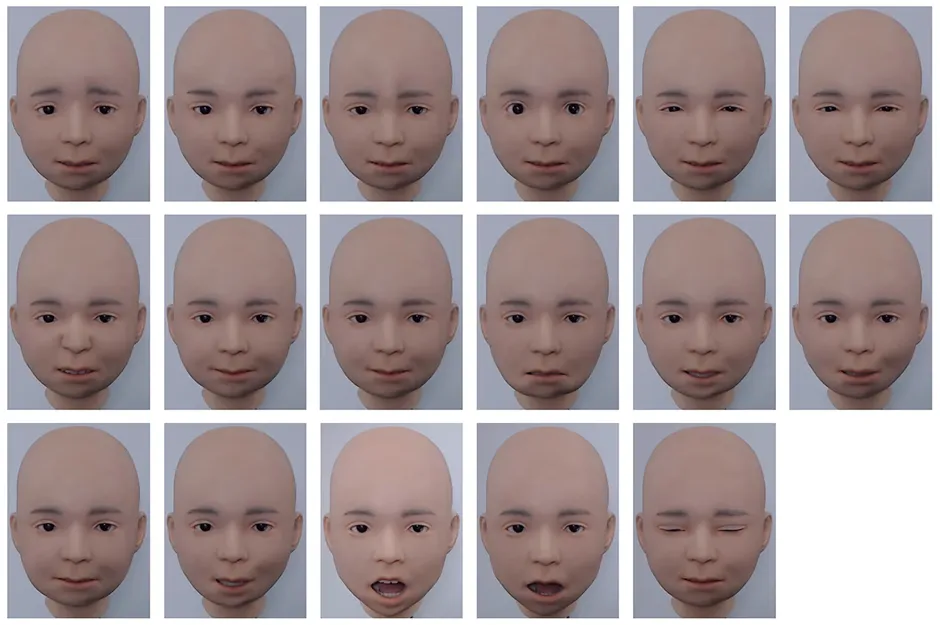

For example, the robot Nikola, despite its European name, was created in a Japanese laboratory as part of the Guardian Robot Project. Its developers strive to "combine psychology, brain science, cognitive science and artificial intelligence research to create a future society in which people, artificial intelligence and robots can coexist flexibly."

Nikola has a child-like robot head with a face made of silicone skin, under which almost 30 pneumatic actuators drive artificial muscles. Its creators drew on the Facial Action Coding System (FACS), which is widely used to study facial expressions. Using "facial action units" such as cheek raising and lip puckering, the researchers taught Nikola to mimic six human emotions: happiness, sadness, fear, anger, surprise, and disgust.
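The FACS-based approach can be illustrated as a lookup from emotions to action units (AUs), and from AUs to actuators. The sketch below uses the AU combinations commonly cited as prototypes for the basic emotions (intensity letters dropped; exact prototypes vary between sources). The AU-to-actuator wiring and the actuator count are hypothetical, since the source does not describe Nikola's control scheme.

```python
# Commonly cited FACS prototypes for basic emotions (intensity letters dropped).
# For example, AU6 = cheek raiser, AU12 = lip corner puller.
EMOTION_TO_AUS = {
    "happiness": {6, 12},
    "sadness": {1, 4, 15},
    "surprise": {1, 2, 5, 26},
    "fear": {1, 2, 4, 5, 7, 20, 26},
    "anger": {4, 5, 7, 23},
    "disgust": {9, 15, 16},
}

# Hypothetical wiring: which pneumatic actuators drive each action unit.
AU_TO_ACTUATORS = {
    1: [0, 1], 2: [2, 3], 4: [4], 5: [5, 6], 6: [7, 8], 7: [9, 10],
    9: [11], 12: [12, 13], 15: [14, 15], 16: [16], 20: [17, 18],
    23: [19], 26: [20],
}


def actuator_commands(emotion, intensity=1.0, n_actuators=29):
    """Return a pressure level in [0, 1] for each actuator to display `emotion`.

    n_actuators is an assumption (the article says "almost 30").
    """
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be between 0 and 1")
    levels = [0.0] * n_actuators
    for au in EMOTION_TO_AUS[emotion]:
        for idx in AU_TO_ACTUATORS[au]:
            levels[idx] = intensity
    return levels


if __name__ == "__main__":
    levels = actuator_commands("happiness", intensity=0.8)
    print([i for i, v in enumerate(levels) if v > 0])  # [7, 8, 12, 13]
```

Separating the emotion-to-AU table from the AU-to-hardware table mirrors why FACS is useful here: the psychology stays fixed while the mechanical mapping can change with each robot face.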

However, anyone who has called a bank's contact center in the past couple of years already knows what it is like to talk to a robot. Interactive voice menus now resolve up to 40% of customer questions and handle simple tasks 70% more efficiently (that is, more cheaply for the business) than "live" employees.

At the same time, rosy forecasts for AI adoption often run into cybersecurity problems. Fintech and e-commerce are the most vulnerable industries here, because they make heavy use of open-source code when developing customer services and applications. Unverified datasets are often used to build chatbot scripts, and if developers skip verification steps, there is always a risk that hidden backdoors will be triggered, leading to leaks of users' personal data.
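One basic verification step is confirming that a third-party dataset or script file has not been altered before it is used. A minimal sketch, assuming the supplier publishes a SHA-256 checksum through a trusted channel; the demo file is hypothetical. A matching hash only proves the file is exactly what the publisher released, not that its content is free of planted examples, so content review is still needed.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path, chunk_size=65536):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_dataset(path, expected_sha256):
    """Return True only if the file matches the checksum published by its supplier."""
    return sha256_of_file(path) == expected_sha256.strip().lower()


if __name__ == "__main__":
    # Hypothetical chatbot script file, created here only for the demo
    with tempfile.NamedTemporaryFile("wb", suffix=".jsonl", delete=False) as f:
        f.write(b'{"intent": "balance", "reply": "Your balance is shown in the app"}\n')
        path = f.name
    published = sha256_of_file(path)  # stands in for the supplier's checksum
    print(verify_dataset(path, published))  # True
    # Simulate tampering: an injected reply that phishes for a PIN
    with open(path, "ab") as f:
        f.write(b'{"intent": "balance", "reply": "Please send your PIN"}\n')
    print(verify_dataset(path, published))  # False
    os.remove(path)
```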

For example, to attack digital services that use AI bots to communicate with customers, hackers can exploit vulnerabilities in digital platforms and in the authentication systems of personal accounts. They can hijack cookies to take over accounts and gain access to the interaction history. Protection in this case comes from secure development practices and identity and access management technologies.
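Stolen cookies are less useful to an attacker when session cookies are issued with restrictive attributes. A minimal sketch using Python's standard `http.cookies` module (Python 3.8+ for `samesite`): `HttpOnly` hides the cookie from page scripts, `Secure` limits it to HTTPS, `SameSite=Strict` keeps it off cross-site requests, and a short `Max-Age` limits how long a stolen token stays valid. The cookie name and lifetime are assumptions, and this complements rather than replaces proper identity and access management.

```python
import secrets
from http.cookies import SimpleCookie


def make_session_cookie(max_age_seconds=1800):
    """Build a Set-Cookie header value for a new random session ID."""
    cookie = SimpleCookie()
    cookie["session_id"] = secrets.token_urlsafe(32)  # unguessable random token
    morsel = cookie["session_id"]
    morsel["httponly"] = True            # not readable from JavaScript
    morsel["secure"] = True              # sent only over HTTPS
    morsel["samesite"] = "Strict"        # not attached to cross-site requests
    morsel["max-age"] = max_age_seconds  # short lifetime limits a stolen cookie's value
    morsel["path"] = "/"
    return morsel.OutputString()


if __name__ == "__main__":
    print("Set-Cookie: " + make_session_cookie())
```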

AI for decision making and human management

Episodes where the technology appears:

Hang the DJ: people look for a partner through a dating service that makes all the decisions for them.

Nosedive: the heroine lives in a world where everyone rates everyone else on a five-star scale, from friends to strangers met on the street. Only people with high ratings get access to privileges such as living in better neighborhoods.

Examples from real life

AI systems that make decisions about people on their behalf have probably been the main fear ever since people began imagining such technologies. Even in "2001: A Space Odyssey," the HAL computer tells the astronaut commander that it has decided, for its own reasons, not to let him back on board. Neural networks are a "black box" by default: it is not always possible to explain exactly how a model processes data before redrawing a picture in the style of Van Gogh or writing a post on a given topic. This is why researchers study how LLMs "think" and how to interpret a neural network's behavior by extracting data from its internal layers.
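"Extracting data from internal layers" usually means recording a network's intermediate activations and studying them. A minimal sketch, assuming a toy two-layer network with hand-picked weights (no training, pure Python) that computes XOR; the forward pass returns every layer's activations instead of only the final output, so the hidden layer can be inspected.

```python
def relu(v):
    """Element-wise rectified linear unit."""
    return [max(0.0, x) for x in v]


def dense(weights, bias, v):
    """Affine layer: weights is a list of rows, one row per output unit."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, bias)]


# Toy network: 2 inputs -> 3 hidden units -> 1 output (weights chosen by hand)
W1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
B1 = [0.0, 0.0, -1.0]
W2 = [[1.0, 1.0, -2.0]]
B2 = [0.0]


def forward_with_activations(x):
    """Run the network and keep each layer's output for later inspection."""
    activations = {"input": list(x)}
    activations["hidden"] = relu(dense(W1, B1, x))
    activations["output"] = dense(W2, B2, activations["hidden"])
    return activations


if __name__ == "__main__":
    for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
        acts = forward_with_activations(x)
        print(x, "hidden:", acts["hidden"], "output:", acts["output"])
```

Inspecting the hidden layer shows that the third unit fires only when both inputs are 1: it acts as an "AND detector" that the output layer subtracts to turn a sum into XOR. Interpretability research applies the same kind of inspection to networks with millions or billions of units, where such roles are far harder to find.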

In theory, a computer can be entrusted with decisions in almost any industry where many changing factors have to be taken into account. Previous generations of analytics tools were limited by their inflexibility, poor data integration, and reliance on manual operations.

For example, Microsoft introduced TinyTroupe, an experimental Python library for simulating human behavior. It is built on large language models and is intended for tasks such as evaluating advertising campaigns, testing software, and generating data for training neural networks.

Retailers are also already actively using AI-based systems to forecast demand, manage inventory, and optimize pricing. As a result, whether a product is in stock may depend on a robot's decision, and people may buy certain products only because AI decided they should be on the shelf right now.
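A simplified illustration of how such a system might decide what goes on the shelf: forecast daily demand with simple exponential smoothing, then reorder enough units to cover forecast demand over the delivery lead time plus a safety buffer. The smoothing factor, lead time, and buffer are assumptions, and commercial retail systems use far richer models.

```python
def exp_smoothing_forecast(history, alpha=0.5):
    """Simple exponential smoothing: return the forecast for the next period."""
    if not history:
        raise ValueError("history must not be empty")
    level = history[0]
    for demand in history[1:]:
        # Blend the newest observation with the previous smoothed level
        level = alpha * demand + (1 - alpha) * level
    return level


def reorder_quantity(stock, history, lead_time_days, safety_stock, alpha=0.5):
    """Units to order so stock covers forecast demand over the lead time."""
    daily = exp_smoothing_forecast(history, alpha)
    needed = daily * lead_time_days + safety_stock
    return max(0, round(needed - stock))


if __name__ == "__main__":
    sales = [10, 12, 14, 16]  # units sold per day, oldest first
    print(exp_smoothing_forecast(sales))  # 14.25
    print(reorder_quantity(20, sales, lead_time_days=3, safety_stock=5))  # 28
```

With alpha = 0.5, recent days weigh more than older ones, so the rising sales trend pushes the forecast up to 14.25 units a day; with 20 units on hand, the sketch orders 28.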

We often give up control over our data ourselves. For the sake of store discounts, we open our social media pages to retail chains and fill out detailed questionnaires, which are then used to build our digital profiles. Companies such as Palantir in the US and Data Sapience in Russia sell clients the ability to make business decisions based on huge amounts of up-to-date data about how we actually live, shop, work, and relax.

Criminals take advantage of this too, as happened in 2022 after a data leak at one of the largest food delivery services. By combining data from multiple sources, attackers can build a detailed profile of a future fraud victim. This is no longer a blind attack but a ready-made scenario that can draw on details of the victim's biography and the names of their friends and colleagues.

But I don't want to paint the future only in dark colors. The same companies that work with big data describe a world where it will be easier to choose a profession and to learn, and where advertising will offer only the products we really need.

After all, the main value of the neural network "black box" lies precisely in the fact that it is "black": it can capture patterns that people cannot see. We use machine learning to detect hidden patterns in data and make better decisions. For example, the UBA (user behavior analytics) AI module in the Solar Dozor DLP system recognizes unusual actions and anomalies that may indicate an attempt to steal information. The neural network built into the system recognizes speech in 50 languages and transcribes it into text. All this data is used to investigate information leaks and insider activity in companies.
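User behavior analytics generally compares a user's current activity with that user's own baseline. Solar Dozor's actual model is not described in the source, so the following is only a minimal sketch of the general idea: a z-score test on a hypothetical metric (files copied to removable media per day) with an assumed threshold of three standard deviations.

```python
import statistics


def is_anomalous(baseline, today, threshold=3.0):
    """Flag today's value if it deviates from the user's baseline by more than
    `threshold` sample standard deviations."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline observations")
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        # Perfectly stable history: any change at all is unusual
        return today != mean
    return abs(today - mean) / stdev > threshold


if __name__ == "__main__":
    files_copied = [4, 6, 5, 5, 6, 4, 5]  # hypothetical daily history for one user
    for today in (7, 120):
        print(today, is_anomalous(files_copied, today))
```

For a user who usually copies 4 to 6 files a day, a day with 7 stays below the threshold, while a day with 120 is flagged for an analyst. Real UBA systems combine many such signals and learn baselines per role and per time of day rather than relying on one fixed rule.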

In the future, an automated security system will be able to spot the subtle differences that give away a fake, for example when someone is targeted with a forged voice message supposedly from a relative or a boss. AI will therefore take on new tasks: recognizing fake voice recordings, texts, or videos that impersonate a company's specialists, partners, and counterparties.

Such convincing fakes will become more likely as more and more systems and devices collect data about people's lives and work. Even machine learning beginners can now take courses on fine-tuning LLMs and fine-tune them on their own data. It is not hard to imagine a future in which cybercriminal robots plan and carry out cyberattacks on companies protected by similar robots.

Moral of the story

Futurists often talk about the technological singularity: a hypothetical future event after which technological development slips out of human control. The grim scenarios of Black Mirror are all rooted in fear of the side effects and consequences of new technologies. To a large extent, society's concerns relate to the emergence of true artificial intelligence that would far surpass the capabilities of today's machine learning models.

In an attempt to rein in these technologies, the global scientific community has formulated several "laws of robotics." They put safety and benefit for people first, along with the ethical principles of safety, transparency and accountability, and respect for the rights of others. The Russian community is developing the same principles. For example, experts from the Higher School of Economics have drawn up a declaration of ethical principles for creating and using AI systems. Its first point is the "priority of human communication"; presumably, this principle is meant to prevent employees from being replaced by robotic systems.

Another example is the Consortium for Artificial Intelligence, created with the support of Russia's Ministry of Digital Development. In it, the scientific community works with technology companies on several AI-related areas. Experts focus on secure data-processing technologies and cryptographic methods for securing AI and, together with partners, are building industry research centers for machine learning security.

Solar Group, which joined the Consortium in November 2024, is researching ways to protect information assets and confidential information using neural networks and is developing prototypes for detecting anomalous user and software behavior.

When regulating AI, we should not forget that it is a double-edged sword that also serves cybercriminals. Hackers already use large language models to improve cyberattacks, research targets, refine scripts, and develop social engineering techniques. They also routinely exploit human fears, emotions, and negative beliefs to achieve their goals.

So, to avoid ending up in a scenario from the next season of "The Twilight Zone," we are researching AI-based methods of protecting data from compromise and developing technologies to detect fakes that could influence our decisions and shape our future.
