192 cores per processor — release of AMD EPYC Turin server processors

Lisa Su has been at the helm of AMD for ten years, and during this time she not only brought the company out of crisis but also made it a true market leader. Under her leadership, AMD has transformed from an outsider into a serious player in the server processor market, forcing Intel to fight for survival. Now, on October 10, AMD has introduced new server processors of the EPYC 9005 Turin series. The lineup includes models with 192 Zen5C cores, aimed at distributed computing, and 128-core processors with full-fledged Zen5 cores and half a gigabyte of L3 cache. Read our post for details on all the new products and unique features of the new processors!

Lisa Su has led AMD for a full decade. In that time she not only pulled the company out of crisis but also turned it into a true market leader. Under her leadership, AMD has gone from outsider to a serious player in the server processor market, where Intel is no longer merely playing catch-up but, given its current state, fighting for survival with all its might.

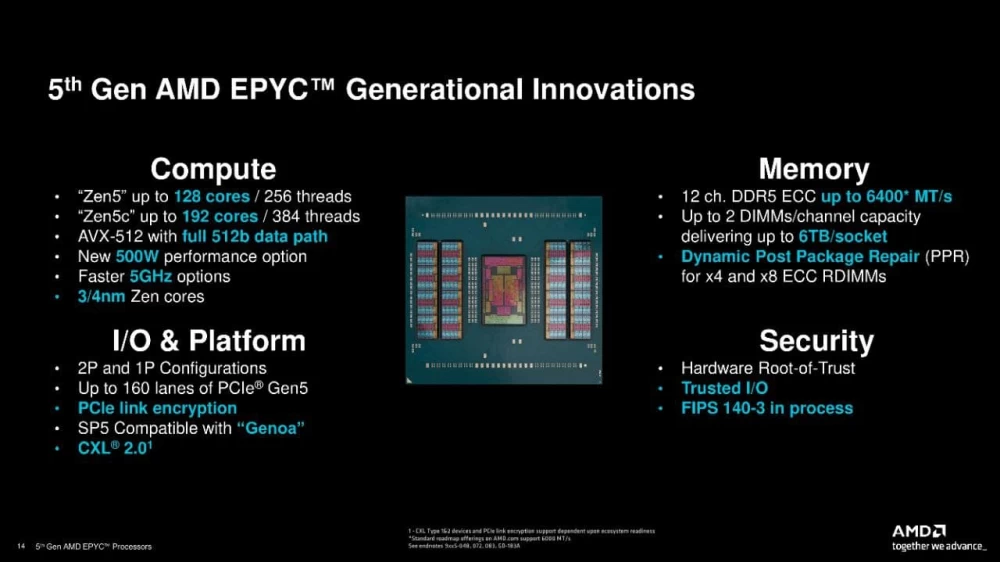

And finally, the event that everyone who follows the server hardware market had been waiting for: on October 10, AMD introduced its new EPYC 9005 "Turin" server processors. Want 384 threads per processor? Here you go: an EPYC with 192 cores, albeit with a caveat inherited from the EPYC 9004 "Bergamo" line. These are energy-efficient Zen5C cores with a smaller cache, all in the name of multithreading and packing as many cores as possible into one package. There are also 128-core variants with full-fledged Zen5 cores and as much as half a gigabyte of L3 cache.

However, there is more to talk about than cores and cache, and we discuss all the innovations in more detail below.

It will be hot

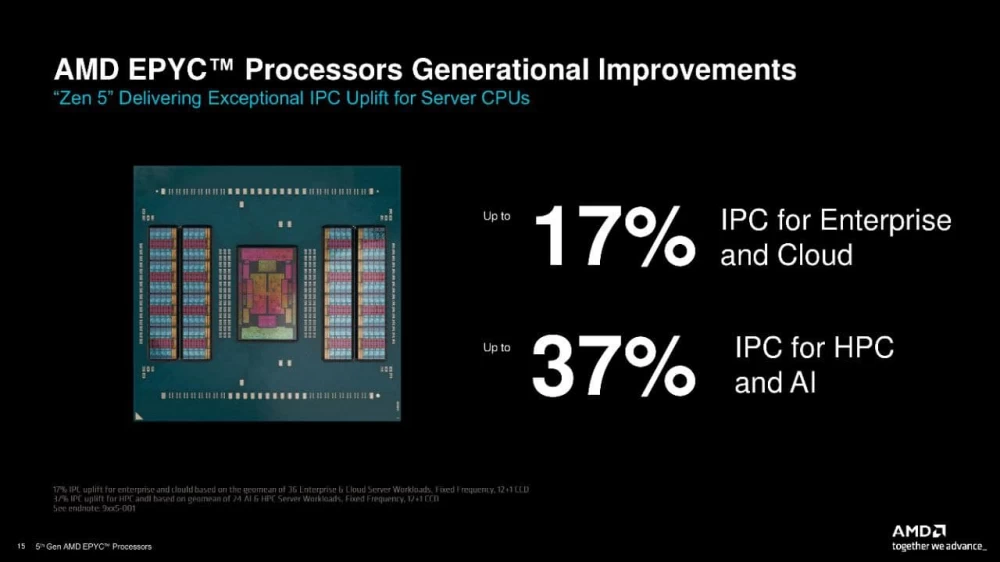

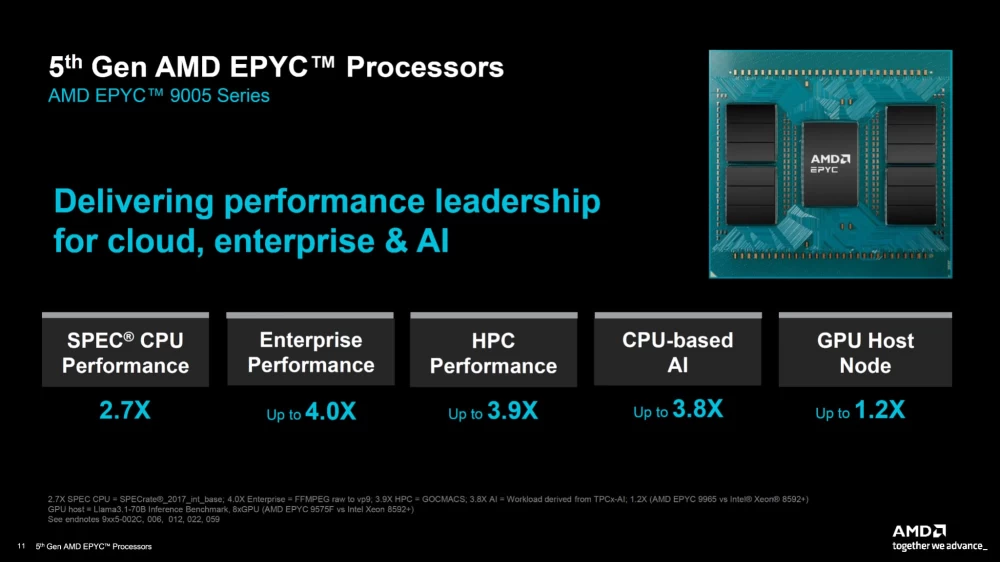

According to AMD, the new processors show an impressive leap in performance over their predecessors: +17% in cloud workloads and +37% in neural network workloads, all at a fairly modest price. Prices range from $527 for an 8-core model to $14,800 for the 192-core monster, even if its cores are the small ones.

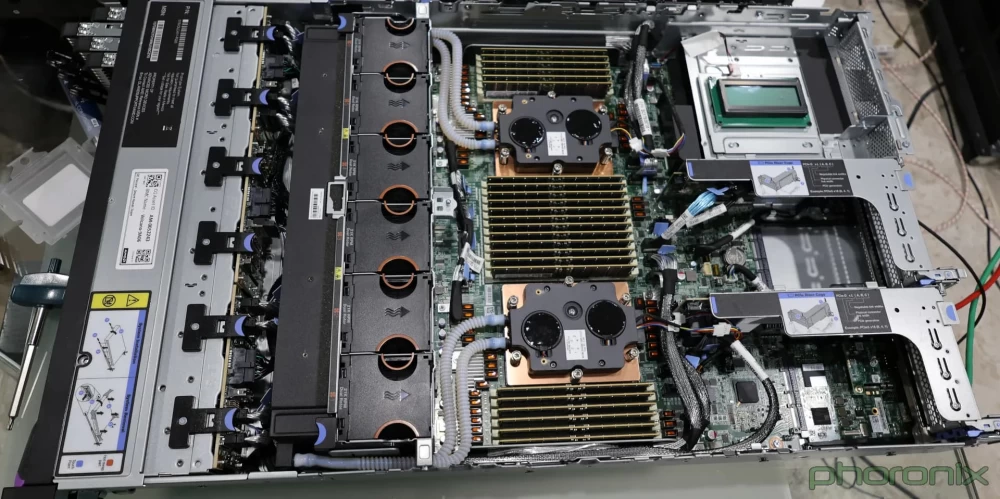

However, raising the core count and transistor density inevitably raises TDP. Although the process has shrunk to 3 and 4 nanometers, the processor area has stayed the same. That leaves a non-trivial problem: how do you effectively dissipate 0.5 kW of heat through a relatively small contact area?

This level of heat dissipation may mark the transition of the server segment into the era of water cooling. Perhaps we will soon see top EPYC models supplied with integrated liquid cooling systems. As for classic air cooling in 1U servers, it seems engineers will have to rack their brains to fit a sufficiently powerful heatsink in there.

But let's look at it from the other side. The EPYC 9005 series offers server solutions with exceptional performance. For example, dual-socket servers with AMD EPYC 9965 processors deliver 1.7 times higher system-level performance per watt than servers with Intel Xeon 8592+ processors in the SPECpower test.

Moreover, AMD claims that 100 old dual-socket Intel Xeon 8280 servers can be replaced with only 14 new servers based on AMD EPYC 9655, providing comparable performance with up to 86% fewer servers and 69% lower energy consumption. Reaching the same level of performance with Intel Xeon 8592+ would take 35 servers. Strangely, AMD's brochures provide no such data for the 6th generation Intel Xeon.
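The consolidation figures above are easy to sanity-check. Below is a minimal Python sketch that uses only the server counts and the 69% saving quoted in this post. The function names are ours, and treating the "69% less energy" figure as average power draw is an assumption made purely for illustration.

```python
def percent_fewer(old_count: int, new_count: int) -> float:
    """Percentage reduction in server count when consolidating."""
    return 100 * (old_count - new_count) / old_count


def implied_power_per_server(old_count: int, new_count: int,
                             energy_saving_percent: float) -> float:
    """Power of one new server relative to one old server.

    Assumption: the quoted energy saving applies to the whole fleet
    and can be read as average power draw.
    """
    remaining_fraction = (100 - energy_saving_percent) / 100
    return remaining_fraction * old_count / new_count


OLD_XEON_8280 = 100   # old dual-socket servers (figure from the post)
NEW_EPYC_9655 = 14    # EPYC 9655 servers with comparable performance
NEW_XEON_8592 = 35    # Xeon 8592+ servers with comparable performance
ENERGY_SAVING = 69    # percent, as quoted in the post

print(f"Fewer servers with EPYC 9655: "
      f"{percent_fewer(OLD_XEON_8280, NEW_EPYC_9655):.0f}%")
print(f"Fewer servers with Xeon 8592+: "
      f"{percent_fewer(OLD_XEON_8280, NEW_XEON_8592):.0f}%")
print(f"Xeon 8592+ servers per EPYC 9655 server: "
      f"{NEW_XEON_8592 / NEW_EPYC_9655:.1f}")
print(f"Implied power of one new server vs one old server: "
      f"{implied_power_per_server(OLD_XEON_8280, NEW_EPYC_9655, ENERGY_SAVING):.2f}x")
```

Under these assumptions each new server draws roughly twice the power of an old one, yet the fleet as a whole consumes far less, which is exactly the "hot but efficient" trade-off described here.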

So yes, the processors are hot, but they allow for a significant reduction in the number of servers and the overall energy consumption of the data center while maintaining the same or even greater computing power. How exactly server OEMs will cool all this power, we will find out soon.

Zen5 - pure power

The new Zen5 architecture is not just another step, but a real leap forward. AMD managed not only to pack more transistors on the chip but also to seriously optimize how they work. The result? Frequencies of up to 5 GHz, which sounds rather dubious for a server processor: the heat generated at 5 GHz has to go somewhere, and it is unlikely that all cores can run at that frequency simultaneously. Single-core performance also matters less in the server segment than multithreading, although for workloads such as databases it probably makes sense.

Zen5C - density and efficiency

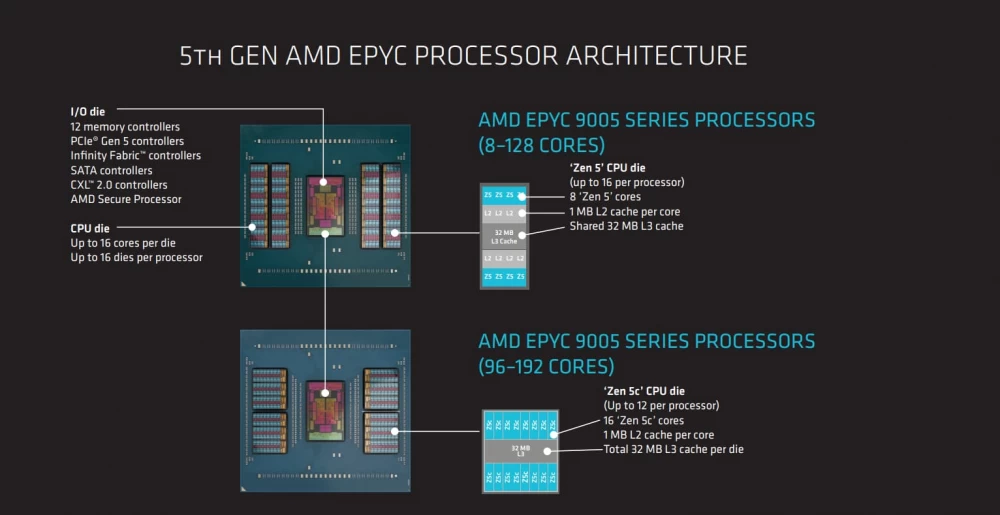

But AMD decided that this was not enough. So Zen5C appeared: the younger brother of the big Zen5, but with serious ambitions. There is less cache, but more cores fit on a die, and now we have 192 cores in one processor. Of course, they are not as powerful as full-fledged Zen5 cores, but in multithreaded tasks quantity takes precedence over quality.

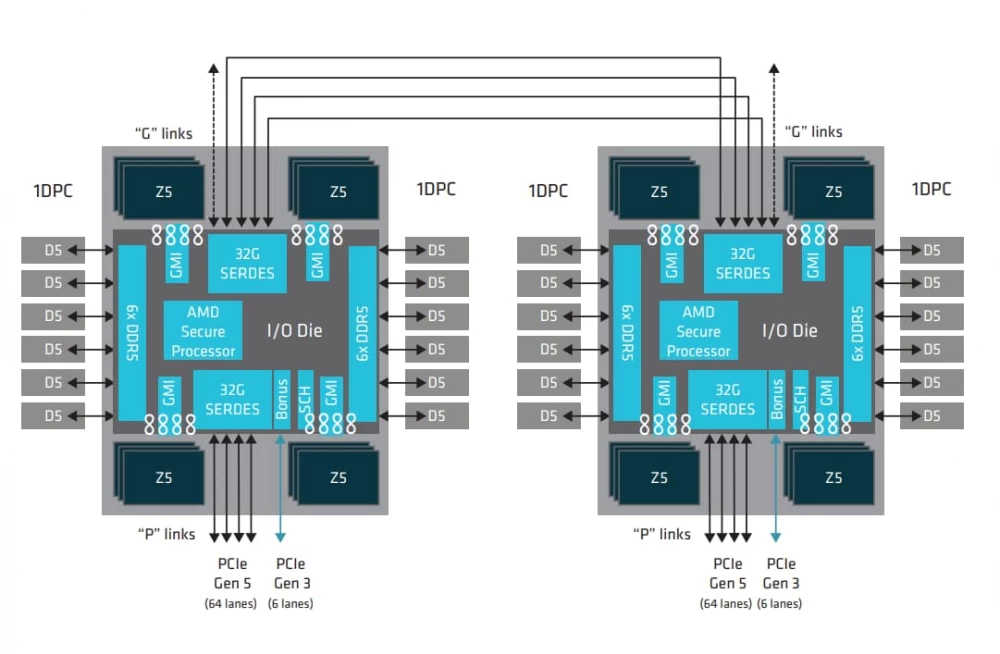

The Zen5C CPU die contains 16 cores, each with 1 MB of L2 cache, and a total of 32 MB of L3 cache. To create processors with more than 128 cores, up to 12 such dies can be connected to an I/O die, resulting in up to 192 cores per processor for ultra-dense high-performance systems.
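The totals for a fully populated package follow directly from the per-die numbers above. Here is a small Python sketch of that arithmetic. The `Ccd` class and `package_totals` function are illustrative names, not anything from AMD, and the two threads per core are inferred from the 384-thread figure quoted earlier in the post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ccd:
    """One CPU die, described by the figures given in the post."""
    cores: int
    l2_mb_per_core: int
    l3_mb: int


ZEN5C_CCD = Ccd(cores=16, l2_mb_per_core=1, l3_mb=32)


def package_totals(ccd: Ccd, n_dies: int, threads_per_core: int = 2) -> dict:
    """Sum cores, threads and cache over all CPU dies in one package."""
    cores = ccd.cores * n_dies
    return {
        "cores": cores,
        "threads": cores * threads_per_core,
        "l2_mb": cores * ccd.l2_mb_per_core,
        "l3_mb": ccd.l3_mb * n_dies,
    }


# 12 Zen5C dies attached to one I/O die, the maximum mentioned above.
print(package_totals(ZEN5C_CCD, n_dies=12))
# {'cores': 192, 'threads': 384, 'l2_mb': 192, 'l3_mb': 384}
```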

Memory and buses - more of everything

What about RAM? Here the new EPYC is also good, though without surprises. DDR5 is supported at speeds of up to 6400 MT/s across 12 channels. For example, the EPYC 9005 supports up to 6 TB of DDR5-6000 memory, which gives a maximum theoretical bandwidth of 576 GB/s per socket (12 channels × 8 bytes per transfer × 6000 MT/s). This will be most noticeable in applications sensitive to memory bandwidth, such as in-memory databases.
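The 576 GB/s figure can be reproduced from the channel count and the transfer rate. The Python sketch below assumes a 64-bit data path per memory channel (ECC bits excluded) and the decimal convention of 1 GB = 1000 MB. The function name is ours.

```python
def peak_bandwidth_gbs(channels: int, transfer_rate_mts: int,
                       bus_width_bits: int = 64) -> float:
    """Theoretical peak memory bandwidth per socket in GB/s.

    Assumption: each channel moves bus_width_bits of data per transfer.
    """
    bytes_per_transfer = bus_width_bits // 8
    return channels * transfer_rate_mts * bytes_per_transfer / 1000


print(peak_bandwidth_gbs(12, 6000))  # 576.0, the figure quoted above
print(peak_bandwidth_gbs(12, 6400))  # 614.4, if all channels run at 6400 MT/s
```

This is a ceiling, not a measurement: real workloads see less because of refresh cycles, bank conflicts and controller overhead.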

And since we are talking about bandwidth, let's also note the up to 160 PCIe 5.0 lanes available in dual-socket configurations, so that the data exchange bus does not become a bottleneck when connecting new server GPUs.
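To put the lane count in perspective, here is a rough Python estimate of raw PCIe 5.0 bandwidth. It relies on the standard 32 GT/s per-lane signaling rate and 128b/130b line encoding, and it ignores packet and protocol overhead, so real throughput is lower. The function name is illustrative.

```python
PCIE5_GT_PER_S = 32          # raw signaling rate per lane, PCIe 5.0
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def pcie5_bandwidth_gbs(lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s, before protocol overhead."""
    gbits_per_lane = PCIE5_GT_PER_S * ENCODING_EFFICIENCY
    return lanes * gbits_per_lane / 8


print(f"x16 slot (one GPU): {pcie5_bandwidth_gbs(16):.1f} GB/s per direction")
print(f"160 lanes in total: {pcie5_bandwidth_gbs(160):.1f} GB/s per direction")
```

By this estimate a single x16 accelerator gets about 63 GB/s in each direction, and ten of them could be attached at full width across 160 lanes.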

EPYC vs Xeon

The direct competitors of the new EPYC are Intel's slightly older Xeon 6700E and 6900P. But AMD once again gives its blue rival no chance to win. More cores, higher frequencies, more modern memory: EPYC 9005 surpasses the 6th generation Xeon in everything. This is especially noticeable in AI workloads, where EPYC proves to be a real performance monster.

According to AMD estimates, dual-socket servers with 192-core EPYC 9965 demonstrate 2.68x higher throughput compared to 64-core Intel Xeon 8592+ when running SPECrate2017_int_base.
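It is worth normalizing that number by core count. The short Python sketch below does so using only the figures quoted above. It assumes both systems are dual-socket, so the socket count cancels out, and the function name is ours.

```python
def per_core_ratio(throughput_ratio: float, cores_a: int, cores_b: int) -> float:
    """Throughput of one core of system A relative to one core of system B."""
    return throughput_ratio / (cores_a / cores_b)


# EPYC 9965 (192 cores) vs Xeon 8592+ (64 cores), 2.68x total throughput.
ratio = per_core_ratio(2.68, cores_a=192, cores_b=64)
print(f"Per-core throughput, EPYC 9965 relative to Xeon 8592+: {ratio:.2f}")
```

The result is a little under 1.0: each small Zen5C core does slightly less work than a Xeon 8592+ core in this test, and the overall lead comes from having three times as many of them. That is the "quantity over quality" trade-off in numbers.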

Superiority in business workloads

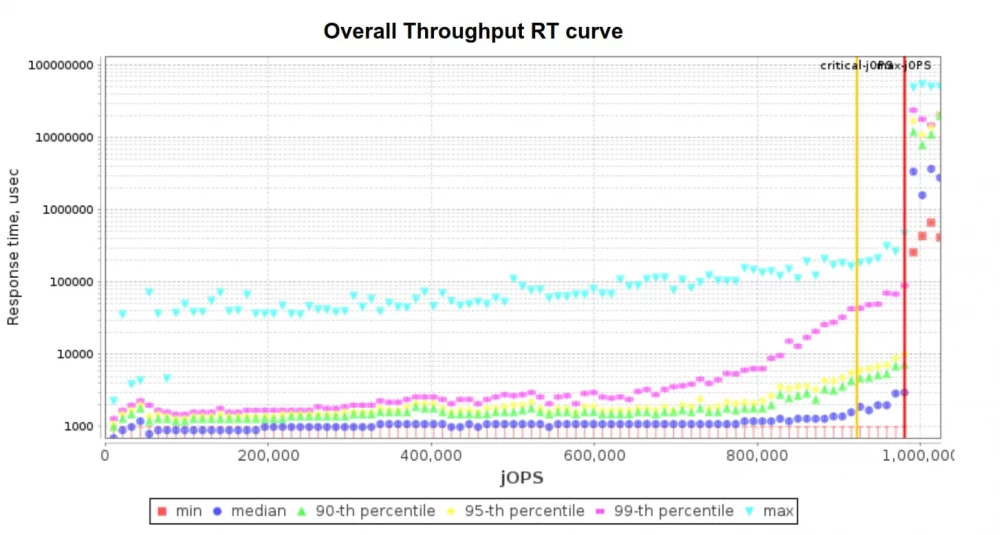

When it comes to real business applications, EPYC 9005 also shows impressive results. In the SPECjbb2015-MultiJVM benchmark, dual-socket servers based on the 192-core AMD EPYC 9965 achieve 2.2 times more critical-jOPS than servers based on the 64-core Intel Xeon 8592+.

For MySQL workloads based on the TPC-C benchmark, dual-socket servers based on 192-core AMD EPYC 9965 provide up to 2.9 times more transactions per second compared to 64-core Intel Xeon 8592+.

AI – an attempt to jump on the departing train?

It is worth noting how AMD positions its new processors for artificial intelligence tasks. EPYC 9005 does not merely support AI computing, it is pitched as a true foundation for building powerful AI systems. The ability to connect many specialized accelerators via PCIe 5.0, combined with a huge number of cores and fast memory, makes these processors an ideal choice for the infrastructure behind the most demanding AI applications.

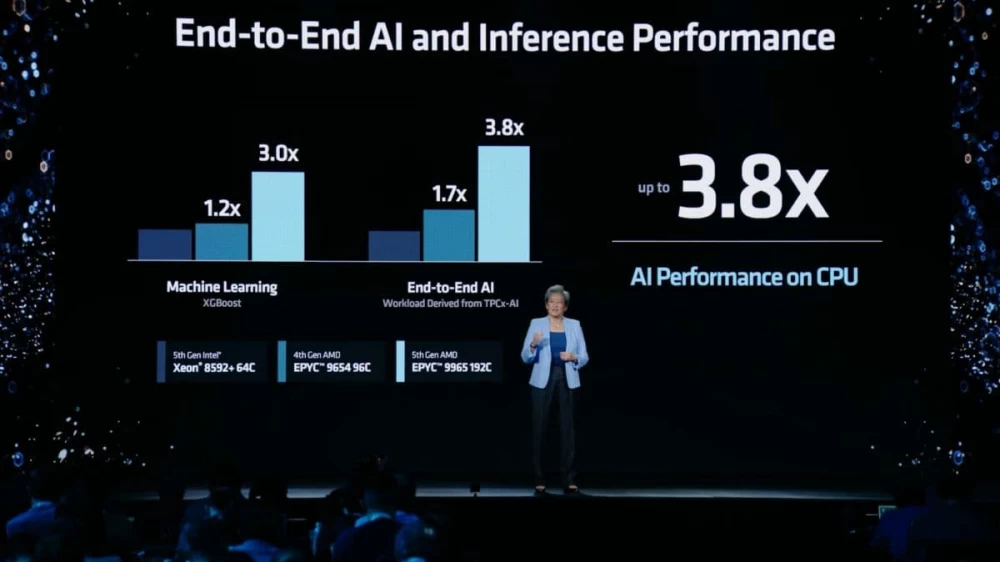

EPYC 9005 provides up to ~2.7x higher throughput than Intel Xeon 8592+ in AI inference tasks such as XGBoost on the Higgs boson dataset. According to AMD, this makes the processors an excellent choice for a wide range of AI tasks, from image classification to natural language processing.

Why this is needed, and by whom, when inference on GPUs or NPUs/TPUs far outpaces even the most core-rich processors, traditionally remains a mystery.

Optimization for GPU systems

However, the new processors are really good as additions to powerful graphics cards in AI-related tasks. AMD has optimized some EPYC 9005 models for use as host processors in GPU systems. For example, using two high-frequency AMD EPYC 9575F as a host for 8 GPU accelerators achieves ~15% faster training time compared to two Intel Xeon 8592+ when running Llama 3.1-8B.

Red path to innovation

AMD EPYC processors separate the CPU core blocks and the I/O functions into different dies, which can be developed on their own schedules and manufactured on processes suited to the tasks they perform. From generation to generation, the CPU dies have shrunk as photolithography has advanced. Today, Zen5 cores are manufactured on a 4 nm process, Zen5C cores on a 3 nm process, and the I/O die remains on the 6 nm process of the previous generation.

This approach is more flexible and dynamic than trying to build all processor functions on a single manufacturing technology. With a modular approach, AMD can mix and match CPU and I/O dies to create specialized processors that precisely meet workload requirements. They range from high-performance processors with 192 cores to processors for scalable systems that need only eight cores.

So, what do we have in the end?

AMD has once again proven that it can not only compete with Intel but also set the tone in the server processor market. The trend has remained the same as in previous generations, but the scale has increased – more transistors, more cores, more cache, but also more heat dissipation.

Well, now we wait for the new EPYC to start appearing in data centers around the world. And then, before you know it, they will reach our servers at ServerFlow. The main thing is not to forget to upgrade the air conditioning in the server room. Otherwise, at 0.5 kilowatts per processor, you may end up with a sauna instead of a server room.

The only mystery is how Intel will respond. And how soon: once again right before the release of the next EPYC, which will surpass its Xeon on all fronts? And will Intel even survive until the next EPYC is released? We invite everyone to the comments to discuss this!
