
Can Pictionary and Minecraft become intelligence tests for AI?

Hello, this is Yulia Rogozina, a business process analyst at Sherpa Robotics. Today I translated for you an article about how Paul Calcraft, a freelance AI technology developer, created an application in which two AI models can play a game similar to Pictionary with each other. One model draws doodles, and the other tries to guess what they depict.

Most standard AI benchmarks do not provide us with enough useful information. They often offer tasks that can be solved by simple memorization or cover topics that are not relevant to the real needs of users.

In response to this, some AI enthusiasts are starting to use games as a way to test AI's ability to solve non-standard tasks.

Paul Calcraft, a freelance AI developer, created an application in which two AI models play a game similar to Pictionary. One model draws pictures, and the other tries to guess what is depicted.

"I thought it was a lot of fun and possibly interesting in terms of the models' capabilities," Calcraft said in an interview with TechCrunch. "I was sitting at home on a cloudy Saturday and implemented it."

Calcraft's idea was inspired by a similar project by British programmer Simon Willison, who tasked the models with drawing a vector image of a pelican riding a bicycle. Willison, like Calcraft, chose a task that he believed would make the models "think" beyond the data they were trained on.

"The idea is to create a benchmark that cannot be 'cheated'," Calcraft said. "A benchmark that cannot be beaten by memorizing specific answers or simple patterns encountered during training."

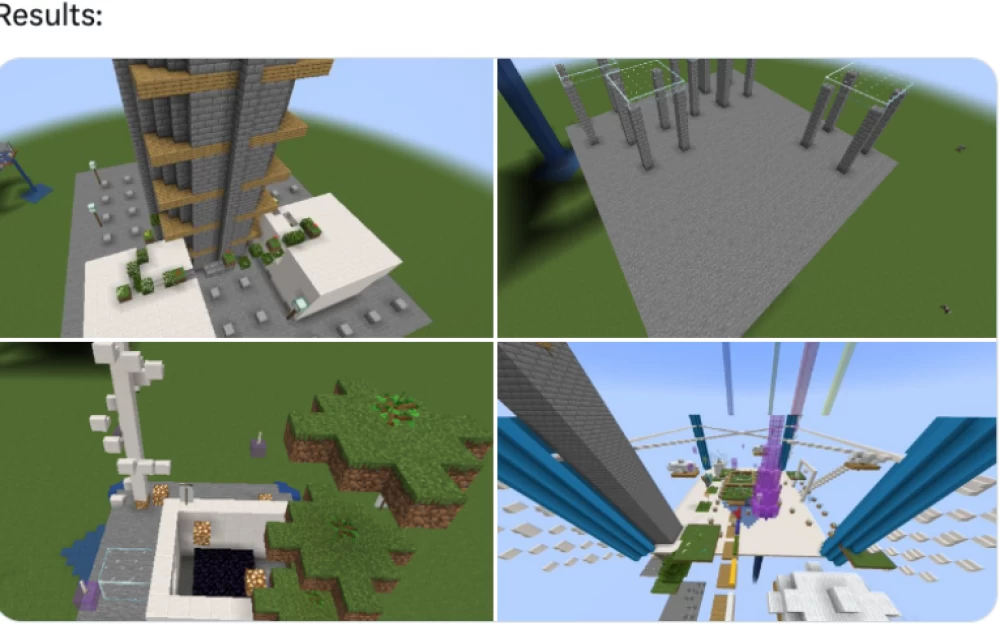

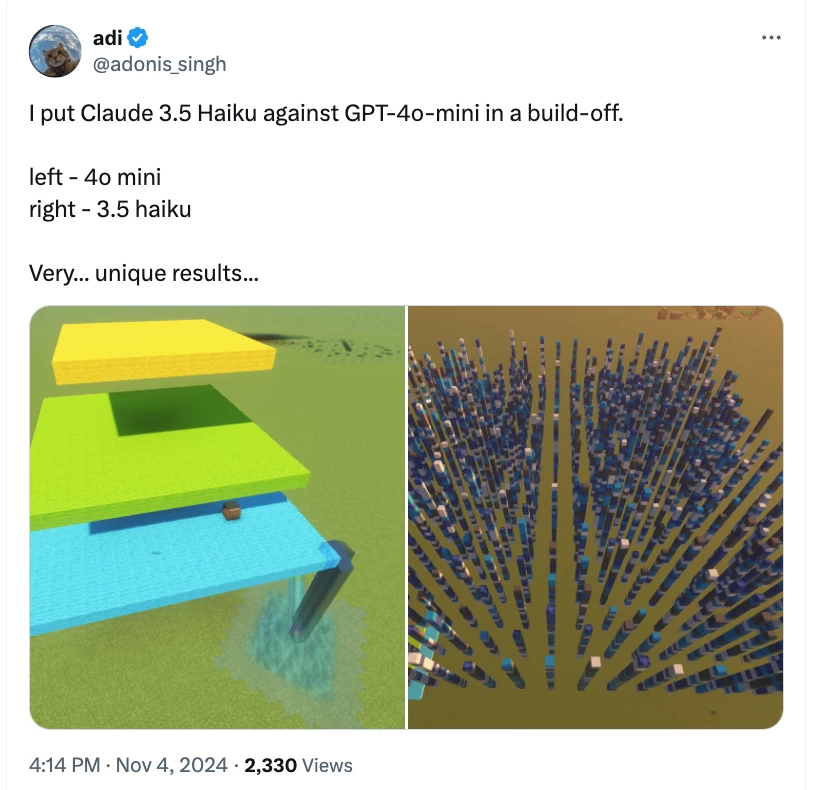

Minecraft also belongs to this category of hard-to-cheat benchmarks, according to 16-year-old Adonis Singh. He developed a tool called mc-bench which, similar to Microsoft's Project Malmo, gives a model control of a character in Minecraft and tests its ability to design structures.

"I believe Minecraft tests models for ingenuity and gives them more freedom of action," he said in an interview with TechCrunch. "It is not as constrained and not as saturated as other games for testing."

Using games to evaluate artificial intelligence is far from new; the idea has been around for decades. As early as 1949, mathematician Claude Shannon argued that games like chess are a worthy test for "smart" software. In later years, Alphabet's DeepMind developed models capable of playing Pong and Breakout, OpenAI trained AI to compete in Dota 2 matches, and Meta created an algorithm that could compete with professional Texas Hold'em players.

What has changed is that enthusiasts are now connecting large language models (LLMs), systems capable of analyzing text, images, and other data, to games in order to test how well they can solve logical problems.

Today there is a whole variety of LLMs, from Gemini and Claude to GPT-4, each with its own "character," so to speak. They "feel" different from one interaction to the next, a phenomenon that is difficult to quantify.

LLMs are also known for their sensitivity to how a question is phrased and for their overall unpredictability, which makes their behavior difficult to analyze, notes Calcraft.

Unlike text benchmarks, games offer a visual and intuitive way to assess model behavior and performance, adds Matthew Guzdial, an AI researcher and professor at the University of Alberta.

"You can think of each benchmark as a simplification of reality, focused on solving certain types of tasks, such as logic or communication," he says. "Games are just another way to do decision-making with AI, so people are using them like any other approach."

Those familiar with the history of generative AI will surely notice that Pictionary is similar to generative adversarial networks (GANs), where the generator model sends images to the discriminator model, which evaluates them.

Calcraft believes that Pictionary can demonstrate an LLM's ability to understand concepts such as shapes, colors, and prepositions (e.g., the difference between "in" and "on"). While he does not claim that the game is a reliable test of logical thinking, he emphasizes that winning requires strategy and the ability to understand clues, both of which models usually struggle with.

"I like the almost adversarial nature of playing Pictionary, which is reminiscent of a GAN, where there are two roles: one draws and the other guesses," he says. "The best artist is not the one who is more skillful, but the one who can most clearly convey the idea to other LLMs (including less fast and weaker ones!)."
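The scoring idea in that quote, where a drawer is judged by how many other models can guess its drawing, can be illustrated with a short sketch. This is not Calcraft's implementation: the function `score_drawers`, the `Drawer` and `Guesser` interfaces, and the stub "models" below are all hypothetical, and simple Python functions stand in for real model calls so the example runs without any API access.

```python
from typing import Callable, Dict, List

# Hypothetical interfaces (assumptions, not from the original app):
# a drawer turns a word into a drawing (here, SVG-like text),
# a guesser turns a drawing into a guessed word.
Drawer = Callable[[str], str]
Guesser = Callable[[str], str]


def score_drawers(
    drawers: Dict[str, Drawer],
    guessers: Dict[str, Guesser],
    words: List[str],
) -> Dict[str, float]:
    """Return, per drawer, the fraction of (word, guesser) pairs guessed correctly."""
    scores: Dict[str, float] = {}
    for drawer_name, draw in drawers.items():
        correct = 0
        total = 0
        for word in words:
            drawing = draw(word)
            for guesser_name, guess in guessers.items():
                if guesser_name == drawer_name:
                    continue  # a model does not guess its own drawing
                total += 1
                if guess(drawing).strip().lower() == word.lower():
                    correct += 1
        scores[drawer_name] = correct / total if total else 0.0
    return scores


# Stub "models" standing in for real LLM calls.
def clear_drawer(word: str) -> str:
    return f"<svg><!-- clear sketch of {word} --></svg>"


def vague_drawer(word: str) -> str:
    return "<svg><!-- scribble --></svg>"


def literal_guesser(drawing: str) -> str:
    marker = "clear sketch of "
    if marker in drawing:
        return drawing.split(marker)[1].split(" ")[0]
    return "unknown"


scores = score_drawers(
    {"clear": clear_drawer, "vague": vague_drawer},
    {"literal": literal_guesser},
    ["cat", "house"],
)
print(scores)
```

In a real setup the stubs would be replaced by calls to actual models, and exact string matching would likely be too strict a way to compare a guess with the target word.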

"Pictionary is a simplified task that has no immediate practical application," Calcraft warns. "Nevertheless, I believe that spatial perception and multimodality are critically important aspects for AI development, and LLM Pictionary can be the first step on this path."

Singh believes that Minecraft is a useful benchmark that can serve as an indicator of LLMs' reasoning abilities. According to him, "The results I got on the models I tested completely match how much I trust the model in matters related to logical thinking."

However, not everyone shares his opinion. Mike Cook, a research fellow at Queen Mary University of London specializing in artificial intelligence, does not consider Minecraft to be a particularly special tool for testing AI. "It seems to me that the fascination with Minecraft comes from people outside the games field, who may believe that since the game looks like the 'real world,' it is closely related to real-world thinking and action," Cook said in an interview with TechCrunch.

"From the perspective of problem-solving, Minecraft is not much different from video games like Fortnite, Stardew Valley, or World of Warcraft. They are just games with different wrappers that may seem closer to real life due to actions like building or exploring."

Indeed, even the most advanced AI systems for games do not adapt well to new environments and cannot quickly solve tasks they have not encountered before. For example, a model that excels at playing Minecraft is unlikely to demonstrate the same results in Doom, which requires completely different skills.

"I think all that Minecraft can really offer in terms of AI are extremely weak reward signals and a procedural world that creates unpredictable challenges," Cook continued. "But it is not really any more 'realistic' in this regard than any other video game."

Nevertheless, watching LLMs build castles in Minecraft is still fascinating.
