What AI agents are: how they work and why they are important

An AI agent is an intelligent system that not only responds to requests but also sets goals, plans actions, and adapts as it works. Unlike traditional chatbots that execute strictly defined commands, an agent operates autonomously, much like a virtual employee. Upon receiving a task, it breaks it down into steps, selects tools, analyzes the results, and adjusts its strategy if necessary.

Why are they needed?

People handle creative tasks well, but fall behind when it comes to processing large volumes of data, working around the clock, and keeping track of many variables. AI agents solve four key problems:

Unify disparate systems. For example, instead of manually collecting data from various sources (security logs, client databases), the agent links them and provides a ready-made report.

Operate 24/7. They automatically resolve IT system failures, process orders, or monitor sensor metrics tirelessly.

Handle complex scenarios. They manage power grids during emergencies, forecast demand for goods considering dozens of factors, or optimize logistics in real time.

Adapt instantly. For example, anti-fraud systems in banks update rules between transactions to block new fraud schemes.

How AI agents differ from regular AI models

Regular AI models work on a "query-response" basis: the user types a prompt and the system generates an answer, but by default it does not retain context between requests and does not act independently.

An AI agent is a step up. It:

breaks down tasks into steps and adjusts strategy independently;

retains context (user preferences, past mistakes);

integrates with APIs, databases, and other programs;

learns from its own actions.

For instance, you ask an AI model: “What’s the weather in Moscow?” → it replies in text. But with an AI agent, you say: “Book tickets to Moscow for this weekend” → it finds flights, compares prices, books tickets, and adds the event to your calendar.

Simply put: AI agent = AI model + connecting code + tools + active memory.
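The formula above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: `fake_model`, the tool functions, and the hard-coded flight data are all hypothetical stand-ins. A real agent would call an LLM and real booking and calendar APIs.

```python
from dataclasses import dataclass, field
from typing import Callable


def fake_model(task: str) -> list[str]:
    """Hypothetical stand-in for an LLM: turns a task into an ordered list of tool names."""
    if "book tickets" in task.lower():
        return ["find_flights", "pick_cheapest", "book", "add_to_calendar"]
    return []


# Tools: plain functions that read and update a shared working state.
def find_flights(state: dict) -> dict:
    # Hard-coded sample data instead of a real flight search.
    state["flights"] = [{"id": "SU100", "price": 120}, {"id": "S7200", "price": 95}]
    return state


def pick_cheapest(state: dict) -> dict:
    state["choice"] = min(state["flights"], key=lambda f: f["price"])
    return state


def book(state: dict) -> dict:
    state["booking"] = f"booked {state['choice']['id']}"
    return state


def add_to_calendar(state: dict) -> dict:
    state["calendar"] = f"trip on flight {state['choice']['id']}"
    return state


@dataclass
class Agent:
    model: Callable[[str], list[str]]
    tools: dict[str, Callable[[dict], dict]]
    memory: list[str] = field(default_factory=list)  # active memory: steps already taken

    def run(self, task: str) -> dict:
        """Connecting code: ask the model for a plan, then execute it step by step."""
        state: dict = {"task": task}
        for step in self.model(task):
            state = self.tools[step](state)
            self.memory.append(step)
        return state


agent = Agent(
    model=fake_model,
    tools={f.__name__: f for f in (find_flights, pick_cheapest, book, add_to_calendar)},
)
result = agent.run("Book tickets to Moscow for this weekend")
print(result["booking"])  # booked S7200
print(agent.memory)
```

Each part of the formula maps to one piece of the sketch: `fake_model` is the model, `Agent.run` is the connecting code, the four functions are the tools, and `memory` records what has been done.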

How artificial intelligence agents work

Autonomous systems complete complex multi-stage tasks based on four components:

Personalized data (context and knowledge).

Memory (storing and using information).

“Sense → Think → Act” cycle (decision-making logic).

Tools (interaction with external services).

Let’s break down each point in more detail.

Personalized data

An agent can’t function without information. Depending on the task, data can be integrated in different ways.

Retrieval-Augmented Generation (RAG). The agent searches for information in connected knowledge bases (documents, websites, corporate repositories). Data is converted into numerical vectors (embeddings) for fast searching. When a query comes in, the system finds relevant fragments and inserts them into the model’s context.

Prompts. Context is manually added to the request. For example: "You are a lawyer at Company X. The client is asking about contract termination. Respond based on the Civil Code of the Russian Federation, Article 450."

Fine-tuning. The base model (such as GPT) is further trained on specialized data, which builds the knowledge into the model itself. For example, a medical AI can be trained on medical histories and scientific publications, and a banking chatbot can be trained on the bank's credit rates and conditions.

Training a specialized model. If the task is very specific (such as analyzing X-ray images), a separate neural network is created.

Custom model from scratch. The company develops its own neural network for specific needs. Used in rare cases (for example, military or scientific AI).
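The first two approaches above, RAG and prompts, are often combined: fragments are retrieved by vector similarity and then inserted into the prompt. The sketch below illustrates the idea with a toy bag-of-words "embedding" and cosine similarity. The knowledge base contents and the function names `embed`, `retrieve`, and `build_prompt` are assumptions made for illustration; real systems use a trained embedding model and a vector index.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; a real one would hold chunks of actual documents.
KNOWLEDGE_BASE = [
    "A contract may be terminated by agreement of the parties.",
    "Mortgage rates depend on the down payment and the loan term.",
    "Refunds for cancelled flights are issued within ten days.",
]


def embed(text: str) -> Counter:
    """Toy embedding: a word-count vector (real systems use a trained model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k fragments most similar to the query."""
    q = embed(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str) -> str:
    """Insert the retrieved fragments into the model's context."""
    context = "\n".join(retrieve(query))
    return f"You are a lawyer at Company X.\nContext:\n{context}\n\nQuestion: {query}"


print(build_prompt("How can the contract be terminated?"))
```

The resulting string is what would be sent to the language model. Swapping the toy `embed` for a real embedding model changes the quality of the search, not the overall flow.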

Memory — so the agent can remember the context

Without memory, the agent would start from scratch every time. With memory, it adapts to user behavior and avoids repeated questions such as "Are you still not eating nuts?"

There are three main types of memory.

Vector databases. Information is stored as numbers (vectors). The search is not by keywords, but by meaning. For example, the user says: "I don't eat meat" → the agent saves this in a vector DB and automatically excludes meat dishes when ordering food. Used in personal assistants and recommendation systems.

Knowledge graphs. Data is stored as connections: "Object → Relationship → Another object". For example: "Yulia → works at → Company X", "Company X → uses → CRM system Y". Used to find complex dependencies (e.g., in medicine) and to analyze social networks (identifying communities).

Action logs. The agent records all its steps. If something goes wrong, it analyzes errors. For example, the payment did not go through → the agent tries another payment method. API error → reverts changes and notifies the developer.
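Two of the memory types above are easy to sketch with plain Python data structures. The example below is an illustration under stated assumptions, not a real storage layer: the triples come from the text, while `pay`, `pay_with_fallback`, and the rule that the card payment always fails are hypothetical and exist only to show the fallback.

```python
# Knowledge graph as a set of (subject, relation, object) triples.
TRIPLES = {
    ("Yulia", "works at", "Company X"),
    ("Company X", "uses", "CRM system Y"),
}


def objects(subject: str, relation: str) -> set[str]:
    """All objects linked to `subject` by `relation`."""
    return {o for s, r, o in TRIPLES if s == subject and r == relation}


def systems_used_by_employer(person: str) -> set[str]:
    """Two-hop query: person -> employer -> systems the employer uses."""
    return {
        system
        for company in objects(person, "works at")
        for system in objects(company, "uses")
    }


# Action log: every attempt is recorded so that a failure can trigger a fallback.
action_log: list[dict] = []


def pay(method: str) -> bool:
    """Hypothetical payment call; the card always fails here to demonstrate the fallback."""
    ok = method != "card"
    action_log.append({"action": "pay", "method": method, "ok": ok})
    return ok


def pay_with_fallback(methods: list[str]):
    """Try each payment method in order; return the first that succeeds, else None."""
    for method in methods:
        if pay(method):
            return method
    return None


systems = systems_used_by_employer("Yulia")
used = pay_with_fallback(["card", "wallet"])
print(systems)     # {'CRM system Y'}
print(used)        # wallet
print(action_log)
```

The two-hop query shows why graphs suit dependency questions: the answer is not stored anywhere directly but follows from chaining relationships.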

Sensing → Thinking → Acting Cycle

This is the engine of the agent. It works in an endless loop:

Sensing. The agent receives data:

text request ("Order a pizza");

sensor signal (temperature dropped below zero);

new email or notification.

Thinking. The AI agent analyzes the context, breaks the goal into sub-tasks, and assesses risks. For example, the task is: "Deploy a new version of the app" → check tests; make sure servers are free; prepare a rollback in case of failure.

Acting. The agent sends a reply to the user, calls an API (for example, payment via YooKassa), or launches another process.

Example of a DevOps agent:

Senses: new code in the repository.

Thinks: checks tests and dependencies.

Acts: deploys the update or reports an error.
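The DevOps example above can be written as a small loop. This is a simplified sketch: the event queue, the `tests_pass` and `deps_ok` fields, and the returned messages are assumptions for illustration. A real agent would receive webhooks from the repository and call a CI/CD API in the acting step.

```python
from collections import deque

# Hypothetical event queue standing in for repository notifications.
events = deque([
    {"commit": "a1b2c3", "tests_pass": True, "deps_ok": True},
    {"commit": "d4e5f6", "tests_pass": False, "deps_ok": True},
])


def sense():
    """Sense: take the next event, or None when nothing is pending."""
    return events.popleft() if events else None


def think(event: dict) -> str:
    """Think: choose an action based on the checks attached to the commit."""
    if event["tests_pass"] and event["deps_ok"]:
        return "deploy"
    return "report_error"


def act(decision: str, event: dict) -> str:
    """Act: a real agent would trigger a deployment here; this sketch returns a message."""
    if decision == "deploy":
        return f"deployed {event['commit']}"
    return f"error reported for {event['commit']}"


def run_agent() -> list[str]:
    results = []
    # A live agent would block and wait for new events instead of stopping on an empty queue.
    while (event := sense()) is not None:
        results.append(act(think(event), event))
    return results


results = run_agent()
print(results)  # ['deployed a1b2c3', 'error reported for d4e5f6']
```

Keeping the three stages as separate functions makes each one replaceable: the rule in `think` could later be swapped for a call to a language model without touching `sense` or `act`.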

Tools — how the agent interacts with the world

The agent isn't limited to text. It can:

Send emails (Mail.ru, Yandex.Mail API).

Work with databases (SQL queries).

Control applications (Bitrix24, VK Teams, 1C).
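One common way to wire up such tools is a registry that maps tool names to functions, so the agent can call whatever the model selects. The sketch below uses Python's built-in `sqlite3` module for the database tool. The `orders` table, the `outbox` list that replaces a real mail API, and the names `query_total`, `send_email`, and `call_tool` are all hypothetical.

```python
import sqlite3

# In-memory database with a hypothetical `orders` table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer, total) VALUES (?, ?)",
    [("Yulia", 30.0), ("Ivan", 12.5), ("Yulia", 7.5)],
)

outbox: list[dict] = []  # stands in for a real mail API


def query_total(customer: str) -> float:
    """Database tool: a parameterized SQL query that sums a customer's orders."""
    row = conn.execute(
        "SELECT COALESCE(SUM(total), 0) FROM orders WHERE customer = ?", (customer,)
    ).fetchone()
    return row[0]


def send_email(to: str, body: str) -> str:
    """Email tool: records the message instead of actually sending it."""
    outbox.append({"to": to, "body": body})
    return "queued"


TOOLS = {"query_total": query_total, "send_email": send_email}


def call_tool(name: str, **kwargs):
    """Dispatch a tool call by name, as an agent would after the model picks a tool."""
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**kwargs)


total = call_tool("query_total", customer="Yulia")
status = call_tool("send_email", to="yulia@example.com", body=f"Your total is {total}")
print(total, status)  # 37.5 queued
```

The query passes the customer name as a parameter rather than building SQL from text, which matters when the arguments originate from model output.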

The history of agents began back in the 1950s

1950s: Georgetown Experiment (USA) vs. machine translation in the USSR

In 1954, the famous Georgetown Experiment took place in the USA — the first public demonstration of machine translation, where the IBM 701 system successfully translated more than 60 Russian sentences into English.

Meanwhile, in the USSR, algorithms for machine translation were being developed under the leadership of A. A. Lyapunov — automatic translation of scientific texts from French to Russian using dictionaries and grammatical rules. This work laid the foundations for natural language processing (NLP).

1970s: MYCIN (USA) vs. DIALOG (USSR)

While MYCIN in the USA was diagnosing medical conditions and recommending antibiotics, in the USSR in the 1970s academician Viktor Glushkov led the development of the DIALOG expert system — a revolutionary AI project for its time.

It could hold meaningful conversations in Russian, analyze complex queries, find information in databases, and was designed for medical diagnostics. In 1976, a portable diagnostic device for the BESM-4M computer based on DIALOG was created, which could learn during operation.

1980s: XCON (USA) vs. SIGMA (USSR)

The American XCON configured DEC VAX computers. Soviet SIGMA, developed at the Institute of Cybernetics of Ukraine, was a comprehensive computer-aided design (CAD) system for creating complex technical devices — from industrial machines to electronic circuits.

It used similar principles of expert rules but was applied in Soviet industry.

1990s: Deep Thought (USA) vs. Kaissa (USSR)

Deep Thought, a chess computer that began at Carnegie Mellon University in the late 1980s and continued at IBM, was the predecessor of the famous Deep Blue.

But before it, there was Kaissa, the world's first computer chess champion, created in the USSR in the 1970s.

Kaissa drew on 10,000 opening variations, discarded weak moves, and used bitboards. The program kept calculating during the opponent's turn, employed a "null move" heuristic for quick evaluation, and distributed its thinking time intelligently.

Later, Kaissa’s algorithms became the basis for commercial chess programs that were sold in the West.

2010s: Watson/AlphaGo (USA/UK) vs. Rostec and Yandex (Russia)

IBM’s cognitive system Watson won the popular American quiz show Jeopardy! (the Russian equivalent is “Svoya Igra”), where it analyzed questions in real time, processed natural language, and found answers in data arrays.

DeepMind's AlphaGo sensationally beat a world champion at the strategy game Go, revealing new possibilities for machine learning.

Russian developers also made progress. In 2017 Yandex released the voice assistant “Alisa”, which supported complex dialogues in Russian and understood conversation context.

Rostec actively implemented advanced AI technologies in the defense sector, developing autonomous combat drones and next-generation intelligent air defense systems.

2020s: GPT-3 (USA) vs. ruGPT-3 (Russia)

OpenAI's GPT-3 (2020) is a language model with 175 billion parameters. ruGPT-3 by Sber (2021) is its Russian-language counterpart, used for text generation and data analysis. Later came YaGPT by Yandex and a specialized cybersecurity AI by Kaspersky Lab, capable of detecting unknown threats without predefined signatures.

2024: PharmaAI (Global) vs. RFarmAI

The PharmaAI platform uses machine learning algorithms to predict biochemical interactions and optimize clinical trials. The system speeds up drug development by 10–100 times.

In Russia, the technology startup "Ensil" developed a platform based on AI that reduced the search and testing of promising molecular compounds from several months to just a few days. At the same time, the "BioAI TSU" system analyzes more than 10,000 compounds daily for antimicrobial activity, helping to combat antibiotic resistance.

Future trends

AI agents are evolving rapidly. Here are the technologies that will define their future.

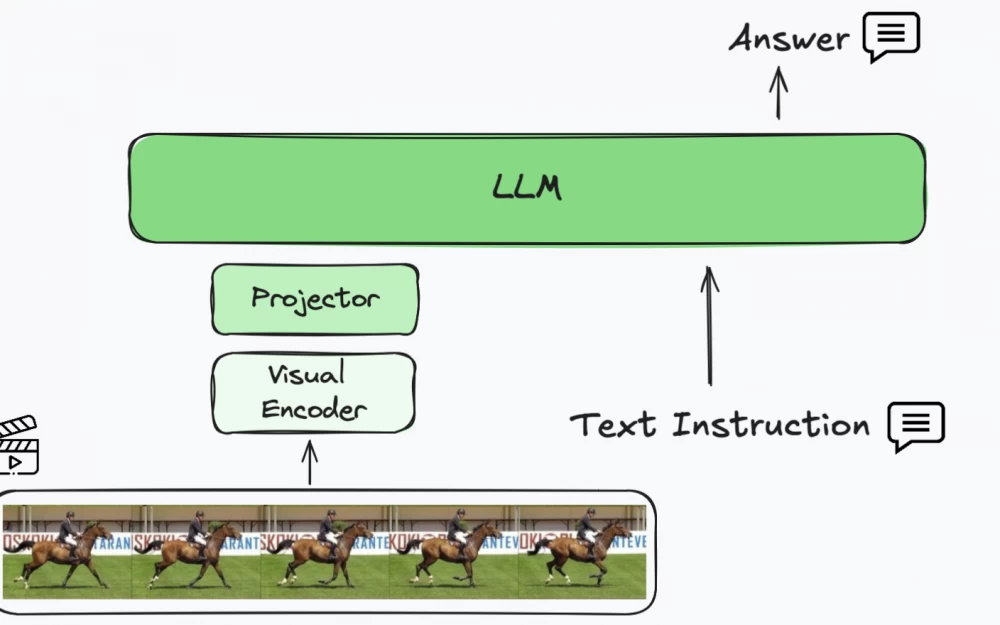

Multimodality. Combining text, audio, and visual data for deeper understanding.

Self-improvement. Today, AI agents still require human fine-tuning, but in the future they will be able to:

automatically find errors in their work and correct them;

optimize code for greater efficiency;

generate new training data (for example, create simulations for training).

Edge AI. Currently, most AI systems run in the cloud (data is sent to the server → processed → returned). In the future, computations will happen directly on the device.

Explainable AI (XAI). AI currently often works as a "black box": we see the result but don't understand how it was obtained. In the future, agents will be able to:

Explain their conclusions ("I recommend this course because you watched similar lectures").

Show logic in an understandable form (graphs, chains of reasoning).

Warn about doubts ("I'm not sure about this diagnosis, additional tests are needed").

Agent collaboration. Several AI agents will work together (for example, one analyzes the market, another negotiates, a third controls the budget).

Conclusion

Previously, agents operated only on strict rules written by hand. Training took months and required extensive manual fine-tuning. Now the necessary technological base has appeared: powerful LLMs, distributed computing, and a developed API ecosystem. A developer can create an agent that understands new situations on its own, does not require manual programming for every rule, and learns faster and more cheaply.
