- AI

- A

If you need to generate synthetic data — here's a selection of open-source solutions

We’ll talk about reducing costs of working with data at the webinar on August 13. Today we’ll discuss open tools that unlock new opportunities for experiments and working with ML. Below is a selection of four solutions on this topic — we’ll break down their features and usage examples.

Datasets without Routine

Bespoke Curator is a Python library under the Apache 2.0 license that simplifies building scalable pipelines for generating synthetic data (including subsequent training on this data). The project was launched in January 2025 by Bespoke Labs, a startup developing AI tools for working with LLMs. In addition to data generation, the library helps automate their cleaning and formatting processes — optimized for asynchronous operations.

Bespoke Curator can work with the APIs of providers such as OpenAI and Anthropic through LiteLLM and vLLM. One of the key advantages of the system is automatic caching of generated responses. This mechanism protects against failures when processing large volumes of data — you can resume generation from where it was interrupted (instead of starting over). At the same time, caching allows building multi-stage pipelines, reusing data from previous stages: developers demonstrated this feature using the classic Hello World example. When rerunning the code below, the answer is taken from the cache, not requested from the LLM.

from bespokelabs import curator

llm = curator.LLM(model_name="gpt-4o-mini")

poem = llm("Write a poem about the importance of data in AI.")

print(poem.dataset.to_pandas())Additionally, Curator includes CodeExecutor — a built-in tool from Bespoke Labs. It is suitable for generating synthetic datasets with code or developing automated tests.

Thanks to Bespoke Curator, datasets such as Bespoke-Stratos-17k, OpenThoughts-114k, and s1K-1.1 were created, suitable for training reasoning systems and containing math problems, code snippets, and even puzzles. Additionally, the tool was used to generate OpenThoughts2-1M, used for training the OpenThinker2-32B model.

Documentation includes setup guides and reference materials with code examples for generating datasets. It describes parameters, classes, and methods for working with language model APIs, configuring backends, and multimodal scenarios.

Scalable Pipelines

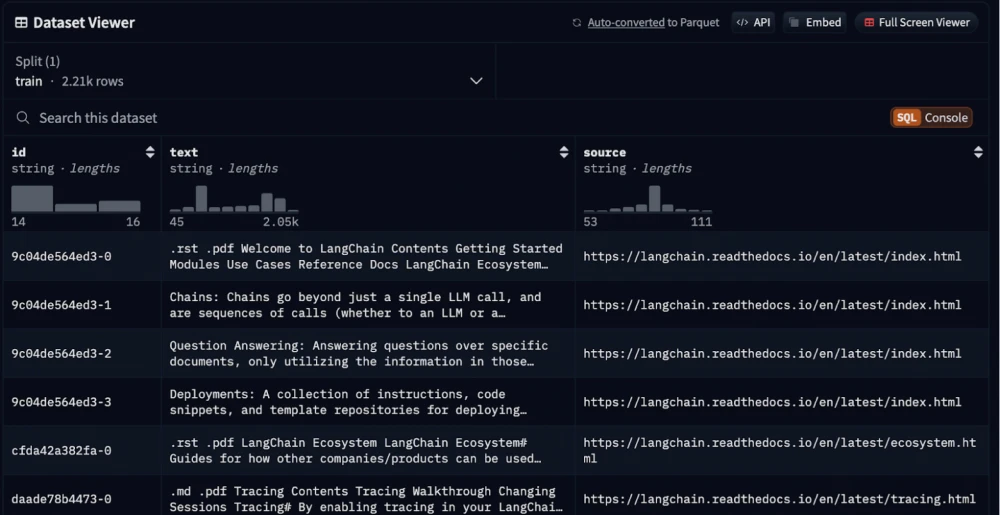

Distilabel— a framework for generating structured synthetic datasets with an Apache 2.0 license. It was developed by Argilla [specializing in AI tools] in 2023. There is integration with LLMs from OpenAI, Anthropic, and other providers via a single API.

As for the required dependencies, Distilabel relies on the libraries Outlines and Instructor. It also uses the Ray framework for scaling workloads and implementing distributed computing, and the Faiss library for finding similar vectors (nearest neighbors), optimized for working with large datasets.

With Distilabel, a dataset OpenHermesPreference was created with a million AI system preferences [“preference” is the choice a neural network makes when responding to prompts]. The framework was also used to create the dataset Intel Orca DPO and the dataset haiku DPO for generating Japanese haikus — a traditional three-line poetic form.

If you want to learn more about this tool or try it out in practice — the official documentation can be a good starting point. It contains installation and setup instructions, as well as a large number of how-to guides for generating synthetic data and more.

Safe Synthetic Data

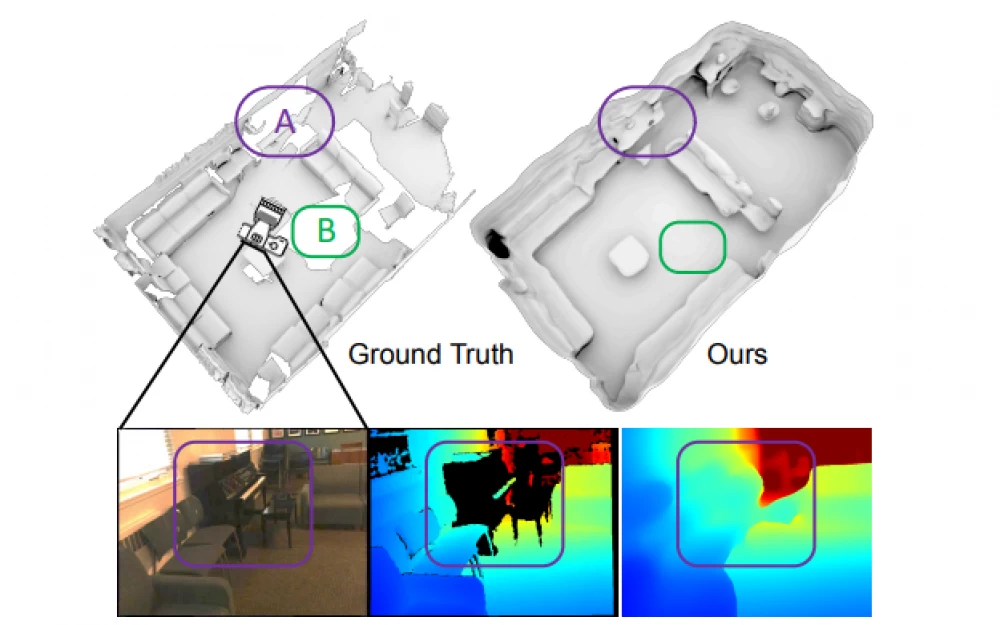

mostlyai— is a Python library under the Apache 2.0 license for generating anonymized synthetic data. It was developed in 2023 by the company MOSTLY AI, which specializes in datasets for machine learning and software testing.

The project is primarily aimed at organizations developing AI systems. Specifically, it can be used to build a synthetic dataset based on a table of customer data (e.g., age, region, transaction history). It looks realistic but will be cleansed of any real personal data.

The foundation of tabular models is the high-performance framework TabularARGN for processing mixed data sets, also proposed by engineers from MOSTLY AI. As the authors state, it allows generating millions of synthetic records in a few minutes (even in CPU-based computing environments). The default language model used is an LSTM without pre-training (LSTMFromScratch-3m).

The documentation for the project is quite comprehensive and covers working with tables, time series, text, as well as environment setup: using Docker and isolated environments without internet access. All this comes with code examples and step-by-step guides for a quick start.

Autopilot for LLM

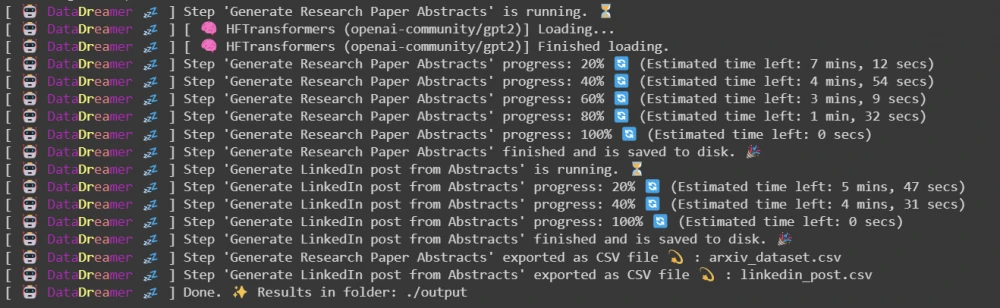

DataDreamer — another open Python library that emerged in 2024. This is an academic project developed by researchers from the Universities of Pennsylvania and Toronto. Their goal was to simplify synthetic dataset generation and improve research reproducibility with LLMs.

The library allows running multi-step pipelines using open models or commercial LLMs available via API. DataDreamer integrates with the Hugging Face Hub to upload datasets and publish results, automatically generating data and model cards with metadata. The tool is distributed under the MIT license. The documentation provides installation instructions, code examples, and scenarios for generating synthetic datasets.

The project highlights include a convenient API, integration with Hugging Face, and automatic caching, making ML research easier.

We'll discuss more about data work on August 13th — join us.

Write comment