- AI

- A

The impact of software and accelerator architecture on performance

A few years ago, at my previous job, I had an interesting discussion with a colleague from the microelectronics department. His idea was that the performance in neural network inference on NVIDIA's GPGPU surpasses our solution due to the use of a more advanced process technology, higher clock frequencies, and a larger die area. As a programmer, I couldn't agree with this, but at that time no one had the time or desire to test this hypothesis. Recently, in a conversation with my current colleagues, I remembered this discussion and decided to see it through. In this article, we will compare the performance of the NM Card module from NTC Module and the GT730 graphics card from NVIDIA.

Disclaimer

All information is obtained from open sources, open documentation, and/or conference reports.

Comparison of chip characteristics

The NM Card module is based on the K1879VM8YA processor, also known as NM6408, manufactured at the TSMC factory using 28 nm process technology in 2017. The die area is 83 mm2, contains 1.05 billion transistors, and the power consumption does not exceed 35 W [1]. The chip includes a PCIe 2.0 x4 controller and a DDR3 memory interface. The peak (theoretical) performance is 512 GFLOPS in fp32 data format. The NMC4 processor cores operate at a clock frequency of 1 GHz.

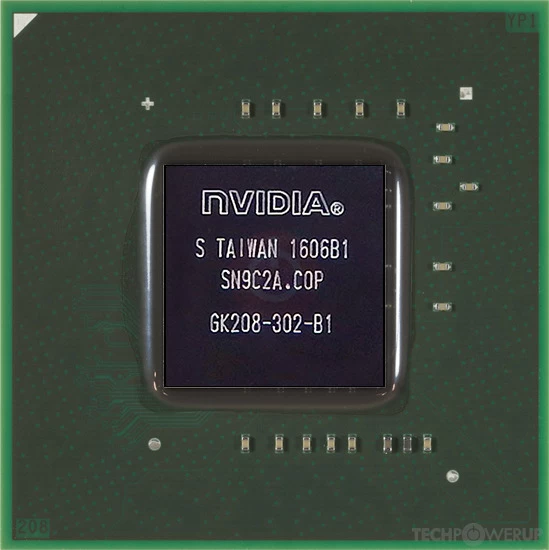

The closest analogs of this chip appear to be two graphics processors from NVIDIA: GK208B of the updated Kepler generation for discrete graphics cards [2] and GM108S of the Maxwell generation for the laptop segment [3]. Both chips are almost identical, released in March 2014. Since the GM108S is only available as part of laptops, and their price on the secondary market is too high for simple curiosity, the first option was chosen. However, it is worth noting that Maxwell is a newer architecture, supported by a more up-to-date software stack and potentially capable of achieving higher performance.

To avoid duplicating the text description, the comparison of chip characteristics is summarized in the table:

K1879VM8YA | GK208B | |

Factory and process technology | TSMC, 28nm | TSMC, 28nm |

Die area, mm2 | 83 | 87 |

Number of transistors, billion | 1.05 | 1.02 |

Maximum power, W | 35 | 23 |

PCIe controller | Gen 2, x4 | Gen 2, x8 |

Memory interface | DDR3 | DDR3* |

Peak performance, GFLOPS | 512 | 692.7 |

Frequency, MHz | 1000 | 902 |

* — GDDR5 memory is also supported | ||

It can be noted that the chips are almost identical in characteristics. However, the GK208B has a certain flexibility, so it is important to choose the right graphics card for a correct comparison.

Comparison of modules

As a module based on NM6408, as already mentioned, the NM Card was chosen [4]. In terms of performance, all modules with a single NM6408 show identical performance, which is provided in the documentation for the inference framework from Module called NMDL [5], which I will refer to later.

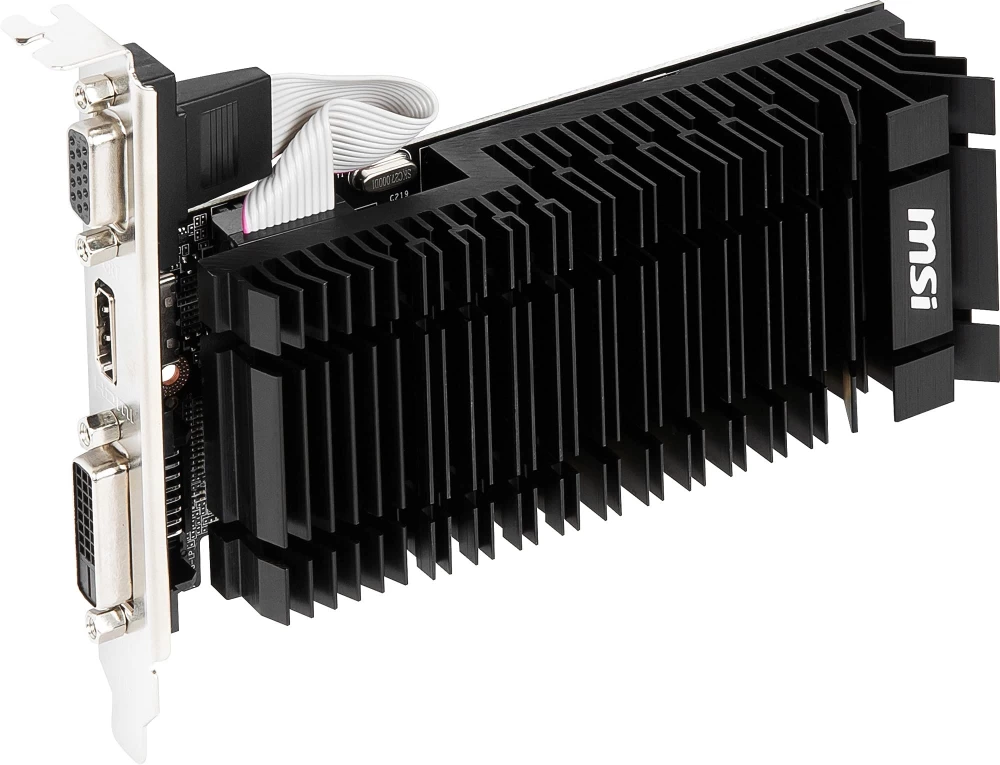

After a couple of days of searching through local flea markets, I managed to find an MSI GT 730 (N730K-2GD3H/LPV1) graphics card for 14 euros [6], in Russia at the time of writing this article it can be found on Avito for 500-1$0.00. The graphics card was manufactured in 2016 and is quite close to the NM Card module. Both cards have passive cooling, however, the GT730 has only 2 GB of memory compared to 5 GB of the NM Card. This affected the research by limiting the batch size, which potentially limited the peak performance for several networks. The second difference is in the standard frequency: 902 MHz versus 1000 MHz for the NM6408, but it can be increased in Afterburner with one action. After that, the peak performance will be 768 GFLOPS, which corresponds to a 1.5 times difference relative to the NM6408.

Test bench and software

As a test bench, I got a computer with very modest specifications: i3-7100 and 8 GB of DDR4 memory. However, since the performance of the graphics card is being measured, this should not have a significant impact. Due to the rather old architecture, the latest version of CUDA that works with this card is 11.6. The modern onnxruntime comes with a dependency on CUDA 12.x, for CUDA 11.x you need to build onnxruntime yourself.

As a result, to get a working version of onnxruntime with cuDNN and TensorRT, the following versions of the components were found and selected:

Component | Version |

onnxruntime | 1.14 |

CUDA | 11.6.2 |

cuDNN | 8.7.0 |

TensorRT | 8.5.3.1 |

A simple program using onnxruntime was written as a benchmark, which loaded the model, ran inference with different batch sizes, and measured execution time over 10 seconds, after which the average execution time was taken. For the sake of experiment purity, an attempt was made to use reference models as much as possible, which are distributed as additional data to the NMDL framework.

Model Name | Reference Model Used | Comment |

alexnet | + | |

inception_229 | + | |

resnet-18 | - | Incorrect model parameter |

resnet-50 | + | |

squeezenet | - | Incorrect model parameter |

yolov2-tiny | - | Layer size mismatch within the model |

yolov3-tiny | + | |

yolov5s | + | |

yolo3 | + | |

inception_512 | + | |

unet | + | |

yolo5l | - | Model not provided |

Data Processing Modes

NM6408 can operate in two modes: multi-unit and single-unit. To understand these two modes, it is necessary to briefly describe the chip architecture. NM6408 contains four independent computing clusters, each of which contains four NMC4 cores and one arm control core. Each cluster has its own DDR3 controller, to which 1 GB of memory is connected. In addition to these four clusters, there is an additional (control) arm core with its own DDR3 controller and an additional gigabyte of memory. All clusters have direct access to each other's memory, but this access is limited to the first 512 MB of memory, which is caused by the use of a 32-bit address space.

The single-unit mode represents the independent operation of each cluster. In the case of the same model, this can be represented as working with a batch size of 4 and in data parallelism mode.

In multi-unit mode, the common input tensor is processed simultaneously on all four clusters. This can also be represented as working with a batch size of 1 and in spatial parallelism mode.

The main difference between the NM6408 and traditional GPGPU operation is that in the former case, the entire model execution graph is on the device and runs with minimal host device involvement. To some extent, this is similar to how modern NPUs work. At the same time, GPGPUs work with so-called kernels, i.e., individual layers. This approach allows for better distribution of work across multiple parallel computing devices, but more on that another time.

In this study, the use of traditional GPGPU allows for greater flexibility in choosing batch size, thereby increasing device utilization.

Comparison results

An important difference in the measured quantities is that NMDL returns the execution time on the time scale of the arm control core without taking into account the transfer of input and output tensor data. Onnxruntime does not provide such a mechanism, so in this experiment, timestamps are taken on the CPU scale before and after running inference through onnxruntime. Thus, all other things being equal, the performance values obtained from onnxruntime will be lower.

For batch size 1, the following results are observed:

Model name | GT730 result, fps | NM Card result, fps | Acceleration, % |

alexnet | 42.05 | 12.6 | 233.7 |

inception_229 | 13.75 | 12.8 | 7.4 |

resnet-18 | 63.74 | 25 | 154.9 |

resnet-50 | 19.55 | 12.2 | 60.2 |

squeezenet | 149.8 | 74.4 | 101.3 |

yolov2-tiny | 25.96 | 21 | 23.6 |

yolov3-tiny | 29.14 | 27.3 | 6.7 |

yolov5s | 9.1 | 4.7 | 93.6 |

yolo3 | 2.89 | 3.7 | -21.9 |

inception_512 | 4.69 | 3.93 | 19.3 |

unet | 1.68 | 2 | -16 |

yolo5l | 1.66 | 1.39 | 19.4 |

Two out of 12 models were slower on the GT730, the performance of two models was on par, the rest received a significant performance boost. The average improvement relative to the NM Card was 56.8%

The next experiment consisted of finding the "optimal" batch size in powers of two, i.e., batch size of 1, 2, 4, ... 64.

Model Name | GT730 Result, fps | NM Card Result, fps | Acceleration, % | Optimal Batch Size |

alexnet | 259.06 | 13 | 1892.7 | 64 |

inception_229 | 17.42 | 20.3 | -14.1 | 32 |

resnet-18 | 98.66 | 47 | 109.9 | 64 |

resnet-50 | 27.68 | 20.6 | 34.3 | 64 |

squeezenet | 170.6 | 100 | 70.6 | 64 |

yolov2-tiny | 35.18 | 30.4 | 15.7 | 16 |

yolov3-tiny | 36.53 | 35.3 | 3.4 | 16 |

yolov5s | 9.39 | 5.7 | 64.7 | 16 |

yolo3 | 2.89 | 4.5 | -35.7 | 1 |

inception_512 | 5.24 | 5.44 | -3.6 | 8 |

unet | 1.7 | 2 | -14.5 | 2 |

yolo5l | 1.9 | 1.43 | 32.8 | 8 |

In this experiment, the fact that the GT730 has 3 GB less memory starts to show. At one time, versions of this video card with 4 GB of GDDR5 were released, but for the purity of the experiment, I specifically bought a card with DDR3. From these results, it can be seen that alexnet shows a colossal increase, if this model is excluded, the improvement is about 24%.

Conclusion

According to the measurements taken, it can be judged that the GT730 generally shows better performance with identical technological standards for the production of the system-on-chip and similar design solutions for the manufacture of the module. A certain interest could be represented by a comparison also with the GT940M based on the aforementioned GM108S, but the cost of laptops in working condition with this chip ranges from 100-150 euros, which somewhat cools my curiosity.

Write comment