AI hints in code: cognitive crutches or a productivity jetpack?

AI coding assistants have entered developers' daily work at remarkable speed. But what lies behind the convenience: real acceleration, or shallower thinking?

We explore how programming style, behavior, and code architecture change when part of the decision-making process is taken over by a neural network. Many examples, code snippets, subjective observations, and a bit of philosophy.

AI is more than just autocomplete

In 2021, when Copilot was just starting out, many saw it as simply a smarter autocomplete. Over time things became more complex: models started to understand context, "predict" intentions, write tests, generate SQL queries, and even advise on architectural decisions. That has had some unexpected side effects.

A simple example from a typical Python developer's day:

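```python
# Python
def get_top_customers_by_revenue(customers: list[dict], top_n: int = 5) -> list[dict]:
    # Keep customers with a truthy revenue, sort by revenue descending, take the first top_n
    return sorted(
        [c for c in customers if c.get("revenue")],
        key=lambda x: x["revenue"],
        reverse=True,
    )[:top_n]
```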

Copilot suggests almost this entire snippet as soon as you type `def get_top_customers`. But do you know why it offers `key=lambda x: x["revenue"]` rather than `operator.itemgetter`? Is that just convenience, or has the tool quietly replaced your own knowledge?
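For reference, here is roughly what the `itemgetter` variant looks like. It is a sketch: the sample `customers` data is made up for illustration. For a single key the two versions sort identically, so the choice is mostly a matter of style and of knowing the standard library.

```python
from operator import itemgetter


def get_top_customers_by_revenue(customers: list[dict], top_n: int = 5) -> list[dict]:
    # itemgetter("revenue") builds a callable equivalent to lambda c: c["revenue"]
    with_revenue = [c for c in customers if c.get("revenue")]
    return sorted(with_revenue, key=itemgetter("revenue"), reverse=True)[:top_n]


# Hypothetical sample data for illustration
customers = [
    {"name": "Acme", "revenue": 1200},
    {"name": "Globex", "revenue": 5300},
    {"name": "Initech"},  # no revenue field: filtered out
    {"name": "Umbrella", "revenue": 800},
]

top = get_top_customers_by_revenue(customers, top_n=2)
print([c["name"] for c in top])  # ['Globex', 'Acme']
```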

Cognitive biases: you think you're the one thinking

AI changes how we behave. We keep less context in our heads and more often accept suggestions that look "reasonable", even when they are wrong. Psychologists call this automation bias: the tendency to over-trust the output of an automated system and stop checking it critically.

When Copilot suggests a solution and you press Tab, you are agreeing with it, but on autopilot. Had you written it yourself, you might have thought it through differently.

Speed ≠ Quality

There is a class of tasks where speed really does win: CRUD, form logic, integrations. Here the AI boost acts like steroids: you write the same code, just faster. That is excellent, until the task calls for a non-standard approach.

Consider a typical example:

```go
// Go
// AI suggests this code for filtering

// Minimal User type, assumed here so the snippet compiles
type User struct {
	Name string
	Age  int
}

func FilterAdults(users []User) []User {
	var result []User
	for _, user := range users {
		if user.Age >= 18 {
			result = append(result, user)
		}
	}
	return result
}
```

Why do we use AI in programming at all?

The answer is simple: convenience. You open the editor, enable Copilot or Codeium, start typing, and a block of code appears that often matches your intent perfectly. It feels like magic, especially when you write something like this:

```javascript
// JavaScript
function groupBy(array, key) {
  return array.reduce((result, item) => {
    const groupKey = item[key];
    if (!result[groupKey]) {
      result[groupKey] = [];
    }
    result[groupKey].push(item);
    return result;
  }, {});
}
```

AI suggests this function almost immediately after you type `function groupBy`. It is convenient, fast, and efficient. But when every other developer uses near-identical snippets, a question arises: where is the personal style? Where is the ingenuity?
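The Python counterpart of the JavaScript `reduce` above is usually the `collections.defaultdict` idiom. It also shows why the "most likely" suggestion still needs review: `itertools.groupby`, which the name seems to invite, only merges consecutive items, so on unsorted input it produces one group per run. A sketch; the `people` records are made up for illustration.

```python
from collections import defaultdict
from itertools import groupby


def group_by(items: list[dict], key: str) -> dict:
    # Mirrors the JavaScript reduce: each key value maps to the list of items that have it
    result = defaultdict(list)
    for item in items:
        result[item[key]].append(item)
    return dict(result)


# Hypothetical sample records
people = [
    {"name": "Ann", "team": "web"},
    {"name": "Bob", "team": "data"},
    {"name": "Cid", "team": "web"},
]

print({k: [p["name"] for p in v] for k, v in group_by(people, "team").items()})
# {'web': ['Ann', 'Cid'], 'data': ['Bob']}

# Pitfall: itertools.groupby only merges adjacent equal keys,
# so this unsorted input yields three runs instead of two groups
runs = [k for k, _ in groupby(people, key=lambda p: p["team"])]
print(runs)  # ['web', 'data', 'web']
```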

The problem of an averaged style

Neural networks trained on large volumes of code do not think; they predict. That means they suggest not the best solution but the most likely one, which is often the most trivial.

Here's an example in Rust:

```rust
// Rust
fn fibonacci(n: u32) -> u32 {
    if n <= 1 {
        return n;
    }
    fibonacci(n - 1) + fibonacci(n - 2)
}
```

Is it beautiful? Yes. Efficient? No: every call spawns two more, so the running time grows exponentially with `n`. Even the models seem to "know" this and sometimes suggest memoization, but only if you explicitly say you need an optimized version.

```rust
use std::collections::HashMap;

// Memoized version: each value of n is computed once and cached in `memo`
fn fibonacci(n: u32, memo: &mut HashMap<u32, u32>) -> u32 {
    if let Some(&val) = memo.get(&n) {
        return val;
    }
    let result = if n <= 1 {
        n
    } else {
        fibonacci(n - 1, memo) + fibonacci(n - 2, memo)
    };
    memo.insert(n, result);
    result
}

fn main() {
    let mut memo = HashMap::new();
    println!("{}", fibonacci(30, &mut memo));
}
```

AI does not suggest this version right away. Why? Because it appears less often, so it is not the "average" answer. It requires understanding, not just syntax.
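The same idea carries across languages. In Python, for example, memoization ships with the standard library as `functools.lru_cache`, so the fix for the naive recursion is a single decorator; as with the Rust version, the hard part is knowing that the fix is needed at all.

```python
from functools import lru_cache


@lru_cache(maxsize=None)  # unbounded cache: each n is computed once
def fibonacci(n: int) -> int:
    if n <= 1:
        return n
    return fibonacci(n - 1) + fibonacci(n - 2)


print(fibonacci(30))  # 832040
```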

How AI impacts architectural decisions

This is where it gets interesting. A programmer working with an AI assistant often slips into a "build faster, ship faster" mindset. In the short term, that works. But in architecture, the winner is whoever thinks three or four steps ahead.

Example: generating API layers

AI can generate:

```python
# Python (FastAPI)
from fastapi import FastAPI

app = FastAPI()


@app.get("/users/{user_id}")
def get_user(user_id: int):
    # stub: echoes user_id and returns a hard-coded name
    return {"user_id": user_id, "name": "John Doe"}
```

But if you are designing an API meant to live for several years, you need schemas, validation, documentation, and decomposition. What does a deliberate developer do?

```python
# Python (FastAPI with Pydantic)
from typing import List

from fastapi import FastAPI, HTTPException
from pydantic import BaseModel

app = FastAPI()


class User(BaseModel):
    id: int
    name: str
    email: str


# In-memory stand-in for a real database (placeholder addresses)
users_db = [
    User(id=1, name="Alice", email="alice@example.com"),
    User(id=2, name="Bob", email="bob@example.com"),
]


@app.get("/users", response_model=List[User])
def list_users():
    return users_db


@app.get("/users/{user_id}", response_model=User)
def get_user(user_id: int):
    for user in users_db:
        if user.id == user_id:
            return user
    # Explicit 404 instead of silently returning None
    raise HTTPException(status_code=404, detail="User not found")
```

An assistant can generate this as well, but only once you are already halfway there. At that point it is not helping so much as echoing you: it teaches you nothing, it just catches up with what you already had in mind.
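One way to push the "decomposition" point further is to move storage behind a small repository object, so route handlers stop scanning a list themselves and the in-memory list can later be swapped for a database. This is a sketch using only the standard library, with a dataclass standing in for the Pydantic model; `UserRepository`, `InMemoryUserRepository`, and their method names are hypothetical and not part of FastAPI.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass(frozen=True)
class User:
    id: int
    name: str
    email: str


class UserRepository(Protocol):
    # Hypothetical interface: handlers depend on this, not on a concrete storage
    def list_all(self) -> list[User]: ...

    def get(self, user_id: int) -> Optional[User]: ...


class InMemoryUserRepository:
    def __init__(self, users: list[User]) -> None:
        # Index by id so lookups are a dict access instead of a linear scan
        self._by_id = {u.id: u for u in users}

    def list_all(self) -> list[User]:
        return list(self._by_id.values())

    def get(self, user_id: int) -> Optional[User]:
        return self._by_id.get(user_id)


repo: UserRepository = InMemoryUserRepository([
    User(id=1, name="Alice", email="alice@example.com"),
    User(id=2, name="Bob", email="bob@example.com"),
])
print(repo.get(2))   # User(id=2, name='Bob', email='bob@example.com')
print(repo.get(42))  # None
```

In the FastAPI version, `get_user` would then call `repo.get(user_id)` and raise `HTTPException(status_code=404, ...)` when it returns `None`, keeping the handler free of storage details.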

How programming style is changing

Some observations that can be called "micro-refactorings of thinking":

Imperative style is making a comeback. Even in languages with expressive functional features, AI offers a basic, sometimes outright dated style.

Superficial patterns. Models copy the popular, not the best.

Boilerplate clutter. AI often adds unnecessary wrappers, especially in tests.
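As a contrast to that clutter, a table-driven test keeps each case as one row of data and lets a single loop do the checking. A minimal sketch with the standard library's `unittest` and `subTest`; the `is_adult` function under test is hypothetical, reusing the `user.Age >= 18` rule from the Go filter above.

```python
import unittest


def is_adult(age: int) -> bool:
    # Hypothetical function under test: 18 and over counts as adult
    return age >= 18


class IsAdultTest(unittest.TestCase):
    # One table of cases instead of one near-identical test method per case
    CASES = [
        (0, False),
        (17, False),
        (18, True),
        (65, True),
    ]

    def test_is_adult(self) -> None:
        for age, expected in self.CASES:
            # subTest reports each failing row separately without stopping the loop
            with self.subTest(age=age):
                self.assertEqual(is_adult(age), expected)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(IsAdultTest)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

Adding a case means adding one tuple, not another wrapper method.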

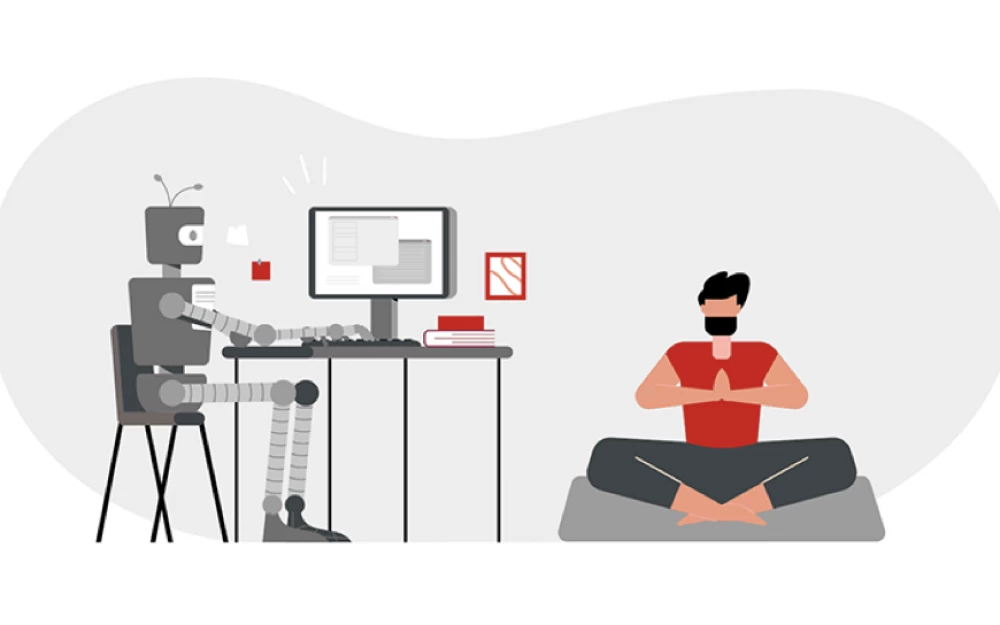

Paradox: what is best for juniors is a trap for mid-levels

For beginners, AI is almost a lifesaver: it teaches patterns and syntax and shows how things can be done. But once you are at middle level or above, it breeds laziness: you stop thinking things through and stop reading the docs. And the docs are exactly where the new language features, interesting edge cases, and nuances live, the ones the model may simply not know about.

Can the brain be "retrained"?

One approach is deliberate resistance: turn off suggestions when writing key logic, or treat the assistant as a helper rather than the author. Think of pair programming: "you give me the idea, I give you the implementation".

Conclusion: crutch or jetpack?

AI is a crutch if you rely on it at every step. But it's a jetpack if you know where to fly. Like everything in life, the benefit depends on awareness. The main thing is not to outsource your brain.
