Dreamcast VMU vs modern gamer accessories: evolution of "second screens"

Hello, console fans!

These days everyone wants a PC or an Xbox because of their huge game libraries, but Sega used to set the trend. The Dreamcast VMU was one of the first devices to put a second screen right in the gamepad. Why? Wasn't one screen enough back then? Yet its advantages, like mini-games, telemetry, and a hidden HUD, caught the public's attention.

Nowadays we have companion apps, OLED panels in mice and keyboards, and web integrations with games. Let's break down how the VMU worked, what developers use for second screens today, and how you can add companion functionality to your own project. Details inside.

VMU Architecture: Hardware and Protocol

Let's look at what the VMU actually is.

If we dissect the VMU from the Sega Dreamcast, inside we find not just a piece of plastic, but a real mini-computer from 1998.

The board has an 8-bit Sanyo LC8670-series microcontroller, which is not just a "dumb chip" but a compact custom architecture with an emphasis on energy saving and minimal hardware under the hood. The 48×32 pixel LCD screen is, of course, not Retina, but for Tamagotchi-style games or displaying health in Resident Evil, it's quite sufficient.

It has 128 KB of flash memory. It is hard to believe now that everything fit, but it was enough for saves, mini-games, and various small things.

The VMU plugs into a slot in the gamepad, and the gamepad connects to the Dreamcast over a wired serial bus. Data exchange uses a protocol sometimes called "fake-SPI": a synchronous serial scheme over four lines (VCC, GND, DATA, CLK), only two of which carry signals. It resembles SPI or I²C but is neither; it is Sega's own low-level protocol, commonly known as the Maple bus.

The VMU looks like a USB drive with some data storage, but it's closer to a nearly independent device, albeit with a battery life of just a couple of hours. At least it works without a cable, and that's a plus.

The VMU and the console talk to each other in packets. The header defines the type of command, followed by the address, data, and checksum. Everything is synchronized by the controller's clock, and if something goes wrong, the VMU simply disconnects: there is no communication without a correct startup sequence.
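
To make the header / address / data / checksum layout more concrete, here is a minimal Python sketch of framing and validating such a packet. The field widths, the command codes, and the XOR checksum are assumptions chosen for illustration; this is not Sega's documented wire format.

```python
from dataclasses import dataclass

# Hypothetical command codes, chosen only for this illustration.
CMD_DEVICE_INFO = 0x01
CMD_BLOCK_READ = 0x0B


@dataclass
class Packet:
    command: int  # header: type of command (1 byte)
    address: int  # target device address (1 byte)
    data: bytes   # payload, up to 255 bytes in this sketch


def xor_checksum(raw: bytes) -> int:
    """XOR of all bytes; a simple stand-in for the real checksum."""
    result = 0
    for byte in raw:
        result ^= byte
    return result


def encode(packet: Packet) -> bytes:
    """Serialize as: command, address, length, data, checksum."""
    if len(packet.data) > 255:
        raise ValueError("payload too long for a 1-byte length field")
    body = bytes([packet.command, packet.address, len(packet.data)]) + packet.data
    return body + bytes([xor_checksum(body)])


def decode(frame: bytes) -> Packet:
    """Parse a frame and reject it if the length or checksum is wrong."""
    if len(frame) < 4:
        raise ValueError("frame too short")
    body, checksum = frame[:-1], frame[-1]
    if xor_checksum(body) != checksum:
        raise ValueError("checksum mismatch")
    command, address, length = body[0], body[1], body[2]
    data = body[3:]
    if len(data) != length:
        raise ValueError("length field does not match payload")
    return Packet(command, address, data)
```

A receiver built this way drops any frame that fails `decode`, which mirrors the "no communication without a correct startup" behavior described above.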

By the way, a nice feature: the protocol architecture allows determining exactly what is connected — a gamepad, VMU, or even an unknown device. Of course, it's not USB, but in the late '90s, it was enough.

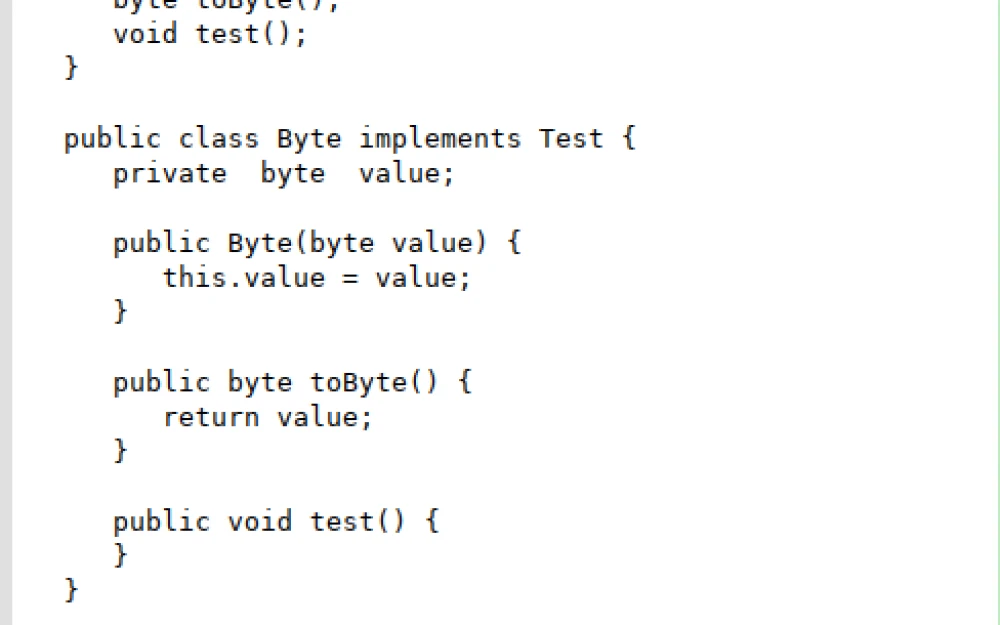

Memory Organization and Mini-Game Loading

The memory map of the VMU is divided into logical areas:

System area: service data, the bootloader control block.

User memory: saves (512-640 bytes per slot), high score tables, screenshots.

Executable region: area for loading small binaries such as mini-games (up to 24 KB).

You can launch a mini-game from the VMU's main menu or from the console, and once launched it occupies a chunk of memory. Nothing unusual overall, except that the screen drops into a dark, low-power mode and everything unnecessary is turned off, all for the sake of energy saving.

Now about saves. Each one contains important data: the type of application, the creation time, a CRC, and the data block itself. This means saves from different software can coexist on one unit and can be copied and transferred.
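
A save record with those four parts (application type, creation time, CRC, data block) could be sketched as follows. The header layout, the 512-byte block size, and the use of `binascii.crc_hqx` (a CRC-16/CCITT variant from the Python standard library) are assumptions for illustration, not the VMU's real on-disk format.

```python
import binascii
import struct
from dataclasses import dataclass

BLOCK_SIZE = 512  # assumed block size for this sketch
# Assumed header: type (1 byte), created (Unix time, 4), data length (2), CRC-16 (2).
HEADER = struct.Struct("<BIHH")


@dataclass
class SaveRecord:
    app_type: int
    created: int
    data: bytes


def pack_save(record: SaveRecord) -> bytes:
    """Serialize header + data and pad with zeros to a whole number of blocks."""
    crc = binascii.crc_hqx(record.data, 0)
    raw = HEADER.pack(record.app_type, record.created, len(record.data), crc)
    raw += record.data
    return raw + b"\x00" * (-len(raw) % BLOCK_SIZE)


def unpack_save(raw: bytes) -> SaveRecord:
    """Parse a stored save and verify its CRC before trusting the data."""
    app_type, created, length, crc = HEADER.unpack_from(raw)
    data = raw[HEADER.size:HEADER.size + length]
    if len(data) != length or binascii.crc_hqx(data, 0) != crc:
        raise ValueError("save data is truncated or corrupted")
    return SaveRecord(app_type, created, data)
```

Because the type and CRC travel with the data, a copy tool can move a record between units without understanding the game that wrote it.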

Modern "Second Screens": Technology Stack

Over time, the stack has changed. The outside is definitely nicer now, with new displays and form factors, but the real shift is on the tech side. One major trend is moving interface functionality to smartphones, tablets, and cloud services instead of relying on specialized hardware.

To make this possible, you need to connect the game to the second screen. This is where WebSocket comes in handy: it provides a bidirectional channel that works in near real time. The delay is tolerable unless you're an esports pro, and 50 ms on regular Wi-Fi is barely noticeable.

With this kind of channel you can transfer binary data quickly and cheaply, and also exchange telemetry, maps, and other files without any hassle. The server and client stay connected, so any change (entering a new zone, unlocking an achievement, or sending a message) shows up on the second screen almost instantly, regardless of the game engine.

WebSocket is usually used for chats, online games, dashboards, or broadcasts—basically anything working in real time. But there are nuances. The WebSocket connection is established once, and then the server can send any updates to the client, and the client to the server. On mobiles and similar devices, a constant connection drains a bit more battery, but for most projects, this trade-off is just fine.
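
The "established once" part is an ordinary HTTP upgrade handshake defined by RFC 6455. As a rough illustration, the sketch below computes the `Sec-WebSocket-Accept` value a server must return and builds the upgrade response; the function names are hypothetical, and a real project would normally rely on an existing WebSocket library rather than hand-rolling this.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for computing Sec-WebSocket-Accept.
WEBSOCKET_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def accept_key(client_key: str) -> str:
    """Derive the accept value from the client's Sec-WebSocket-Key header."""
    digest = hashlib.sha1((client_key + WEBSOCKET_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def upgrade_response(client_key: str) -> str:
    """Build the one-time HTTP 101 response that switches to WebSocket."""
    return (
        "HTTP/1.1 101 Switching Protocols\r\n"
        "Upgrade: websocket\r\n"
        "Connection: Upgrade\r\n"
        f"Sec-WebSocket-Accept: {accept_key(client_key)}\r\n"
        "\r\n"
    )
```

After this single exchange the same TCP connection carries frames in both directions, which is why no further polling requests are needed.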

For modern projects, these “minor issues” are manageable if you plan ahead for how the server will handle lots of users and possible failures. But if your service deals with data that rarely changes (like a basic landing page or an online store with a constant catalog), then WebSocket is overkill. Regular HTTP is enough.

Here's another situation: there is no internet access, or you simply need autonomy. Then good old Bluetooth Low Energy (BLE) saves the day. It's a technology for compact devices focused on saving energy. For example, you can display your hero's health status on your phone or adjust settings of an Unreal Engine game directly from it. BLE allows you to send data in packets (say, every few seconds) or instantly notify about events, like when a player runs out of lives.

The bandwidth for BLE is modest (packet size up to 247 bytes, speed depends on distance and Bluetooth version), so it’s not suitable for heavy graphics or video streams, but it’s perfect for simple HUD elements. By the way, I recently published an article about how Bluetooth survived some of its most notorious exploits.
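
To show why simple HUD elements fit comfortably, here is a small sketch that packs a HUD state into a compact binary payload and splits larger blobs into BLE-sized pieces. It assumes the 247-byte figure is the ATT MTU, of which 3 bytes go to the notification header; the HUD field layout and function names are hypothetical.

```python
import struct

ATT_MTU = 247      # packet size quoted above, treated here as the ATT MTU
ATT_HEADER = 3     # opcode (1 byte) + attribute handle (2 bytes)
MAX_PAYLOAD = ATT_MTU - ATT_HEADER

# Hypothetical HUD layout: health %, lives, ammo, level id, score.
HUD_FORMAT = struct.Struct("<BBHHI")


def pack_hud(health: int, lives: int, ammo: int, level: int, score: int) -> bytes:
    """Encode the HUD state into a fixed-size payload for one notification."""
    payload = HUD_FORMAT.pack(health, lives, ammo, level, score)
    if len(payload) > MAX_PAYLOAD:
        raise ValueError("HUD payload does not fit into one notification")
    return payload


def split_for_ble(blob: bytes, limit: int = MAX_PAYLOAD) -> list:
    """Split a larger blob (e.g. a minimap tile) into notification-sized chunks."""
    return [blob[i:i + limit] for i in range(0, len(blob), limit)]
```

A full HUD update here takes 10 bytes, so even a modest connection has room to spare; anything that needs many chunks per frame is a sign the data belongs on Wi-Fi instead.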

These days, the second screen is an entire technology stack.

The actual application is usually built with React Native or Flutter—so it works out of the box on both Android and iOS. Plus, there are ready-made SDKs for rapid interface assembly, and hybrid schemes let you split logic between the phone and the cloud.

Compared with the days when deployment required tons of dedicated hardware, the difference is clear. Now all you need is an average smartphone, standard protocols, and cloud services, and that covers everything from games and streams to interactive installations, museums, and esports.

API and SDK: What hardware manufacturers provide

Hardware manufacturers let developers integrate their own functionality directly into the devices. This opens doors for custom interfaces, indicators, and even mini-applications right on keyboards, mice, gamepads, and streaming panels.

Razer Chroma SDK and OLED API

For example, Razer provides the Chroma SDK, which includes a RESTful API for controlling backlighting and even OLED screens on their devices. To connect, you send a POST request to the local server; after that you can send commands to change what the device shows. A typical use from C++ is to display a status string (health level, time, player's nickname) or simple mini-graphics (an FPS graph, battery charge) on a mouse's OLED screen.

The main thing is to send a heartbeat at least once every 15 seconds; otherwise the server closes the session on a timeout.
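
The connect-then-keep-alive flow might look roughly like the Python sketch below, which only builds the requests and does not send them. The local URL, the JSON field names, and the `/heartbeat` path follow Razer's public REST documentation as commonly described, but treat them as assumptions and check the current docs; the 5-second interval is simply a safety margin chosen for this example.

```python
import json
import urllib.request

# Assumed local endpoint of the Chroma REST server; verify against Razer's docs.
CHROMA_INIT_URL = "http://localhost:54235/razer/chromasdk"
HEARTBEAT_TIMEOUT_S = 15   # timeout mentioned above
HEARTBEAT_INTERVAL_S = 5   # assumed margin, well under the timeout


def init_request(title: str, author: str, contact: str) -> urllib.request.Request:
    """Build the POST request that registers the app and opens a session."""
    body = {
        "title": title,
        "description": "Second-screen companion demo",
        "author": {"name": author, "contact": contact},
        "device_supported": ["mouse", "keyboard"],
        "category": "application",
    }
    return urllib.request.Request(
        CHROMA_INIT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def heartbeat_request(session_uri: str) -> urllib.request.Request:
    """Build the keep-alive request for the session URI returned by the server."""
    return urllib.request.Request(f"{session_uri}/heartbeat", method="PUT")


def heartbeat_due(last_sent: float, now: float) -> bool:
    """Decide whether it is time to send the next heartbeat."""
    return now - last_sent >= HEARTBEAT_INTERVAL_S
```

In a real integration you would pass these requests to `urllib.request.urlopen`, read the session URI from the JSON response, and call `heartbeat_due` from the game loop or a timer.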

Elgato Stream Deck SDK

This is no longer just a set of buttons, but a smart programmable panel with mini-displays on each key. To integrate custom functionality, there is the Stream Deck SDK in JavaScript/Node.js, which allows developers to create plugins for their tasks: displaying icons, texts, indicators, animations on buttons, and anything else needed for a specific scenario — whether it's a music spectrum, server status, or stream control.

Microsoft XInput + DirectInput and DualSense/DualShock Extensions

Microsoft's approach is traditionally platform-based: XInput and DirectInput are the standard APIs for working with gamepads on Windows. They cover buttons, sticks, and vibration, but not vendor-specific extras. Note that the DualSense and DualShock 4 have no built-in display; their extras are a touchpad, a light bar, and, on the DualSense, adaptive triggers. For controllers that do carry a built-in screen, showing notifications, battery status, indicators, or custom images requires vendor-specific solutions. Working with such hardware takes not only knowledge of the standard APIs but also the manufacturer's documentation.

There were also devices like the Wii U. Having a map and inventory on the gamepad screen was pretty cool in games like The Wind Waker HD. But most of the time it felt like a hassle: you kept glancing up and down when the same information could simply have been shown on the TV. It didn't really solve many problems. Eventually, most games just ignored the gamepad screen. And there were also battery problems.

What does this give in practice? Hardware manufacturers are opening up a toolkit for creating interfaces. You can write your own plugins, display telemetry, control backlighting and screens, integrate hardware into workflows, or do something fun, like a dancing Rickroll. Thanks to APIs and SDKs, modern "second screens" are no longer just accessories but part of an ecosystem where functionality is limited only by the developer's imagination and the capabilities of the hardware itself.

Integration in Game Engines

If you've worked on a large project with real deadlines, you know that the key is not to rush but to choose a solution that won't turn into a headache for the whole team later. Even when building quickly, you must be able to make changes along the way without rewriting half the code for every new requirement.

Experienced developers note: event-driven models and support for PUSH mechanics are what really save time in the long run. Why? Because it eliminates unnecessary polling cycles, reduces system load, and allows data to be sent to the second screen with almost no lag, which is critical for any interactive scenarios.
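
The push idea can be sketched as a tiny publish/subscribe hub: the game publishes an event when something changes, and each connected second screen receives a JSON message without ever polling. The class name, event names, and message shape below are hypothetical; in a real project each handler would wrap a WebSocket or BLE send.

```python
import json
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[str], None]


class SecondScreenBus:
    """Tiny publish/subscribe hub: the game pushes, screens react."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        """Register a callback that receives the JSON message for one event type."""
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, **payload) -> int:
        """Serialize the event once and push it to every subscriber.

        Returns the number of handlers that were notified.
        """
        message = json.dumps({"type": event_type, "payload": payload}, sort_keys=True)
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(message)
        return len(handlers)
```

For example, `bus.subscribe("health_changed", send_to_phone)` followed by `bus.publish("health_changed", hp=42)` delivers one message exactly when the value changes, instead of the phone asking for it on a timer.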

In Unity, you can build the stack around ScriptableObject assets with a Node.js backend; in Unreal Engine, around external APIs and custom web front ends. Unreal Engine's Blueprint graphs also speed up UI prototyping.

But there are also nuances. On mobile, take into account background work limitations, and in the cloud, lag due to the location of servers, especially if data is traveling across half the world. So, when choosing a stack, always look not only at the "beautiful" functionality but also at the operating conditions—something works great on a desktop over a VPN, while something else works better in a local gaming club network.

Modern second screens: what they have become

Now, the idea of a second screen is used in a completely different way: in the form of portable monitors, AR glasses, controllers with screens, and streaming controllers. Here are some examples:

Portable monitors

Compact USB-C/HDMI displays, usually 1080p/2K, with 60–144Hz refresh rate and low response time.

Jsaux FlipGo—two QHD screens in a vertical layout, FreeSync support, suitable for both gamers and streaming enthusiasts. You can read chat during a broadcast and watch YouTube while working/playing.

AR/VR glasses with a screen

Xreal One AR glasses—device with a micro-OLED display, creating a large screen effect in front of your eyes when connected to Steam Deck and other devices. Convenient and portable, but limited in viewing angle and quite expensive (~$499).

Dual-screen laptops

HP Omen X 2S—15.6″ laptop with an additional touchscreen above the keyboard, allowing you to display Discord, game maps, or streams without switching the main screen—modern adaptation of the VMU concept.

Portable gaming consoles

Devices like Steam Deck OLED, ROG Ally X, Lenovo Legion Go, MSI Claw 8 AI+—allow playing on the go, connecting to external screens or streams; they themselves become the center of the gameplay ecosystem and often combine screens, controls, and streaming.

Why this is in demand

What's a common issue now? We can get stuck on something for hours: scrolling, watching videos, playing games. Additional screens let us do twice as much of it, keeping the user engaged not only during the game but also outside it.

Modern companion apps offer extra activities: background pets, reminders, summary notifications, and real-time calls — expanding engagement without active gameplay.

If everything syncs smoothly, the second screen can be a smartwatch, a tablet, or even a Smart TV.

Additionally, companion apps are developed with DevOps and CI/CD practices: automated testing, deployment, and updates. This applies not only to releases but also to hotfixes, feature experiments, and even A/B testing of UI/UX on live users.

During the VMU era, second screens had no established usage practices or rules, and users were just learning how to work with them. Now users have their own, often very strict, ideas about what's convenient and what isn't. The current market reflects this: everything looks alike because only the "most convenient" model survives.

For example, no one will enter text commands ten times during a match if there’s a chance to "gesture" a message in the chat.

Visually, the second screen is more an extension of the social functionality of the player rather than a technical interface.

Therefore, it would be a mistake to include something that doesn’t work with "one click" or requires extra steps — attention quickly fades away.

Practice shows that the more a user can customize: what to show, where to show it, how to react to events — the higher the engagement. But universal solutions always require less support. Finding a balance between giving freedom and not turning the second screen into a Lego constructor (where no one ends up assembling anything) is an art in itself.

Is there a future for "second screens," or is this just another case of change for the sake of being different? Perhaps you've already had experience with such functionality in your projects. Share your thoughts in the comments!

© 2025 MT FINANS LLC
