Kolmogorov-Arnold Networks: a new "old" step towards interpretable AI

An interesting breakthrough has occurred in artificial intelligence. Researchers have developed a new type of neural network whose inner workings are more transparent and easier to understand. These networks, called Kolmogorov-Arnold networks, are based on a mathematical principle discovered more than half a century ago.

Neural networks are today's most powerful artificial intelligence tools. They can solve highly complex tasks by processing huge amounts of data. However, they have a significant drawback: their inner workings are opaque. Scientists cannot fully understand how exactly the networks arrive at their conclusions. In AI, this is known as the "black box" problem.

For a long time, researchers have wondered: is it possible to create neural networks that would give the same accurate results, but at the same time work in a more understandable way? And now, it seems, the answer has been found.

In April 2024, a new neural network architecture was introduced: Kolmogorov-Arnold networks (KANs). These networks can perform almost all the same tasks as conventional neural networks, but their operation is much more transparent. KANs are built on a mathematical idea from the mid-20th century that has been adapted for the modern era of deep learning.

Although KANs appeared only recently, they have already attracted great interest in the scientific community. Researchers note that these networks are easier to interpret and may be especially useful in scientific applications, where they can extract scientific patterns directly from data. This opens up exciting new opportunities for research.

Fitting the Impossible

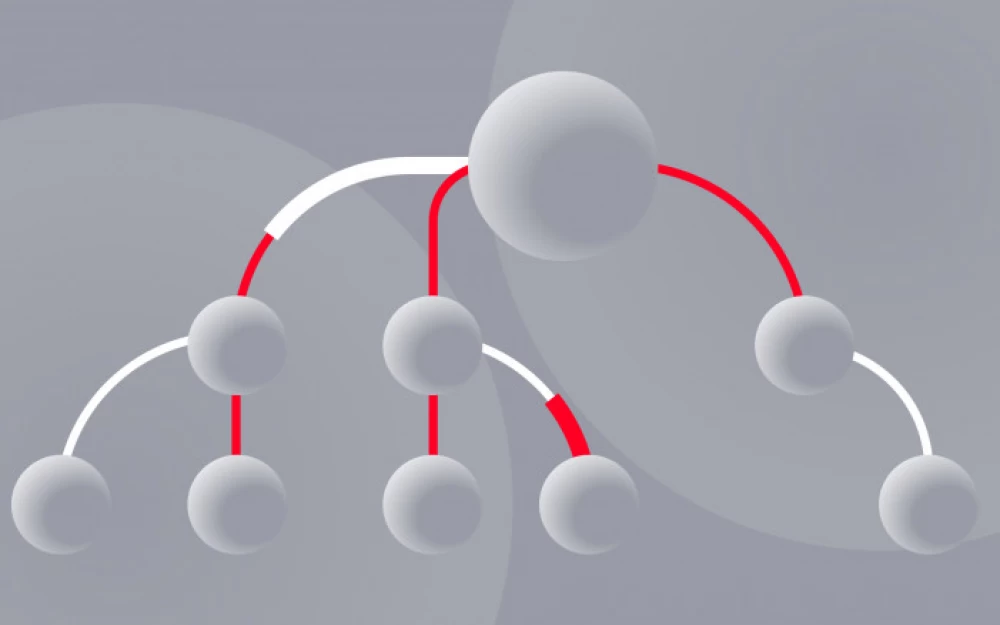

To understand the advantage of KAN, you need to understand how conventional neural networks work. They consist of layers of artificial neurons connected to each other. Information passes through these layers, is processed, and eventually turns into a result. The connections between neurons have different weights that determine the strength of influence. During the training process, these weights are constantly adjusted to make the result more and more accurate.
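As a rough illustration of the description above, here is a minimal sketch of a forward pass through a small conventional network, written with NumPy. The layer sizes, the `tanh` activation, and the name `mlp_forward` are assumptions chosen for the example; they do not come from the article.

```python
import numpy as np

def mlp_forward(x, weights, biases):
    """Forward pass of a small fully connected network.

    Each connection carries a single number (an entry of W); the
    nonlinearity is fixed and sits on the neurons, not the connections.
    """
    a = x
    for i, (W, b) in enumerate(zip(weights, biases)):
        z = a @ W + b  # weighted sum of the previous layer's outputs
        # Hidden layers apply a fixed nonlinearity; the last layer stays linear.
        a = np.tanh(z) if i < len(weights) - 1 else z
    return a

rng = np.random.default_rng(0)
sizes = [2, 4, 1]  # 2 inputs, 4 hidden neurons, 1 output (illustrative)
weights = [rng.normal(size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n) for n in sizes[1:]]

x = np.array([[0.5, -1.0]])  # one sample with two features
y = mlp_forward(x, weights, biases)
print(y.shape)
```

Training would repeatedly nudge the entries of `weights` and `biases` to reduce the prediction error; that step is left out here to keep the sketch short.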

The main task of a neural network is to find a mathematical function that best describes the available data. The more accurate this function is, the better the network's predictions. Ideally, if the network models some physical process, the found function should represent a physical law describing this process.

For conventional neural networks, there is a mathematical theorem that says how close the network can get to the ideal function. One consequence is that the network cannot represent this function exactly. KANs, however, can do so under certain conditions.

KANs work in a fundamentally different way. Instead of numerical weights, they place functions on the connections between neurons. These functions are nonlinear, meaning they can describe more complex dependencies. They are also learnable, so they can be adjusted with much greater sensitivity than simple numerical weights.
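To make the contrast concrete, the following is a toy sketch of a layer in which every connection carries its own learnable one-dimensional function. It is not the authors' implementation: the class name `ToyKANLayer` is hypothetical, and the choice of Gaussian bumps as the basis for each edge function is an assumption made purely for brevity.

```python
import numpy as np

class ToyKANLayer:
    """Each edge (input i -> output j) carries its own learnable 1-D function.

    Assumption: every edge function is a weighted sum of fixed Gaussian
    bumps; the coefficients play the role of the trainable parameters.
    """

    def __init__(self, n_in, n_out, n_basis=8, x_min=-2.0, x_max=2.0, seed=0):
        rng = np.random.default_rng(seed)
        self.centers = np.linspace(x_min, x_max, n_basis)
        self.width = (x_max - x_min) / (n_basis - 1)
        # coef[i, j, k]: weight of basis function k on the edge i -> j
        self.coef = rng.normal(scale=0.1, size=(n_in, n_out, n_basis))

    def basis(self, x):
        # x: (batch, n_in) -> (batch, n_in, n_basis)
        return np.exp(-(((x[..., None] - self.centers) / self.width) ** 2))

    def __call__(self, x):
        # output_j = sum over inputs i of phi_{i,j}(x_i)
        return np.einsum("bik,ijk->bj", self.basis(x), self.coef)

# Stacking two such layers gives a small multilayer network.
layer1 = ToyKANLayer(2, 3, seed=0)
layer2 = ToyKANLayer(3, 1, seed=1)
x = np.array([[0.5, -1.0], [1.0, 0.2]])
out = layer2(layer1(x))
print(out.shape)
```

Note that the neurons here only add up their inputs; all of the nonlinearity lives on the connections, which is the reverse of the conventional arrangement.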

However, for a long time, KANs were considered purely theoretical constructs, unsuitable for practical use. A 1989 paper explicitly stated that the mathematical idea underlying KANs was "irrelevant in the context of networks for learning."

The origins of this idea go back to 1957, when mathematicians Andrey Kolmogorov and Vladimir Arnold proved a remarkable theorem. They showed that any continuous function of several variables can be represented as a combination of a finite number of simple functions of one variable, using only addition and composition.
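For readers who want the formal statement, the theorem is usually written as follows. The symbols $\Phi_q$ and $\phi_{q,p}$ are the conventional textbook notation and do not appear in the article itself. For any continuous function $f$ on $[0,1]^n$ there exist continuous single-variable functions $\Phi_q$ and $\phi_{q,p}$ such that

$$
f(x_1, \dots, x_n) = \sum_{q=0}^{2n} \Phi_q\!\left( \sum_{p=1}^{n} \phi_{q,p}(x_p) \right)
$$

The only operation that combines several variables is addition; everything else is a function of a single argument.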

However, there was one problem. The single-variable functions the theorem produces can be "non-smooth," meaning they can have sharp corners. This makes it difficult to build a trainable neural network from them: training relies on gradually adjusting the functions, which works well only when they are smooth.

So the idea of KAN remained a theoretical possibility for a long time. But everything changed in January 2024, when MIT physics graduate student Ziming Liu took up the topic. He had been working on making neural networks more understandable for scientific applications, but every attempt had ended in failure. Liu then decided to return to the Kolmogorov-Arnold theorem, even though it had received little attention before.

His scientific advisor, physicist Max Tegmark, was initially skeptical of this idea. He was familiar with the 1989 work and thought this attempt would hit a dead end again. But Liu did not give up, and soon Tegmark changed his mind. They realized that even if the functions generated by the theorem are not smooth, the network can still approximate them with smooth functions. Moreover, most functions encountered in science are smooth. This means that it is theoretically possible to represent them perfectly (rather than approximately).

Liu did not want to give up on the idea without trying it in practice. He understood that in the 35 years since the publication of the 1989 paper, computational capabilities had advanced far. What seemed impossible then could very well be real now.

For about a week, Liu worked on the idea, developing several KAN prototypes. They all had two layers - the simplest structure that researchers had focused on for decades. The choice of a two-layer architecture seemed natural, as the Kolmogorov-Arnold theorem itself essentially provides a scheme for such a structure. The theorem breaks down a complex function into separate sets of inner and outer functions, which corresponds well to the two-layer structure of a neural network.
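The inner/outer structure can be illustrated with a hand-picked example: the product of two numbers written using only single-variable functions and addition, via the identity $xy = \frac{(x+y)^2 - (x-y)^2}{4}$. The helper names `inner_sum` and `two_layer` are hypothetical, and the example needs only two outer functions, fewer than the number the theorem allows for in general.

```python
import math

def inner_sum(phis, xs):
    # First "layer": apply one single-variable function per input, then add.
    return sum(phi(x) for phi, x in zip(phis, xs))

def two_layer(inner, outer, xs):
    # Second "layer": apply one outer function per inner sum, then add.
    return sum(Phi(inner_sum(phis, xs)) for Phi, phis in zip(outer, inner))

# Inner functions: one row per outer term, one function per input variable.
inner = [
    [lambda x: x, lambda y: y],   # produces x + y
    [lambda x: x, lambda y: -y],  # produces x - y
]
# Outer functions, each of a single variable s.
outer = [lambda s: s**2 / 4, lambda s: -(s**2) / 4]

def product(x, y):
    return two_layer(inner, outer, [x, y])

print(math.isclose(product(3.0, 4.0), 12.0))
```

In a KAN the inner and outer functions are not chosen by hand as they are here; they are learned from data.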

However, to Liu's disappointment, none of his prototypes showed good results in solving the scientific problems he was hoping for. Then Tegmark suggested a key idea: what if they tried KAN with a larger number of layers? Such a network could handle more complex tasks.

This unconventional thought turned out to be a breakthrough. Multilayer KANs began to show promise, and soon Liu and Tegmark brought in colleagues from MIT, Caltech, and Northeastern University. They wanted to assemble a team that included both mathematicians and experts in the fields where KAN was to be applied.

In their April paper, the group demonstrated that three-layer KANs are indeed possible. They gave an example of a three-layer KAN that exactly represents a function a two-layer KAN cannot. But the researchers did not stop there. Since then, they have experimented with networks of up to six layers, and with each new layer the network became able to represent increasingly complex functions. "We found that we can add as many layers as we want," noted one of the co-authors of the work.

Verified Improvements

The authors also applied their networks to two real-world tasks. The first was related to a field of mathematics known as knot theory. In 2021, the DeepMind team created a conventional neural network capable of predicting a certain topological property of a knot based on its other properties. Three years later, the new KAN not only replicated this achievement but went further. It was able to show how the predicted property is related to all the others - something that conventional neural networks cannot do.

The second task was related to a phenomenon in condensed matter physics known as Anderson localization. The goal was to predict the boundary at which a certain phase transition occurs and then derive a mathematical formula describing this process. No conventional neural network had ever been able to do this. KAN accomplished the task.

But the main advantage of KAN over other types of neural networks is their interpretability. This was the main motivation for their development, according to Tegmark. In both examples, KAN not only provided an answer but also an explanation. "What does it mean for something to be interpretable? If you give me some data, I'll give you a formula that you can write on a T-shirt," Tegmark explained.

This ability of KAN, although still limited, suggests that such networks could theoretically teach us something new about the world around us, says physicist Brice Ménard of Johns Hopkins University, who studies machine learning. "If a problem is indeed described by a simple equation, the KAN network does quite well in finding it," he noted. However, Ménard warned that the area where KAN works best is likely to be limited to tasks similar to those found in physics, where equations typically contain very few variables.

Liu and Tegmark agree with this but do not see it as a drawback. "Almost all known scientific formulas, such as $E = mc^2$, can be written in terms of functions of one or two variables," Tegmark said. "The vast majority of calculations we perform depend on one or two variables. KAN uses this fact and looks for solutions in this form."

Final Equations

The article by Liu and Tegmark on KAN quickly caused a stir in the scientific community, gathering 75 citations in just three months. Soon, other research groups began working on their versions of KAN.

In June, a paper by Yizheng Wang from Tsinghua University and his colleagues appeared. They showed that their neural network based on Kolmogorov-Arnold ideas (KINN) "significantly outperforms" conventional neural networks in solving partial differential equations. This is an important achievement, as such equations are found everywhere in science.

The study, published in July by scientists from the National University of Singapore, yielded more ambiguous results. They concluded that KAN outperforms conventional networks in tasks where interpretability is important. However, in computer vision and audio processing tasks, traditional networks performed better. In natural language processing and other machine learning tasks, both types of networks showed roughly the same results. For Liu, these findings were not surprising. After all, the KAN development team initially focused on "science-related tasks" where interpretability is a top priority.

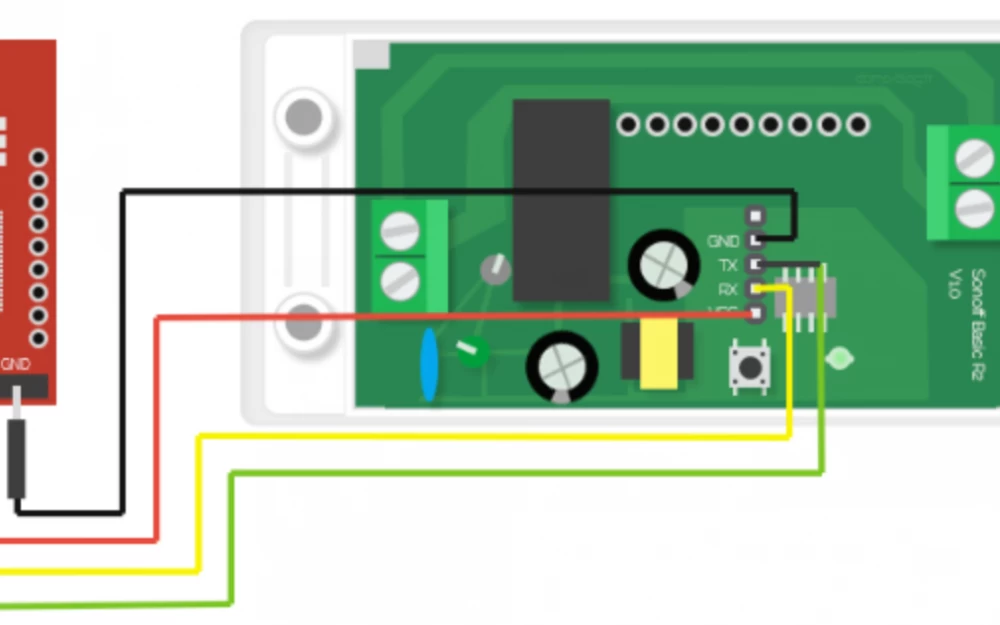

Meanwhile, Liu aims to make KAN more practical and user-friendly. In August, he and his colleagues published a new work titled "KAN 2.0". Liu described it as "more like a user manual than a regular scientific paper". According to him, this version is more user-friendly and offers new features, such as a multiplication tool, which were lacking in the original model.

Liu and his co-authors argue that this type of network is more than just a tool for solving problems. KAN promotes what the group calls "curiosity-driven science." It complements "application-driven science," which has long dominated machine learning.

For example, in studying the motion of celestial bodies, application-oriented researchers focus on predicting their future positions, while curiosity-driven scientists hope to uncover the fundamental physics behind this motion. Liu believes that with KAN, researchers will be able to get much more from neural networks than just help in solving complex computational problems. Instead, they could focus on gaining a deep understanding of the phenomena being studied for the sake of knowledge itself.

This approach opens up exciting prospects for science. KAN can become a powerful tool not only for predicting outcomes but also for revealing hidden patterns and principles underlying various natural and technical processes.

Of course, KAN is still in its early stages of development, and many problems need to be solved before it can fully realize its potential. But it is already clear that this new neural network architecture can significantly change the approach to using artificial intelligence in scientific research.

The ability to "look inside" a neural network and understand the logic behind its conclusions is something scientists have dreamed of since the advent of deep learning. KANs take an important step in this direction, offering not only accurate predictions but also understandable explanations.

This can lead to a real breakthrough in various fields of science. Imagine that a neural network not only predicts the weather with high accuracy but also derives new meteorological laws. Or not only recognizes cancer cells in images but also formulates new hypotheses about the mechanisms of tumor development.

Of course, KAN is not a universal solution to all problems. This technology has its limitations and areas where it may be less effective than traditional neural networks. But in the field of scientific research, especially where it is important not only to get a result but also to understand how it was achieved, KAN can become an indispensable tool.

The work of Liu, Tegmark, and their colleagues opens a new chapter in the history of artificial intelligence. It shows that sometimes to move forward, you need to look back and take a fresh look at old ideas. The theorem proved by Kolmogorov and Arnold more than half a century ago has found an unexpected application in the era of deep learning, offering a solution to one of the most complex problems of modern AI.

The future of KAN looks promising. As researchers continue to experiment with this architecture, new opportunities and applications are emerging. Perhaps we are on the verge of a new era in the development of artificial intelligence, one in which machines not only provide answers but also help us understand why those answers are correct.

Ultimately, the goal of science is not just to predict phenomena but to understand them. KAN offers a path to such understanding by combining the power of modern computing with the transparency and interpretability of classical mathematics. This fusion can lead to new discoveries and insights that were previously inaccessible.

So next time you hear about a breakthrough in artificial intelligence, remember KAN. These networks can not only solve complex problems but also explain their solutions in a language understandable to humans. And who knows, maybe KAN will help us uncover the next great mystery of nature, writing its formula on a T-shirt, as Max Tegmark dreams.
