Decentralized hosting/data storage systems

Image by Freepik

True "eternity" is unlikely in our changing world (unless we are talking about elementary particles, but that is a whole different story). There are, however, systems stable enough to be hard to take down or block, and today we will look at an interesting example of such constructions: decentralized networks for storing websites and files.

The absence of a central server and the distributed architecture make such networks highly resilient. Let us look at the existing ideas in this field.

The section headings below are active links: clicking one takes you to the website of the respective project.

Freenet

The idea for this network was proposed in 1999 by Ian Clarke, then a student at the University of Edinburgh. He wanted a system that would let people exchange information without the risk of surveillance and censorship. The first version of the network appeared in 2000 and immediately attracted the attention of experts.

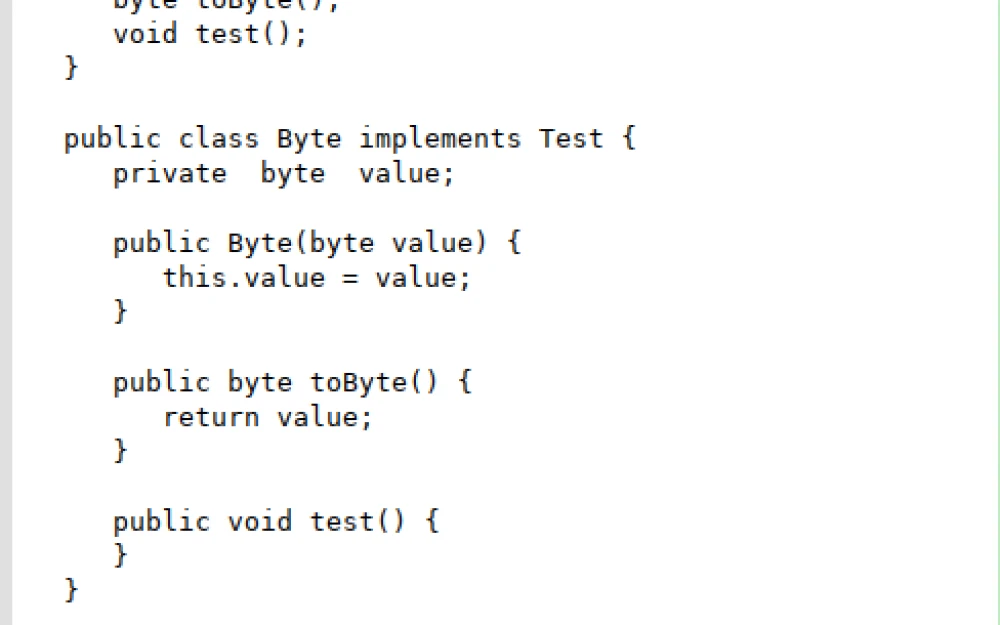

Architecturally, it is a peer-to-peer (P2P) network in which all nodes are equal and each one acts as both a server and a client at the same time (which eliminates the need for a central coordinating server).

Each node stores its own share of encrypted data, which makes it hard to identify the source of information or to delete it entirely: data is split into 32 KB blocks, each block is encrypted with AES-256, and the encryption key, itself encrypted, is kept on other nodes rather than alongside the block.
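A minimal Python sketch of the block-splitting step, loosely in the spirit of Freenet's content-hash keys: each 32 KiB block is encrypted with a key derived from its own content and stored under the hash of its ciphertext, so a storing node sees only an opaque blob. Assumptions: Python's standard library has no AES, so a toy SHA-256 keystream stands in for AES-256 (it is not secure); the function names and the in-memory `store` dict are hypothetical, and in a fuller version the list of keys would itself be encrypted and stored as another block.

```python
import hashlib

BLOCK_SIZE = 32 * 1024  # 32 KiB per block, as described above


def keystream(key: bytes, length: int) -> bytes:
    """Toy SHA-256 counter-mode keystream: an INSECURE stand-in for AES-256."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor_with_keystream(data: bytes, key: bytes) -> bytes:
    """Encrypts or decrypts (XOR with the same keystream is its own inverse)."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


def insert_file(data: bytes):
    """Split and encrypt `data`.

    Returns (store, keys): `store` is what storage nodes hold (routing key -> ciphertext),
    `keys` is what a reader needs, (routing key, decryption key) per block, in order.
    """
    store, keys = {}, []
    for offset in range(0, len(data), BLOCK_SIZE):
        block = data[offset:offset + BLOCK_SIZE]
        crypto_key = hashlib.sha256(block).digest()           # derived from the plaintext
        ciphertext = xor_with_keystream(block, crypto_key)
        routing_key = hashlib.sha256(ciphertext).hexdigest()  # where the block lives
        store[routing_key] = ciphertext
        keys.append((routing_key, crypto_key))
    return store, keys


def fetch_file(store: dict, keys: list) -> bytes:
    """Fetch each block by routing key, check it, decrypt it, and reassemble the file."""
    parts = []
    for routing_key, crypto_key in keys:
        ciphertext = store[routing_key]
        if hashlib.sha256(ciphertext).hexdigest() != routing_key:
            raise ValueError("block does not match its routing key")
        parts.append(xor_with_keystream(ciphertext, crypto_key))
    return b"".join(parts)


data = b"hello freenet " * 5000  # 70,000 bytes -> 3 blocks
store, keys = insert_file(data)
print(len(keys))  # 3
assert fetch_file(store, keys) == data
```

A storing node holding an entry of `store` sees neither the plaintext nor the key needed to read it, which mirrors the point made in the next paragraphs.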

Each block also carries metadata that helps route requests and locate related blocks.

As a result, no one, not even the node's owner, knows exactly which fragments their node stores.

To protect against the loss of individual nodes, storage is redundant: each fragment is additionally copied to 3–5 nodes. A side effect is better anonymity, since data can come from any node, not necessarily the one that uploaded it.

Blocks that are in high demand (read: frequently requested) are copied to even more nodes, so the more popular a piece of information is, the more copies of it exist.

Conversely, blocks that are rare in the system are marked as "priority", which also causes them to be copied to several nodes.
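One way to express these replication rules as code: a hypothetical policy in which every block gets at least 3 copies, popular blocks gain one extra copy per 100 requests up to a cap, and rare blocks (fewer copies than the baseline) are queued first. The thresholds and function names are assumptions for illustration, not Freenet's real parameters.

```python
BASE_COPIES = 3                # low end of the 3-5 copies mentioned above
MAX_COPIES = 10                # hypothetical upper limit
REQUESTS_PER_EXTRA_COPY = 100  # hypothetical popularity step


def target_copies(request_count: int) -> int:
    """Popular blocks get extra replicas, up to MAX_COPIES."""
    return min(BASE_COPIES + request_count // REQUESTS_PER_EXTRA_COPY, MAX_COPIES)


def plan_replication(blocks: dict) -> list:
    """blocks maps block_id -> (request_count, copies_now).

    Returns (block_id, copies_to_add) pairs, with "priority" (rare) blocks first.
    """
    plan = []
    for block_id, (requests, copies_now) in blocks.items():
        missing = target_copies(requests) - copies_now
        if missing > 0:
            is_priority = copies_now < BASE_COPIES  # rare: below the baseline
            plan.append((not is_priority, block_id, missing))
    plan.sort()  # False sorts before True, so priority blocks come first
    return [(block_id, missing) for _, block_id, missing in plan]


print(plan_replication({"a": (0, 1), "b": (450, 3), "c": (20, 5)}))
# [('a', 2), ('b', 4)]
```

Block `c` already has more copies than its popularity calls for, so it is left alone.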

To keep the network balanced, i.e. to prevent some nodes from being overloaded with requests while others sit idle, nodes periodically exchange fragments, evening out how many each one holds.
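A toy version of this balancing, assuming each node simply holds a list of distinct fragment IDs and that moving a fragment costs nothing. The greedy "fullest to emptiest" rule is an illustrative choice, not a description of Freenet's actual exchange mechanism.

```python
def rebalance(nodes: dict) -> list:
    """nodes maps node_id -> list of fragment ids and is modified in place.

    Repeatedly moves one fragment from the fullest node to the emptiest one until
    fragment counts differ by at most 1. Returns the moves as (fragment, src, dst).
    """
    moves = []
    while True:
        fullest = max(nodes, key=lambda n: len(nodes[n]))
        emptiest = min(nodes, key=lambda n: len(nodes[n]))
        if len(nodes[fullest]) - len(nodes[emptiest]) <= 1:
            return moves
        fragment = nodes[fullest].pop()
        nodes[emptiest].append(fragment)
        moves.append((fragment, fullest, emptiest))


nodes = {"A": ["f1", "f2", "f3", "f4", "f5"], "B": [], "C": ["f6"]}
moves = rebalance(nodes)
print(len(moves), {n: len(f) for n, f in nodes.items()})  # 3 {'A': 2, 'B': 2, 'C': 2}
```

Each move narrows the gap between the fullest and emptiest node, so the loop always terminates.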

The network works well for static sites, while dynamic ones may have problems: for dynamic content to work, at least one node must hold the latest version of the changes; otherwise only older versions, without the latest updates, remain in the network.

A more detailed description of the technical nuances can be found here.

How the network works:

You download a special client; once installed, it creates a node that, as mentioned above, connects to other nodes and starts acting as a server, storing fragments of information even if you never requested them;

The client then runs in the background, and in a regular browser you enter special URLs, which send a request to the client;

When a network address is requested, the client does not poll every node, which would take far too long; instead, requests are routed using the unique hash key of each data block. Each node knows which blocks its neighbors are likely to store, so only a minimal number of nodes is queried to gather all the blocks;

The collected blocks accumulate in the client, which decrypts them and delivers them to the browser as a regular web page.
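The key-based lookup from the third step can be sketched as greedy routing on a ring, in the spirit of Freenet's location-based routing: block keys and nodes are mapped to points in [0, 1), and each node forwards the request to whichever neighbor is closest to the block's key, stopping when no neighbor is closer than itself. This is a simplified model under stated assumptions (four hypothetical nodes, no backtracking, a fixed `max_hops`); the real routing has more machinery.

```python
import hashlib


def key_location(key: str) -> float:
    """Map a block's hash key to a point in [0, 1)."""
    digest = hashlib.sha256(key.encode()).digest()
    # Keep 53 bits so the result is exactly representable and always < 1.0.
    return (int.from_bytes(digest[:8], "big") >> 11) / 2**53


def ring_distance(a: float, b: float) -> float:
    """Distance on a circle of circumference 1 (0.95 and 0.05 are 0.1 apart)."""
    d = abs(a - b)
    return min(d, 1.0 - d)


def route(start, target, locations, neighbors, max_hops=20):
    """Hop to the neighbor closest to `target` until no neighbor is closer.

    locations: node -> location in [0, 1); neighbors: node -> list of nodes.
    Returns the list of visited nodes.
    """
    path = [start]
    current = start
    for _ in range(max_hops):
        best = min(neighbors[current], key=lambda n: ring_distance(locations[n], target))
        if ring_distance(locations[best], target) >= ring_distance(locations[current], target):
            break  # no neighbor is closer: this node should hold the block
        current = best
        path.append(current)
    return path


locations = {"A": 0.0, "B": 0.25, "C": 0.5, "D": 0.75}
neighbors = {"A": ["B", "D"], "B": ["A", "C"], "C": ["B", "D"], "D": ["C", "A"]}
print(route("A", 0.55, locations, neighbors))  # ['A', 'D', 'C']
```

In practice the target would come from `key_location(...)` applied to a block's key; the literal 0.55 just makes the path easy to follow.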

The routing and encryption described above slow the network down considerably: loading a website can take up to 5 minutes, with an average transfer rate of up to 50 kbps (and in practice much less).
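To put these figures in perspective, here is the arithmetic for a hypothetical 1 MB page (an assumed size, not a measurement from the network) at the quoted 50 kbit/s ceiling, using decimal units:

$$
t = \frac{S}{R} = \frac{1\ \text{MB} \times 8\ \text{bit/B}}{50\ \text{kbit/s}} = \frac{8000\ \text{kbit}}{50\ \text{kbit/s}} = 160\ \text{s} \approx 2.7\ \text{min}
$$

Conversely, 5 minutes at 50 kbit/s moves at most $300\ \text{s} \times 50\ \text{kbit/s} = 15\,000\ \text{kbit} \approx 1.9\ \text{MB}$.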

In its early years the network developed actively as an open-source project and proved highly resistant to blocking (such attempts have been made): thanks to its decentralized nature and the absence of any central server, blocking it completely proved impossible (short of disconnecting the entire internet).

The network hosts an estimated several thousand sites, but the exact number is unknown because of its anonymity.

In 2023, the network was renamed Hyphanet and introduced a new protocol (hypha), which improved the system's stability and routing, and with them the network's performance.

Even so, speed problems persist, so the network remains a niche solution for enthusiasts, and it now has competitors (discussed below).

I2P (Invisible Internet Project)

Another network emerged in 2003. It is a tool for anonymous real-time communication, which is its main difference from the previous network, designed mainly for storing static and dynamic website data.

The idea dates back to 2001, when a developer known as "0x90" (actually Lance James) set out to create an anonymous IRC chat; its initial version was written in C++.

In 2003, the project was named I2P and rewritten in Java by another developer, jrandom, to make it cross-platform.

In 2014, an alternative client, i2pd, appeared, again written in C++ and offering higher speeds.

In 2018, the project gained a new transport protocol (NTCP2) and improved protection against attacks.

The project's distinctive feature is that nodes build tunnels to carry streaming traffic (a kind of anonymous TCP/IP).

Streaming is possible thanks to the network's higher speed, which can reach 500 kbit/s.

The network also supports the concept of "sites", but unlike the previous network, where sites are split into data fragments distributed across different nodes, here a site lives on its author's own computer as a running service; if the author goes offline, the site disappears from the network.

This architecture makes the network fast, since there is no need to assemble a site from fragments scattered across numerous nodes. It also removes the problems with dynamic sites: all the information is stored in one place, so content can be updated quickly, just as on the regular web.

This architecture may seem less anonymous than the previous one, but it is not, because anonymity is ensured as follows:

each data packet is assembled from separate fragments, each encrypted separately, and the assembled packet is then encrypted again;

sending and receiving happen through separate, unconnected channels via random nodes, which are replaced every 10 minutes, making it impossible to establish that data was requested and received by the same node (or, broadly speaking, to tell who the initiator and the recipient are);

additional masking comes from nodes constantly transmitting background noise, so an attacker cannot distinguish real traffic from noise.
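A simplified model of the rotation described in the second point: each node keeps separate outbound (sending) and inbound (receiving) tunnels built through randomly chosen peers and rebuilds any tunnel older than 10 minutes. The hop count, peer names and class are hypothetical; the layered encryption from the first point and the real router's peer-selection rules are deliberately left out.

```python
import random
from dataclasses import dataclass

TUNNEL_LIFETIME_S = 10 * 60  # tunnels are replaced every 10 minutes, as described above
HOPS = 3                     # hypothetical tunnel length for this sketch


@dataclass
class Tunnel:
    direction: str    # "inbound" (for receiving) or "outbound" (for sending)
    hops: list
    created_at: float

    def expired(self, now: float) -> bool:
        return now - self.created_at >= TUNNEL_LIFETIME_S


def build_tunnel(direction: str, peers: list, me: str, now: float, rng: random.Random) -> Tunnel:
    """Pick HOPS distinct random peers (never ourselves) to relay traffic."""
    candidates = [p for p in peers if p != me]
    return Tunnel(direction, rng.sample(candidates, HOPS), now)


def refresh(tunnels: list, peers: list, me: str, now: float, rng: random.Random) -> list:
    """Keep tunnels that are still fresh; rebuild expired ones through new random peers."""
    return [t if not t.expired(now) else build_tunnel(t.direction, peers, me, now, rng)
            for t in tunnels]


rng = random.Random(42)
peers = [f"peer{i}" for i in range(20)]
tunnels = [build_tunnel(d, peers, "me", 0, rng) for d in ("outbound", "inbound")]
later = refresh(tunnels, peers, "me", 11 * 60, rng)  # 11 minutes later: both rebuilt
```

Because the outbound and inbound tunnels are chosen independently and keep changing, an observer of one tunnel learns little about the other.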

Thus, no node in the network knows where the site is physically located or what the complete request route is.

As with the previous network, you need to download a client to use the network, and browsing is done in a regular browser.

The client already includes some extra features, such as email and a torrent client; further resources can be found in the network's catalog (http://planet.i2p) or through its search engine (http://identiguy.i2p).

For those interested, here is a very detailed article explaining how the system works from a technical standpoint.

According to some estimates, the network currently (2025) has about 12,000 nodes.

ZeroNet

Another decentralized network, designed for web hosting without central servers, was created in 2015 by Hungarian developer Tamás Kocsis.

The network relies on Bitcoin cryptography and on BitTorrent for content distribution:

Bitcoin technology provides the system of private and public keys: website addresses act as public keys (in the Bitcoin cryptocurrency these would be wallet addresses), and the site owner uses the private key to sign changes made to the site. Websites are distributed as containers carrying a public key and the owner's signature; links to the data are stored in a content.json file, and the data itself (HTML, JS, images) sits next to it unencrypted;

BitTorrent technology is used for data transfer and, as in classic BitTorrent, there are trackers. Unlike classic BitTorrent, however, the network keeps working even if all trackers are blocked: to find a resource, a node queries other nodes, so it can be located without centralized trackers.
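To illustrate how a signed content.json lets visitors check files received from arbitrary peers, here is a sketch that builds a content.json-like manifest of file hashes and sizes and verifies a downloaded file against it. The field layout is a simplified assumption loosely modeled on ZeroNet's format, and the signature itself is omitted because it requires Bitcoin-style secp256k1 signing, which Python's standard library does not provide.

```python
import hashlib
import json


def build_manifest(files: dict) -> str:
    """files maps relative path -> bytes. Returns a simplified content.json-like manifest."""
    manifest = {
        "files": {
            path: {"sha512": hashlib.sha512(data).hexdigest(), "size": len(data)}
            for path, data in sorted(files.items())
        }
    }
    # In the real system the owner signs this manifest with the site's private key;
    # that step is omitted here (it needs secp256k1 ECDSA, not in the stdlib).
    return json.dumps(manifest, sort_keys=True)


def verify_file(manifest_json: str, path: str, data: bytes) -> bool:
    """What a visitor does with each downloaded file: compare it against the manifest."""
    entry = json.loads(manifest_json)["files"].get(path)
    return (entry is not None
            and entry["size"] == len(data)
            and entry["sha512"] == hashlib.sha512(data).hexdigest())


site = {"index.html": b"<h1>Hello</h1>", "js/app.js": b"console.log(1);"}
manifest = build_manifest(site)
print(verify_file(manifest, "index.html", b"<h1>Hello</h1>"))     # True
print(verify_file(manifest, "index.html", b"<h1>Tampered</h1>"))  # False
```

Since only the manifest needs a signature, any peer can serve the files themselves without being trusted.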

Unlike Freenet, where sites are split into fragments stored on different nodes, here, because BitTorrent technology is used, a site is located as follows:

If the site has just been published by its owner, it exists only on the owner's node;

If the site has been visited, a copy is stored on every node that visited it (and when the site is requested, it is served from all of those nodes).
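Finding the nodes that hold a copy without relying on trackers can be sketched as a hop-limited search: the client asks its known peers, they ask theirs, and so on up to a fixed depth. This is a hypothetical model (the peer names, the `holdings` map, the site address `1ExampleSite` and the hop limit are all assumptions); the real client's peer-discovery protocol differs in detail.

```python
from collections import deque


def find_peers_with(site: str, start: str, neighbors: dict, holdings: dict, ttl: int = 3) -> list:
    """Breadth-first search over known peers, at most `ttl` hops from `start`.

    neighbors maps peer -> list of peers it knows; holdings maps peer -> set of site addresses.
    Returns the peers found to hold `site`, nearest first.
    """
    found = []
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        peer, depth = queue.popleft()
        if site in holdings.get(peer, set()):
            found.append(peer)
        if depth < ttl:
            for nxt in neighbors.get(peer, []):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, depth + 1))
    return found


neighbors = {"me": ["a", "b"], "a": ["c"], "b": ["d"], "c": ["e"], "d": [], "e": []}
holdings = {"c": {"1ExampleSite"}, "e": {"1ExampleSite"}}
print(find_peers_with("1ExampleSite", "me", neighbors, holdings, ttl=2))  # ['c']
print(find_peers_with("1ExampleSite", "me", neighbors, holdings, ttl=3))  # ['c', 'e']
```

The hop limit trades completeness for traffic: a larger `ttl` finds more copies but floods more peers.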

This immediately reveals the network's main problem: the resilience of any site depends on how many people have visited it.

An unpopular resource can easily be suppressed by blocking the few nodes that host it.
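The vulnerability fits in a few lines. In this hypothetical model, the nodes able to serve a site are its owner plus everyone who has visited it, and a censor must block all of them to take the site offline. The model ignores visitors who delete their copy or go offline, so it is an optimistic bound.

```python
def seeders(owner: str, visitors: list) -> set:
    """Nodes that can serve a site: the owner plus every node that has visited it."""
    return {owner} | set(visitors)


def survives_block(owner: str, visitors: list, blocked: list) -> bool:
    """The site stays reachable while at least one seeding node is not blocked."""
    return bool(seeders(owner, visitors) - set(blocked))


print(survives_block("owner", [], ["owner"]))                  # False
print(survives_block("owner", ["v1", "v2"], ["owner", "v1"]))  # True
```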

Speaking of blocking: anonymity is not provided by default in this network (another critical vulnerability!).

But it is not all bad: optional Tor integration is available, and since traffic does not go out to the "big internet" but stays within the network, the classic Tor problem of traffic being tracked at exit nodes disappears.

Tor support is built into the official client; to enable it, go to Settings > Use Tor > Always.

As for speed, the network is generally faster than the previous ones because it uses BitTorrent technology: the average website loading time is 1–5 seconds without Tor and 5–15 seconds with Tor.

For an ordinary visitor, the workflow looks like this:

the client is downloaded and installed;

resource search begins, for example, via the official built-in site catalog (http://127.0.0.1:43110/1HeLLo4uzjaLetFx6NH3PMwFP3qbRbTf3D) or the ZeroNet Play aggregator (http://127.0.0.1:43110/1PLAYgDQboKojowD3kwdb3CtWmWaokXvfp);

if trackers are blocked and Tor is not enabled, you can still reach a site by typing its address in the browser (this works just like in the previous networks: a regular browser forwards requests to the client running in the background); if Tor is enabled, .onion sites also become available, including mirrors of regular trackers (where you can find resource lists).

If you want to create your own site in this network, you can use the official tutorial.

Development of the project has been frozen since 2019, but the network is still quite active, with about 10,000 nodes and thousands of daily visits to popular resources.

IPFS (InterPlanetary File System)

This system was created in 2015 and, like the previous ones, still exists today as a peer-to-peer file-sharing network. Its creator, American entrepreneur Juan Benet, developed it at his startup Protocol Labs with the aim of replacing the outdated server-based storage paradigm with a distributed network.

Like the previous network, it does not provide anonymity out of the box, but (again as with the previous network) anonymity can be added by using Tor.

A key difference from the previous networks is that the address of a site or file is a hash of its content (a CID, in this network's terminology), which changes with any modification of the file or site and thus guarantees authenticity.
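A minimal sketch of content addressing, assuming plain SHA-256 hex digests as addresses. Real CIDs also encode a version, a codec and the hash function (multihash, multibase), so they look different; the function names here are hypothetical.

```python
import hashlib


def content_address(data: bytes) -> str:
    """Simplified stand-in for a CID: the SHA-256 hex digest of the content."""
    return hashlib.sha256(data).hexdigest()


def verify(address: str, data: bytes) -> bool:
    """A node that receives data from an untrusted peer re-hashes it to check it."""
    return content_address(data) == address


store = {}
v1 = content_address(b"<h1>Hello</h1>")
store[v1] = b"<h1>Hello</h1>"
v2 = content_address(b"<h1>Hello, world</h1>")  # an edited version of the page
store[v2] = b"<h1>Hello, world</h1>"

print(v1 != v2)                          # True: an edit yields a new address
print(verify(v1, store[v1]))             # True
print(verify(v1, b"<h1>Tampered</h1>"))  # False
```

Note that the edit added a second entry to `store` instead of overwriting the first, which is why older versions stay addressable.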

Another difference is that using the network does not require installing a client: it is connected to the "big internet" through gateways, which you can use to access it.

Hence a problem: blocking the gateways makes the network inaccessible (at least from a regular browser ;-) ).

Despite such easy access (which may appeal to beginners), the network also has its own client, IPFS Desktop; installing it lets you work directly inside the network, with no dependence on gateways.
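Access through a gateway is just an HTTP URL of the form `https://<gateway>/ipfs/<CID>`. The sketch below builds candidate URLs and prefers a local node when one is running. `ipfs.io` and `dweb.link` are examples of public gateways, `127.0.0.1:8080` is assumed to be the local node's gateway address (the usual default, but configurable), and `bafyexample` is a made-up placeholder rather than a real CID.

```python
PUBLIC_GATEWAYS = ["ipfs.io", "dweb.link"]  # examples of public gateways
LOCAL_GATEWAY = "http://127.0.0.1:8080"     # assumed default of a local IPFS node


def gateway_urls(cid: str, local_node_running: bool = False) -> list:
    """Candidate URLs for fetching `cid`, trying a local node first if one is running."""
    urls = [f"https://{host}/ipfs/{cid}" for host in PUBLIC_GATEWAYS]
    if local_node_running:
        # A local node fetches from peers directly, so it keeps working
        # even if every public gateway is blocked.
        urls.insert(0, f"{LOCAL_GATEWAY}/ipfs/{cid}")
    return urls


print(gateway_urls("bafyexample"))
print(gateway_urls("bafyexample", local_node_running=True)[0])
```

If all public gateways are blocked, only the first (local) entry remains usable, which is exactly the dependency described above.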

Content distribution, as in the previous network, uses a BitTorrent-like approach: downloading a file or visiting a site creates a local copy on the visitor's device, and the visitor automatically becomes a "seed" for it.

Another interesting point: since any change to a file produces a new link, every modification effectively creates a new file in the network, while the old one remains available as long as some node keeps storing it (the old file is never overwritten by the new one).

The network speed is quite high:

up to 10 Mbps without Tor;

up to 3 Mbps with Tor.

According to the network's official website, it currently has over 280,000 nodes and more than a billion unique content identifiers (CIDs).

Overall, this network is better suited to storing static websites or files; its documentation can be found here.

To sum up, the idea was to focus on less well-known networks, which is why widely recognized ones, such as Tor, were not covered.

The list is certainly not exhaustive: there are surely other networks not covered here (Solid, for one, which appeared in 2018 and was created by none other than Tim Berners-Lee, so there is room for further research).

© 2025 MT FINANS LLC
