CISQ. Software Quality Analysis Study 2020 — Part 1

The Consortium for Information and Software Quality launched the "State of the Industry" survey, the first comprehensive study of software quality analysis. This article translates part of the survey results: the "Engineering" section. The second part will cover the remaining two sections, "System Integrators" and "Vendor Management".

Presenting the "State of the Industry" report

The Consortium for Information and Software Quality (CISQ) launched the "State of the Industry" survey, the first comprehensive study of software quality analysis, covering tool vendors, system integrators, managers, and engineers in end-user organizations. The survey was open from July 2019 to January 2020. The impetus for this study was the alarming increase in software quality incidents, as well as concerns from CISQ members that organizations are not meeting basic requirements. We wanted to understand how the transition to Agile development and DevOps is changing not only software quality assurance practices but also developers' attitudes and behavior towards code quality. It is also important to understand how software quality standards are used by system integrators and end-user organizations: which standards are applied, which sectors drive their adoption, and how organizations benefit from them.

Methodology

The results in the "State of the Industry" report on software quality analysis are based on 82 responses to an online survey, 155 telephone conversations with enterprise IT leaders, their teams, and IT service provider managers, discussions at workshops organized by CISQ, and forums on LinkedIn. This report includes survey results, observations, and recommendations. The report is divided into three sections:

Engineering

System Integrators

Vendor Management

What do developers think about software quality analysis (SQA)?

With the shift to Agile development and DevOps, teams are working and releasing software at an unprecedented pace. At the same time, we are facing much higher levels of risk and vulnerability.

The problems of low software quality and technical debt have existed since the advent of IT. It seems that we have reached a crisis point where it is necessary to take these problems much more seriously and start considering developers as engineers. This means not only writing code but also paying attention to other aspects of engineering, such as quality assurance, security, reliability, and the long-term viability of the solutions being developed. With the shift to SaaS and cloud technologies, enterprises and engineering teams may think that this does not concern them, but it does. SaaS solutions are created by engineers. If these solutions have weaknesses, it affects thousands, if not millions, of users.

In the CISQ "State of the Industry" report on software quality analysis, we wanted to test the hypothesis that reliability, security, performance efficiency, and maintainability, often referred to as "non-functional requirements," remain secondary to customer-oriented features for product owners, managers, and teams. Let's move on to the survey results.

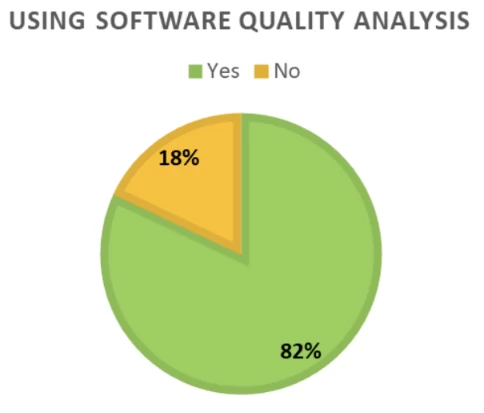

Are you currently using SQA?

Results: The majority of developers, 82%, report using software quality analysis. Moreover, 33% claim to always use static code analysis, compared to 17% who always use dynamic code analysis. 32% of developers report frequently using both static and dynamic code analysis, i.e., on a daily or weekly basis.

Observations: There was a perception that developers are reluctant to use software quality analysis in any form, static or dynamic. However, the data we collected shows that this is far from the truth, as 82% of developers claim to use software quality analysis in one form or another. We believe that the increased focus on continuous integration (CI) and continuous delivery (CD) in the DevOps community, as well as the availability of open-source tools for software quality analysis, contribute to this.

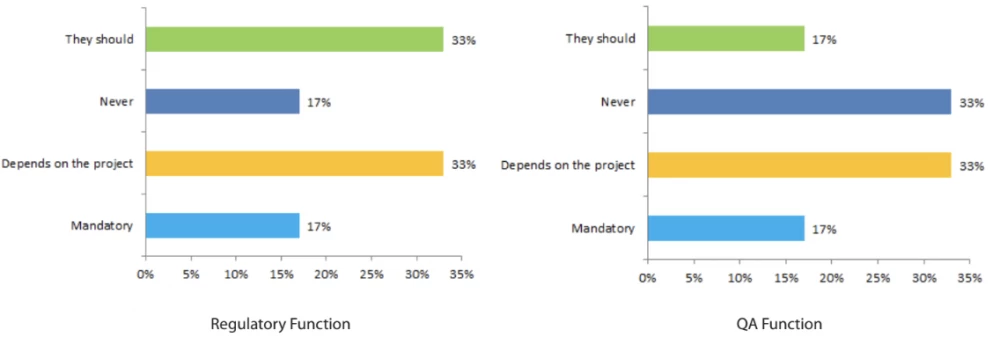

Does your internal regulatory function or quality assurance function require mandatory use of SQA by your development teams?

Results: 17% of developers report that the use of software quality analysis is mandatory as part of the internal regulatory function, a third note that it depends on the project, and another third believe that the regulatory function should require the use of SQA. This data shows significant, not yet fully realized potential for closer interaction between engineering and governance, risk management, and compliance (GRC) units to reduce software risks. The quality assurance (QA) function mirrors regulatory policy: 17% of developers report that QA makes SQA mandatory, and 33% say it depends on the project. However, a third of developers note that the QA function never requires mandatory use of code analysis.

Observations: It is clear that neither the internal regulatory function nor, surprisingly, the quality assurance function generally requires mandatory use of code quality analysis tools. It seems that the vast majority of developers decide to use SQA on their own. The project-dependent approach remains dominant, raising the question of how justified it is given the current level of IT and security risks.

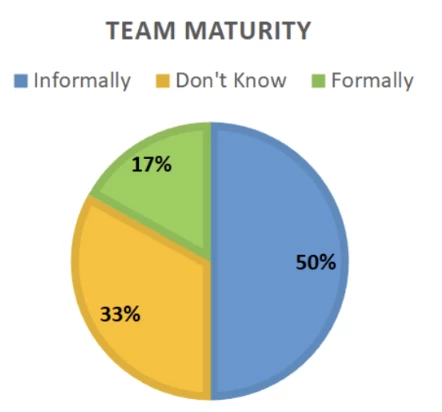

Is the degree of autonomy of your team related to the level of maturity of the team in the field of SQA and technical debt management?

Results: 50% of developers report that the level of autonomy provided to the team is informally related to the use of software quality analysis. 17% indicate that autonomy is formally related to SQA. 33% of developers do not know of any formal or informal relationship between code analysis and team autonomy.

Observations: Agile and DevOps are based on an autonomous organizational structure with self-organizing teams. However, it seems that few organizations allow autonomous teams to earn their autonomy by following the right approaches and best practices. Only 17% of developers report that their teams undergo a formal assessment of code structure quality.

We find this somewhat surprising given the prevalence of technical debt issues in many organizations. Autonomy should be linked to best practices and behaviors focused on software quality.

What standards do developers use for SQA?

Results: The most "frequently" used standards are: OWASP Top 10, ISO 25000. Standards used "sometimes" include: MISRA, MITRE CWE, SANS/CWE Top 25, OMG/CISQ.

Observations: Given its prevalence in the industry, it is not surprising that the OWASP Top 10 list is one of the most frequently mentioned standards. It is important to keep in mind that being aware of a standard and referring to it is not the same as developing code that complies with it. MISRA is an example of what we expect to see in the future: industry-specific standards for software quality. We predict that cyber-physical devices and IoT will increase the use of software quality standards specific to certain areas.

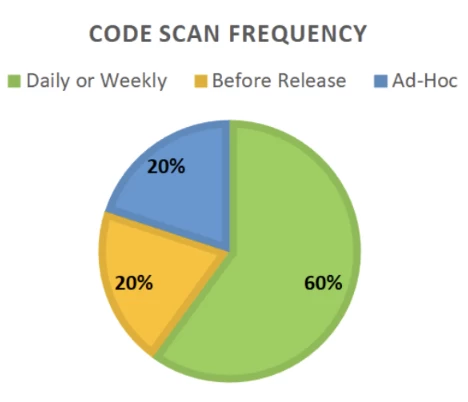

How often do you conduct SQA?

Results: 60% of developers report that code is scanned daily or weekly, 20% indicate that code is scanned before release at the quality control stage, and 20% report that code analysis is reactive and conducted as needed when a problem arises.

Observations: More than half of developers report regular use of SQA on a daily or weekly basis. This is expected, given our opinion that DevOps and CI/CD contribute to the wider adoption of SQA. We should be careful not to overstate the importance of SQA frequency: what matters most is the scan results and the refactoring that follows them. There is still a high proportion of teams where SQA is not conducted on a regular basis. Software quality analysis should be integrated into the DevOps toolkit.

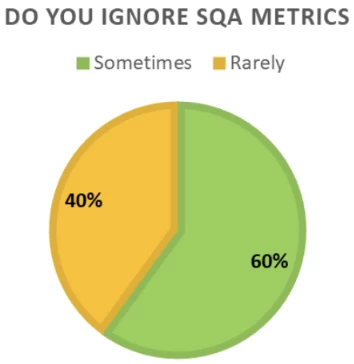

How often do you ignore SQA metrics?

Results: 60% of developers report that they "sometimes" ignore SQA results, while 40% say they "rarely" do so.

Observations: Although SQA tools have been around for some time, they are not perfect, and it should come as no surprise that they sometimes produce false positives, indicating a known vulnerability or weakness in the code that does not actually exist. Given this, it is not surprising that developers sometimes choose to ignore the results. However, we have reason to hope that developers still take the time to refactor the code, as 40% say they rarely ignore scan results. Development teams that frequently encounter false positives should invest in tuning the tools to reduce the number of false positives. In the case of the 60% of developers who "sometimes" ignore SQA reports, we believe this is due to the use of tools without proper tuning and configuration. It is important for DevOps teams to be supported by mature SQA tools that meet advanced software quality standards to minimize the problem of false positives and false negatives.
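One way to decide where tuning effort should go is to track how often each rule's findings are dismissed as false positives. The sketch below is a minimal illustration, not part of the CISQ report: the triage record format, the rule identifiers, and the `threshold` and `min_findings` values are all assumptions chosen for the example.

```python
from collections import Counter

def false_positive_rates(triaged):
    """Return {rule_id: share of that rule's findings triaged as false positives}.

    `triaged` is a list of (rule_id, verdict) pairs; this record format is
    hypothetical and would come from whatever triage process a team uses.
    """
    totals = Counter()
    false_positives = Counter()
    for rule_id, verdict in triaged:
        totals[rule_id] += 1
        if verdict == "false_positive":
            false_positives[rule_id] += 1
    return {rule: false_positives[rule] / totals[rule] for rule in totals}

def rules_to_tune(triaged, threshold=0.5, min_findings=3):
    """List rules whose false-positive share meets the (assumed) threshold.

    Rules with fewer than `min_findings` triaged findings are skipped,
    because a rate computed from one or two findings says very little.
    """
    totals = Counter(rule_id for rule_id, _ in triaged)
    rates = false_positive_rates(triaged)
    return sorted(
        rule for rule, rate in rates.items()
        if rate >= threshold and totals[rule] >= min_findings
    )

# Illustrative triage history with made-up rule identifiers.
triaged = [
    ("R1", "false_positive"), ("R1", "false_positive"), ("R1", "confirmed"),
    ("R2", "confirmed"), ("R2", "confirmed"), ("R2", "confirmed"),
    ("R3", "false_positive"),
]
candidates = rules_to_tune(triaged)
```

Here `candidates` contains only `R1`: two of its three findings were dismissed, while `R3` has too few findings to judge. Rules flagged this way are candidates for reconfiguration, not automatic removal.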

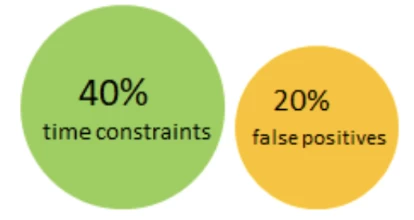

What is the most common reason for ignoring SQA metrics?

Results: The most common reason developers ignore SQA metrics is lack of time (40% of responses), 20% point to false positives, and the rest noted "not applicable" or "other".

Observations: The fact that only one fifth of developers ignore SQA metrics because of false positives is a sign of increasing tool maturity; we expected this figure to be higher.

Business and application managers should note that 40% of developers ignore results due to lack of time. This contradicts Agile values related to delivering value to the customer and is related to a lack of understanding of the impact of non-functional requirements by product owners and product managers. It is not surprising that developers, in conversation, often describe SQA as a waste of time when they receive conflicting signals from the business and its representatives. Software quality needs a champion within the company, and this issue should be led by the business.

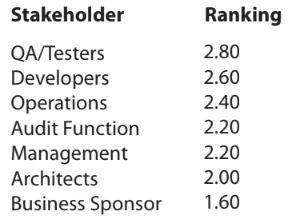

Who benefits the most from using SQA?

Results: The top 3 stakeholders who benefit the most from SQA, in the following order: 1) QA/Testers, 2) Developers, 3) Operations teams.

Observations: An interesting and somewhat surprising result was that so few developers believe that SQA brings direct benefits to the end customer or business sponsor. Given our previous remark that 40% of developers ignore SQA due to lack of time, it is understandable why they pick up the message from the business that it is not important to the customer.

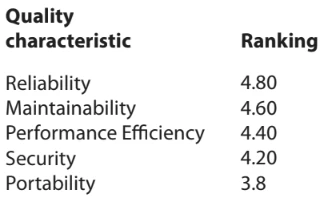

In which areas of code quality does SQA bring the most benefit?

Results: The top 3 areas of code quality that benefit the most from code analysis, in the following order: 1) Reliability, 2) Maintainability, 3) Performance efficiency.

Observations: It is evident that developers understand the relationship between SQA and classical non-functional areas. This raises concerns, returning to the earlier question on how often SQA metrics are ignored, that teams disregard SQA results. It also suggests an interesting observation. If developers believe that SQA does not bring significant value to the end customer, as we saw in the previous question, does this mean that they do not consider reliability and performance important for the customer? These somewhat contradictory results bring us back to the long-familiar topic of non-functional requirements (NFR), which are consciously or subconsciously considered secondary. It is not surprising that reliability and security rank high in terms of SQA benefits.

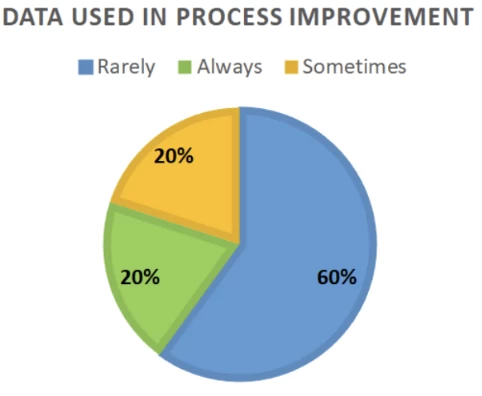

Does your team use SQA data to improve processes?

Results: 60% of developers claim that their teams "rarely" use software analysis data in retrospectives or to improve processes, 20% do this "sometimes", and 20% "always".

Observations: It is somewhat surprising that only one-fifth of developers work in teams where SQA results are always used in the improvement process and retrospectives. SQA is an indicator of the maturity of both individual specialists and the team in software development and is directly related to supporting practices and roles. We expected this figure to be higher. Our recommendation is to use SQA not only in retrospectives but also as part of the cross-training process to help developers improve their technical skills and code architecture.

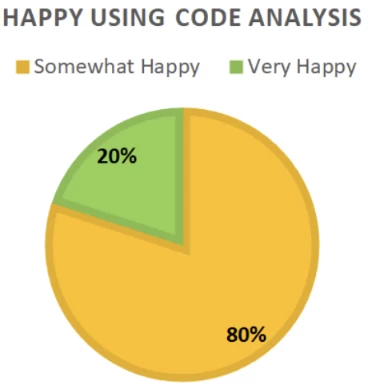

Are you satisfied with the use of SQA tools?

Results: 80% of developers report that they are "somewhat satisfied" with the use of code analysis tools, and 20% are "very satisfied." None of the respondents reported being "dissatisfied."

Observations: Although none of the survey or interview respondents explicitly said they disliked SQA tools, we get the impression that there are people who are not satisfied with the use of the tools but choose not to speak out about it. In most cases (80%), developers say they are only "somewhat satisfied." This may be due to the lack of calibration and integration of many tools, leading to manual processes and false positives. Additionally, developers in general may not like it when a machine (or someone else) points out mistakes they have made.

We still have a way to go before SQA is seen as just as important as continuous integration (CI) and test-driven development (TDD). Nevertheless, the conversation should start with the business and move away from the poorly named "non-functional requirements". If reliability, performance efficiency, security, and maintainability are labeled as non-functional, they will continue to take a back seat to customer-facing features.

Conclusion

Time pressure on developers, product owners, and product managers exacerbates the negative attitude towards software quality analysis and non-functional requirements. We must consider that developers ignore SQA results and are not particularly happy with using these tools. It is easy to blame developers, but we believe this reflects the environment in which they work. Our recommendation is to ban the term “non-functional requirements” (NFRs). Until we do this, NFRs will be considered secondary. It is clear that developers are receiving mixed signals. Despite the high percentage of developers using SQA, the percentage of those who use it proactively to improve processes and act on the findings is lower than we would like.

Recommendations for management

Application managers and Scrum Masters should pay attention to their own behavior and attitude towards non-functional requirements. They should proactively work with product owners or project managers to ensure that NFRs receive proper attention. We have found that a conversation with the product management team focused solely on technical debt issues is not constructive. This discussion should be conducted in the context of business outcomes and risks.

The management team should ensure proper use of SQA. For example, teams should configure SQA tools to reduce false positives. It is also necessary to ensure that SQA tools are integrated into the toolchain. We must understand that this may mean reorganizing QA and testing functions so that teams conduct more quality testing related to NFR.

Best practice is to link the level of autonomy given to each team clearly and consistently to the relevant quantitative and qualitative key performance indicators (KPIs) or objectives and key results (OKRs), namely the team's ability to deliver high-quality code with low technical debt and high maintainability, reliability, and performance. Obviously, code security is also a key indicator of team maturity. Teams that do not demonstrate consistent best practices in these areas will be limited in release frequency, ability to sign off changes without approval, and code review frequency.

Recognizing that we are in a world where organizations provide development teams with greater autonomy, we must consider the need for enterprise-level standards. In this study, we found that teams that have a designated person responsible for the standards that the team will use have fewer software defect incidents, less technical debt, and more consistent tools, i.e., fewer false positives. Therefore, we recommend that each team have a "quality champion" who will promote and support the use of appropriate standards. This person should not take on responsibility for quality; they are simply a champion within the team. We found that communities of practice (CoP) are a useful tool for developing developer-led standards.

From a development culture perspective, and in line with Agile and DevOps, we need to shift the behavior and actions of teams from a focus on quality assurance (QA) to a focus on quality engineering (QE). This goes beyond test-driven development (TDD) or behavior-driven development (BDD) practices and requires a fundamental shift towards the lean manufacturing philosophy of ensuring quality at every stage.

Recommendations for development

It is encouraging to see that the level of SQA usage is higher than we expected. However, it is obvious that we still have a long way to go before SQA is used effectively. Our first recommendation is that teams stop using the term "non-functional requirements" (NFR) and educate their business analysts and product owners on how important these requirements are for the end consumer.

We recommend treating SQA tools the same way as CI/CD tools, integrating them into the toolchain with a high level of automation. For this to be successful and not create problems for developers, SQA tools must be configured to the programming environment and relevant coding standards to reduce the number of false positives.
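As a rough illustration of what this kind of automation can look like, the sketch below implements a simple quality gate: it takes a list of findings, drops rules the team has deliberately suppressed during tuning, and reports whether anything at or above a chosen severity should block the build. The finding format, severity names, rule identifiers, and the `fail_at` default are all assumptions made for the example and do not correspond to any particular SQA tool.

```python
# Assumed severity scale; real tools use their own names and levels.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}

def blocking_findings(findings, suppressed_rules=frozenset(), fail_at="high"):
    """Return the findings that should block the build.

    A finding blocks when its rule is not in the team's suppression list
    and its severity is at or above `fail_at`.
    """
    threshold = SEVERITY_RANK[fail_at]
    return [
        f for f in findings
        if f["rule"] not in suppressed_rules
        and SEVERITY_RANK[f["severity"]] >= threshold
    ]

def gate_exit_code(findings, suppressed_rules=frozenset(), fail_at="high"):
    """Return 0 when the gate passes and 1 when it fails (CI convention)."""
    blocking = blocking_findings(findings, suppressed_rules, fail_at)
    for f in blocking:
        print(f"{f['path']}: [{f['severity']}] {f['rule']}")
    return 1 if blocking else 0

# Illustrative findings with made-up rule identifiers and paths.
findings = [
    {"rule": "R1", "severity": "critical", "path": "billing/invoice.py"},
    {"rule": "R2", "severity": "low", "path": "ui/banner.py"},
    {"rule": "R3", "severity": "high", "path": "legacy/report.py"},
]
# R3 is suppressed here as an example of a rule tuned out as a known false positive.
exit_code = gate_exit_code(findings, suppressed_rules={"R3"})
```

In a pipeline step, the returned value would be passed to `sys.exit` so that a failing gate stops the build. Keeping the suppression list in version control makes each tuning decision visible and reviewable.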

Teams should use a data-driven approach to prioritize refactoring tasks. SQA tools configured to the team's codebase and programming styles can help prioritize work and make the case to the product owner or product manager for allocating time to refactoring. To set prioritization goals and reduce internal disputes, we recommend consistently using code quality standards along with SQA tools. Additionally, teams should use standards that can be automated and do not require manual intervention.
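A data-driven prioritization can be as simple as combining SQA findings with how often a file changes, so that refactoring time goes first to code that is both problematic and actively worked on. The sketch below assumes a hypothetical scoring rule (severity-weighted findings multiplied by recent commit count); the report does not prescribe a formula, and the field names and sample figures are invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class FileStats:
    path: str
    weighted_findings: int  # SQA findings, weighted by severity (assumed input)
    recent_commits: int     # commits touching the file in a recent window (assumed input)

def priority_score(stats: FileStats) -> int:
    """Assumed heuristic: findings matter more in code that changes often."""
    return stats.weighted_findings * stats.recent_commits

def refactoring_backlog(all_stats):
    """Return files ordered from highest to lowest refactoring priority."""
    return sorted(all_stats, key=priority_score, reverse=True)

# Illustrative data with made-up paths and numbers.
stats = [
    FileStats("orders/checkout.py", weighted_findings=10, recent_commits=2),
    FileStats("orders/pricing.py", weighted_findings=4, recent_commits=12),
    FileStats("archive/export.py", weighted_findings=30, recent_commits=0),
]
backlog = [s.path for s in refactoring_backlog(stats)]
```

With this data, `orders/pricing.py` ranks first even though it has the fewest findings, because it changes most often, while the untouched `archive/export.py` ranks last. A ranked list like this gives the team a concrete artifact to bring to the product owner, instead of a general appeal about technical debt.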

Given the low level of use of SQA metrics for process improvement, we recommend that all retrospectives include a review of SQA dashboards, even if it is just a quick review to avoid complacency. If teams work in organizations where autonomy must be earned based on specific metrics, this becomes even more important.

Finally, teams need to create a compelling business case for using SQA, especially if they work in environments where NFRs are considered secondary to customer-focused functionality. We have found that the best approach is to point out the causal relationship between low quality and customer experience, as well as the positive effect of using a standard SQA approach to reduce technical refactoring, which today typically accounts for 10-15% of sprint time.

"Non-functional requirements are not sufficiently emphasized in project management and are one of the main causes of budget overruns and project failures. Non-functional requirements are critical to success, and more attention needs to be paid to maintainability preparation, which is critical to the total cost of ownership."

Dr. Barry Boehm, Chief Scientist, SERC, TRW Professor of Software Engineering and Director of the Center for Software Engineering at the University of Southern California
