How AI Assistants Are Changing the Game in Software Development — Using Cursor as an Example

Hello, tekkix!

Recently, I listened to Lex Fridman's podcast with the founders of the startup Cursor. The team is building a development environment with built-in artificial intelligence. The idea is promising and could revolutionize programming. Of course, Cursor is not the only company working in this direction.

In 2021, Microsoft's GitHub and OpenAI introduced GitHub Copilot, a neural-network assistant for programmers. Yandex developed its own assistant for working with code, Yandex Code Assistant. OpenAI introduced Canvas, a new ChatGPT interface for working on text and code. In December 2024, OpenAI officially announced o3, an improved successor to o1, claiming that the model can work at the level of an experienced senior developer. The coming year promises many changes. A new paradigm of code creation is gradually emerging before our eyes, one in which AI assistants become programmers' partners: they help create, edit, and debug code, generate new code from natural-language prompts, find errors, suggest improvements, and translate code from one language to another.

Let's look at what Cursor is and how the emergence of AI assistants is changing the future of software development. The article should interest both developers and anyone who follows progress in AI.

What is Cursor

Cursor is positioned as an "AI-enhanced development environment" or a "next-generation IDE". In essence, it is a fork of the popular VS Code editor, extended with a set of intelligent features built on trained language models. Its main feature is advanced autocompletion: as soon as you start typing, Cursor immediately offers likely continuations, not just single tokens but whole constructs and even blocks of code.

Unlike traditional autocomplete tools based on syntax analysis and templates, Cursor relies on semantics and context. The editor "understands" the programmer's intent and generates code that takes into account the project's context, its class and function structure, the technology stack, and the specific domain. Cursor is a kind of "autopilot" for programming: it lets you write a third to a half fewer lines of code by hand and get a higher-quality result.

Behind the scenes, Cursor is continuously powered by an ensemble of AI models. They analyze the code for typical errors, performance issues, and violations of design principles (SOLID, DRY, KISS, etc.), building a connected semantic model of the whole project on the fly. That model is then checked against a knowledge base of antipatterns and typical errors.

Working in Cursor is like programming with a partner who not only grasps your idea instantly but can also write most of the code itself, in your own style. Cursor becomes a source of new ideas and suggests good solutions to problems. It proposes code optimizations, up to and including automatic fixes, which the programmer can accept or reject with one click.

Let's look under the hood of Cursor

Now let's talk about the technologies behind the Cursor AI assistant. The foundation is, of course, large language models (LLMs) trained on huge amounts of code from open repositories such as GitHub. Cursor uses several models of different sizes and architectures, each tailored to its own task: generating, analyzing, or explaining code. One of Cursor's co-founders compared these models to the "collective mind" of the entire global programming community.

Training models on ready-made datasets is only the first stage of their preparation. After that, Cursor's models keep being "retrained" in real time on live feedback from users. Every accepted or rejected suggestion, every interaction a developer has with the environment, is a signal the AI uses to improve. One of the key metrics Cursor trains its models on is the ratio of accepted to rejected autocompletions. If the developer accepts the suggested code, the model is doing well, and its behavior in similar situations is reinforced. If the developer rejects it, the model tries to work out what exactly the user did not like and adjusts. Reinforcement learning in action.
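To make the accept/reject signal concrete, here is a minimal sketch that treats it as a multi-armed bandit problem rather than full reinforcement learning over model weights. The "strategies" (for example, short versus long completions) and the class `FeedbackTracker` are hypothetical; nothing here reflects Cursor's actual training code.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class FeedbackTracker:
    """Counts accepted and rejected suggestions per (hypothetical) suggestion strategy."""
    accepted: dict = field(default_factory=dict)
    rejected: dict = field(default_factory=dict)

    def record(self, strategy: str, was_accepted: bool) -> None:
        # Every accept/reject click becomes one data point for that strategy.
        counts = self.accepted if was_accepted else self.rejected
        counts[strategy] = counts.get(strategy, 0) + 1

    def acceptance_rate(self, strategy: str) -> float:
        # Laplace smoothing: a strategy with no feedback yet starts at 0.5, not 0/0.
        a = self.accepted.get(strategy, 0)
        r = self.rejected.get(strategy, 0)
        return (a + 1) / (a + r + 2)

    def choose(self, strategies: List[str], epsilon: float = 0.1,
               rng: Optional[random.Random] = None) -> str:
        if rng is None:
            rng = random.Random()
        # Epsilon-greedy: usually exploit the best-rated strategy, sometimes explore.
        if rng.random() < epsilon:
            return rng.choice(strategies)
        return max(strategies, key=self.acceptance_rate)
```

The small exploration rate ensures that rarely chosen strategies still get a chance to collect feedback; without it, an early run of rejections could lock a strategy out permanently.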

The company invests heavily in tailoring its models to developers' needs. To do this, it regularly generates synthetic datasets and tasks that simulate real development scenarios. For example, to train a model to find vulnerabilities, working code is first deliberately "spoiled" by injecting errors, and the resulting examples are then fed to the detector model. Another curious technique used at Cursor is adversarial ("competitive") training: one model generates code from a task description, while another tries to find errors or vulnerabilities in it. Through this AI confrontation both models improve, an idea reminiscent of generative adversarial networks (GANs).
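The "spoil the code, then train a detector" idea is close to mutation testing. Below is a minimal sketch, assuming Python source as input and a single hypothetical mutation (turning `<` into `<=` to create an off-by-one bug); the labeled pairs it produces are the kind of synthetic examples a bug detector could be trained on. It is not Cursor's pipeline and uses only the standard `ast` module (Python 3.9+ for `ast.unparse`).

```python
import ast
from typing import List, Tuple


class OffByOneMutator(ast.NodeTransformer):
    """Injects a classic off-by-one bug by turning every `<` into `<=`."""

    def __init__(self) -> None:
        self.mutations = 0

    def visit_Compare(self, node: ast.Compare) -> ast.Compare:
        self.generic_visit(node)  # mutate nested comparisons too
        new_ops = []
        for op in node.ops:
            if isinstance(op, ast.Lt):
                new_ops.append(ast.LtE())
                self.mutations += 1
            else:
                new_ops.append(op)
        node.ops = new_ops
        return node


def make_training_examples(source: str) -> List[Tuple[str, int]]:
    """Returns (code, label) pairs: 0 = original code, 1 = code with an injected bug."""
    examples = [(source, 0)]
    mutator = OffByOneMutator()
    mutated_tree = mutator.visit(ast.parse(source))
    if mutator.mutations:
        examples.append((ast.unparse(mutated_tree), 1))
    return examples
```

Working on the syntax tree rather than on raw text guarantees that every "spoiled" sample is still valid Python, so the detector learns to spot logic bugs rather than syntax errors.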

Finally, an important aspect of training Cursor's models is collecting live data. In real-world projects, models have to deal with unique technology stacks, business domains, and team practices. Without accounting for these specifics, even the smartest model risks giving advice disconnected from the realities of a particular company. Of course, sending a company's internal code to a third party for training is a sensitive issue. To mitigate these risks, Cursor has implemented a whole system of data privacy and security controls. In particular, code reaches the model only after it has been anonymized and sensitive artifacts such as access tokens, keys, and personal data have been obfuscated.
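A very simple form of this kind of sanitization is pattern-based redaction before code leaves the developer's machine. The sketch below assumes a handful of illustrative regular expressions (AWS-style access key IDs, GitHub classic personal access tokens, e-mail addresses, and string values assigned to names like `password`). It is not Cursor's algorithm; production secret scanners use far larger and more careful rule sets.

```python
import re

# Illustrative patterns only; real secret scanners use much larger rule sets.
TOKEN_PATTERNS = [
    ("AWS_ACCESS_KEY", re.compile(r"\bAKIA[0-9A-Z]{16}\b")),
    ("GITHUB_TOKEN", re.compile(r"\bghp_[A-Za-z0-9]{36}\b")),
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
]

# String literals assigned to suspicious names, e.g. password = "hunter2".
ASSIGNMENT_PATTERN = re.compile(
    r"(?i)\b(password|secret|api_key|token)\b(\s*[:=]\s*)([\"'])(.*?)\3"
)


def redact(source: str) -> str:
    """Replaces likely secrets and personal data with placeholder tags."""
    for label, pattern in TOKEN_PATTERNS:
        source = pattern.sub(f"<{label}>", source)
    # Keep the variable name and quotes so the code still parses; hide only the value.
    return ASSIGNMENT_PATTERN.sub(
        lambda m: f"{m.group(1)}{m.group(2)}{m.group(3)}<REDACTED>{m.group(3)}",
        source,
    )
```

Replacing values with typed placeholders such as `<EMAIL>` rather than deleting them keeps the code structurally intact, so a model can still learn from the surrounding logic.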

This year, the London startup Human Native AI made a splash. The team is building a marketplace for licensing data to train AI models. Its creators want to ensure a transparent and fair exchange between companies developing large language models (LLMs) and content rights holders. In essence, Human Native AI is a platform where publishers and other rights holders can offer their data, and AI developers can legally acquire it to train models. The startup helps rights holders prepare and evaluate content, monitors copyright compliance, offers different deal formats, from revenue sharing to subscriptions, and handles all the details of transactions and monitoring. Overall, it meets the AI industry's need for big data for machine learning without forgetting the interests of those who created and own the content. The startup launched in April 2024, raised £2.8 million from the British funds LocalGlobe and Mercuri, and is now developing the platform: closing its first deals and gaining momentum. If the topic interests you, let me know and I will cover it in more detail in a separate article. The point is that a new market is taking shape, a marketplace for AI datasets, which could become a promising source of revenue for companies.

UX is in the details

Cursor pays special attention to developer feedback. The team strives to make the interaction between programmer and AI as natural, intuitive, and unobtrusive as possible. Prompts, autocompletions, explanations, and warnings from the models should appear exactly when they are needed, in a form that helps rather than distracts. To refine the UX, the Cursor team works closely with the community: it rolls out new features to a limited group of users as early as possible, even while they are still "raw", and watches how developers interact with them.

By analyzing usage patterns and frustration points, the team can quickly test hypotheses, discard ideas that don't work, and refine functionality to match what people actually need. For example, the first versions of the advanced autocomplete made some developers feel they were losing control over the process of writing code, which was unsettling. The team significantly reworked the autocomplete UX. It now guesses the developer's intent more accurately and offers options unobtrusively in pop-up windows, never changing the code without the person's consent. All AI-suggested fragments are visually distinct and easy to roll back if necessary.

Cursor's own developers, as active users of their product, are often a source of ideas for improving it. For example, the "smart" auto-import of missing dependencies grew out of one engineer's frustration at manually adding the required libraries when copying code from an old project.

Ethical and technical challenges along the way

Despite all the successes and prospects of AI, Cursor, like other similar companies, has to solve many hard engineering, product, and even philosophical problems along the way. Chief among them is making AI systems stable, predictable, and reliable. It is no secret that neural networks have "quirks" and make errors whose causes are not always obvious. How do you make sure an AI assistant gives sensible advice and does not suggest dangerous or incorrect changes to the code? How do you eliminate "toxic" recommendations that could lead to data leaks, performance degradation, or broken builds?

At Cursor, all AI recommendations undergo multi-stage filtering for potential harm and security violations. Models are "retrained" based on feedback from developers who reject incorrect suggestions. Finally, all generated AI code fragments are specially marked and can be easily disabled. Plans include integration with CI/CD systems for automatic testing and rollback of unsafe commits.
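A multi-stage filter can be pictured as a chain of independent checks, each of which may veto a suggestion and explain why. This is a minimal sketch assuming suggestions are Python snippets; the two checks (does the snippet parse, does it call `eval`, `exec`, or `system`) and the deny-list are hypothetical examples, not Cursor's actual rules.

```python
import ast
from typing import Callable, List, Optional

# A check returns a rejection reason, or None if the snippet passes.
Check = Callable[[str], Optional[str]]

DANGEROUS_CALLS = {"eval", "exec", "system"}  # hypothetical deny-list


def must_parse(code: str) -> Optional[str]:
    try:
        ast.parse(code)
    except SyntaxError as exc:
        return f"syntax error: {exc.msg}"
    return None


def no_dangerous_calls(code: str) -> Optional[str]:
    try:
        tree = ast.parse(code)
    except SyntaxError:
        return None  # already reported by must_parse
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            func = node.func
            if isinstance(func, ast.Name):  # eval(...)
                name = func.id
            elif isinstance(func, ast.Attribute):  # os.system(...)
                name = func.attr
            else:
                name = None
            if name in DANGEROUS_CALLS:
                return f"dangerous call: {name}()"
    return None


def run_filters(code: str, checks: List[Check]) -> List[str]:
    """Runs every stage and collects all rejection reasons; an empty list means accept."""
    reasons = []
    for check in checks:
        reason = check(code)
        if reason is not None:
            reasons.append(reason)
    return reasons


DEFAULT_CHECKS: List[Check] = [must_parse, no_dangerous_calls]
```

Keeping each stage a small, independent function makes it easy to add new checks (for example, a secret scanner) without touching the others, and the collected reasons can be shown to the developer alongside the rejected suggestion.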

Another serious issue is ensuring privacy when collecting data and retraining models on client code. Companies are meticulous about their own intellectual property and code bases. Leakage of proprietary code, especially with descriptions of internal systems and business logic, is a "nightmare" for any technology company.

To address these concerns, Cursor has implemented a privacy system based on the concepts of federated learning and private computation. In short, models are retrained on client data without transferring the code itself: only intermediate "meta-updates" to the models are sent, designed so that the original code cannot be reconstructed from them. In addition, personal data and other sensitive artifacts are removed from the code on the client machines using special obfuscation algorithms.
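The textbook form of federated learning is federated averaging (FedAvg): each client trains on its own data and sends back only updated model weights, which the server averages in proportion to each client's dataset size. The sketch below applies it to a toy linear model; the data, learning rate, and round counts are arbitrary assumptions, and this is an illustration of the concept rather than Cursor's system. Note that in this plain form the shared weights can still leak some information about the data, which is why real deployments typically add secure aggregation or differential privacy on top.

```python
from typing import List, Tuple

Sample = Tuple[List[float], float]  # (features, target)


def local_update(weights: List[float], data: List[Sample],
                 lr: float, epochs: int) -> List[float]:
    """Runs SGD for a linear model on one client's private data; only weights leave the client."""
    w = list(weights)
    for _ in range(epochs):
        for x, y in data:
            error = sum(wi * xi for wi, xi in zip(w, x)) - y
            w = [wi - lr * error * xi for wi, xi in zip(w, x)]
    return w


def federated_average(weights: List[float], clients: List[List[Sample]],
                      rounds: int = 100, lr: float = 0.05,
                      epochs: int = 20) -> List[float]:
    """FedAvg: the server never sees client data, only each client's updated weights."""
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        updates = [local_update(weights, data, lr, epochs) for data in clients]
        # Weighted average: clients with more data get proportionally more influence.
        weights = [
            sum(update[i] * len(data) for update, data in zip(updates, clients)) / total
            for i in range(len(weights))
        ]
    return weights
```

For example, if one client holds the points x = 1, 2, 3 and another holds x = 0.5, 1.5, all drawn from y = 2x + 1 (with a constant second feature of 1.0 acting as the bias), the averaged weights converge toward [2, 1] even though neither client ever shares its samples.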

There are also important limitations on the part of the development environment that prevent AI systems from "going too far." In particular, Cursor is designed so that all actions of the AI assistant are advisory in nature and must be confirmed by the developer. Models can suggest code changes, but they cannot make them on their own without the programmer's approval. This is a fundamental position intended to keep the human "in the loop" of decision-making.

How will the profession of a programmer be transformed under the influence of AI tools?

Some routine tasks, such as refactoring, formatting, and writing documentation, are already being automated by "intelligent assistants". What if tomorrow AI learns to implement entire features on its own from a product manager's written specification? Discussing Cursor's future, its creators tend to believe that it will not take jobs away from programmers; quite the opposite, there will be more work. Arvid Lunnemark, who is responsible for the company's long-term strategy, offers an analogy from aviation. Modern autopilots handle a huge number of routine tasks, from holding altitude and heading to navigation and even landing. Yet no sane person proposes removing pilots from the cockpit and relying entirely on the machine.

Thanks to AI assistants, programmers will be able to delegate part of the routine, "mechanical" work to machines: finding and fixing trivial errors, refactoring, formatting, basic optimization. Truly creative tasks, however, will remain in their hands: inventing new software solutions, designing innovative architectures, improving UX, performance, and scalability. A hard question is how to balance AI "autonomy" against human control. As the technology develops and developers' trust in AI grows, the balance may gradually shift from "assistance" to full "partnership".

In short, we are promised that the programming profession will reach a new level. Future developers will dig less and less into low-level implementation details and syntax and think more and more at the level of architectures, abstractions, and domain models. Their main "superpower" will be managing AI tools effectively. The ability to quickly assemble working solutions from "blocks" of AI-generated code and services will also be valued. Soft skills will matter too: the ability to dive into the problem domain, understand end users' needs, and communicate with product managers and even clients. After all, if AI takes on the lion's share of routine coding, developers will have to move up a level and take an active part in discussions of the product, its design and functionality, and user scenarios.

Another problem: if AI tools make writing code so easy, fast, and enjoyable, the risk of getting "hooked" on automation and gradually losing fundamental programming skills grows. To prevent this, Cursor builds in "foolproofing" from the start that limits how much code can be generated automatically. The company also plans to work actively with educational platforms and communities to teach young programmers to use AI tools responsibly. AI should be seen as a "calculator" for the developer, not a "crutch".

According to Cursor's founders, over the next 10 years the line between "intelligent development tools" like Cursor and "full-fledged AI programmers" will gradually blur. Even now, Copilot can generate quite meaningful code from a textual description of a task. What if tomorrow systems like GPT-6 or PaLM learn to talk to a manager in natural language, ask clarifying questions, pin down requirements, and then independently break the task into subtasks, design the architecture, and write 80% of the code, leaving the human only a small share of truly non-trivial engineering decisions?

Over a 20 to 30 year horizon, AI systems may well master not only routine development but also product design, and even make it possible to "program reality" in natural language. A manager will be able to describe the desired product, and the AI will "think through" the details, choose the stack, generate the code, and deploy and maintain it. However, as the developers of such intelligent systems themselves note, we are still very far from "AI that will fire everyone". In reality, progress will be gradual, sometimes painful, with setbacks and disappointments. Not only the tools will change, but also culture, processes, and development methodologies. Cursor already sees the first signs of these changes: the line between programming and using AI tools is blurring, the cycle of writing and testing code is speeding up, and the focus is shifting to interactive debugging instead of classic deferred testing.

It is still not entirely clear where all this will lead. The Cursor founders' advice: don't be afraid to experiment with AI, but stay skeptical and question the models' recommendations. Dose automation sensibly in your work so you don't lose your grip on fundamental skills. And most importantly, develop the ability to effectively "conduct" an orchestra of AI tools.

One of Cursor's clients, a large bank, ran an experiment in the form of a "competition": a team of human developers and an AI system based on Cursor tackled the same real business problem. The AI performed excellently, surpassing the humans in both speed and code quality. Yet the final product was still assembled by the human team, and some of their solutions were borrowed from the AI. The result was exactly the synergistic "centaur" effect that Cursor is aiming for.

Bonus: list of tools

By the way, here is a short list of relevant AI tools for developers that my colleagues compiled.

Cursor.com: IDE with integrated AI.

v0.dev: a tool for frontend development.

replit.com: online IDE with AI agent.

bolt.new: a tool for rapid application creation.

GitHub Copilot by Microsoft: a code assistant that has become a standard tool for many developers.

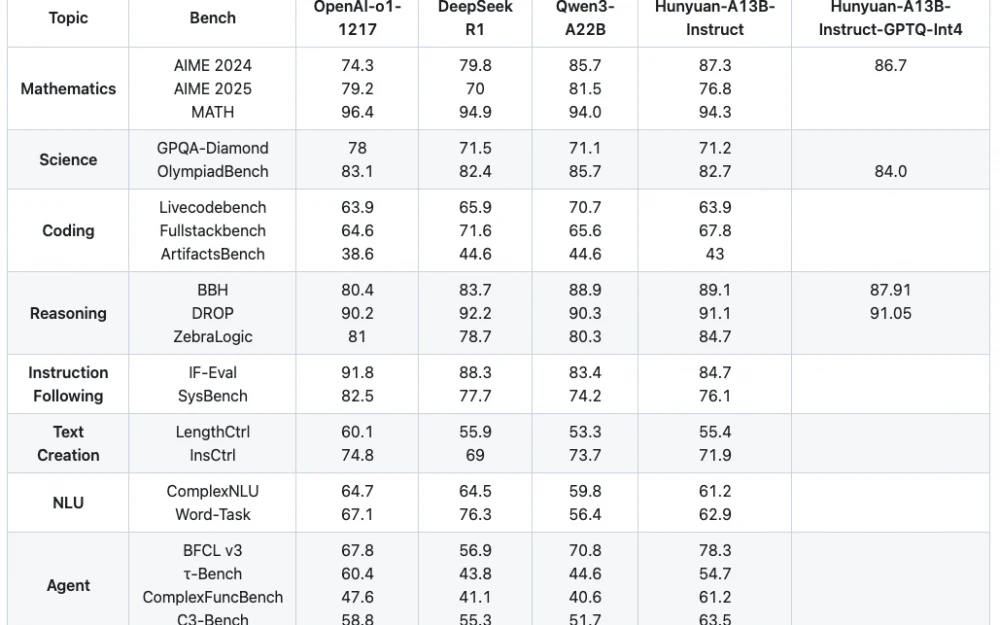

Recently, OpenAI announced a new generation of AI models, o3 (billed as the smartest AI in the world), and the results are impressive. On the SWE-bench benchmark, the model showed a significant improvement in finding and fixing bugs in code, and on Codeforces it reached a rating at the level of an International Grandmaster. For a detailed analysis of the new model's capabilities and its impact on the future of software development, I recommend the article by mathematics professor Vladimir Krylov.

Conclusion

The podcast with the Cursor team was informative. Of course, much about AI assistants for developers is still "raw" and far from ideal: sometimes the models make mistakes, sometimes they are annoyingly intrusive, and sometimes, on the contrary, they underdeliver. But progress is rapid.

The superpowers that AI tools like Cursor provide are a great bonus. The future seems to belong to those who learn to manage these tools masterfully: combining their capabilities and choosing the best solution for each task. In effect, a new specialization is forming, a kind of "DevOps for AI in development". At the same time, AI progress raises legitimate concerns among programmers: no one wants their skills to become obsolete overnight because of a machine that does the same job ten times faster and cheaper.

But I believe in a different scenario: the future lies in hybrid systems where AI takes over the routine and the programmer becomes a true techno-creator, strategist, and a bit of a magician. I recommend listening to the podcast, trying Cursor and similar tools, and experimenting with them. Just don't forget about critical thinking: the ability to control and direct technology is what distinguishes us from machines.

How do you feel about AI assistants for developers? Are you already using something similar in your work? What pros and cons do you see? Share in the comments!
