Tutorial: running the Hunyuan-A13B model on vLLM and on llama.cpp

A new model was recently released: Hunyuan-A13B.

https://huggingface.co/tencent/Hunyuan-A13B-Instruct-GPTQ-Int4 (this is the quantized version).

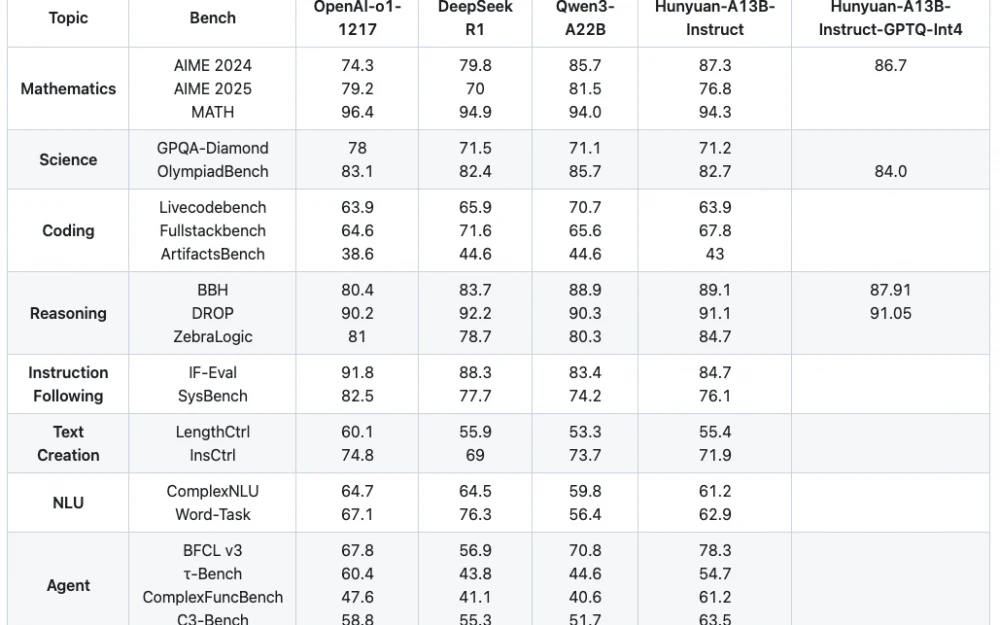

According to the tests, the model is on par with the best ones:

This is an MoE (Mixture of Experts) model. It has 80 billion parameters in total, but only a limited number of "experts" are activated to compute each next token, so only about 13 billion parameters are used per token.
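To make the "only some experts run" idea concrete, here is a toy top-k routing layer in plain Python. It is a generic illustration of MoE routing under simple assumptions (scalar-scaling "experts", softmax gating over the chosen experts), not Hunyuan's actual router; the expert count, k and logits are made up for the example.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x: Vector, experts: List[Expert], router_logits: List[float],
              k: int) -> Tuple[Vector, List[int]]:
    """Toy top-k Mixture-of-Experts layer (hypothetical, not Hunyuan's code)."""
    # Pick the k experts with the highest router scores.
    chosen = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Renormalise the scores over the chosen experts only.
    gates = softmax([router_logits[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)  # only the chosen experts do any computation
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


# Four toy "experts" that just scale the input by 1, 2, 3 and 4.
experts = [lambda v, s=s: [s * a for a in v] for s in (1.0, 2.0, 3.0, 4.0)]
out, chosen = moe_layer([1.0, 1.0], experts, router_logits=[0.1, 2.0, 0.5, 2.0], k=2)
print(chosen, out)  # prints: [1, 3] [3.0, 3.0]
```

Experts 0 and 2 are never called, which is the same reason an 80B MoE model only "uses" about 13B parameters per token.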

I've noticed that when new models come out, support usually appears first in engines like vLLM, while support in the more "popular" llama.cpp tends to arrive a few weeks later.

vLLM

https://github.com/vllm-project/vllm

This is a more "enterprise-oriented" engine.

It has better support for handling multiple requests simultaneously.

How is vLLM different from llama.cpp?

llama.cpp supports GGUF quants, while vLLM's GGUF support is limited (https://docs.vllm.ai/en/latest/features/quantization/index.html). On the other hand, vLLM supports GPTQ INT4 quants, which llama.cpp cannot load at all.

llama.cpp can split a model across one or several GPUs and, if needed, keep part of it in system RAM. vLLM gives you far fewer options here: running on several identical GPUs is supported, but mixing different GPUs, or GPU plus RAM, didn't work for me. Maybe there is a way to hack around it, but I couldn't get it to start.

vLLM handles simultaneous requests better and uses the KV cache more efficiently across them.

For this model specifically, vLLM has another advantage: the INT4 quant was released by the model developers themselves, and according to them it was trained together with the main model, so its quality should be higher than that of, for example, GGUF quants.

The INT4 quant takes up 40 GB of VRAM, plus you need memory for the context.

So, at minimum, you need 48 GB of VRAM, which gives you a context size of 19,500 tokens.
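The memory budget above can be estimated roughly: weights take parameters × bits / 8 bytes, and every token of context needs a key and a value vector per layer and per KV head. Below is a minimal sketch of that arithmetic. The architecture values (32 layers, 8 KV heads, head size 128, fp16 cache) are placeholders I have assumed for illustration, not confirmed Hunyuan-A13B numbers; read the real ones from the model's config.json. Also keep in mind that vLLM by default only uses about 90% of VRAM (--gpu-memory-utilization) and reserves some memory for activations, so the real context limit is lower than a naive estimate.

```python
def weight_bytes(num_params: float, bits_per_param: int) -> float:
    # Rough size of quantised weights; ignores scales/zero-points and unquantised layers.
    return num_params * bits_per_param / 8


def kv_bytes_per_token(num_layers: int, num_kv_heads: int, head_dim: int,
                       dtype_bytes: int = 2) -> int:
    # Factor 2: every layer stores one key and one value vector per KV head.
    return 2 * num_layers * num_kv_heads * head_dim * dtype_bytes


def max_context_tokens(free_bytes: float, per_token: int) -> int:
    # How many tokens of KV cache fit into the memory left after the weights.
    return int(free_bytes // per_token)


# 80B parameters at 4 bits each: about 40 GB, matching the INT4 quant size above.
print(weight_bytes(80e9, 4) / 1e9)  # 40.0

# PLACEHOLDER architecture values (assumed, not verified): check num_hidden_layers,
# num_key_value_heads and the head size in the model's config.json.
per_token = kv_bytes_per_token(num_layers=32, num_kv_heads=8, head_dim=128)
print(per_token)  # 131072 bytes = 128 KiB per token with an fp16 cache
print(max_context_tokens(4e9, per_token))  # tokens that fit in a hypothetical 4 GB budget
```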

I ran it on a Chinese-modified card: an RTX 4090D with 48 GB of VRAM.

Running vLLM in Docker compose

```yaml
services:
  vllm:
    image: vllm/vllm-openai:latest
    container_name: Hunyuan80B
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              capabilities: [gpu]
    ports:
      - "37000:8000"
    volumes:
      - /home/user/.cache/huggingface:/root/.cache/huggingface
    ipc: host
    command:
      - "--model"
      - "tencent/Hunyuan-A13B-Instruct-GPTQ-Int4"
      - "--trust-remote-code"
      - "--quantization"
      - "gptq_marlin"
      - "--max-model-len"
      - "19500"
      - "--max-num-seqs"
      - "4"
      - "--served-model-name"
      - "Hunyuan80B"
```

I tested version 0.9.2.

The engine takes quite a while to start: about three minutes.

If you don't have a 48 GB GPU, it should probably run on two 24 GB cards. In that case, add "--tensor-parallel-size" and "2" to the command list.

Adjust the volumes entry to point to your own Hugging Face cache directory.

Once the container starts, only the REST API (OpenAI-compatible) is available; vLLM has no UI.

You'll need to send requests with curl or connect a front end such as OpenWebUI.
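If you'd rather script the requests than use curl, here is a minimal client sketch using only the Python standard library. It assumes the server is reachable at localhost:37000 (the host port mapped in the compose files) and uses the served model name "Hunyuan80B"; both vLLM and llama-server expose the OpenAI-style /v1/chat/completions endpoint, so the same function should work against either engine. The max_tokens default and the timeout are arbitrary choices.

```python
import json
import urllib.request

BASE_URL = "http://localhost:37000"  # assumption: host port from the compose files


def build_chat_request(prompt: str, model: str = "Hunyuan80B", max_tokens: int = 512) -> dict:
    # OpenAI-style chat completion payload.
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def chat(prompt: str, base_url: str = BASE_URL) -> str:
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Generous timeout: with reasoning enabled, answers can take a while.
    with urllib.request.urlopen(req, timeout=600) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

With the container running, print(chat("Briefly introduce yourself.")) sends one request and prints the model's reply.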

Running llama.cpp in Docker compose

The llama.cpp developers have recently added support for this model, and various quants are already available, although people say the quality of the support could still be improved.

https://github.com/ggml-org/llama.cpp

You can download the model here: https://huggingface.co/unsloth/Hunyuan-A13B-Instruct-GGUF

I used the Q4_0 quant. Its file is slightly larger than the INT4 one.

```yaml
services:
  llama-server:
    image: ghcr.io/ggml-org/llama.cpp:full-cuda
    container_name: hun80b
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              capabilities: [gpu]
    ports:
      - "37000:37000"
    volumes:
      - /home/user/.cache:/root/.cache
    entrypoint: ["./llama-server"]
    command: >
      --model /root/.cache/huggingface/hub/models--unsloth--Hunyuan-A13B-Instruct-GGUF/snapshots/14968524348ad0b4614ee6837fd9c5cda8265831/Hunyuan-A13B-Instruct-Q4_0.gguf
      --alias Hunyuan80B
      --ctx-size 19500
      --flash-attn
      --threads 4
      --host 0.0.0.0
      --port 37000
      --n-gpu-layers 999
```

llama.cpp has a decent web UI that you can open in your browser (on the same port, here http://localhost:37000) and use for queries.

Speed

Here’s what I get in vLLM logs:

Avg prompt throughput: 193.3 tokens/s, Avg generation throughput: 71.4 tokens/s

And here’s what I get in llama.cpp logs:

prompt eval time = 744.02 ms / 1931 tokens ( 2595.36 tokens per second)

eval time = 29653.01 ms / 2601 tokens ( 87.71 tokens per second)
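The llama.cpp figures are simply tokens divided by elapsed time; "prompt eval" is prompt processing and "eval" is generation, which correspond to vLLM's "prompt throughput" and "generation throughput". Here is a small sketch that recomputes them from log lines. The regex is my own and only assumes the "<ms> ms / <n> tokens" pattern shown above. Note that vLLM's lines are periodic averages rather than per-request timings, so the comparison between the two engines is approximate.

```python
import re

# Matches the "<time> ms / <n> tokens" part of llama.cpp timing lines such as
# "eval time = 29653.01 ms / 2601 tokens ( 87.71 tokens per second)".
TIMING_RE = re.compile(r"=\s*([\d.]+)\s*ms\s*/\s*(\d+)\s*tokens")


def tokens_per_second(line: str) -> float:
    match = TIMING_RE.search(line)
    if match is None:
        raise ValueError(f"not a llama.cpp timing line: {line!r}")
    elapsed_ms = float(match.group(1))
    n_tokens = int(match.group(2))
    return n_tokens / (elapsed_ms / 1000.0)


prompt_line = "prompt eval time = 744.02 ms / 1931 tokens ( 2595.36 tokens per second)"
gen_line = "eval time = 29653.01 ms / 2601 tokens ( 87.71 tokens per second)"
print(round(tokens_per_second(prompt_line), 2))  # 2595.36
print(round(tokens_per_second(gen_line), 2))     # 87.71
```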

For a single request, llama.cpp is faster. I didn't test multiple simultaneous requests, since that's not my use case, but that is where vLLM is supposed to outperform llama.cpp.

vLLM should in theory produce slightly better answers (thanks to the official INT4 quant), but I'm not sure how to test that.
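For anyone who wants to check the multi-request case, here is a minimal sketch that sends the same batch of prompts once sequentially and once with several requests in flight, then compares wall-clock time. It is not something I actually ran against either engine: fake_send is a hypothetical stand-in that just sleeps, and you would replace it with a function that posts one prompt to the server and returns the reply. Four workers match "--max-num-seqs 4" in the vLLM config.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def run_batch(send: Callable[[str], str], prompts: List[str], workers: int) -> float:
    """Send every prompt, keeping up to `workers` requests in flight; return wall-clock seconds."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() waits for every reply and re-raises any request error.
        list(pool.map(send, prompts))
    return time.perf_counter() - start


def fake_send(prompt: str) -> str:
    # Hypothetical stand-in for a real HTTP call: pretend each request takes 50 ms.
    time.sleep(0.05)
    return "ok"


prompts = ["question"] * 4
sequential = run_batch(fake_send, prompts, workers=1)
concurrent = run_batch(fake_send, prompts, workers=4)
print(f"sequential {sequential:.2f}s, concurrent {concurrent:.2f}s")
```

With a real server, the interesting number is how much the concurrent run slows down compared with a single request; an engine that batches well should stay close to it.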

By default, this model first "reasons" and then answers. If you put "/no_think" at the beginning of your query, the model skips the reasoning and answers right away, which is much faster. This works the same in both engines. By the way, "/no_think" works the same way with Qwen3 models.
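If you send queries from a script, the prefix is easy to add automatically. A minimal sketch follows; the function name is hypothetical, and separating the prefix from the prompt with a single space is my assumption (the text only says "/no_think" goes at the beginning of the query).

```python
def with_no_think(prompt: str) -> str:
    """Prefix a prompt with /no_think so the model skips its reasoning phase."""
    # Don't add the prefix twice if the caller already included it.
    if prompt.lstrip().startswith("/no_think"):
        return prompt
    return "/no_think " + prompt


print(with_no_think("What is 2 + 2?"))  # /no_think What is 2 + 2?
```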

Context

vLLM can’t handle more context than fits in GPU VRAM.

With llama.cpp, if the context doesn't fit in GPU VRAM, you can offload it to a second GPU or to regular RAM, though this slows things down significantly.

Conclusion

I’m just an ordinary user, not an expert. There are people on tekkix who know more about this. So any criticism, additions, or suggestions for other parameters and configurations are welcome.
