Integrating LLMs into Software Development: Is It Worth It?

Hello, this is Yulia Rogozina, a business process analyst at Sherpa Robotics. Today I have translated an article for you about using Large Language Models (LLMs) as part of your product, rather than using AI as a tool in the development process (for example, tools like Cursor or Zed AI).

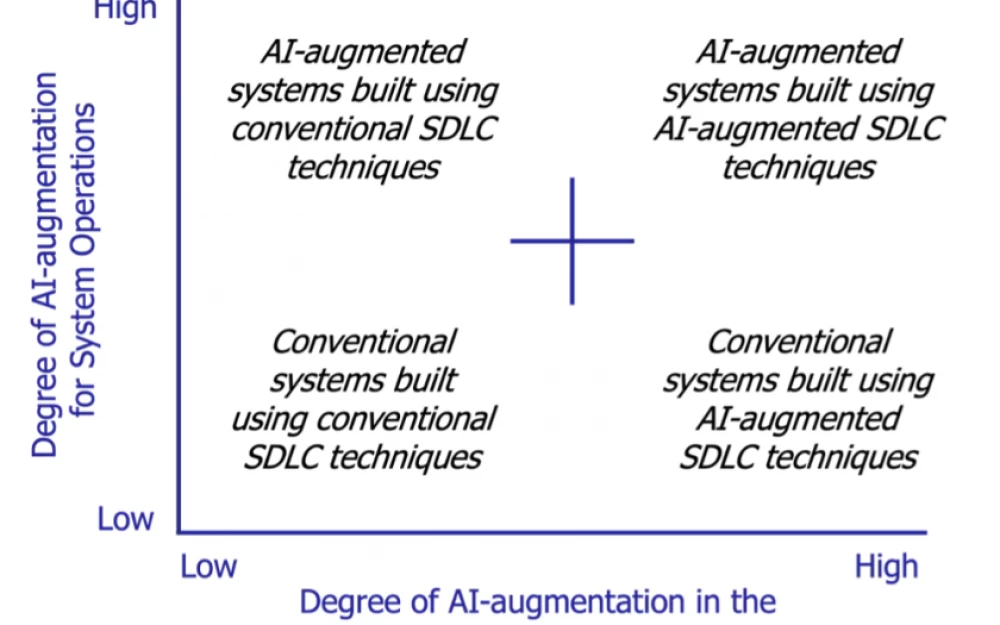

Using LLMs for specific tasks within the software development life cycle (SDLC) has its own challenges. However, it is important to remember that how we build a product usually differs from what we sell to end users.

The problem with LLMs at the moment is that they are offered to users as a whole product, much like a car, with no way to break them down into components. You pay for the whole, and it is unrealistic to expect that it can be adapted or modified at the level of individual components. This is less of a problem for cars, because driving is a strictly regulated activity. Even if you could assemble a car from individual parts, like a Lego set, it would likely not be legal.

This is probably exactly what large tech companies want: to sell you a whole product or service rather than a set of components that could be easily reworked or adapted. As a result, only a few major players remain in the market, and technologies like LLMs stay shrouded in secrecy, which keeps their value high.

LLMs contradict one of the fundamental principles of computing: that tasks can be broken down into components.

A working software component, whether built in-house or not, consists of code that can be verified with unit tests. Such components must interact with each other reliably and predictably. Take, for example, a product that uses an Oracle database. We all understand that data storage is a design element, and that the choice of storage is made at a technical level. Test scenarios are often involved at this stage, and although database technology keeps evolving, no client would think that the storage provider somehow controls the software.
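The idea of a replaceable, unit-testable storage component can be sketched roughly as follows. This is only an illustration: the `Storage` contract, the `InMemoryStorage` stand-in, and `check_storage_contract` are hypothetical names that do not come from the article, and a real product would put an actual database driver behind the same interface.

```python
from typing import Optional, Protocol


class Storage(Protocol):
    """Hypothetical storage contract (illustrative, not from the article)."""

    def put(self, key: str, value: str) -> None: ...

    def get(self, key: str) -> Optional[str]: ...


class InMemoryStorage:
    """Stand-in for a real database-backed implementation."""

    def __init__(self) -> None:
        self._rows: dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self._rows[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._rows.get(key)


def check_storage_contract(storage: Storage) -> bool:
    """Run the same checks against any implementation of Storage."""
    storage.put("order-1", "paid")
    if storage.get("order-1") != "paid":
        return False
    # Overwriting a key must replace the old value.
    storage.put("order-1", "shipped")
    if storage.get("order-1") != "shipped":
        return False
    # Unknown keys must return None rather than raise.
    return storage.get("missing") is None
```

Because the check depends only on the contract, swapping the storage vendor means re-running the same test against the new implementation, which is the kind of substitution a monolithic LLM does not currently allow.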

In academia, the lack of decomposability is often discussed together with the problem of explainability. Together they lead to a number of serious business problems that affect the use of LLMs in real software solutions.

At this point, we cannot separate the operation of a language model from the data on which it was trained. We know that LLMs undergo training, but this process is usually not transparent, and the results are presented as-is. This approach does not work in component-based development.

Security and privacy issues are inevitable because, at this moment, there is no reliable and verifiable way to indicate to the language model which parts of the information should be hidden. We cannot externally intervene in the neural network and explain to it that certain information is private and should not be disclosed.

Legal ownership remains problematic. We can prove that the result of an operation performed by conventional ("cold") computation is repeatable and always yields the same answer for the same input. However, since LLMs always carry the "burden" of the data on which they were trained, we simply cannot prove that they did not use someone else's work.
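What "repeatable" means for conventional computation can be shown with a small sketch. The function names (`invoice_total`, `fingerprint`, `is_repeatable`) and the invoice example are assumptions made for illustration; the article itself does not describe any particular check.

```python
import hashlib
import json


def invoice_total(line_items):
    """Deterministic computation: total in cents for (unit_price_cents, quantity) pairs."""
    return sum(price * qty for price, qty in line_items)


def fingerprint(value) -> str:
    """Stable SHA-256 digest of a JSON-serialisable result."""
    payload = json.dumps(value, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def is_repeatable(func, args, runs: int = 100) -> bool:
    """True if every run of func(*args) produces the same fingerprint."""
    digests = {fingerprint(func(*args)) for _ in range(runs)}
    return len(digests) == 1
```

For example, `is_repeatable(invoice_total, ([(1999, 2), (500, 1)],))` holds because the function depends only on its input. A text-generating model sampled with randomness would typically not satisfy the same check, and even when it does, the check says nothing about where the output came from.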

Companies striving to control their carbon footprint are moving in the opposite direction from LLM creators, who require colossal computational power to achieve increasingly subtle improvements.

This article, however, is not about using LLMs to assist in development, nor is it about simply giving the end user "raw" access to an LLM with a cynical shrug.

For example, if you created a text editor with built-in AI, there are no guarantees about what exactly it does. We all understand that such "features" are generally checkbox exercises: functionality that should be there but is not an integral part of the product.

For the reasons outlined above, LLMs are unlikely to have a future as services within products, except in cases where the product itself is an LLM.

But even this is a serious trap for business.

When Eric Yuan, the founder of Zoom, floated the idea of AI clones attending Zoom meetings, he was rightly mocked for expecting this capability to somehow appear "at the lower levels" of the stack. By handing the core innovation over to an LLM provider, he simply transferred control of his roadmap to another company.

How should software developers respond to this?

All of this is great, but how should software developers respond to this situation today? We all understand that a component should perform a consistent task or role, that it can be replaced, and that it should be tested alongside other similar components.

We also understand that if a component is external, it should be built to the same computational standards, and that we could recreate it using those standards. There is no need to try to change the game for short-term attention. The main point is to design a process that provides the necessary functionality for the business, and then to create a platform that allows developers to build solutions sustainably.

We should strive to create AI whose training yields clear, verifiable, repeatable, explainable, and reversible processes.

If we find that an LLM holds a belief that does not correspond to reality, this should be correctable through clearly defined steps. If that is not feasible, then using the LLM in computation makes no sense at this point.

However, theoretically, there is no reason why this cannot change in the future.

The main concern is that the gap between explainable AI and current language models may be as great as that between the scientific method and belief in a holy relic. We know that we can conduct a whole series of invalid experiments, but we also understand that expecting these two areas to be reconciled is unlikely to be reasonable.

Comment from Yulia

The author of the article raised an issue that became a competitive advantage for one of the products of our company, Sherpa Robotics: the creation of the first platform that allows large language models to be fine-tuned on unique and confidential corporate data in a closed environment. Yes, we cannot remove from the model the data embedded during its training, but we can adapt it as closely as possible to the tasks of a specific company.

Indeed, to create a corporate AI assistant, it is not enough to simply use one of the LLMs. Businesses want "neural employees" that can answer based on the company's specific data and documents, as a human specialist who has worked with them for a long time would.

Fine-tuning the model is the primary way to adapt AI to the needs of a specific company. However, there are sometimes difficulties with fine-tuning, especially when there are few examples on which to fine-tune the model to answer a given question.

In this regard, our company's specialists are developing methods for fine-tuning LLMs.

In particular, our expert applies the In-Context fine-tuning approach, which combines classical RAG and fine-tuning.

In the following articles, I plan to elaborate on this approach to fine-tuning.

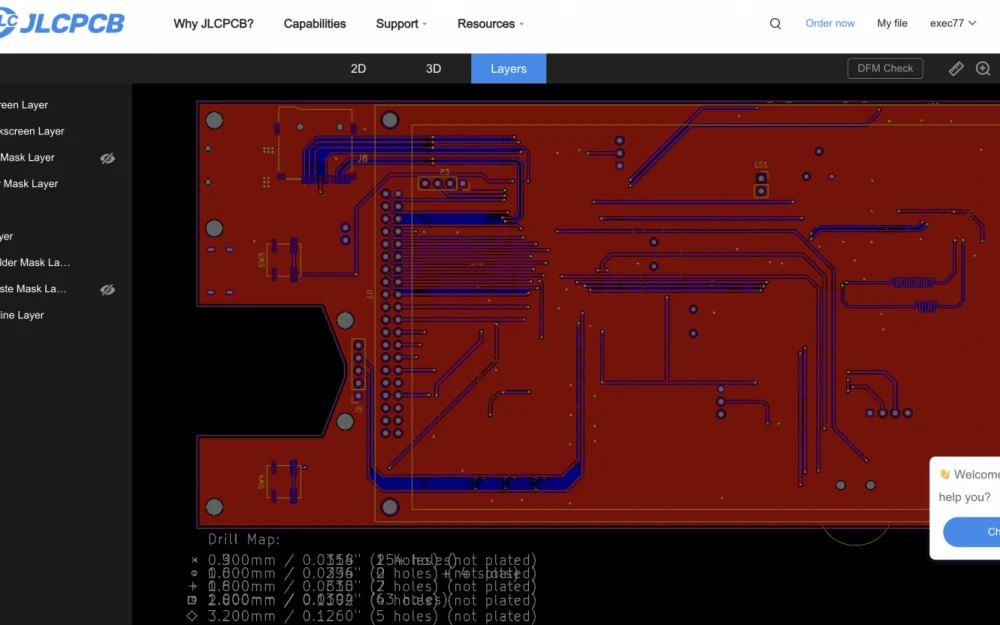

Our developers liked this approach so much that we even added a dedicated window to the assistant creation mode of our boxed product, Sherpa AI Server, which lets you apply it without any code at all, literally with a mouse. We called it "solution samples": the user creating the assistant can provide sample queries that the AI should respond to, along with sample responses.
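To give a feel for what sample queries and sample responses can do, here is a generic sketch of placing the most relevant examples into a prompt. It is not the Sherpa AI Server implementation, which is not described here: the `SolutionSample` type, the word-overlap scoring, and the prompt layout are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SolutionSample:
    """One sample query with the response the assistant should give (hypothetical type)."""
    query: str
    response: str


def overlap(a: str, b: str) -> int:
    """Number of lowercase words shared by two strings (a crude relevance score)."""
    return len(set(a.lower().split()) & set(b.lower().split()))


def build_prompt(samples, user_query: str, k: int = 2) -> str:
    """Pick the k samples most similar to the query and lay them out as examples."""
    ranked = sorted(samples, key=lambda s: overlap(s.query, user_query), reverse=True)
    parts = [f"Question: {s.query}\nAnswer: {s.response}" for s in ranked[:k]]
    # The final, unanswered question is what the model is asked to complete.
    parts.append(f"Question: {user_query}\nAnswer:")
    return "\n\n".join(parts)
```

In a real system the word-overlap score would likely be replaced by a proper retrieval step, and the resulting text would be passed to whichever model the assistant uses.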
