Let's Take It Easy with AI

Well, fine, we have AI. It has existed for about 3 years. And what's the point? How should we properly integrate it into the tech process?

I, like many here, am a programmer. I studied programming from 2003 to 2008, although even before that, I was drawn to technology, programming, and similar things.

Before I explain my reasoning, I want to clarify a few things.

Chapter Zero: Definition of a Couple of Terms

The first and very important thing: stupidity is when you don't know what was supposed to be there in the first place.

When you enter a house and try to hang your keys on a hook, but there's no hook because someone decided to remove it, the keys fall on the floor and you look foolish. It doesn't hurt much, and you'll even laugh once you realize you simply didn't know the hook had been moved. But there are more serious and painful examples. Say you're in an auto repair shop, listening to a lecture about how your car is doomed because you put diesel in a gasoline engine. You didn't know your engine was gasoline. Now that is a very expensive and painful stupidity. Every time you felt foolish, it was because someone had decided something, and you didn't know about it.

The second, no less important thing: something can only be called "working" if it works on its own, without supervision. It doesn't need to be held, pushed, clicked, or glued together to make it work.

Yes, most machines require certain maintenance, such as oil changes or running antivirus software on a server. But these are routine maintenance tasks that are well known. If, before printing a page on a new printer, you have to open a new app, click "Exit" three times, then run an old version of the app, and then reopen the new app—this can't be called a working app. It's broken.

Next point. My diploma says—software engineer. The word "engineer" itself implies that I can invent, create, maintain, and manage various programs and computer hardware. I can set something up, create something new, or remove something unnecessary. It doesn't matter what it is: whether it's a driver for a mouse on Windows 95 or a distributed system on Kubernetes—I can handle it. And if I don't know how to deal with it, I have the necessary tools to find instructions, learn them, and understand them, after which I'll manage the mouse driver or Kubernetes.

Now—the most important point.

A computer is a device that can collect, store, process, and transmit information. Information processing occurs by executing a clear sequence of commands that have been recorded in the computer.

Chapter One: What is Programming

Programming is an exact science. I can not only precisely say what will happen when a certain command is used, but I can also assess the reliability of a given system with a certain probability. Programming also has several methods for building fault-tolerant systems.

We can create systems where any node can be dynamically replaced with another node. There are also real-time systems: computers that are guaranteed to produce their results within a fixed, bounded time. Such systems are used in rockets and airplanes. Telephone exchanges run on them too.
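To show what "assessing reliability with a certain probability" can look like in practice, here is a minimal sketch. It assumes that nodes fail independently and that the system stays up as long as at least one replica is alive. The function name and the 99% figure are made up for illustration and do not describe any real system.

```python
def system_availability(node_availability: float, replicas: int) -> float:
    """Probability that at least one of `replicas` independent nodes is up.

    Assumption: node failures are independent, which real systems only approximate.
    """
    if not 0.0 <= node_availability <= 1.0:
        raise ValueError("node_availability must be between 0 and 1")
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    # The system is down only when every replica is down at the same time.
    return 1.0 - (1.0 - node_availability) ** replicas


if __name__ == "__main__":
    # Hypothetical node that is up 99% of the time.
    for count in (1, 2, 3):
        print(count, system_availability(0.99, count))
```

Under these assumptions, each extra replica multiplies the chance of a total outage by the failure probability of a single node, which is why interchangeable nodes pay off so quickly.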

There are banking systems that handle numbers with great precision, tracking and calculating balances exactly while serving their clients so smoothly that the clients don't notice a thing.
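A small sketch of why money code avoids ordinary floating point. It uses Python's standard `decimal` module; the `add_interest` helper and its round-to-cents policy are assumptions for illustration, not how any particular bank does it.

```python
from decimal import Decimal, ROUND_HALF_EVEN

# Binary floating point cannot represent 0.1 or 0.2 exactly,
# so the sum does not compare equal to 0.3.
float_matches = (0.1 + 0.2) == 0.3

# Decimal values built from strings keep the exact decimal digits.
decimal_matches = (Decimal("0.10") + Decimal("0.20")) == Decimal("0.30")


def add_interest(balance: Decimal, rate: Decimal) -> Decimal:
    """Apply interest and round to whole cents (hypothetical rounding policy)."""
    grown = balance * (Decimal("1") + rate)
    return grown.quantize(Decimal("0.01"), rounding=ROUND_HALF_EVEN)


if __name__ == "__main__":
    print("float comparison holds:", float_matches)
    print("decimal comparison holds:", decimal_matches)
    print(add_interest(Decimal("100.00"), Decimal("0.015")))
```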

Computers can repeat the same action endlessly without change. And that’s why we need computers so much. None of you want to sit in front of Excel and add numbers in a column. In fact, the very meaning of the word "computer" comes from this. In the 1950s, "computers," or "calculators," were the people who added numbers for the space and military services in the US.

Chapter Two: And Here We Go — AI!

2025. We have what is called "artificial intelligence".

It’s an amazing system because it’s a computer that does things in a way that a computer doesn’t normally do. In fact, it’s a big matrix multiplier that adds random numbers to its responses and lets us manipulate data differently.

The thing is, this ability to add uncertainty to a computer's answers completely disrupts the very definition of the word “computer.” A computer is supposed to always give an accurate answer.
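Here is a toy sketch of where that uncertainty comes from. It is not how any specific model is implemented: the tokens, scores, and temperature are invented, and a real model produces its scores with those big matrix multiplications. The sketch only contrasts always picking the top-scoring answer with drawing an answer at random according to the scores.

```python
import math
import random


def softmax(scores, temperature):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_pick(tokens, scores):
    """Deterministic: the same scores always give the same token."""
    best = max(range(len(scores)), key=scores.__getitem__)
    return tokens[best]


def sampled_pick(tokens, scores, temperature, rng):
    """Random: tokens are drawn in proportion to their probabilities."""
    weights = softmax(scores, temperature)
    return rng.choices(tokens, weights=weights, k=1)[0]


if __name__ == "__main__":
    tokens = ["yes", "no", "maybe"]   # hypothetical candidate answers
    scores = [2.0, 1.0, 0.5]          # hypothetical model scores
    rng = random.Random()             # unseeded, so runs differ
    print("greedy:", greedy_pick(tokens, scores))
    print("sampled:", [sampled_pick(tokens, scores, 1.0, rng) for _ in range(5)])
```

The greedy line prints the same thing every time, while the sampled line can change from run to run. That second behavior is exactly what clashes with the classical idea of a computer.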

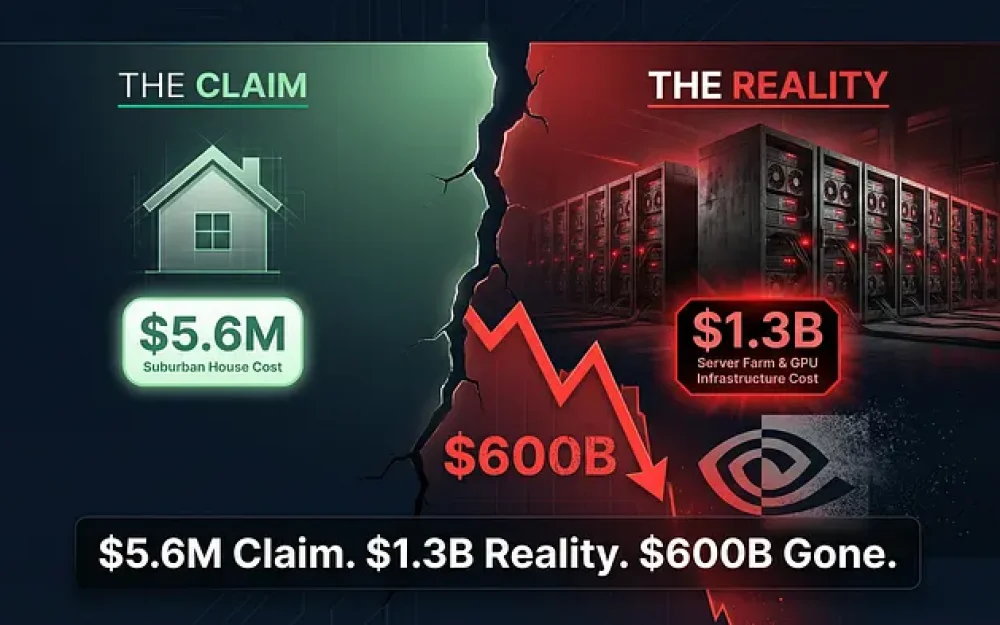

I see huge money being poured into AI. Literally, it’s the black hole of ONN, where dollars are poured in. This hole has been hyped to absurd levels. We have NVIDIA. They made money from video games. Then miners came along — and they made money from miners. Now AI guys have arrived — and they’re non-stop raking in money from AI folks. H200 is a card from NVIDIA that costs $35,000. One. Card. And they’re buying them in batches of 150,000 at a time.

Electricity is a whole other issue. In the US, investors are going around ready to pump money into those who are building power plants. By the way, I’m not joking. I can help. If you have a company that can build power plants anywhere in the world outside of Russia — hit me up on Telegram. $250 billion needs to be spent by the end of the year.

All of this is needed to support AI.

And that’s wonderful! NVIDIA didn’t crash. The market is growing and swelling.

The question is: what’s the point of AI? What is the purpose of AI systems? What’s the payoff?

You see, the payoff is obvious. They help process a large amount of information fairly quickly. And indeed, that’s cool. I don’t have to write code by hand. Bots write it for me.

But the problem is that no AI model can, or ever will be able to, guarantee 100% correct results. That's why AI can be used only in a very limited way in programming computers. For an example, check the next post. The point is that no matter how tightly you tune that machine, sooner or later a bolt will fall out of its groove and it will start to malfunction. So your AI assistant cannot, in fact, be called a working system. It requires maintenance.

When working with AI systems, it is essential to maintain a strict balance. You need to clearly understand how much work you can offload onto AI and how much you need to do yourself. If you don't use AI systems at all, you'll be too slow. If you offload too much, you'll spend hours trying to fix what the AI broke.

There is a balance somewhere in the middle. You can significantly speed up your workflow by using AI. By how much is the remaining question. But it can be sped up.

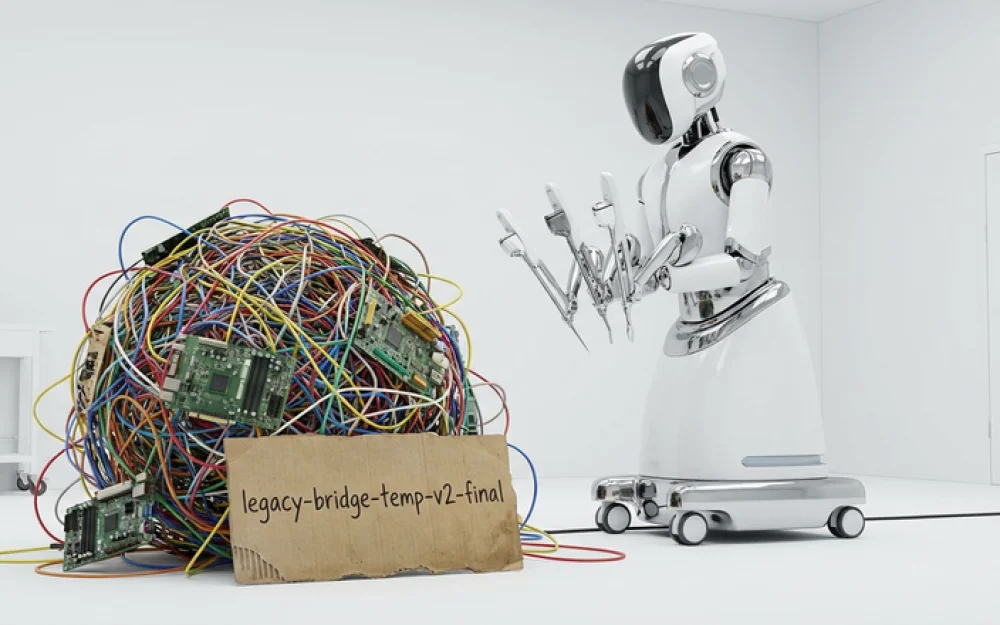

The point is that if you rely on AI too often, you can quickly slide into complete stupidity. Not knowing the difference between rm ./* -rf and rm . /* -rf will be fatal: the stray space in the second command turns one argument into two, so it goes after everything under the root directory instead of the files in the current one. It doesn't just make you dumb; it could cost you your job. Not understanding and not reviewing the commands an AI gives you is a plague of modern development. Everything goes into production, no matter how it is written.
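To see the difference between those two commands without deleting anything, you can ask Python to split them the way a POSIX shell splits words. This is only a sketch: `shlex.split` tokenizes the string and does not expand the `*` glob or run anything, and `rm_targets` is a hypothetical helper written for this example.

```python
import shlex


def rm_targets(command: str) -> list[str]:
    """Return the non-option arguments an rm command line would receive.

    Nothing is executed and globs are NOT expanded; this only shows
    how whitespace splits the command into separate arguments.
    """
    args = shlex.split(command)
    return [arg for arg in args[1:] if not arg.startswith("-")]


if __name__ == "__main__":
    print(rm_targets("rm ./* -rf"))   # one target: everything in the current directory
    print(rm_targets("rm . /* -rf"))  # two targets: "." and everything under "/"
```

One space is the whole difference: `./*` is a single pattern relative to the current directory, while `. /*` is the current directory plus a pattern anchored at the filesystem root.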

I see this from a slightly different angle. I work in banking development. When you come in and propose to implement AI, they look at you like this.

Chapter Three: Who Really Needs AI?

The accounting system operates according to algorithms written in the 1980s. It won’t be changed. It doesn’t need to be changed. Everything depends on whether the system in 2025 produces the same answers it produced in 1990.

Moreover, AI systems are hopelessly bad at any niche programming.

What's most interesting is that AI systems handle a very limited set of tasks very well, in particular forecasting and processing big data. Look at Google's amazing weather forecasting models. Do you see? Forecasting. We're not sure about the result. The answer to the question “Will there be a hurricane in Florida?” is very important. For now, that answer is fifty-fifty. It either will happen or it won't. We don't know.

But welcome to LLMs and big data processing. Now we can give a slightly more precise, but still uncertain, estimate. Great!

Processing text with an LLM is cool. “Rewrite this text and replace all instances of 'ты' with 'вы.'” Great! “Rewrite this text in an old-fashioned style.” Easy! Afterwards I just need to sit down and proofread the text. But that's fine.

You see, LLMs work well on tasks where a precise result is not needed. You could have opened Word and replaced all instances of “ты” with “вы” using the find-and-replace function. The result would have been awful. But thanks to LLMs, we can now change “ты” to “вы” with 99.95% accuracy.
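Here is a sketch of why plain find-and-replace does so badly at this. The sample sentence is made up. The first attempt mimics a naive "replace all", and the second restricts the match to whole words with a regular expression.

```python
import re

# Hypothetical sample: "and do you know where the cats sleep?"
text = "а ты знаешь, где спят коты?"

# Naive replace also hits the "ты" inside "коты" (cats) and corrupts the word.
naive = text.replace("ты", "вы")

# A whole-word match leaves "коты" alone, but the verb "знаешь" still
# agrees with "ты"; correct formal Russian would need "знаете".
whole_word = re.sub(r"\bты\b", "вы", text)

if __name__ == "__main__":
    print(naive)
    print(whole_word)
```

Even the careful version produces an ungrammatical sentence, because switching the pronoun means re-conjugating the verbs around it. That is a task without a simple exact rule, which is where an LLM followed by a human proofread is a reasonable trade.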

Translations? Easy, if you don't need an exact translation. The LLM will get some nuances and idioms wrong. But the result will be much better than my favorite copypasta:

Clean your mouse. Unplug it from the computer, remove the genitalia, and wash it along with the rollers inside with alcohol. Stitch the mouse back up. Check the cable for breaks. Plug the mouse back into the computer. Look at your pad — it should not be a source of dirt and dust in the genitalia and rollers. The surface of the pad should not hinder the mouse's movement. (https://www.lib.ru/ANEKDOTY/mouse_driver.txt)

This kind of translation saves you hours of trying to understand what the text is about. But it doesn't work when you need a good artistic translation for a movie or a high-quality translation of a poem.

Chapter Four: The Human Factor

Our most vulnerable spot will be the human factor. Let's imagine for a second that you have your own business. You need a programmer. You post a job listing on some website and set up an LLM to filter resumes, screening out unsuitable candidates.

If you have three programmers and you're looking for a fourth, you can sift through the resumes yourself. But if you have ten thousand programmers and you get 1,500 resumes a day for each job listing, you won't be able to hire without AI.

The point here is that you need to understand: behind every “no” the AI gives to a resume, the person may well have a reasonable explanation. In other words, you won't be able to hire with AI alone. Be prepared to talk to the candidate in person.

And there you’ll find that AI, without thinking, might let the biggest jerk through, someone you’d never want to work with in your life. Or, on the other hand, a wonderful person who doesn’t know by heart what the square root of the 42nd-degree integral is might fail in an interview with AI.

Conclusion

What they tell you: AI is a goldmine where you can’t lose.

What it actually is: AI is just another technology you need to learn, understand, and know how to apply.

Any idea, no matter how wonderful, will be spoiled if it's used too little or too much. AI everywhere is foolishness. AI only in a ChatGPT window once a month means falling behind.

Don’t fall for every marketing ploy. Instead, ask for sales and profit charts from the companies that are feeding you the line that AI should be everywhere.
