Setting up Linux for training models on a GPU

This article links to scripts I wrote to simplify setting up a Linux PC with a GPU for training machine learning models. The scripts and the setup are divided into three key stages: setting up remote access via SSH/VNC/RDP, installing the NVIDIA drivers and CUDA so the GPU can be used, and configuring development tools such as Docker and Jupyter. The scripts can help with installation issues or serve as a starting point for your own changes when you want to get a home lab ready quickly.

Well, the hardware assembly is complete! My GPU rig is up and waiting for orders. But assembling the PC is, of course, only the beginning of the journey. Now the system has to learn to work with this beast: Linux, drivers, CUDA, and other joys all need to be installed. As we know, this can turn into quite a quest: if everything doesn't work perfectly right away, the "show of unpredictable problems" is guaranteed to begin. I'm not a big fan of setting things up and reconfiguring them, nor much of a Linux expert, but I have to tinker with settings now and then, so I decided to put everything into scripts from the start, both to simplify the process and to be able to roll changes back. The resulting scripts, which "will do everything for you", and their descriptions are available here! If you're lucky, they won't even break your system (just kidding, they definitely will).

Three steps to success

I won't cover installing Linux itself, since it is well documented. I'll only mention that I chose Ubuntu 24.04 Desktop as the base (a desktop environment is sometimes needed) and then configured the system to my needs.

For convenience, I divided the installation into three parts, each solving its own set of tasks, which makes the process more flexible:

Remote access setup — SSH and basic security for connecting to the machine.

Driver and CUDA installation — this is the key to harnessing the power of the GPU, without which your hardware is simply useless.

Development tools — Docker, Jupyter, and other nice things to make writing and testing code comfortable and safe.

For each step, I wrote scripts that install, remove, or otherwise manage the corresponding components. The settings for each step are in its config.env file. More details are in the readme.
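The scripts themselves are shell scripts, and the exact format of config.env is not spelled out here. Assuming it uses the common shell-style `KEY=value` layout (one assignment per line, `#` comments), a small Python helper like the sketch below can read it, for example to double-check values before running a stage. The function name `load_config_env` and the keys in the usage note are hypothetical.

```python
from pathlib import Path


def load_config_env(path):
    """Parse a shell-style KEY=value file into a dict of strings.

    Assumption: one assignment per line, '#' comments, an optional
    'export ' prefix and optional matching single/double quotes.
    Variable expansion ($VAR) and multi-line values are NOT handled.
    """
    config = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("export "):
            line = line[len("export "):]
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not an assignment; ignore it
        value = value.strip()
        # Strip one pair of matching surrounding quotes, as a shell would.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        config[key.strip()] = value
    return config
```

For instance, a file containing `SSH_PORT=22` and `export ENABLE_VNC="yes"` (hypothetical keys) yields `{"SSH_PORT": "22", "ENABLE_VNC": "yes"}`. Values stay strings, and `$VAR` expansion is deliberately not attempted, since only a real shell does that reliably.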

First step: remote access

I use the PC as a home server but occasionally need its desktop environment; otherwise I could have installed the server edition. Most of the time the PC sits in the dark without a monitor, and everything running on it has to be reachable remotely. So the first step sets up remote access, using:

SSH — for secure connection to the server.

UFW (Uncomplicated Firewall) — for network protection.

RDP — for remote desktop.

VNC — also for graphical access.

Samba — for file sharing on the network.

Detailed readme for the first stage.
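Once the first stage is done, it is worth checking from another machine on the network that the services actually accept connections. The sketch below assumes the standard default ports (SSH 22, RDP 3389, VNC 5900 for display :0, Samba/SMB 445); the scripts' config.env may set different ones, and the helper names are hypothetical. A port reported as closed can mean either that the service is not running or that UFW is blocking it.

```python
import socket

# Standard default ports for the first-stage services (an assumption:
# adjust if config.env uses different ones).
DEFAULT_PORTS = {
    "ssh": 22,
    "rdp": 3389,
    "vnc": 5900,   # display :0; display :N listens on 5900 + N
    "samba": 445,  # SMB over TCP
}


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable or unresolvable host
        return False


def check_services(host, ports=DEFAULT_PORTS, timeout=2.0):
    """Map each service name to whether its port accepts TCP connections."""
    return {name: is_port_open(host, port, timeout) for name, port in ports.items()}
```

Calling `check_services("192.168.1.50")` (a placeholder address; use your server's IP or hostname) returns a dict with one `True`/`False` entry per service. A plain TCP connect is used rather than a protocol handshake, so it only tells you that something is listening and the firewall lets it through.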

Second step: NVIDIA drivers and CUDA

Now for the part this whole thing was started for. I needed a GPU, and a GPU is useless without the NVIDIA drivers.

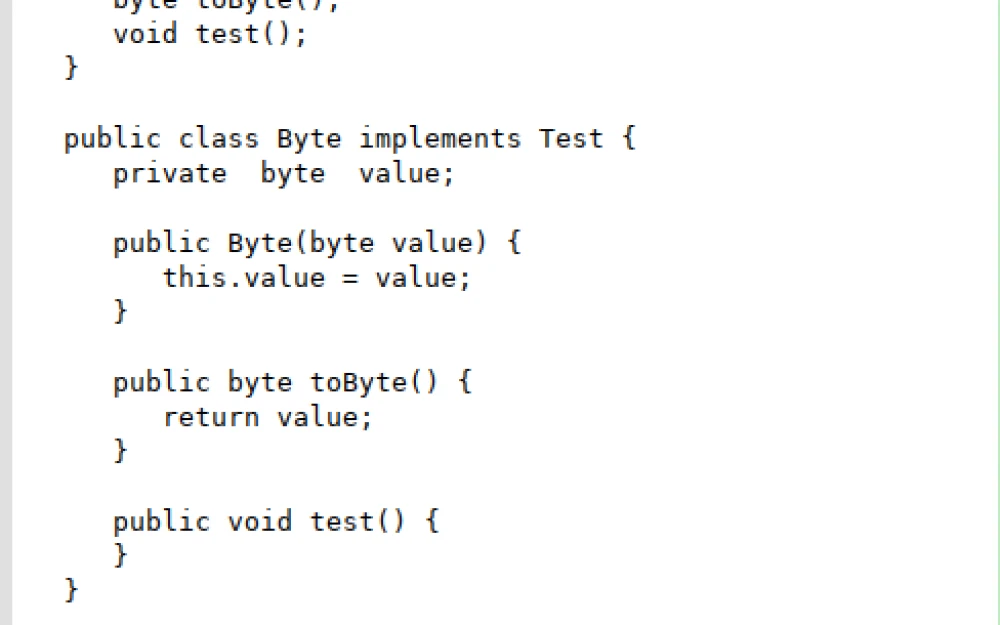

So, here is what we install:

NVIDIA drivers — so that the video card finally understands what is required of it.

CUDA — the magic of parallel computing, indispensable for training neural networks.

cuDNN — NVIDIA's library of GPU-accelerated primitives for deep learning.

Python — for development. In my case, Ubuntu already shipped Python 3.12, but I also had to install a second, older version: 3.11.
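Before checking versions, it can help to confirm that the installed executables are actually on PATH: a toolkit that installed fine but is not on PATH produces confusing "command not found" errors. (With NVIDIA's own installer, `nvcc` usually lives under `/usr/local/cuda/bin`, which then has to be added to PATH.) This sketch only looks names up with `shutil.which`; the tuple of expected tools mirrors the list above, with `python3.11` standing for the second interpreter, and is an assumption to adjust to your setup.

```python
import shutil

# Executables expected after the second stage (assumed names; adjust as needed).
EXPECTED_TOOLS = ("nvidia-smi", "nvcc", "python3.11")


def find_tools(names=EXPECTED_TOOLS):
    """Map each executable name to its absolute path on PATH, or None."""
    return {name: shutil.which(name) for name in names}


def missing_tools(names=EXPECTED_TOOLS):
    """Return the names that could not be found on PATH."""
    return [name for name, path in find_tools(names).items() if path is None]
```

An empty list from `missing_tools()` means every expected command resolves; anything listed is either not installed or installed outside PATH.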

Adjust the config and run the scripts. If you're lucky, you won't get a sudden reboot into a black screen (which, by the way, looks quite minimalist and stylish). And if you do, maybe you're simply Malevich?

Let's continue with those whose installation succeeded. First, check the NVIDIA toolkit installation:

```console
$ nvcc --version
nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2023 NVIDIA Corporation
Built on Fri_Jan__6_16:45:21_PST_2023
Cuda compilation tools, release 12.0, V12.0.140
Build cuda_12.0.r12.0/compiler.32267302_0
```

If the output of the following command shows your GPU, your karma is clean and everything lies ahead; if not, it's time to reconsider your life priorities. I was lucky.

```console
$ nvidia-smi
Fri Sep 27 17:01:20 2024
+---------------------------------------------------------------------------------------+
| NVIDIA-SMI 535.183.01             Driver Version: 535.183.01   CUDA Version: 12.2     |
|-----------------------------------------+----------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |         Memory-Usage | GPU-Util  Compute M. |
|                                         |                      |               MIG M. |
|=========================================+======================+======================|
|   0  NVIDIA GeForce RTX 3090 Ti     Off | 00000000:01:00.0 Off |                  Off |
|  0%   41C    P8              15W / 450W |   4552MiB / 24564MiB |      0%      Default |
|                                         |                      |                  N/A |
+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+
| Processes:                                                                            |
|  GPU   GI   CI        PID   Type   Process name                            GPU Memory |
|        ID   ID                                                             Usage      |
|=======================================================================================|
|    0   N/A  N/A      2441      C   python                                     4546MiB |
+---------------------------------------------------------------------------------------+
```

And the cherry on top: let's check whether your GPU is really ready to work for the good of science. Use the following code (don't forget to install PyTorch first):

```python
# test_gpu.py: check that PyTorch can see CUDA and the GPU
import torch

print("CUDA available:", torch.cuda.is_available())
print("Number of available GPUs:", torch.cuda.device_count())
```

The result should be:

```console
$ python test_gpu.py
CUDA available: True
Number of available GPUs: 1
```

If the output confirms that CUDA is available, the setup succeeded and everything is ready for diving into the world of deep learning at GPU speed. Or, at the very least, for starting to figure out what else went wrong.
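The check above only answers yes or no. When it says `False`, a slightly more detailed report helps locate the missing layer: PyTorch itself, a CPU-only PyTorch build, or the GPU not being visible. The sketch below uses only standard `torch` calls (`torch.version.cuda`, `torch.backends.cudnn.version()`, `torch.cuda.get_device_name()`); the function name is hypothetical. Keep in mind that PyTorch wheels from pip typically bundle their own CUDA runtime and cuDNN, so the versions reported here may differ from the system-wide CUDA and cuDNN installed in the second stage.

```python
import importlib.util


def torch_gpu_report():
    """Collect what PyTorch sees about CUDA, cuDNN and the GPUs.

    Returns a dict. If PyTorch is not installed, only
    {"torch_installed": False} is returned instead of raising ImportError.
    """
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False}
    import torch

    cudnn_ok = torch.backends.cudnn.is_available()
    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # CUDA version this PyTorch build targets; None means a CPU-only build.
        "built_with_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
        "cudnn_version": torch.backends.cudnn.version() if cudnn_ok else None,
        "gpus": [],
    }
    if report["cuda_available"]:
        report["gpus"] = [
            torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())
        ]
        # Smoke test: a small matrix multiply that actually runs on GPU 0.
        a = torch.randn(512, 512, device="cuda")
        report["matmul_ok"] = bool(torch.isfinite(a @ a).all().item())
    return report


if __name__ == "__main__":
    for key, value in torch_gpu_report().items():
        print(f"{key}: {value}")
```

On a CPU-only PyTorch build, `built_with_cuda` is `None`, which is a common reason for `cuda_available: False` even when `nvidia-smi` works fine.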

Detailed readme for the second stage.
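For scripted checks, for example at the end of a second-stage install, the `nvcc` and `nvidia-smi` outputs shown earlier can be parsed instead of read by eye. Note that the "CUDA Version: 12.2" in the `nvidia-smi` header is the newest CUDA version the 535 driver supports, while `nvcc` reports the toolkit actually installed (12.0); the two differing is expected as long as the toolkit is not newer than what the driver supports. The sketch relies on the real `nvidia-smi` options `--query-gpu` and `--format=csv,noheader,nounits`; the helper names are hypothetical.

```python
import re
import subprocess


def parse_nvcc_release(text):
    """Extract the toolkit release (e.g. '12.0') from `nvcc --version` output."""
    match = re.search(r"release (\d+\.\d+)", text)
    return match.group(1) if match else None


def parse_gpu_csv(text):
    """Parse `nvidia-smi --query-gpu=name,memory.used,memory.total
    --format=csv,noheader,nounits` output into dicts (memory in MiB)."""
    gpus = []
    for line in text.strip().splitlines():
        name, used, total = (field.strip() for field in line.split(","))
        gpus.append({
            "name": name,
            "memory_used_mib": int(used),
            "memory_total_mib": int(total),
        })
    return gpus


def query_gpus():
    """Run nvidia-smi and return the parsed per-GPU list (raises if it fails)."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.used,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_csv(out)
```

Applied to the outputs above, `parse_nvcc_release` gives `"12.0"`, and the CSV line for the RTX 3090 Ti parses to 4552 MiB used out of 24564 MiB.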

Third step: development tools

After the first two stages, remote access is configured, the drivers are installed, and CUDA works. What's next? Next we need a working environment for training models, running them for testing, and generally loading all the CPU/GPU cores and memory the hardware offers to the fullest. Scripts help here too; in my case they install the minimum set of components I need:

Git: Version control system.

Docker: Containerization platform.

Jupyter — isn't it every developer's dream to see their mistakes right in the browser?

Ray — a platform for those who decided that one GPU is boring and it's time to scale up.

Detailed readme for the third stage.
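Of the tools above, Docker is where GPU access is easiest to get wrong, because containers do not see the GPU by default. A common check is to run `nvidia-smi` inside a CUDA base image with `docker run --gpus all`. This assumes the NVIDIA Container Toolkit is installed on the host; it is not in the list above, so treat that as an assumption about the setup. The helper names below are hypothetical.

```python
import shutil
import subprocess


def docker_gpu_command(image, command=("nvidia-smi",)):
    """Build a `docker run` argument list that exposes all GPUs to the container.

    `--gpus all` requires the NVIDIA Container Toolkit on the host.
    """
    return ["docker", "run", "--rm", "--gpus", "all", image, *command]


def run_docker_gpu_check(image):
    """Run nvidia-smi inside `image` and return its stdout.

    Returns None if docker is not on PATH; raises CalledProcessError if
    the container fails (e.g. no GPU access or the image is missing).
    """
    if shutil.which("docker") is None:
        return None
    result = subprocess.run(
        docker_gpu_command(image), capture_output=True, text=True, check=True
    )
    return result.stdout
```

Pass any `nvidia/cuda` base image tag from Docker Hub whose CUDA version the driver supports (12.2 or older for the 535 driver shown earlier). If the container prints the same GPU table as the host, containers can use the GPU.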

Conclusion

Surely this could be done better, more elegantly, and so on, but I hope my scripts save someone time preparing a PC for training models, and provoke a healthy or an unhealthy reaction in others. I'll be glad for the first, grateful for the second, and sorry for the third. Next time I plan to talk about installing LLMs.
