
Human-AI Interfaces: The Key to Future Interaction

Analysis of the evolution and development prospects of interfaces for harmonious cooperation between humans and artificial intelligence.

This article examines the evolution and future of human-AI interfaces, the key tools that are transforming how we interact with technology. Adapting these interfaces to each individual's subjective experience creates new conditions for humans and artificial intelligence to grow together, as well as for the economic changes associated with these transformations.

Introduction

Artificial intelligence (AI) has come a long way from simple systems based on finite automata rules to complex models such as transformers and deep neural networks that can learn from large amounts of data and perform various tasks with high accuracy. However, despite all the achievements in algorithms and computing power, the key element of AI interaction with the outside world remains the interface. As Stuart Russell and Peter Norvig note, “an agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators” [1, p. 34]. Sensors and actuators are the foundations on which all AI interfaces are built, from simple systems to modern self-learning programs.

[1] Russell S., Norvig P. Artificial Intelligence: A Modern Approach. Third Edition. Pearson Education Limited, 2016. 1296 p.

History and Relevance

Early Approaches

In the early development of AI, interfaces were simple and straightforward. In the 1950s, the first programs interacted with the user through text commands. Some early programs were even ahead of the available hardware: Alan Turing's chess algorithm Turochamp could not run on any computer of the time, so Turing executed it by hand. These interfaces were closely integrated with AI algorithms, providing direct access to internal processes but limiting flexibility and portability.

1960s: Early Experiments

- ELIZA (1964-1966): a virtual interlocutor written by Joseph Weizenbaum at MIT, often cited as the first conversational interface; its best-known script simulated a psychotherapist. Despite its simplicity, ELIZA showed that computers could process natural language, albeit at a very basic level, and laid the foundation for natural language processing (NLP) interfaces.

- STUDENT (1964): a system for solving algebra word problems entered through a text interface, written in Lisp by Daniel G. Bobrow as part of his dissertation. It demonstrated that a program could understand a restricted form of natural language.
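ELIZA's effect came from keyword matching and response templates rather than from any real understanding. The sketch below illustrates that technique. The rules and pronoun reflections are a tiny hypothetical subset written for illustration only, not Weizenbaum's original script.

```python
import re

# A tiny, hypothetical rule set in the spirit of ELIZA: each rule pairs a
# regular expression with a response template (not Weizenbaum's actual script).
RULES = [
    (re.compile(r"\bi need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bbecause (.*)", re.IGNORECASE), "Is that the real reason?"),
]
DEFAULT_REPLY = "Please go on."

# Pronoun swaps so an echoed fragment reads naturally ("my exams" -> "your exams").
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    """Swap first- and second-person words in a captured fragment."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(user_input: str) -> str:
    """Return the first matching template, filled with the reflected fragment."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # str.format ignores the argument when a template has no {0} slot.
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


print(respond("I am worried about my exams."))  # How long have you been worried about your exams?
```

No model of meaning is involved: the program only rearranges the user's own words. This is why ELIZA's "understanding" was so shallow.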

1970s: The Era of Expert Systems

- SHRDLU (1970): a revolutionary interface for manipulating virtual objects in a simulated "blocks world" through natural language, developed by Terry Winograd at MIT.

- MYCIN (1972): a medical expert system with an interactive question-answer interface, developed at Stanford University as Edward Shortliffe's doctoral dissertation under the supervision of Bruce Buchanan, Stanley Cohen, and others.

- Xerox PARC (1973): early experiments with graphical user interfaces on the Xerox Alto, an approach later carried over to AI workstations. PARC is a research center founded in 1970 at the insistence of Xerox's chief scientist Jack Goldman.

Modularity and Standardization

With the development of technology and the increasing complexity of AI systems, it became apparent that tight integration of the interface with the AI core created many problems. In the 1980s, modular approaches began to emerge, where the interface was separated from the AI core. This allowed for the creation of more flexible systems that could interact with various AI models. However, this also required the development of standardized interaction protocols, which was not an easy task.

1980s: Graphical Interfaces

- Symbolics (1980): a company founded by alumni of the MIT AI Lab that produced some of the first commercial workstations with graphical interfaces for AI development.

- KEE (1983): the Knowledge Engineering Environment, a hybrid system with a graphical interface for developing expert systems, developed and sold by IntelliCorp.

1990s: Multimodal Interfaces

- IBM Simon (1994): often described as the first smartphone, with a touchscreen and AI elements in its interface. Its behavior was entirely determined by pre-programmed algorithms that simulated human functions. It was an internal IBM Research project.

- Dragon NaturallySpeaking (1997): one of the first commercially successful continuous speech recognition systems, developed by Dragon Systems (USA); the product later passed to Nuance Communications. It combined acoustic and language models with adaptation to the user's voice and contextual analysis.

Intelligent Interfaces

In the 2000s and 2010s, as AI applications matured and assistants such as Apple's Siri (2011) appeared, it became clear that interfaces should not only transmit data but also adapt to the user. This led to interfaces that were themselves AI systems. Such interfaces could learn from their interactions with the user, which significantly improved the user experience and made interfaces not just tools but full-fledged partners in communication and problem-solving.
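Adaptation can be illustrated with its simplest form. In this sketch, the interface counts which option the user picks in each context and ranks future suggestions by that history. Real assistants use far richer models; the `AdaptiveMenu` class and the context labels are hypothetical.

```python
from collections import Counter, defaultdict


class AdaptiveMenu:
    """Hypothetical interface component that reorders options based on past choices."""

    def __init__(self, options: list[str]) -> None:
        self.options = list(options)
        # context label (e.g. "morning") -> Counter of options chosen in that context
        self.history: dict[str, Counter] = defaultdict(Counter)

    def record_choice(self, context: str, option: str) -> None:
        """Learn from one interaction by incrementing the option's count for the context."""
        if option not in self.options:
            raise ValueError(f"unknown option: {option}")
        self.history[context][option] += 1

    def suggestions(self, context: str) -> list[str]:
        """Return options sorted by how often the user chose them in this context.

        Ties keep the original menu order because sorted() is stable.
        """
        counts = self.history[context]
        return sorted(self.options, key=lambda option: -counts[option])


menu = AdaptiveMenu(["news", "weather", "music"])
menu.record_choice("morning", "weather")
menu.record_choice("morning", "weather")
menu.record_choice("morning", "news")
print(menu.suggestions("morning"))  # ['weather', 'news', 'music']
print(menu.suggestions("evening"))  # ['news', 'weather', 'music'] (no history yet)
```

Keying the counts by context is what lets the same interface behave differently at different times or places.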

2000s: Web Interfaces

- Google Search (2001): the introduction of AI into the search interface, by a team led by Larry Page and Sergey Brin.

- IBM Watson (2006): a new approach to natural language processing in interfaces, developed by David Ferrucci and his team of specialists.

2010s: Mobile Assistants

- Siri (2011): a revolution in voice interfaces. Siri was developed by Siri Inc., founded by Dag Kittlaus, Adam Cheyer, and Tom Gruber; Apple acquired the company in 2010 and integrated Siri into iOS in 2011.

- Google Now (2012): context-dependent interfaces, developed by an internal Google team.

- Alexa (2014): integration of AI interfaces into the smart home. Alexa's voice builds on speech synthesis technology that Amazon acquired with the Polish company Ivona in 2013, and the assistant itself was developed by a team of specialists.

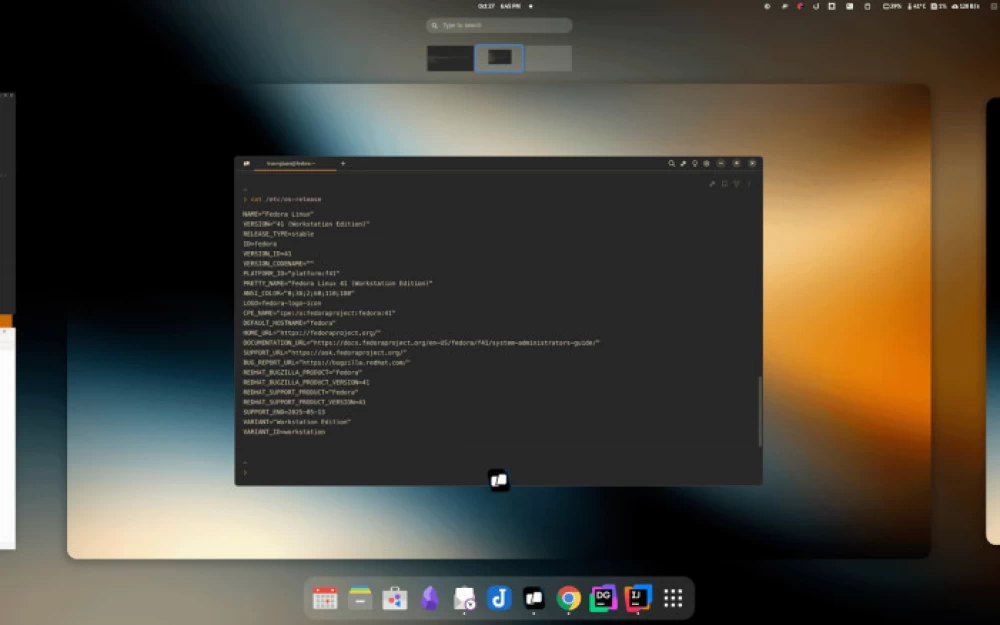

Modern LLM Transformers

Interface Features

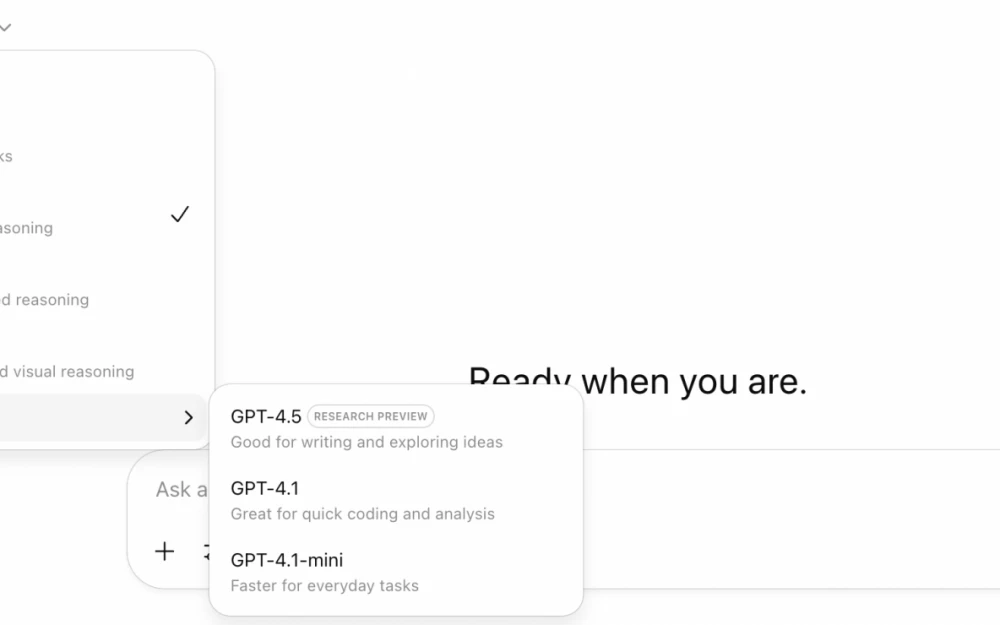

Modern models, such as GPT-3 and ChatGPT, use a hybrid approach to interfaces. They combine:

1. Basic web interface: Software providing user access.

2. AI elements: Context processing, creation, and formatting of responses based on user interaction.

3. Integration: Close connection with the language model, ensuring high processing speed and performance.
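The "context processing" element can be illustrated with a common pattern. The interface keeps the conversation history and drops the oldest turns once the history exceeds the model's context budget. This sketch rests on simplifying assumptions: tokens are approximated by whitespace-separated words (real systems use the model's own tokenizer), and the `Message` format and budget value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # "system", "user" or "assistant"
    text: str


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def fit_context(history: list[Message], budget: int) -> list[Message]:
    """Keep system messages plus the most recent turns that fit within `budget` tokens."""
    system = [m for m in history if m.role == "system"]
    used = sum(count_tokens(m.text) for m in system)
    kept: list[Message] = []
    # Walk backwards from the newest turn so recent context is preserved first.
    for message in reversed([m for m in history if m.role != "system"]):
        cost = count_tokens(message.text)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return system + list(reversed(kept))


history = [
    Message("system", "be brief"),
    Message("user", "one two three"),
    Message("assistant", "four five"),
    Message("user", "six seven eight nine"),
]
print([m.text for m in fit_context(history, budget=8)])
# ['be brief', 'four five', 'six seven eight nine']
```

Dropping whole turns from the oldest end is only one policy. Summarizing old turns or retrieving them on demand are common alternatives.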

2020s: The era of large language models

- GPT-3 (2020): the new standard for text interfaces.

- DALL-E (2021): next-generation multimodal interfaces.

- ChatGPT (2022): a revolution in conversational interfaces.

Technical aspects of LLM interfaces

Attention mechanisms in interfaces

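- Self-attention: each token of the user's input is related to the other tokens in the context (in decoder-only models such as GPT, only to the preceding tokens), which lets the model process the context of the user input.

- Cross-attention: queries from one sequence attend to a different sequence, such as an encoder's output or retrieved documents; encoder-decoder and some retrieval-augmented models use it to link user input with external knowledge.

- Multi-head attention: several attention heads run in parallel, each with its own learned projections, so that different heads can capture different aspects of the interaction.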
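All three mechanisms are built on scaled dot-product attention, softmax(Q·Kᵀ / √d)·V. The pure-Python sketch below is deliberately simplified. It omits the learned query, key, and value projections and the multiple heads of a real transformer. It only shows what each variant attends to: self-attention takes queries, keys, and values from the same sequence; causal masking lets each position see only earlier positions, as in decoder-only models; cross-attention takes queries from one sequence and keys and values from another. The example vectors are arbitrary.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax; scores of -inf (masked positions) get weight 0."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values, causal=False):
    """Scaled dot-product attention over lists of vectors (lists of floats).

    queries: n x d, keys: m x d, values: m x d_v. Returns n x d_v.
    With causal=True, query i may only attend to keys 0..i (self-attention, n == m).
    """
    d = len(keys[0])
    width = len(values[0])
    output = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(-math.inf)  # mask future positions
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))
        weights = softmax(scores)
        # Each output vector is the attention-weighted sum of the value vectors.
        output.append([sum(w * v[c] for w, v in zip(weights, values)) for c in range(width)])
    return output


# Self-attention: the sequence attends to itself (causal, as in GPT-style models).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
self_out = attention(tokens, tokens, tokens, causal=True)

# Cross-attention: queries from `tokens`, keys and values from another sequence.
memory = [[0.5, 0.5], [2.0, 0.0]]
cross_out = attention(tokens, memory, memory)
print(self_out[0])  # [1.0, 0.0]: the first token can only attend to itself
```

Multi-head attention would run several copies of `attention` on different learned projections of the same inputs and concatenate the results.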

Comparative table of approaches to building LLM interfaces

| Approach | Advantages | Disadvantages | Application |
|---|---|---|---|
| Pure API | Maximum flexibility, low latency | Development complexity | Enterprise solutions |
| Web interface | Accessibility, ease of use | Limited customization | Public services |
| Mobile SDK | Native integration, offline work | High device requirements | Mobile applications |
| Embedded | Autonomy, privacy | Limited functionality | IoT devices |

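Efficiency Metrics

The figures below are indicative ranges; actual values depend heavily on model size, hardware, network conditions, and workload.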

Response Speed

- API interface: 50-100 ms

- Web interface: 100-200 ms

- Mobile interface: 150-300 ms
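Latency figures like these depend on exactly what is timed. The sketch below shows one way to measure response speed: call a handler repeatedly and report the median and 95th-percentile latency. `fake_handler` is a hypothetical stand-in for a real API call or page request.

```python
import statistics
import time
from typing import Callable


def measure_latency(handler: Callable[[str], str], prompt: str, runs: int = 20) -> dict[str, float]:
    """Time `runs` calls of `handler` and return median and p95 latency in milliseconds."""
    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        handler(prompt)
        samples_ms.append((time.perf_counter() - start) * 1000)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    # method="inclusive" keeps the estimate within the observed range.
    p95 = statistics.quantiles(samples_ms, n=20, method="inclusive")[-1]
    return {"median_ms": statistics.median(samples_ms), "p95_ms": p95}


def fake_handler(prompt: str) -> str:
    """Hypothetical handler that simulates about 5 ms of processing."""
    time.sleep(0.005)
    return prompt.upper()


stats = measure_latency(fake_handler, "hello", runs=10)
print(stats)
```

Reporting a high percentile alongside the median matters for interfaces, because users notice the occasional slow response more than the average one.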

Context Understanding Accuracy

- Basic transformer: 85-90%

- With context window: 90-95%

- With long-term memory: 95-98%

Resource Consumption

- RAM: 0.5-2 GB per session

- CPU: 2-4 cores per session

- GPU: 4-8 GB VRAM for inference
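A rough way to sanity-check GPU memory figures is to multiply the number of model parameters by the bytes stored per parameter. This sketch estimates the weights only. The KV cache, activations, and framework overhead come on top, and the 7-billion-parameter model is a hypothetical example.

```python
# Bytes per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in ("fp16", "int8", "int4"):
    print(dtype, weights_memory_gb(7e9, dtype))
# fp16 14.0
# int8 7.0
# int4 3.5
```

Under these assumptions, a 7-billion-parameter model needs about 14 GB for fp16 weights but only about 3.5 GB when quantized to 4 bits. This is why quantized models of that size can fit within a few gigabytes of VRAM.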

Examples of ready-made interfaces

- ChatGPT: a web interface with adaptive AI elements that provides users with contextual and relevant responses to their queries.

- Google Assistant: an intelligent voice interface that adapts to user behavior and can offer personalized solutions.

- DeepSeek Chat: an interface optimized for contextual communication with a language model, supporting interaction across various platforms.

Prospects and Economic Benefits

Development Prospects

The future of AI interfaces is seen in the further integration of AI components that not only transmit data but also analyze context, predict user intentions, and adapt to their preferences. As a result, it will be possible to create interfaces capable of deep interaction with the user, providing unprecedented levels of personalization and efficiency.

Main Approaches to Interface Creation

Interface as Part of AI

Pros:

- Close Integration: The interface is directly connected to AI, allowing for high personalization.

- Direct Access to Algorithms: The interface can be configured for maximum performance and specific use cases.

Cons:

- Tight coupling: Any changes in the interface can affect the AI core, complicating development.

- Low flexibility: The interface is difficult to adapt to different platforms or changing requirements.

Interface as separate software

Pros:

- Modularity: Ease of development and updates due to independence from the main AI.

- Portability: Ability to integrate with different AI models and systems.

Cons:

- Additional standardization costs: Protocols are needed for interaction between different components.

- Delays: An additional software layer can introduce slight delays in data processing.
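The sketch below illustrates the modular approach using a hypothetical `ChatBackend` protocol. The interface depends only on this small contract, so any model that implements it can be swapped in without touching interface code. The two backends are toy placeholders, not real model clients.

```python
from typing import Protocol


class ChatBackend(Protocol):
    """Hypothetical contract between the interface and any AI core."""

    def complete(self, prompt: str) -> str: ...


class EchoBackend:
    """Toy backend: repeats the prompt."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ShoutBackend:
    """Toy backend: upper-cases the prompt."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


class ChatInterface:
    """Interface layer: cleans input and formats output, delegating the answer to the backend."""

    def __init__(self, backend: ChatBackend) -> None:
        self.backend = backend

    def ask(self, user_text: str) -> str:
        prompt = " ".join(user_text.split())  # normalize whitespace before sending
        return f"[AI] {self.backend.complete(prompt)}"


# The same interface works with either backend.
print(ChatInterface(EchoBackend()).ask("  hello   world "))  # [AI] echo: hello world
print(ChatInterface(ShoutBackend()).ask("hello"))            # [AI] HELLO
```

The call through `ChatInterface` is the additional software layer that the Cons list refers to. The agreed-upon `complete` signature is the kind of protocol whose standardization carries a cost.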

Interface as a separate AI

Pros:

- Adaptability: Ability to learn and evolve with the user.

- Independence: The interface can develop in parallel with the AI core.

Cons:

- Complexity: Managing two AI systems requires more resources.

- Conflicts: Interaction between two AIs can lead to data or algorithm conflicts.

Hybrid approach

Pros:

- Flexibility: The optimal balance between integration and modularity can be achieved.

- Scalability: The interface easily adapts to new requirements and evolving tasks.

Cons:

- Development complexity: A more complex architecture requires additional effort and resources.

Example of modern implementation: ChatGPT

ChatGPT is an example of a successful hybrid approach to AI interfaces. It combines a basic web interface with deep integration and AI elements, which allows it to interact effectively with users and process complex queries.

Economic Benefits

The development of intelligent interfaces opens up significant opportunities for business. Investments in such interfaces make it possible to:

- Improve user experience: more convenient interfaces increase customer loyalty and stimulate demand.

- Create new markets: applying AI in industries such as medicine, education, and transportation will open up new commercial opportunities.

- Standardize interaction: unified interaction protocols will simplify the integration of AI systems with other technologies and devices.

Intelligent interfaces have a significant economic impact on companies. Implementing these systems can reduce the time spent on employee training by 40-60%, increase overall productivity by 20-35%, and reduce the number of errors by 50-70%. The payback period for investments in these technologies is usually between 6 and 18 months.

Some successful examples of implementing such solutions can be found in various industries. In the banking sector, JP Morgan reduced request processing time by 45%, and Bank of America achieved a 35% increase in customer satisfaction. In healthcare, Mayo Clinic reduced diagnosis time by 30%, while Cleveland Clinic increased prescription accuracy by 25%. In e-commerce, Amazon increased conversion by 15%, and Alibaba reduced product search time by 40%.

Forecasts for the intelligent interface market over the next 10 years indicate rapid growth. The market is estimated at $15 billion in 2024 and is expected to grow to $45 billion by 2029 and to $120 billion by 2034. The main development directions include interface personalization, multimodal technologies, edge computing (a distributed computing model in which data is processed as close as possible to where it is created, for example on user devices or local servers, rather than in remote cloud data centers), and the introduction of quantum computing into interfaces.
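The forecast implies a compound annual growth rate (CAGR), computed as (end / start)^(1 / years) - 1. A short calculation using the market figures above:

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values that are `years` apart."""
    return (end / start) ** (1 / years) - 1


# Market size forecasts from the text, in billions of US dollars.
print(f"2024-2029: {cagr(15, 45, 5):.1%}")   # 2024-2029: 24.6%
print(f"2029-2034: {cagr(45, 120, 5):.1%}")  # 2029-2034: 21.7%
```

Tripling in five years therefore corresponds to about 24.6% growth per year. The second five-year period implies a slightly slower rate of about 21.7% per year.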

Who is working on AI interfaces?

The development of artificial intelligence (AI) interfaces requires an interdisciplinary approach and involves several key professional roles. UX/UI designers are responsible for creating visually appealing and user-friendly interfaces. AI engineers develop algorithms and integrate them into interfaces, ensuring the functionality of AI systems. Data engineers process and analyze the information necessary for the operation of interfaces, making their contribution important for the accurate and efficient functioning of AI.

Engineering psychologists, in turn, study how users interact with interfaces and adapt the interfaces to users' needs and preferences to improve the user experience. These specialists analyze user behavior, optimize interfaces for usability, and develop adaptive solutions that change depending on user behavior.

Key leaders in AI interface development include companies such as OpenAI, where Sam Altman (CEO) and Greg Brockman (President) hold leadership positions. Ilya Sutskever, a co-founder and former chief scientist of OpenAI, left the company in 2024. His position is now held by Jakub Pachocki, who leads key research at OpenAI, including the development of GPT-4 and other AI products.

As for Google, Geoffrey Hinton previously played an important role in AI research, but his main area of activity is related to deep learning, not interfaces for Google Assistant. Hinton left Google in 2023 and is now focused on other projects, continuing his AI research. The main development of interfaces at Google is now under the responsibility of the Google DeepMind team, which is actively working on improving AI products.

The leading countries in the development of AI and interfaces include the USA, with leading companies such as OpenAI, Google, and Microsoft. Europe is actively strengthening its position through innovative startups and research institutes. In Switzerland, more than 14 major institutes are working on AI projects in collaboration with the USA and China. China continues to invest heavily in AI, with companies like Baidu and Tencent playing a key role in the development of AI interfaces.

Interface Development Features

Interfaces for AI interaction with operators and interfaces for AI interaction with users have different requirements.

AI-operator interfaces must be standardized and transfer data efficiently. They must also be scalable to handle large volumes of information and high load.

Human-AI interfaces require more attention to usability. They must be adaptive, adjusting to user preferences, and visually clear, so that they are easy to understand and interact with.
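One way to standardize an AI-operator interface is a versioned message schema that every component validates before processing. The sketch below uses only Python's standard library. The field names, the version string, and the helper functions are hypothetical, not an existing protocol.

```python
import json

SCHEMA_VERSION = "1.0"  # hypothetical protocol version
REQUIRED_FIELDS = {"version": str, "request_id": str, "task": str, "payload": dict}


def encode_request(request_id: str, task: str, payload: dict) -> bytes:
    """Serialize a request as compact JSON (no optional whitespace) to keep messages small."""
    message = {"version": SCHEMA_VERSION, "request_id": request_id, "task": task, "payload": payload}
    return json.dumps(message, separators=(",", ":")).encode("utf-8")


def decode_request(raw: bytes) -> dict:
    """Parse and validate a request, raising ValueError if it violates the schema.

    Malformed JSON raises json.JSONDecodeError, which is a subclass of ValueError.
    """
    message = json.loads(raw.decode("utf-8"))
    if not isinstance(message, dict):
        raise ValueError("message must be a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(message.get(field), expected_type):
            raise ValueError(f"missing or invalid field: {field}")
    if message["version"] != SCHEMA_VERSION:
        raise ValueError(f"unsupported version: {message['version']}")
    return message


raw = encode_request("req-1", "summarize", {"text": "hello"})
print(raw)  # b'{"version":"1.0","request_id":"req-1","task":"summarize","payload":{"text":"hello"}}'
print(decode_request(raw)["task"])  # summarize
```

The explicit version field lets operators evolve the protocol while rejecting messages they cannot interpret. A binary format could reduce message size further if transfer efficiency is critical.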

Current Issues in Interface Development

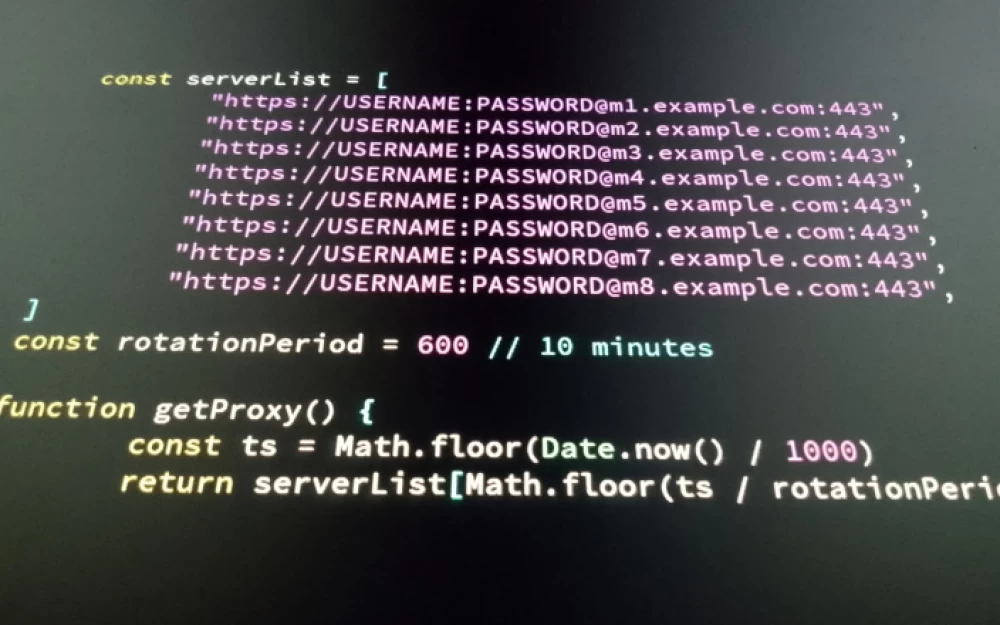

1. Security

One of the key issues in the development of AI interfaces is ensuring data security. The use of AI components requires the creation of reliable protection mechanisms to prevent data leaks and ensure user confidentiality. This includes encryption, multi-factor authentication, and continuous system monitoring for vulnerabilities.
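Multi-factor authentication often uses time-based one-time passwords (TOTP, RFC 6238) as the second factor. TOTP applies the HOTP algorithm from RFC 4226 to a counter of 30-second time steps. The standard-library sketch below shows how such codes are derived and checked. It is meant for illustration, not as a hardened implementation.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low 4 bits pick a 4-byte window
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the number of elapsed time steps."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, submitted: str, at: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept a code from the current or an adjacent time step to tolerate clock drift."""
    now = time.time() if at is None else at
    return any(
        # compare_digest avoids leaking information through comparison timing.
        hmac.compare_digest(totp(secret, now + drift * step, step, len(submitted)), submitted)
        for drift in range(-window, window + 1)
        if now + drift * step >= 0
    )


# RFC 6238 test secret; at t = 59 s the 8-digit SHA-1 code is 94287082.
print(totp(b"12345678901234567890", at=59, digits=8))  # 94287082
```

Because server and device derive the same code from a shared secret and the current time, no code travels over the network in advance.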

2. Ethics

Ethics and transparency issues are critical for the integration of AI into interfaces. AI must operate predictably and transparently, giving users control over their data. In addition, it is important to ensure that AI algorithms do not exhibit bias or discrimination, adhering to principles of fairness and responsibility.
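Bias can be monitored with simple fairness metrics. One of the most basic is the demographic parity difference: the gap between the highest and lowest rate of positive decisions across groups. The sketch below computes it for hypothetical decision records. A small value does not prove that a system is fair, and the appropriate metric depends on the application.

```python
from collections import defaultdict


def positive_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes per group, from (group, outcome) pairs."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        positives[group] += int(outcome)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(decisions: list[tuple[str, bool]]) -> float:
    """Largest minus smallest positive rate across groups (0.0 means equal rates)."""
    rates = positive_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical approval decisions produced by an AI component.
records = [("A", True), ("A", True), ("A", False), ("A", True),
           ("B", True), ("B", False), ("B", False), ("B", False)]
print(positive_rates(records))                 # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(records))  # 0.5
```

Tracking a metric like this over time can reveal whether a model's behavior drifts toward unequal treatment of user groups.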

3. User Experience (UX)

Optimizing user experience is an ongoing task in interface development. This requires regular interface testing and user feedback analysis. UX design should be intuitive, adaptive, and capable of meeting the needs of various types of users, minimizing interaction complexity.

4. Interface Development for Network Interaction in Web 3

Developing interfaces for Web 3, the decentralized internet, opens up new opportunities. The main features of Web 3 that affect interfaces include:

- Decentralization: the ability to work with decentralized applications (dApps) and services without centralized servers.

- Security: blockchain technologies protect data through cryptographic protocols and make recorded entries tamper-evident and practically impossible to modify.

- Transparency: Web 3 offers transparent interaction, which increases user trust and allows all operations to be tracked.
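The tamper evidence behind the Security point comes from chaining records by cryptographic hash. The sketch below shows only that core idea, using Python's `hashlib`. It is not a blockchain: there is no network, consensus, or signatures, and the record format is hypothetical. Anyone who controls the entire chain could simply recompute every hash; public blockchains add distributed consensus to prevent that.

```python
import hashlib
import json


def record_hash(data: str, prev_hash: str) -> str:
    """SHA-256 over the record's data and the previous record's hash."""
    return hashlib.sha256(json.dumps([data, prev_hash]).encode("utf-8")).hexdigest()


def append(chain: list[dict], data: str) -> None:
    """Add a record linked to the hash of the last record (or a zero hash for the first)."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    chain.append({"data": data, "prev": prev_hash, "hash": record_hash(data, prev_hash)})


def is_valid(chain: list[dict]) -> bool:
    """Recompute every hash; editing any record makes its stored hash inconsistent."""
    prev_hash = "0" * 64
    for block in chain:
        if block["prev"] != prev_hash or block["hash"] != record_hash(block["data"], prev_hash):
            return False
        prev_hash = block["hash"]
    return True


ledger: list[dict] = []
for entry in ("alice pays bob 5", "bob pays carol 2"):
    append(ledger, entry)
print(is_valid(ledger))  # True
ledger[0]["data"] = "alice pays bob 500"
print(is_valid(ledger))  # False
```

Each record's hash also covers the previous hash. Rewriting an old entry without detection therefore requires recomputing every later link, which other participants holding copies of the chain would notice.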

These issues and trends show that for the further successful development of AI interfaces, it is necessary to focus not only on technological progress but also on adhering to security principles, ethics, and improving user experience, as well as adapting to new architectures such as Web 3.

Conclusion

AI interfaces play a crucial role in creating effective interaction between users and artificial intelligence systems. The evolution from simple text commands to adaptive systems like ChatGPT highlights the importance of flexibility, scalability, and adaptability for the successful integration of AI into everyday life.

Modern AI systems require developers to create not only convenient but also intelligent interfaces capable of learning and adapting to user needs. The economic advantages of such systems are obvious: they improve user experience, create new markets, and open up business opportunities while contributing to the standardization of interaction.

A hybrid approach that combines integration and modular architecture provides broad prospects for the development of AI interfaces, ensuring high performance and flexibility in the face of rapidly changing market demands.

The future of AI interfaces lies in their ability to deeply interact with the user. These systems will not only respond to requests but also offer intelligent solutions based on the analysis of user behavior and preferences.

Investments in AI interfaces are becoming a strategically important direction, contributing not only to the improvement of current technologies but also to the opening of new ways of interaction between humans and artificial intelligence, which will help create a more sustainable and efficient economic environment.

DHAIE (Design Human-AI Engineering & Enhancement) offers a comprehensive approach to human-AI interaction, developing adaptive interfaces that evolve with the user. These technologies have the potential to become the foundation for new economic and technological models of the future.
