Keeping up with deepfakes: application of technology and ethical aspects

Deepfake technology carries serious ethical implications, raising concerns about misinformation and manipulation. By seamlessly blending fabricated content with reality, deepfakes undermine trust in the media and public discourse. And because people's likenesses can be exploited without their consent, the technology also jeopardizes personal safety.

Trust issues are exacerbated as distinguishing truth from lies becomes an increasingly challenging task. Mitigating these ethical dilemmas requires proactive measures, including reliable detection systems and regulatory frameworks.

What are deepfakes?

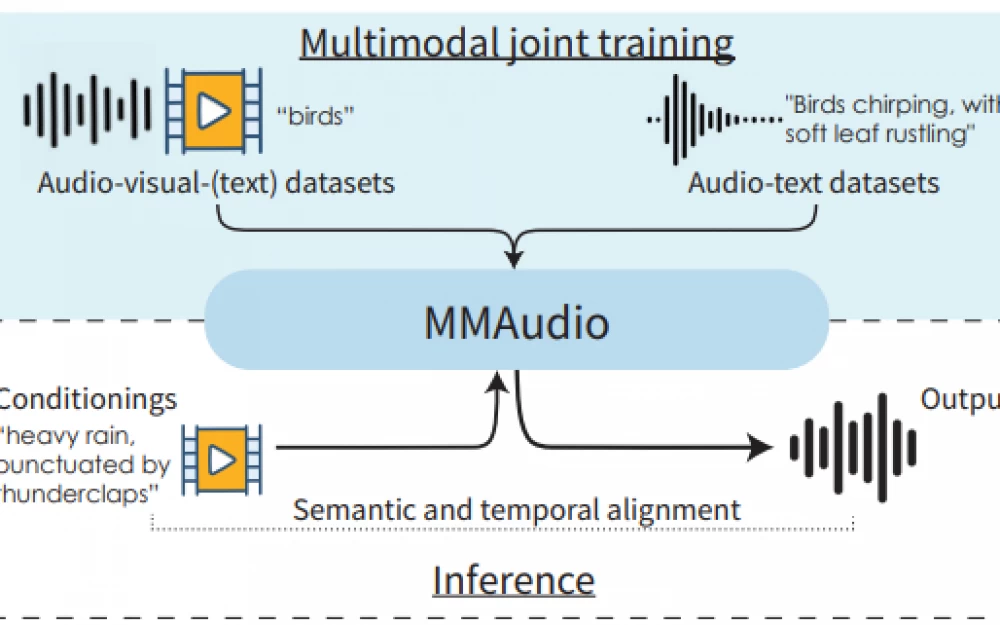

Developments in generative artificial intelligence (genAI) and large language models (LLMs) have led to the emergence of deepfakes. The term "deepfake" comes from the use of deep learning (DL) to create new but fake versions of existing images, video, or audio.

Deep learning is a type of machine learning built on neural networks with "hidden layers." These are series of nodes that apply mathematical transformations to their input, in this case turning real images into convincing fake ones. The more hidden layers a neural network has, the "deeper" it is. Neural networks, and especially convolutional neural networks (CNNs), are known to handle image recognition tasks quite well, so applying them to deepfake creation is a natural step.

There are two main types of tools. The first is generative adversarial networks (GANs), which pit two networks against each other. One network (the generator) creates a deepfake, while the other (the discriminator) tries to identify it as a fake. The constant battle improves both the forger and the detector.
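The generator-versus-discriminator loop described above can be sketched on plain numbers instead of images. Everything here is an assumption made for illustration: the `TinyGAN` class, the linear generator, the logistic discriminator, the learning rate, and the "real" data drawn around the value 4. Real deepfake tools use deep networks, and GAN training is known to oscillate, so this toy is not guaranteed to settle.

```python
import math
import random


def sigmoid(t):
    # Numerically safe logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class TinyGAN:
    """Toy 1-D GAN (hypothetical, for illustration only)."""

    def __init__(self, lr=0.05):
        self.a, self.b = 1.0, 0.0  # generator: fake = a * noise + b
        self.w, self.c = 0.0, 0.0  # discriminator: p(real) = sigmoid(w * x + c)
        self.lr = lr

    def generate(self, noise):
        return [self.a * z + self.b for z in noise]

    def discriminate(self, x):
        return sigmoid(self.w * x + self.c)

    def discriminator_step(self, real, fake):
        # Gradient ascent on log D(real) + log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            err = 1.0 - self.discriminate(x)
            gw += err * x / len(real)
            gc += err / len(real)
        for x in fake:
            err = self.discriminate(x)
            gw -= err * x / len(fake)
            gc -= err / len(fake)
        self.w += self.lr * gw
        self.c += self.lr * gc

    def generator_step(self, noise):
        # Gradient ascent on log D(G(z)): push fakes toward "looks real".
        ga = gb = 0.0
        for z in noise:
            err = 1.0 - self.discriminate(self.a * z + self.b)
            ga += err * self.w * z / len(noise)
            gb += err * self.w / len(noise)
        self.a += self.lr * ga
        self.b += self.lr * gb


def train(steps=200, batch=64, seed=0):
    rng = random.Random(seed)
    gan = TinyGAN()
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]   # assumed "real" data
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        gan.discriminator_step(real, gan.generate(noise))    # detector improves
        gan.generator_step(noise)                            # forger responds
    return gan
```

Each loop iteration is one round of the "battle": the discriminator first learns to separate real samples from fakes, then the generator shifts its output in the direction the discriminator currently rates as more real.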

The other type is autoencoders, which are neural networks that learn to compress data and then recreate it. This allows for data manipulation and deepfake generation by altering the compressed version.
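As a rough illustration of the compress-then-recreate idea, the sketch below trains a tiny linear autoencoder that squeezes two numbers into a single code and back, then edits the code to produce a new output. The function names, the toy data (points on the line y = 2x), and the training settings are all assumptions for this example; real deepfake autoencoders are deep networks trained on face images.

```python
def encode(x, enc):
    # Compress a 2-number input into a single code value.
    return enc[0] * x[0] + enc[1] * x[1]


def decode(code, dec):
    # Recreate a 2-number output from the code.
    return (dec[0] * code, dec[1] * code)


def reconstruction_error(data, enc, dec):
    # Mean squared distance between inputs and their reconstructions.
    total = 0.0
    for x in data:
        y = decode(encode(x, enc), dec)
        total += (y[0] - x[0]) ** 2 + (y[1] - x[1]) ** 2
    return total / len(data)


def train_autoencoder(data, steps=500, lr=0.05):
    enc = [0.1, 0.1]  # encoder weights (2 inputs -> 1 code)
    dec = [0.1, 0.1]  # decoder weights (1 code -> 2 outputs)
    for _ in range(steps):
        g_enc = [0.0, 0.0]
        g_dec = [0.0, 0.0]
        for x in data:
            code = encode(x, enc)
            err = [dec[i] * code - x[i] for i in range(2)]
            # Error signal flowing back through the decoder to the code.
            back = err[0] * dec[0] + err[1] * dec[1]
            for i in range(2):
                g_dec[i] += err[i] * code / len(data)
                g_enc[i] += back * x[i] / len(data)
        for i in range(2):
            enc[i] -= lr * g_enc[i]
            dec[i] -= lr * g_dec[i]
    return enc, dec


# Toy "dataset": points on the line y = 2x (an assumption for this sketch).
data = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]
enc, dec = train_autoencoder(data)

# Manipulation happens in the compressed space: scale the code, then decode.
code = encode((0.5, 1.0), enc)
edited = decode(2.0 * code, dec)
```

Because the decoder has only learned to produce points that resemble its training data, changing the code yields a different but still plausible output. Deepfake tools exploit the same property with far richer codes.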

Criminal activity using deepfakes

Today, there are countless legitimate applications of deepfakes in industries such as art, entertainment, and education. In the film industry, this technology can be used, for example, to de-age actors, allowing them to play younger versions of themselves without the need for makeup or body doubles. In the latest Indiana Jones movie, actor Harrison Ford was made to look over 40 years younger. Deepfakes are also used to "bring back to life" historical figures or events, providing a visual representation that adds depth and immediacy to the narrative.

The problem is that deepfakes are not used only for legitimate purposes. This is especially critical in modern society, where the vast majority of people get their information about the world, and form their opinions, from content on the Internet. Anyone able to create deepfakes can spread misinformation and influence the masses, steering others to behave in ways that serve the faker's personal interests. Misinformation based on deepfakes can sow chaos on both micro and macro scales.

On a small scale, deepfakers can, for example, create personalized videos in which someone who appears to be a relative asks for money to get out of an emergency.

On a global scale, fake videos in which well-known world leaders make fictional statements can provoke violence and even war.

Using synthetic content for cyberattacks

From 2023 to 2024, frequent phishing attacks and social engineering campaigns led to account hacking, asset and data theft, identity theft, and reputational damage to businesses across all industries.

A notorious deepfake attack was a fraud incident that affected a bank in Hong Kong in 2020. A manager received a call from the "company director" requesting authorization for a transfer as the company was about to make an acquisition. Additionally, he received an email signed by the director and a lawyer, which looked genuine, but both the document and the voice were fake. The manager made the transfer. Investigators were able to trace the stolen funds and found that 17 people were involved in the fraud.

There is also a risk for insurance companies, as fraudsters can use deepfakes to fabricate evidence for illegitimate claims. Insurance fraud using fake evidence is not new. But while in the era of analog photography it required a lot of effort and expertise, today image manipulation tools are built into widely available software.

Attacks on medical infrastructure

Although the threat of deepfakes in healthcare remains largely hypothetical, the industry is actively engaged in preemptively addressing this danger. Concerns cover several key aspects:

false content can hinder the dissemination of accurate health information, potentially undermining trust in reliable sources;

scammers can use convincing audio and visual materials to deceive patients, posing as medical professionals to obtain confidential data;

hackers can use synthesized audio to break into hospital systems.

As early as 2019, Israeli researchers demonstrated how malware can be used to alter MRI or CT scans. As part of the demonstration, they hacked and edited medical 3D scans to add images of tumors. Radiologists were then brought in to interpret the results, and they could not distinguish between real and fake scans.

There are many motives for conducting such attacks, including falsifying research evidence, insurance fraud, corporate sabotage, job theft, terrorism, etc.

Impact on evidence in legal proceedings

The spread of deepfakes can make anyone doubt the veracity of evidence. The fact that fake information can be both convincing and difficult to identify raises concerns about how this technology can jeopardize the court's duty to establish the truth.

How to protect yourself or your business from deepfakes?

Attempting to hold those responsible for deepfakes accountable is fraught with problems. In addition to the difficulty of identifying offenders, deepfake creators, like other cybercriminals, can operate from outside the country's jurisdiction. At the same time, although deepfakes have the potential for large-scale and dangerous consequences for our society, they remain largely unregulated by law.

However, there are several measures that people can take on their own to reduce the risks associated with deepfake activities.

Transparency and vigilance. Knowledge is the first line of defense. Regular training sessions and seminars can equip company employees with tools to distinguish between genuine and fake content.

Secure communication channels. Use encrypted communication channels and platforms with multi-factor authentication for critical business communications, especially those related to finance or confidential internal matters.

Investment in cybersecurity. Cybercriminals are becoming virtuosos in handling artificial intelligence, and to thwart them, it may be necessary to fight fire with fire.

There may not be a perfect solution to protect against the evolving threat of deepfake fraud. As technology evolves, people will find new ways to use it for both benign and malicious purposes. Nevertheless, there are strategies that organizations and individuals can use to prevent deepfake fraud and mitigate its consequences if it occurs.

Moreover, scientists, researchers, and founders of technology companies are now working together on ways to track and label AI content. Using a variety of methods and forming alliances with news organizations, they hope to prevent further erosion of the public's ability to tell what is true from what is not.

Camera manufacturers Sony, Nikon, and Canon have begun developing ways to embed special "metadata," recording when and by whom a photograph was taken, directly at the moment the image is created.
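To show how such capture-time metadata could make tampering detectable, here is a minimal sketch. It is not how any of these manufacturers implement the feature: the function names, the record fields, and the use of an HMAC with a shared `device_key` are assumptions chosen to keep the example within Python's standard library. Real provenance schemes typically rely on public-key signatures so that anyone can verify a photo without holding a secret.

```python
import hashlib
import hmac
import json


def sign_capture(image_bytes, author, taken_at, device_key):
    """Build a provenance record at capture time (hypothetical format)."""
    record = {
        "author": author,
        "taken_at": taken_at,
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
    }
    # Sign a canonical serialization of the record.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["signature"] = hmac.new(device_key, payload, hashlib.sha256).hexdigest()
    return record


def verify_capture(image_bytes, record, device_key):
    """Return True only if both the image and its metadata are unmodified."""
    claimed = {k: v for k, v in record.items() if k != "signature"}
    # 1. The image must still match the hash stored in the record.
    if claimed.get("sha256") != hashlib.sha256(image_bytes).hexdigest():
        return False
    # 2. The record itself must carry a valid signature.
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(device_key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record.get("signature", ""))


# Example with made-up values.
key = b"demo-device-key"
photo = b"raw image bytes would go here"
record = sign_capture(photo, "Alice", "2024-01-01T12:00:00Z", key)
```

Changing a single byte of the image, or editing the author or timestamp in the record, causes `verify_capture` to return `False`. A check like this confirms that a file is unchanged since capture; it says nothing about whether the scene in front of the camera was genuine.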

Some companies, including Reality Defender and Deep Media, have created tools that detect deepfakes based on the underlying technology used by AI-based image generators.

But even if all implemented methods are successful and all major technology companies fully join them, people will still have to critically evaluate what they see on the internet.

Resolving these ethical, social, and personal dilemmas requires a multifaceted approach to detecting deepfakes. Legal frameworks are also needed to protect the rights and privacy of individuals. Awareness and public education about the responsible use of AI should be woven into business operations, government initiatives, and industry practices. Collaboration between technology developers, policymakers, researchers, and society as a whole is crucial to overcoming the challenges associated with deepfakes.

However, it is not all bad news. This technology has enormous positive potential for the public. It opens the door to use cases that can bring remarkable transformations to the world, such as improved accessibility for people with disabilities, educational tools for modeling scenarios and events that would otherwise be inaccessible, and personalized virtual assistants capable of human-like interaction.