No security exists: how the NSA hacks your secrets

Finite fields, hash meat grinders, covert radio channels, and trojans soldered into silicon. While we pride ourselves on AES-256 locks, intelligence agencies look for workarounds: they tamper with random number generators, eavesdrop on the whine of laptop coils, and smuggle keys out inside inconspicuous ICMP packets. This article assembles a mosaic of modern attacks, from mathematical loopholes to physical side channels, and asks an uncomfortable question: does absolute security even exist? If you’re sure it does, check whether your shield is cracking at the seams.

Today is Friday the 13th. In culture, this day is shrouded in a sense of impending doom, as if luck itself is taking a day off. In the novel "The Three-Body Problem" and its adaptation, humanity faces a horror scenario: scientists around the world end their lives, whispering that "physics no longer exists." An alien intelligence uses perfect technology ("sophons") to sabotage experiments and make the laws of nature appear broken. This raises a disturbing question: what if, in the digital world, our sense of security is just a convenient illusion? What if the cryptographic protection we rely on can fall apart the moment a skilled enough hacker appears? Maybe absolute security doesn’t exist—and it’s time to peek behind the curtain of this intellectual thriller.

Finite fields: a game by closed rules

Imagine an ordinary clock: 9 + 4 on the dial gives 1, because the hand goes around and returns to the same twelve-division universe. This is how modular arithmetic works, and it underlies what's called a finite field. (Strictly speaking, the dial is only an analogy: a true field needs a prime modulus, or a prime-power construction such as the GF(2⁸) used in AES, so that division by every nonzero element is possible.) You have a limited set of numbers, and any operation, whether addition, multiplication, or exponentiation, never breaks out of its borders. Crypto algorithms love this “fenced playground”: everything is deterministic, verifiable, repeatable.
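To make the clock picture concrete, here is a minimal Python sketch. The function names are illustrative, not taken from any library. It reproduces the 9 + 4 example and shows why a 12-division dial is not quite a field: only some residues have a multiplicative inverse, while a prime modulus such as 13 gives every nonzero residue one.

```python
def clock_add(a, b, modulus=12):
    """Add two numbers on a dial with `modulus` divisions (result in 0..modulus-1)."""
    return (a + b) % modulus


def invertible_elements(modulus):
    """Return the nonzero residues that have a multiplicative inverse mod `modulus`."""
    result = []
    for a in range(1, modulus):
        try:
            pow(a, -1, modulus)  # Python 3.8+; raises ValueError if no inverse exists
            result.append(a)
        except ValueError:
            pass
    return result


print(clock_add(9, 4))          # 1, as on the dial
print(invertible_elements(12))  # [1, 5, 7, 11]: most residues cannot be divided by
print(invertible_elements(13))  # all of 1..12: the integers mod 13 form a field
```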

But the coziness of a closed space also brings weakness. If the field is finite, then in principle every state can be calculated or enumerated; it’s just a matter of resources. In ciphers, the numbers are monstrous: AES operates on 2¹²⁸ possible blocks, a number so astronomical that brute force seems insane. Nevertheless, it’s still finite. Which means that, in theory, there’s a way, however astronomically expensive, to go through the whole cycle and return to the starting point. And if someone, say an intelligence agency with an unlimited budget, gets their hands on some clever math or a quantum lever, the cozy playground turns into a trap.
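How expensive is "a matter of resources"? A back-of-the-envelope sketch, assuming a hypothetical machine that tests 10¹⁸ candidates per second (an assumption chosen for illustration, not a measured figure):

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def years_to_enumerate(bits, ops_per_second):
    """Years needed to try every value of a `bits`-bit space at the given rate."""
    return 2 ** bits / ops_per_second / SECONDS_PER_YEAR


# Assumed rate: 1e18 trials per second.
print(f"{years_to_enumerate(128, 1e18):.2e} years")
print(f"{years_to_enumerate(64, 1e18):.2e} years")
```

For 128 bits the result is on the order of 10¹³ years; for 64 bits it is a fraction of a second. The space is finite in both cases, and only the exponent separates the "insane" from the trivial.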

Hash functions: a meat grinder that sometimes reverses

Hash functions are often called a digital meat grinder: any message goes in, and out comes a fixed-length “cutlet.” Theoretically, you can’t turn minced meat back: the hash forces infinitely many different inputs into the same imprint. But let’s remember the finiteness of fields. If the function works inside a closed universe of bit strings, it, like a clock, will eventually repeat—it’s just a matter of how long the cycle is.
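The "it will eventually repeat" claim is easy to watch on a deliberately tiny hash. The sketch below assumes a toy function, SHA-256 truncated to two bytes, so that only 65,536 outputs exist. It feeds each output back in as the next input and reports when the sequence closes into a loop.

```python
import hashlib


def tiny_hash(data: bytes, nbytes: int = 2) -> bytes:
    """SHA-256 truncated to `nbytes` bytes: a toy hash with a tiny output space."""
    return hashlib.sha256(data).digest()[:nbytes]


def find_cycle(start: bytes, nbytes: int = 2):
    """Iterate x -> tiny_hash(x) until a value repeats.

    Returns (tail_length, cycle_length): the number of steps before the loop
    is entered, and the length of the loop itself.
    """
    seen = {}                      # value -> step at which it first appeared
    x = tiny_hash(start, nbytes)
    step = 0
    while x not in seen:
        seen[x] = step
        x = tiny_hash(x, nbytes)
        step += 1
    return seen[x], step - seen[x]


tail, cycle = find_cycle(b"seed")
print(f"tail={tail}, cycle={cycle}")
```

With a 16-bit output the loop typically closes after a few hundred steps. For a full 256-bit digest the same experiment would need on the order of 2¹²⁸ steps.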

This very crack was exposed in 2017, when researchers from CWI Amsterdam and Google published a practical collision for SHA-1. Instead of fantastical billions of years, roughly 2⁶³ hash computations on rented cloud hardware and neat mathematical tricks sufficed. A collision is not yet an inversion, since nobody recovered an input from its digest, but the lesson was clear: irreversibility isn’t “magic,” it only means the search space is currently too vast for mere mortals. But if you have access to exaflops, to a cunning algebraic method, or to a quantum accelerator, the meat grinder might be spun back toward the original bone. All you have to do is find the right rotation frequency, and the sanctity of the hash turns into an illusion.
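A collision search can be sketched at toy scale. The example assumes SHA-256 truncated to three bytes (24 bits) and hypothetical message names. Because of the birthday effect, two different messages with the same digest turn up after a few thousand attempts rather than 2²⁴. Note what it does and does not show: it finds two inputs that collide, not the input behind a given digest.

```python
import hashlib
from itertools import count


def truncated_sha256(data: bytes, nbytes: int = 3) -> bytes:
    """First `nbytes` bytes of SHA-256: a toy hash with a small output space."""
    return hashlib.sha256(data).digest()[:nbytes]


def find_collision(nbytes: int = 3):
    """Birthday search: return (msg_a, msg_b, digest) with msg_a != msg_b."""
    seen = {}                                  # digest -> first message producing it
    for i in count():
        msg = f"message-{i}".encode()
        digest = truncated_sha256(msg, nbytes)
        if digest in seen:
            return seen[digest], msg, digest
        seen[digest] = msg


a, b, d = find_collision()
print(a, b, d.hex())
```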

Block ciphers: secrets on the field of battle

Let’s move on to the heavy artillery — block algorithms. Let’s take AES, the king of modern encryption. On paper it looks monumental: 10–14 rounds of diffusion and confusion, a huge key, the stern mathematics of the GF(2⁸) field. For each key, AES generates just a single giant permutation of all 2¹²⁸ possible blocks. In other words, the cipher is a strictly defined function on a finite set, which means it’s theoretically reversible if you know the secret order.

What’s the catch? Inside the AES rounds lies elegant algebra. Bytes go through the S-box, which can be described as an inverse modulo x⁸ + x⁴ + x³ + x + 1 followed by an affine transformation. All this operates in that same finite field, and if someone ever figures out how to “straighten out” these transformations into a system of equations, the cipher’s resilience collapses. There are no such methods today — at least, not publicly. But the story of DES teaches that the possibility of some “unknown science” in the hands of intelligence agencies is quite real.
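The algebra of the S-box fits in a few lines. The following sketch computes it directly from the description above (inverse in GF(2⁸) modulo x⁸ + x⁴ + x³ + x + 1, then the affine transformation) instead of using the usual lookup table. It is written for readability, not speed or side-channel safety, and the helper names are my own.

```python
def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8) modulo x^8 + x^4 + x^3 + x + 1 (0x11B)."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:       # degree reached 8: reduce by the field polynomial
            a ^= 0x11B
        b >>= 1
    return result


def gf_inv(a: int) -> int:
    """Multiplicative inverse as a^254 (a^255 = 1 for a != 0); 0 maps to 0."""
    if a == 0:
        return 0
    result = 1
    for _ in range(254):
        result = gf_mul(result, a)
    return result


def rotl8(x: int, shift: int) -> int:
    """Rotate an 8-bit value left by `shift` bits."""
    return ((x << shift) | (x >> (8 - shift))) & 0xFF


def aes_sbox(byte: int) -> int:
    """AES S-box: field inverse followed by the affine transformation."""
    inv = gf_inv(byte)
    return inv ^ rotl8(inv, 1) ^ rotl8(inv, 2) ^ rotl8(inv, 3) ^ rotl8(inv, 4) ^ 0x63


print(hex(aes_sbox(0x00)), hex(aes_sbox(0x53)))  # 0x63 0xed
```

The whole nonlinear layer of the cipher is this one compact formula, which is exactly why algebraic attacks keep attracting attention.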

Let’s add the notorious problem of implementations. Hardware accelerators like AES-NI act as a black box: yes, they encrypt faster, but what else are they doing with the key while the user blinks? The smallest engineering flaw is enough for a side channel to emerge. One extra spike in power consumption, one data-dependent difference in timing, and a cryptanalyst with an oscilloscope starts reading secrets like a paperback novel.
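A timing side channel does not need an oscilloscope; a careless comparison is enough. The sketch below is a software analogy and makes no claim about how any particular hardware behaves. `leaky_equals` returns at the first mismatching byte, so the work it does reveals how many leading bytes of a guess are correct, while `hmac.compare_digest` from the standard library is designed to avoid that dependence. The step counter stands in for a real timing measurement.

```python
import hmac


def leaky_equals(a: bytes, b: bytes) -> bool:
    """Early-exit comparison: running time depends on the first mismatching byte."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False
    return True


def constant_time_equals(a: bytes, b: bytes) -> bool:
    """Comparison whose timing does not depend on where the inputs differ."""
    return hmac.compare_digest(a, b)


def comparisons_before_exit(secret: bytes, guess: bytes) -> int:
    """Count the byte comparisons the leaky version performs (a proxy for time)."""
    n = 0
    for x, y in zip(secret, guess):
        n += 1
        if x != y:
            break
    return n


secret = b"secret-tag"
print(comparisons_before_exit(secret, b"xxxxxxxxxx"))  # 1: wrong from the first byte
print(comparisons_before_exit(secret, b"secret-xxx"))  # 8: seven bytes already right
```

An attacker who can measure that difference recovers the secret one byte at a time instead of guessing it whole.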

This is how it turns out: the math can be reinforced concrete, but a single microscopic gap — and all that grandeur turns into a decorative façade, through which an experienced adversary walks without keys or lockpicks.

Randomness: generators in the crosshairs

No cipher is stronger than its key, and no key is stronger than the source that generated it. Randomness is the heart of cryptography, and that’s exactly what today’s most advanced hackers are targeting. A computer, no matter how hard it tries, can’t really “roll the dice”: inside, there are just cold transistors running deterministic code. So pseudorandom generators come into play — algorithms that grow an entire ocean of bits from a tiny seed of entropy.
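The determinism is easy to demonstrate. In this sketch (the function name and seed values are arbitrary), a "key" drawn from Python's general-purpose `random` module is fully determined by its seed, so anyone who learns or guesses the seed regenerates the key. The `secrets` module, by contrast, draws from the operating system's entropy pool.

```python
import random
import secrets


def pseudo_random_key(seed: int, nbytes: int = 16) -> bytes:
    """Derive a 'key' from a seeded Mersenne Twister. Never do this for real keys."""
    rng = random.Random(seed)
    return bytes(rng.getrandbits(8) for _ in range(nbytes))


victim_key = pseudo_random_key(2024)
attacker_key = pseudo_random_key(2024)     # same seed, same "random" key
print(victim_key == attacker_key)          # True

# For actual key material, ask the operating system instead.
os_key = secrets.token_bytes(16)
print(os_key.hex())
```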

And here’s where the magic starts to resemble card tricks. In 2013, a scandal erupted around Dual_EC_DRBG, a generator standardized by NIST. It turned out that its elliptic-curve points were unexplained constants whose hidden relationship could be known to a select few. Whoever knew that relationship could recover the internal state from a small amount of output and predict every “random” value to follow. According to press reports, RSA Security received 10 million dollars to make this generator the default in its libraries: a quiet backdoor, disguised as a standard.
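The mechanism can be reproduced on a toy scale. Everything in the sketch below is an assumption made for illustration: the curve y² = x³ + 7 over the prime 2¹²⁷ − 1, the points, and the secret number are not the NIST constants, and the output is not truncated (the real generator drops 16 bits, which an attacker has to brute-force). The structure is the same, though: two public points P and Q, and a designer who secretly knows e with P = e·Q.

```python
P_MOD = 2**127 - 1   # toy prime, congruent to 3 mod 4 (assumption, not a NIST parameter)
B_COEF = 7           # toy curve: y^2 = x^3 + 7 over GF(P_MOD)


def ec_add(A, B):
    """Add two affine points; None represents the point at infinity."""
    if A is None:
        return B
    if B is None:
        return A
    x1, y1 = A
    x2, y2 = B
    if x1 == x2 and (y1 + y2) % P_MOD == 0:
        return None
    if A == B:
        lam = 3 * x1 * x1 * pow(2 * y1, -1, P_MOD) % P_MOD
    else:
        lam = (y2 - y1) * pow(x2 - x1, -1, P_MOD) % P_MOD
    x3 = (lam * lam - x1 - x2) % P_MOD
    return x3, (lam * (x1 - x3) - y1) % P_MOD


def ec_mul(k, A):
    """Scalar multiplication by double-and-add."""
    result = None
    while k:
        if k & 1:
            result = ec_add(result, A)
        A = ec_add(A, A)
        k >>= 1
    return result


def lift_x(x):
    """Return a curve point with x-coordinate `x`, or None if there is none."""
    rhs = (pow(x, 3, P_MOD) + B_COEF) % P_MOD
    y = pow(rhs, (P_MOD + 1) // 4, P_MOD)    # square root, valid since P_MOD % 4 == 3
    return (x, y) if y * y % P_MOD == rhs else None


Q = next(pt for pt in map(lift_x, range(1, 1000)) if pt is not None)
SECRET_E = 0x1234567890ABCDEF                # known only to the designer
P = ec_mul(SECRET_E, Q)                      # published as an unexplained constant


def dual_ec_step(state):
    """One generator round: returns (next_state, output)."""
    return ec_mul(state, P)[0], ec_mul(state, Q)[0]


def predict_next_output(observed_output, secret_e):
    """Backdoor: from one output, recover the next state and the next output."""
    R = lift_x(observed_output)              # R = +/- state*Q
    next_state = ec_mul(secret_e, R)[0]      # e*R = +/- state*P: same x-coordinate
    return ec_mul(next_state, Q)[0]


state1, out1 = dual_ec_step(987654321)
_, out2 = dual_ec_step(state1)
print(predict_next_output(out1, SECRET_E) == out2)   # True
```

Without `SECRET_E`, recovering the state from an output would require solving a discrete logarithm on the curve; with it, a single observed value is enough.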

Hardware RNG modules look better: they listen to thermal noise, radiation bursts, quantum fluctuations. But recall the RDRAND instruction in Intel processors — a little black box that thousands of programs trust without question. How many engineers actually checked what’s inside? How do you make sure there isn’t an extra register deep in the silicon, quietly sending every batch of “randomness” to a secret engineering stash?

And while we argue about what counts as sufficient entropy, the attacker chooses the simplest path: stripping off the mask. He measures how often your generator repeats itself, looks for weak linear relations in its output, slips you a predictable initialization vector. Because if randomness isn't random, all cryptography collapses instantly, like a house of cards in the wind.

Hardware backdoors: a trojan soldered into silicon

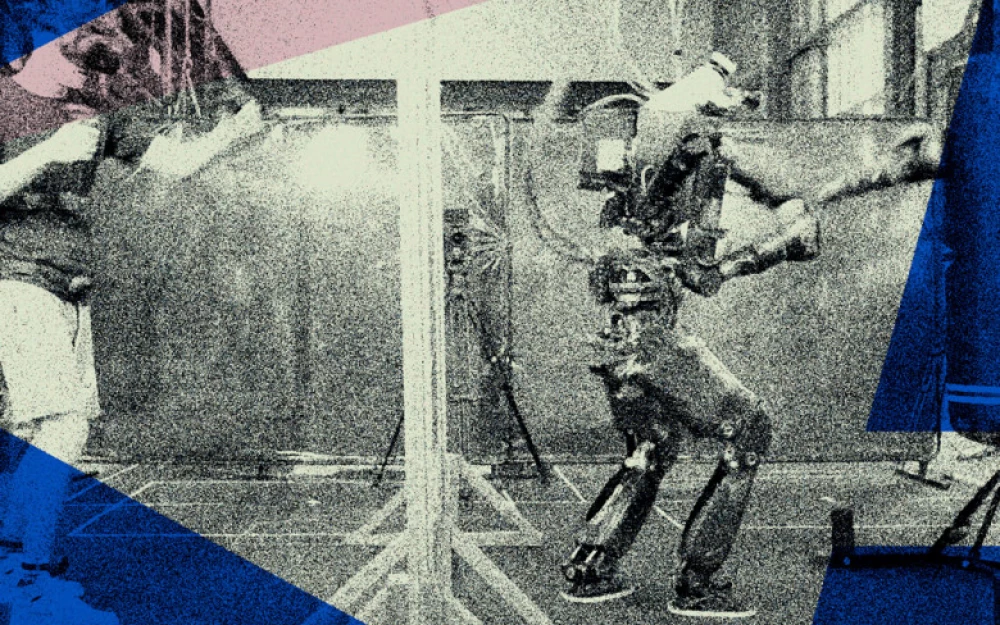

Cryptography can be perfect, but what if the silicon itself is already playing for the enemy? After Snowden's leaks, it became clear: intelligence agencies have been working in the supply chain for years. The revealed documents described a mundane scene — a box of Dell servers is "accidentally" delayed for a few hours, a micro-module bug is installed inside, and the equipment continues on to an unsuspecting bank.

The most dangerous foothold for such an "infection" is the hidden subsystem inside modern processors. For Intel, it’s the Management Engine: a tiny independent core that boots before the operating system, with its own network stack and direct access to memory. It doesn’t appear in the BIOS setup or in Task Manager, but it can read, encrypt, and send anything.

Network equipment is even more vulnerable: one extra microchip on the motherboard, a pair of non-standard traces — and now you have a transceiver capable of forwarding traffic around any VPN. Catching such a bug is almost impossible without X-ray and a lab. Even microcode updates that "improve stability" can secretly enable a mode where a specific command sequence lifts all access restrictions.

A hardware backdoor is a dream attack. It doesn’t depend on software, survives OS reinstalls, and triggers silently while the user confidently reads, “Connection secured with 256-bit encryption.”

Physical leakage channels: when the walls have ears

Let’s suppose the algorithm is flawless, the hardware is clean, the keys are generated perfectly. Even in such a “sterile” picture, the computer keeps talking — through the physics of its own body. Every operation inside the chip alters currents and fields: the processor draws a little more power, the switching coil gives a faint squeak, a capacitor radiates a microscopic beam of radio waves. For a cryptanalyst, this isn’t noise — it’s a full-fledged language.

TEMPEST (often expanded as Telecommunications Electronics Material Protected from Emanating Spurious Transmissions) appeared back in the 1960s, when intelligence agencies realized that the text typed on a teletype machine could be read from its voltage fluctuations. And then it got bigger. In 1985, researcher Wim van Eck showed that the image from a CRT monitor could be reconstructed with a radio receiver behind a wall, simply by listening to the electromagnetic background. Today's attacks are far more delicate: Israeli researchers extracted an RSA key by holding a $20 radio module up to a laptop; a Cambridge lab read out an encrypted phrase by analyzing the barely audible “whine” of VRM coils.

The leak can also go in reverse. A USB-C charging cable becomes an antenna: a sleeping smartphone gently modulates the current and transmits the keys into the air, in milliwatts, without alerting Bluetooth scanners. The status LED on a router sends Morse code to an infrared camera ten meters away. A laser microphone in a parking lot picks up window vibrations as a password is typed.

The main thing for the attacker is not to break the cipher, but to eavesdrop on the physical whisper of the device. Cryptography protects the bits in mathematics, but it doesn’t protect your hardware from making sounds, lighting up, and breathing in current. In the field of real emissions, even the perfect formula turns into a paper shield.

Invisible protocols: how packets spy without noise

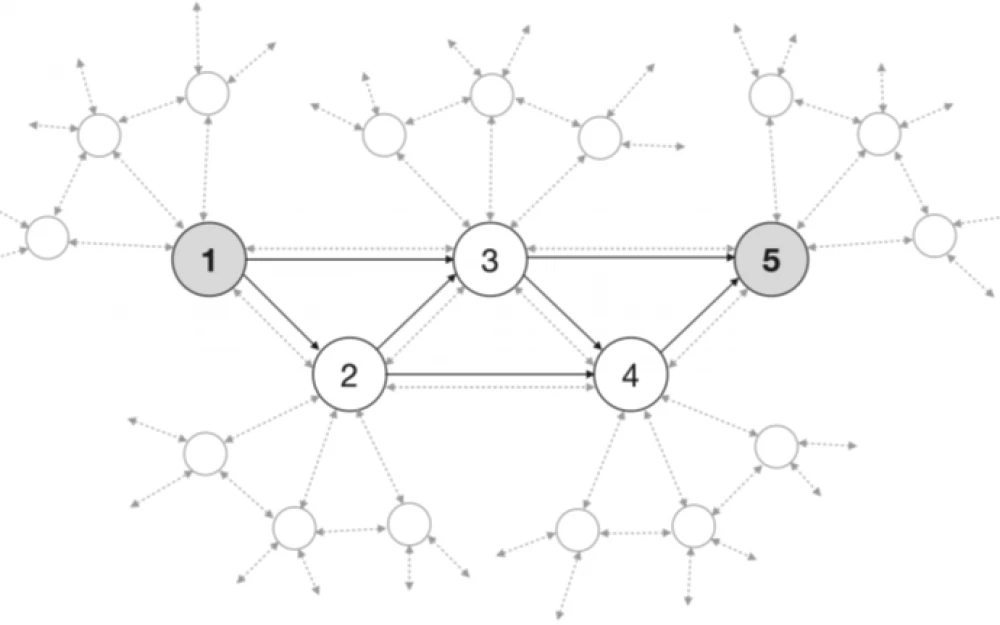

Even if emissions are suppressed and there are no bugs, the spy still needs to get the loot out. For that, there’s an entire menagerie of covert channels: paths that network filters consider “service junk” and let through automatically. The classic example is ICMP echo, that same ping. Formally, the packet only carries diagnostic bytes, but its data field can hold almost 64 KB of payload (65,507 bytes over IPv4). Embed an encrypted key in there, and the traffic will go out without leaving a line in the proxy log.
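To see where that data field sits, here is a sketch that assembles an ICMP echo request in memory (nothing is sent) and then looks at it from the defender's side. The header layout and checksum follow the ICMP specification. The 64-byte threshold in `looks_suspicious` is an assumption for illustration, based on the fact that ordinary ping tools send only a few dozen payload bytes.

```python
import struct

ICMP_ECHO_REQUEST = 8


def internet_checksum(data: bytes) -> int:
    """One's-complement sum of 16-bit words, as used by ICMP."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_echo_request(identifier: int, sequence: int, payload: bytes) -> bytes:
    """Type 8, code 0, checksum, identifier, sequence, then arbitrary payload."""
    header = struct.pack("!BBHHH", ICMP_ECHO_REQUEST, 0, 0, identifier, sequence)
    checksum = internet_checksum(header + payload)
    header = struct.pack("!BBHHH", ICMP_ECHO_REQUEST, 0, checksum, identifier, sequence)
    return header + payload


def echo_payload(packet: bytes) -> bytes:
    """Return the data field of an echo request."""
    icmp_type, code, _checksum, _ident, _seq = struct.unpack("!BBHHH", packet[:8])
    if icmp_type != ICMP_ECHO_REQUEST or code != 0:
        raise ValueError("not an ICMP echo request")
    return packet[8:]


def looks_suspicious(packet: bytes, max_len: int = 64) -> bool:
    """Flag echo requests whose payload is larger than a normal ping (assumed limit)."""
    return len(echo_payload(packet)) > max_len


ordinary = build_echo_request(1, 1, b"abcdefghijklmnopqrstuvwabcdefghi")
smuggler = build_echo_request(1, 2, b"\x00" * 512)
print(looks_suspicious(ordinary), looks_suspicious(smuggler))  # False True
```

A size check like this is crude: a patient attacker can split the secret across many normal-sized pings, which is why payload content and request rate matter as much as length.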

DNS tunneling is even trickier: a domain name like kd9fp3y7.example.com looks random, but in reality each label encodes another fragment of the secret. Providers resolve millions of these requests every day, and a couple hundred extra lines in the logs drown in the crowd.
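The encoding side of such a tunnel is only a few lines. In this sketch the Base32 alphabet, the 30-byte chunk size, and the function names are my own choices; `example.com` is the placeholder domain from the text. Each chunk of the secret becomes one label of a lookup name, and whoever controls the domain's name server reassembles the chunks from its query log.

```python
import base64

MAX_LABEL = 63   # DNS limit on the length of a single label


def encode_to_names(secret: bytes, domain: str, chunk: int = 30) -> list:
    """Split `secret` into Base32 labels and prepend each to `domain`."""
    names = []
    for i in range(0, len(secret), chunk):
        label = base64.b32encode(secret[i:i + chunk]).decode().rstrip("=").lower()
        if len(label) > MAX_LABEL:
            raise ValueError("chunk too large for one DNS label")
        names.append(f"{label}.{domain}")
    return names


def decode_from_names(names) -> bytes:
    """Reassemble the secret from the first label of each queried name."""
    out = b""
    for name in names:
        label = name.split(".", 1)[0].upper()
        label += "=" * (-len(label) % 8)     # restore the stripped Base32 padding
        out += base64.b32decode(label)
    return out


names = encode_to_names(bytes(range(40)), "example.com")
print(names)
print(decode_from_names(names) == bytes(range(40)))   # True
```

From the defender's side, the same structure is the giveaway: long, high-entropy leftmost labels and a steady stream of unique subdomains under one parent domain.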

It’s the same story in mobile networks: the 5G stack has management channels that routers don’t log at all. Send a “service” message with the right MIB label—and a satellite receiver thousands of kilometers away will accept it as routine telemetry.

The point is simple: not to break the cipher, but to extract the key along a road where no one is looking. And while we enjoy the green lock of TLS, somewhere an innocent ICMP packet flashes by, carrying that very 256-bit secret harvested by a hardware trojan.

Security illusion: simulation of trust

Let’s put the puzzle together. Finite fields offer a theoretical path to reversibility. Hashes and block ciphers are only resilient as long as no one finds a shortcut. A randomness generator can be “fed” fake entropy. A processor can shuffle keys right in the silicon, and a hidden radio module can send them outside. Then invisible protocols join in, sneaking the loot past IDS and VPN eyes.

The result is a strange world: from the user's side, everything shines with locks and certificates, while the adversary has a set of lockpicks, each trying not to even touch the cipher itself. Instead of a frontal assault, he goes around where mathematics, physics, and the supply chain converge. Add up these vectors and you see how almost any cryptosystem can be unraveled without ever breaking the formal “fortress” of the algorithms.

This leads to a provocative conclusion: there is no absolute security, only an attack cost that looks prohibitive from where we stand. Those with different resources see different horizons. Hence the main question: isn’t cryptography just a simulator of trust, a convenient game of locks until a strong player decides it’s time to come out of the shadows?

Final chord: what’s left for us?

If security is a stage where everyone has a part to play, then we, the users, are often sitting in the audience: applauding a beautiful performance of abbreviations—AES, SHA, TLS, TPM—and believing that the backstage is well sewn up. But the actor playing the villain has long been walking the service corridors, knows every hatch, and knows exactly where the secret door hides in the scenery.

What should we do? First, admit it: only dead systems have no vulnerabilities. Living software, living hardware, and living people will always leave a loophole. Second, learn to divide trust: store keys where microcode can't be replaced; generate randomness from several independent sources; keep an eye on the physical aspects of the device. Third, don't be afraid to doubt: ask manufacturers uncomfortable questions, check standards, turn protocols inside out.
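The advice about independent sources can be sketched as follows. The idea is to hash all samples together, so that the result stays unpredictable as long as at least one source is unpredictable and no source can see the others. The function names and the timing-jitter source are illustrative assumptions. This is a sketch of the principle, not a replacement for the operating system's generator.

```python
import hashlib
import os
import time


def mix_entropy(*samples: bytes, nbytes: int = 32) -> bytes:
    """Hash several entropy samples into one seed of `nbytes` bytes (max 64)."""
    if not 1 <= nbytes <= 64:
        raise ValueError("nbytes must be between 1 and 64")
    h = hashlib.sha512()
    for sample in samples:
        h.update(len(sample).to_bytes(4, "big"))   # length prefix keeps boundaries unambiguous
        h.update(sample)
    return h.digest()[:nbytes]


def timing_jitter_sample(rounds: int = 256) -> bytes:
    """Weak auxiliary source: low byte of a nanosecond counter, read repeatedly."""
    return bytes(time.perf_counter_ns() & 0xFF for _ in range(rounds))


# The OS pool plus an auxiliary source; a hardware RNG reading could be a third argument.
seed = mix_entropy(os.urandom(32), timing_jitter_sample())
print(seed.hex())
```

If one of the inputs turns out to be backdoored, the attacker still has to guess the others before the mixed seed becomes predictable.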

And finally, keep in mind: absolute zen is unattainable, but a restless mind is the best defense. As long as we keep our eyes open, the next "sophon" has less chance to silently replace reality. Security is a process, not a state; a road with no finish line. The more carefully we look into the shadows, the less space is left for those trying to hide there.
