Why I stopped using AI-based code editors

This article is a translation of a blog post by Luciano Nooijen, published on April 1, 2025.

I’m publishing this translation while agents write code for a couple of my projects. Every few minutes I review their work and give clarifications.

During long walks on Samui, I discussed this professional transformation a lot with my friend, a very cool coder whom I look up to (https://github.com/0xhyperdust).

Like many of our acquaintances, we talked about what our favorite activity (coding) will become in a few years and what we should do right now to stay in demand.

One of our main hypotheses was that in 2–3 years we would become AI operators, whose role is to understand the global and local logic of a project, set tasks, write the architectural parts of the code, and step in when something goes wrong.

However, this article offers a different perspective on the present and the likely future.

I’m very curious about the community’s opinion, and about what reality will actually look like in 2–3 years.

Below is the translation (done by ChatGPT 4o):

TL;DR: I decided to use AI only manually, because with regular use I felt myself slowly losing professional competence. I advise everyone to be careful about making AI an integral part of their workflow.

April 1, 2025

At the end of 2022 I started using AI tools for the first time, even before the first version of ChatGPT appeared. In 2023 I began using AI actively in my workflow. At first I was completely amazed by the capabilities of large language models. Being able to paste an incomprehensible C++ compiler error along with the code and instantly get an explanation of where the error was seemed like magic.

As GitHub Copilot grew more powerful, I used it more and more. I tried various LLM integrations directly in the editor, and AI became part of my workflow.

But at the end of 2024 I deleted all LLM integrations from my editors. I still use language models sometimes, and I think AI can be extremely useful for many programmers. So why did I stop using AI editors?

Tesla FSD (Full Self-Driving)

From 2019 to 2021 I drove a Tesla. I wouldn’t buy one again, not for political reasons, but simply because the build quality is poor, the price is too high, and repairs and maintenance are a nightmare.

When I first bought a Tesla, I started using Full Self-Driving (FSD) whenever possible. It was amazing: you get on the highway, turn on FSD, use the turn signal—and the car changes lanes by itself. Driving boiled down to just making it to the highway, turning on autopilot, and listening to podcasts.

If you drive a lot, you know: on the highway, attention works “in the background”—staying in the lane, keeping the speed—it all happens almost automatically, without the focus needed when reading a book. It’s more like going for a walk.

But when I switched to regular cars in 2021, I was amazed: driving once again required my full attention, especially during the first month. I had to relearn “automatic driving.”

My dependence on FSD had cost me my own ability to drive “on autopilot.”

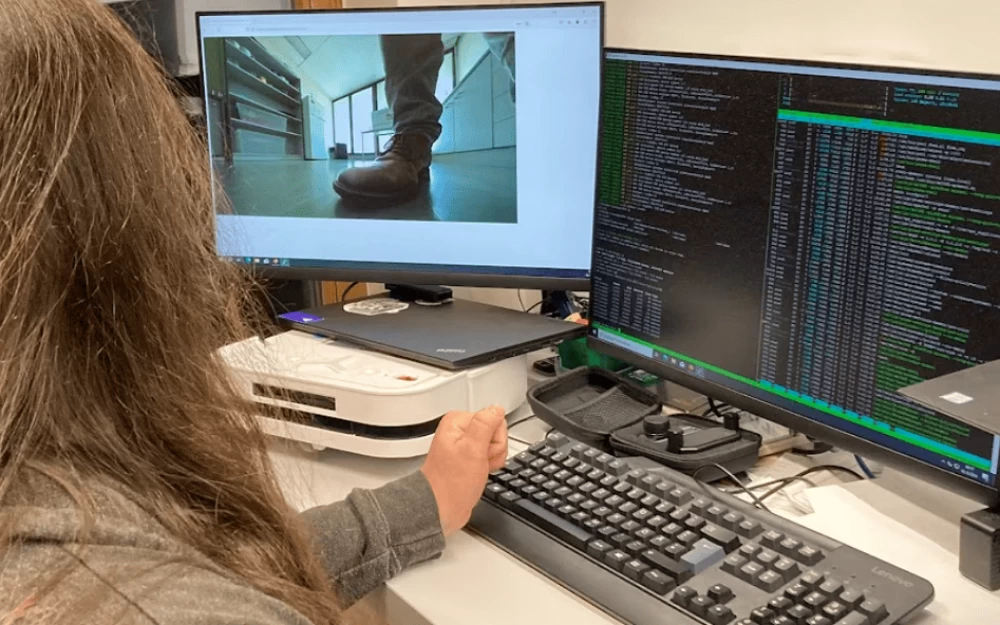

Experience with AI editors

It was similar with AI editors. At first, I worked much faster. My work wasn’t very complex, and AI was like a Tesla on FSD: all I did was steer.

In my free time, I started a side project on my work computer, using my personal account. That account had no access to Copilot or other AI tools. That’s when I felt the same thing I had felt with the Tesla.

I had become worse at basic development tasks that had come easily just a year before. I caught myself involuntarily waiting for the AI to write a function’s implementation for me. I even had to look up the syntax for unit tests again.

Even at my main job, AI started to become less useful. I lost interest and felt less confident in my own decisions. It was much easier to just “ask the AI.” But it couldn’t always help, even with good prompts. And because I wasn’t practicing the basics, I also became worse at solving complex problems.

Fingerspitzengefühl

Fingerspitzengefühl is a German word that literally means “feeling in the fingertips.” It stands for intuitive understanding, sensitivity to the situation, and mastery of detail.

It’s hard to define what “senior” means. But apart from soft skills, technical maturity shows up precisely in this feeling. The longer you work with a language, framework, or codebase, the more intuition you develop: a vague “this isn’t right” turns into a clear “this is how it should be.”

And it’s not only at the level of architecture. It’s in the details: which kind of pointer to use, when to add an assert, which standard-library facility to pick when there are several options.

By relying on AI, I started losing this intuition, and I say that as a lead developer. When I see the hype around “vibe coding,” I wonder: how do you plan to ever become a senior? How will you maintain a project if the AI is unavailable or too expensive?

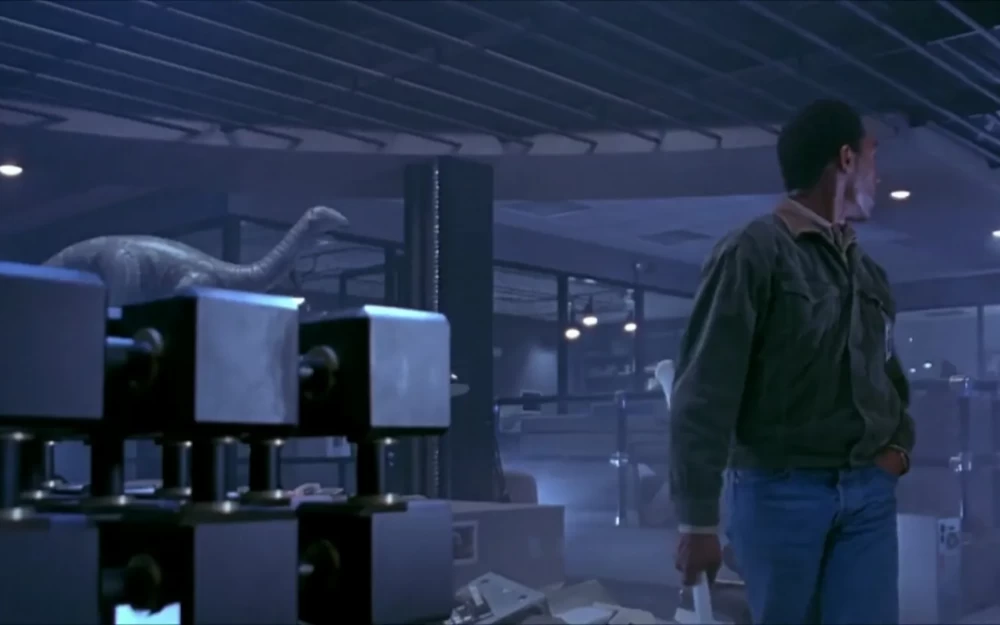

Sometimes AI just can’t cope

Yes, we now have large context windows, reasoning models, and agents. But suppose someone messages you on Slack:

“The website works, but the app crashes in prod. Locally it’s fine, and there’s nothing in Sentry.” Good luck solving that with AI.

Maybe it’ll manage, maybe it won’t. But if it doesn’t—will your reply be “Sorry, Cursor didn’t get it, I’ll try another prompt tomorrow”?

You can totally live without AI

Sometimes it feels like soon there will be no work without AI. But this mantra of “everything will change in 3-6 months” has been repeated for over two years already. I stopped believing CEOs promising functionality “in 3-6 months” a long time ago. In 2019 I paid €6400 for FSD, which still doesn’t work as promised.

AI still can’t reliably handle projects more complicated than a university assignment. With legacy code, enterprise systems, internal DSLs, and non-standard frameworks, AI is often powerless. In some fields, using AI is outright forbidden for a variety of reasons.

Security isn’t a joke

When you’re implementing authentication and authorization, for example with JWT or RBAC, adding “and make it secure” to the prompt won’t protect you from vulnerabilities if the model was trained on insecure GitHub code. Security is your responsibility. Critical code should be written and reviewed by humans.

If we end up in a world where one AI writes the code, another reviews the PRs, and a third deploys it, we will see a surge in security problems.

Where I draw the line

I still use AI, but consciously. It isn't integrated into my editor: I copy everything I give it by hand. I made this deliberately inconvenient so that I use it less.

Examples of tasks:

"Convert these Go tests to map"

"Make this calculation SIMD"

"If content-type is application/zlib, decode the body"

I set up models to show only the changes and give me instructions on how to apply them manually. That way, I still feel in control of the code.

AI for learning

However, AI is great for learning. I have niche interests, and it's not always easy to find material on them. Adding networking to an engine built on an ECS (Entity Component System) architecture, for example, is a rare topic. I ask AI things like:

"Explain this assembly code"

"What does this shader do?"

"Which books explain client/server desynchronization in game engines in detail?"

The results are mixed, but still better than search engines.

Plus, it's cheap. I use a desktop app with several LLMs, and in three months, I've spent only $4 on credits.

No AI on my personal website

There are no AI-generated images or texts on my website. Personally, I don't like AI art: it's soulless. AI-generated texts are boring; they lack character. What a human makes has more value to me.

Do what you love

It's important to remember that life is not only about efficiency. There is enjoyment too. If you love programming, do it yourself, even if AI does it better.

In 1997, Deep Blue defeated Kasparov. But people still play chess.

It's the same with programming. It's not just work. It's enjoyment.

Advice for beginners

Don't become an "eternal junior" who only writes prompts. If you want to be a programmer, learn to program. Be curious, dig deep. It pays off.

Understanding what's happening under the hood is a thrill. Don't be a "prompt engineer" (if that's even engineering).

And even if AI is smarter than you, never trust it blindly. Don't build your whole process around it. Every now and then, try working without it for a couple of days.

The better you are at programming, the more often AI will get in your way.

If you're learning now and building real skills, you will be able to handle the chaos that "vibe coding" creates. If you don't want to go deep, maybe programming just isn't for you. Any task that can be solved on vibes alone will be the first to be automated as AI develops.

And remember: if you can't write code without AI, you can't write code.

Conclusion

When you use AI, you trade knowledge for speed. Sometimes that's justified. But even the world's best athletes still do the basic exercises, and programming is no different: to solve complex problems, you need to keep practicing the simple ones. You need to keep the axe sharp.

We are still far from AI taking our jobs. Many companies are stoking FOMO to sell their products, attract investors, secure funding, and launch the next "revolutionary" model.

AI is just a tool. It is neither good nor bad. What matters is how you use it. Use it wisely, but don’t rely on it. Make sure you’re still able to work without it. Don’t release code you don’t understand. Don’t replace your own thinking.

Stay curious. Keep learning.

/// This is the end of the translation

It might also be interesting to run a poll on how many fewer programmers will be needed in 3–5 years.
