The impact of AI on Earth and space research

Hello, this is Elena Kuznetsova, a business process automation specialist at Sherpa Robotics. Today I have prepared a translation of an article on how AI is applied to scientific research of space and planet Earth.

In my own work I automate routine processes, though in a different field, so I was curious how AI takes over scientific routine and already gives us knowledge that would otherwise take many years to obtain. I invite you to read the views of researchers Katie Bouman, Matthew Graham, John Dabiri, and Zachary Ross. At the end of the article, I share my thoughts on how AI pushes us to develop and to focus on more complex tasks.

Machine Learning and the Event Horizon Telescope. Creating Images of Black Holes

Presented by Katherine Bouman, Assistant Professor of Computing and Mathematical Sciences, Electrical Engineering, and Astronomy; Rosenberg Scholar and Heritage Medical Research Institute Investigator.

Studying black holes is not only exciting but also extremely challenging. The network of telescopes known as the Event Horizon Telescope (EHT) collects data on black holes by synchronizing several observatories scattered around the world. Together they form one giant virtual telescope that helps us look into the most distant corners of the Universe.

The collected data has "gaps" in coverage, which leads to uncertainty in image formation. When combining data from different telescopes, we apply algorithms to fill in these gaps. However, assessing this uncertainty is very laborious. For example, it took the international team several months to obtain an image of the black hole M87*, and the more recent image of Sgr A*, at the center of our Galaxy, took years.

This is where machine learning comes to the rescue, specifically generative deep learning models. These models can not only create a single image but also generate an entire set of possible images corresponding to the complex data we collect. This approach allows for more effective uncertainty assessment and extraction of more information from the available data.

One of the exciting applications of machine learning is the optimization of sensors for computational imaging. We are developing machine learning methods to determine locations for new telescopes that will be integrated into the EHT. Joint design of telescope placement and image reconstruction software allows us to extract more information from the collected data and obtain higher quality images with less uncertainty. This concept of co-designing computational imaging systems is relevant not only for EHT but also for medical imaging and other fields.

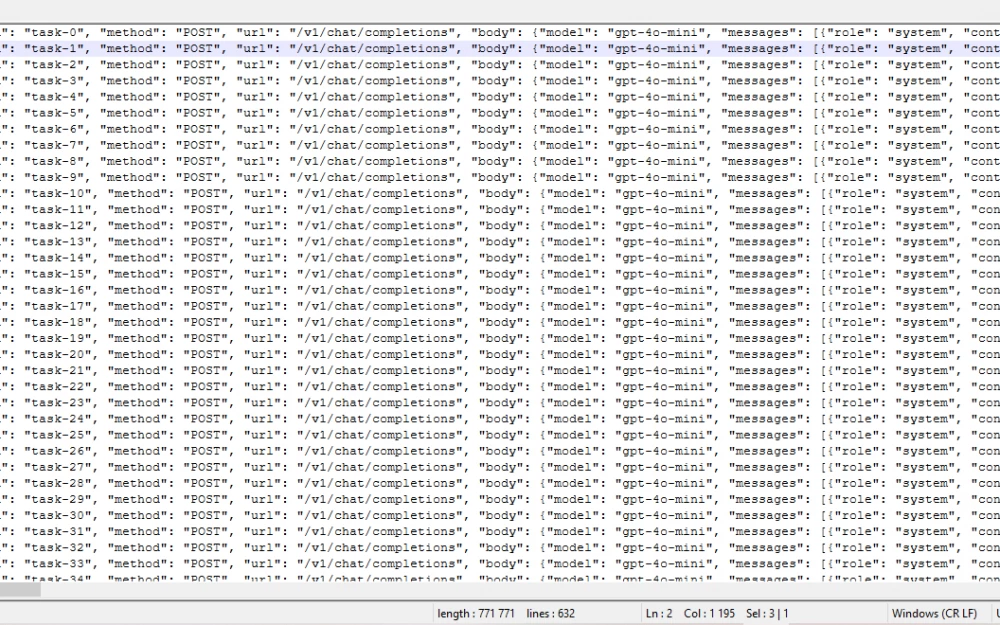

Caltech astronomy professors Greg Hallinan and Vikram Ravi lead the DSA-2000 project, in which 2000 telescopes in Nevada will map the entire sky at radio wavelengths. Unlike the EHT, where data gaps have to be filled in, this project will collect a huge amount of information: about 5 terabytes per second. All processing stages, such as correlation, calibration, and imaging, must be fast and automated.

At that rate there is no time to process the data with traditional methods, so the team uses deep learning to clean the images automatically, and users get results just a few minutes after data collection.

How AI is Changing Astronomy. Matthew Graham's View on the Evolution of Astronomical Research

Astronomy is undergoing significant changes thanks to big data, as Matthew Graham, scientific director of the Zwicky Transient Facility project, explains.

Over the past 20 years, astronomy has changed significantly, largely because of the need to process large volumes of data. Modern datasets are too complex, too large, and sometimes arrive from telescopes too quickly, at gigabytes per second.

Today, scientists are actively turning to machine learning methods. Large new datasets are becoming a key factor in the search for rare objects. For example, if you are looking for a one-in-a-million object, a dataset of a million objects should contain about one of them. But in a project like the Rubin Observatory's Legacy Survey of Space and Time, where 40 billion objects are expected, you can expect to find as many as 40,000 such "one in a million" objects.
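The arithmetic behind these numbers can be checked with a short sketch. The function names below are illustrative, and the second function assumes that objects are independent and equally likely to be rare, which the article does not state.

```python
import math

def expected_count(n_objects: int, rate: float) -> float:
    """Expected number of rare objects in a survey of n_objects."""
    return n_objects * rate

def prob_at_least_one(n_objects: int, rate: float) -> float:
    """Chance of finding at least one rare object.

    Assumes every object is independently rare with probability `rate`.
    Computes 1 - (1 - rate)**n_objects in a numerically stable way.
    """
    return -math.expm1(n_objects * math.log1p(-rate))

RATE = 1e-6                    # "one in a million"
MILLION_SURVEY = 1_000_000
RUBIN_LSST = 40_000_000_000    # 40 billion objects, as quoted in the text

print(expected_count(MILLION_SURVEY, RATE))     # about 1 object on average
print(prob_at_least_one(MILLION_SURVEY, RATE))  # roughly 0.63
print(expected_count(RUBIN_LSST, RATE))         # about 40,000 objects
```

Under that independence assumption, a million-object survey contains one such object on average but has only about a 63% chance of containing any at all, whereas at 40 billion objects the rare class becomes a population of tens of thousands.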

Personally, I am interested in active galactic nuclei — regions where a supermassive black hole is located at the center of a galaxy, surrounded by a disk of gas and dust that falls into it and makes it incredibly bright. I can explore a dataset to find such objects. I have an idea of what patterns to look for, and I am developing a machine learning approach for this task. I can also model what these objects should look like and then train an algorithm to find similar objects in real data.

Today we use computers for routine work that was previously done by undergraduate or graduate students. However, we are gradually moving to more complex areas where machine learning is becoming more sophisticated. We are starting to ask computers: "What patterns do you find in this data?"

Machine learning not only helps in data processing — it opens new horizons for astronomy, allowing us to identify previously unnoticed patterns and make more accurate predictions. How will this affect our understanding of the universe? This is a question we all hope to answer in the near future.

How is AI changing ocean monitoring and research? Engineer John Dabiri's perspective

Engineer John Dabiri describes how modern technologies, including artificial intelligence, can radically change the approach to ocean research and monitoring.

Surprisingly, only 5-10% of the ocean's volume has been explored. Traditional ship-based measurements are expensive, so scientists and engineers have increasingly turned to underwater robots to survey the ocean floor, study objects of interest, and analyze the chemical composition of the water.

Our team is developing technologies to create a swarm of small autonomous underwater drones and bionic jellyfish that will help explore the ocean. Drones face complex currents, and fighting them leads to energy loss or knocks them off course. Instead, we want these robots to use ocean currents just as hawks use thermal currents in the air to reach great heights.

However, in complex ocean currents we cannot calculate and control each robot's trajectory the way it is done for spacecraft.

When it comes to deep-sea exploration, steering drones with a joystick from the surface, 20,000 feet above them, is almost impossible. We also cannot send them data about local ocean currents, because we cannot detect the robots from the surface. As a result, ocean drones have to be able to make movement decisions on their own.

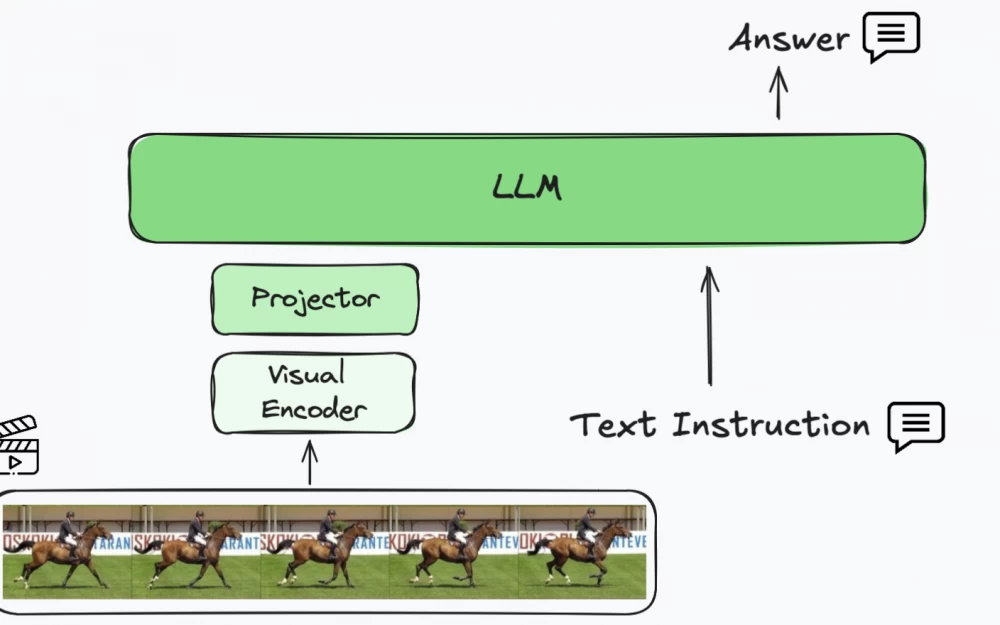

To this end, we have equipped the drones with artificial intelligence, specifically deep reinforcement learning networks running on low-power microcontrollers about a square inch in size. Using data from the drones' gyroscopes and accelerometers, the AI repeatedly calculates trajectories. With each new experiment, it learns how to move and maneuver effectively in various currents.
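To make the idea of learning to ride currents concrete, here is a toy sketch. It is not the team's system: it swaps the deep networks for tabular Q-learning, replaces sensor data with a two-lane grid world, and every number in it (lane layout, energy costs, rewards) is an assumption chosen for illustration.

```python
import random

N_COLS = 6                # track length; the goal is the last column
CURRENT = (1, 0)          # lane 0 carries the drone forward, lane 1 is still water
ACTIONS = ("drift", "thrust", "switch")
COST = {"drift": 0.0, "thrust": 1.0, "switch": 0.2}   # assumed energy costs

def step(lane, col, action):
    """Apply one action and return (lane, col, reward, done)."""
    if action == "switch":
        lane = 1 - lane
    else:
        push = 1 if action == "thrust" else 0
        col = min(col + CURRENT[lane] + push, N_COLS - 1)
    done = col == N_COLS - 1
    # Small time penalty, minus energy spent, plus a bonus for arriving.
    reward = -0.1 - COST[action] + (10.0 if done else 0.0)
    return lane, col, reward, done

def train(episodes=5000, alpha=0.5, gamma=0.95, epsilon=0.3, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(l, c): [0.0] * len(ACTIONS) for l in (0, 1) for c in range(N_COLS)}
    for _ in range(episodes):
        lane, col = 1, 0                      # always start in still water
        for _ in range(50):
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[(lane, col)][i])
            nl, nc, r, done = step(lane, col, ACTIONS[a])
            target = r if done else r + gamma * max(q[(nl, nc)])
            q[(lane, col)][a] += alpha * (target - q[(lane, col)][a])
            lane, col = nl, nc
            if done:
                break
    return q

def greedy_route(q, max_steps=20):
    """Follow the learned policy from the start and list the actions taken."""
    lane, col, route = 1, 0, []
    for _ in range(max_steps):
        a = max(range(len(ACTIONS)), key=lambda i: q[(lane, col)][i])
        route.append(ACTIONS[a])
        lane, col, _, done = step(lane, col, ACTIONS[a])
        if done:
            break
    return route

q_table = train()
print(greedy_route(q_table))
```

With these assumed costs, the learned route should be a single lane switch into the current followed by drifting to the goal, which mirrors the hawk-and-thermal idea: spend a little energy to reach the flow, then let it do the work.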

Thus, the introduction of artificial intelligence into underwater technology opens up new horizons for ocean exploration. We are on the verge of an era when drones will be able to autonomously explore the mysterious depths of the ocean, providing us with new data and discoveries that once seemed inaccessible. How will this affect our understanding of ocean ecology and dynamics? There are more questions than answers, but the future looks promising.

How is machine learning changing earthquake monitoring? Seismologist Zach Ross's view

The word "earthquake" conjures up images of powerful jolts. However, it is important to remember that before and after this moment, there are small underground tremors. To understand the whole process, it is necessary to analyze all earthquake signals and identify the overall behavior of these vibrations. The more data we collect on tremors and earthquakes, the clearer the picture of the complex network of faults within the Earth responsible for earthquakes becomes.

Monitoring earthquake signals is not an easy task. Seismologists work with much larger volumes of data than those available to the general public through the Southern California Seismic Network. This volume of data is too large to handle manually. In addition, we do not have a simple way to separate useful earthquake signals from "noise" signals, such as sounds from loud events or passing trucks.

Previously, students in our seismology lab spent a lot of time measuring the properties of seismic waves. Although this process can be mastered in a few minutes, it is not very interesting to do. These routine tasks become an obstacle to real scientific analysis. Students would prefer to use their time for more creative tasks.

Now artificial intelligence helps us recognize the signals we are interested in. First, we train machine learning algorithms to detect various types of signals in data that have been carefully annotated manually. Then we apply our model to new incoming data. The model makes decisions with the same accuracy as seismologists.
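The two-step workflow described here, training on labeled examples and then applying the model to new data, can be sketched on synthetic signals. Everything below is an illustrative assumption: the traces are simulated rather than real seismograms, the single hand-made feature stands in for what a deep network would learn, and a one-feature logistic regression stands in for the actual models.

```python
import math
import random

def make_trace(rng, quake, n=200):
    """Synthetic trace: Gaussian noise, plus a decaying burst if quake is True."""
    trace = [rng.gauss(0.0, 1.0) for _ in range(n)]
    if quake:
        start = rng.randrange(20, 120)
        amp = rng.uniform(6.0, 10.0)
        for k in range(60):
            trace[start + k] += amp * math.exp(-k / 20) * math.sin(0.6 * k)
    return trace

def feature(trace, win=20):
    """Largest short-window mean amplitude divided by the whole-trace mean."""
    mags = [abs(x) for x in trace]
    long_avg = sum(mags) / len(mags)
    short_max = max(sum(mags[i:i + win]) / win
                    for i in range(len(mags) - win + 1))
    return short_max / long_avg

def make_dataset(rng, size):
    """Alternate noise (label 0) and quake (label 1) examples."""
    labels = [i % 2 for i in range(size)]
    feats = [feature(make_trace(rng, bool(y))) for y in labels]
    return feats, labels

def fit_logistic(xs, ys, lr=0.5, epochs=500):
    """One-feature logistic regression fitted by batch gradient descent."""
    mean = sum(xs) / len(xs)
    std = math.sqrt(sum((x - mean) ** 2 for x in xs) / len(xs))
    w = b = 0.0
    for _ in range(epochs):
        gw = gb = 0.0
        for x, y in zip(xs, ys):
            z = (x - mean) / std
            p = 1.0 / (1.0 + math.exp(-(w * z + b)))
            gw += (p - y) * z
            gb += p - y
        w -= lr * gw / len(xs)
        b -= lr * gb / len(xs)
    # Return a function giving the estimated probability that a trace is a quake.
    return lambda x: 1.0 / (1.0 + math.exp(-(w * (x - mean) / std + b)))

rng = random.Random(0)
train_x, train_y = make_dataset(rng, 200)   # step 1: labeled training data
test_x, test_y = make_dataset(rng, 200)     # step 2: new, unseen data
model = fit_logistic(train_x, train_y)
hits = sum((model(x) > 0.5) == bool(y) for x, y in zip(test_x, test_y))
accuracy = hits / len(test_y)
print(f"held-out accuracy: {accuracy:.2f}")
```

The held-out set plays the role of new incoming data: the model never sees its labels during training, so its accuracy there is the figure to compare against human annotators.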

Thus, the introduction of machine learning into seismology opens new horizons for a deeper understanding of earthquakes and their precursors. AI lets us spend less time on routine tasks and focus on more important things: data analysis and interpretation, which can ultimately lead to better prediction and understanding of seismic processes. The future of seismology looks promising, and we are only beginning to realize the potential that new technologies open up.

Comment

This article made me think. We constantly talk about how AI reduces the time tasks take and lets us focus on more important processes. But which processes are more important?

We can obtain data for analysis faster, draw conclusions from it, and apply what we learn to decision-making at unprecedented speed.

It turns out that the important process AI frees up time for is precisely decision-making: setting goals, formulating tasks, and so on.

AI can give us an answer to the question, but it is the person who decides what to do with this information.

The main difference between artificial intelligence and natural intelligence is that AI has no will. AI does not ask on its own what lies in the depths of the ocean, in the bowels of the Earth, or in the vast expanses of space.

The same holds on a more down-to-earth level: AI will not make money or create a business by itself. But a person with AI as a tool not only gains new, previously unavailable opportunities, but also faces new requirements for their own level of development and speed of adaptation to change.

Managing AI has become a new competence. We already encounter so-called neuro-employees (AI employees): we have to decide how to use them, train staff to work with them, write technical specifications for developers describing their functionality, and so on.

I would say that AI takes over the functions we mastered long ago but perform slowly, and confronts us with the need for more complex activity: finding a goal and striving to achieve it.
