What to really expect from AI and why it will start generating profits sooner than it seems

The VK Cloud team translated an interview with Aidan Gomez, CEO and co-founder of Cohere. Cohere is one of the most talked-about AI startups right now, but its focus differs from that of many others. Unlike OpenAI, for example, it does not make consumer products at all. Instead, Cohere targets the corporate market and builds AI products for large companies. In this interview, you will learn what sets this approach apart, what AI can and cannot do, and what is happening in the corporate AI market.

What we'll talk about

First, Aidan and I talked about how this approach differs and why it gives Cohere a much clearer path to profitability than some of its competitors have. Compute is expensive, especially in AI, but Cohere's structure gives the company an advantage: it doesn't need to spend as much money building its models.

Another interesting topic is the benefit of competition in the corporate market. Many high-tech industries are heavily concentrated, with only a handful of suppliers for key services. If you need GPUs for your AI models, you are most likely buying from Nvidia, ideally a whole stack of Nvidia H100s if you can get them.

But Aidan notes that his corporate clients are both risk-averse and price-sensitive: they want Cohere to operate in a competitive market because that lets them negotiate better deals instead of being locked into a single supplier. So Cohere had to be competitive from the very start, which, according to Aidan, has helped the company thrive.

We also talked a lot about what AI can and cannot do. We agreed that it has definitely not reached its peak yet. Even if you train AI on a narrow, deep, domain-specific dataset, such as contract law, human oversight is still needed. But Aidan believes that AI will eventually surpass human expertise in areas such as medicine.

There is a real tension here that we kept coming back to throughout this episode. Until recently, computers were deterministic: give them a certain input, and you know exactly what output you will get. It is predictable. But if we all start talking to computers in human language and getting answers in it... well, human language is messy. That changes the whole process of figuring out what to put in and what we will get out. I was very curious whether Aidan thinks modern LLMs can live up to all our expectations of AI, given this ambiguity.

Now let's move on to the interview itself.

I'm glad we had the chance to talk. Cohere seems to have a unique approach to AI, and I want to discuss it along with the competitive landscape. I can't wait to hear whether you think the market is a bubble.

I'll start with a very big topic. You are one of the eight co-authors of the paper that started it all, "Attention Is All You Need." It describes the Transformer, developed at Google (translator's note: the Transformer is a neural network architecture invented by Google researchers in 2017). The T in GPT stands for Transformer.

Documentaries about bands often fall back on the same cliché: first the kids are playing instruments in a garage, and then suddenly they're adults playing a stadium. Nobody ever shows what happened in between.

When you wrote the paper and developed the transformer, you were "in the garage," right? When did you realize that this technology would become the foundation of the modern AI boom?

I don't think I did realize it. While we were working on it, it felt like an ordinary research project. We seemed to be making progress on translation, which is what we built the transformer for, but that was a fairly well-understood problem. We already had Google Translate; we wanted to improve it a bit. Creating the transformer architecture raised accuracy by a few percent, and I thought that was it. That was our contribution: we made translation a bit better. Only later did we notice that the community was picking up our architecture and applying it to many tasks we had never considered when we created it.

It took about a year for the community to notice our work. First it was published and presented at an academic conference. Then a snowball effect set in: everyone started adapting it to new use cases. People applied it to other natural language processing (NLP) tasks. Then we saw it used for language modeling and language representation. That's when everything started to change.

This is a very familiar process for any new technology:

1. People develop a new technology for a specific purpose.
2. Many people start using it.
3. The purpose shifts, and use cases go beyond what the inventors envisioned.
4. The next version of the technology adapts to what users are actually doing.

The same shift happened with transformers and large language models. But the gap between what the technology can do and what people expect of it seems to be widening.

Since you were there at the very beginning and saw this first shift, do you think our expectations are outrunning what the technology can actually do?

Expectations are rising dramatically, and it's funny, because the technology really has improved significantly and become more useful.

When we created the transformer seven years ago, no one imagined where it would lead. Everything happened much faster than expected. But that only raises the bar. It's one thing to have a language model, but language is our interface to intelligence, so we very easily anthropomorphize the technology.

You expect the same things from the technology that you would from a human. And that makes sense: it behaves in a way that really does seem smart. Everyone working on this technology, building language models and bringing AI into the real world, is striving for the same thing.

Over the past seven years, there has been a lot of skepticism about AI: "It won't get better," "The methods and architecture you use are wrong," and so on.

Critics said: "Well, a machine can't do this." Three months later, the model already does it. Then they say: "Okay, it can do this, but it won't do that..." This has gone on for all seven years. This technology keeps exceeding expectations.

Nevertheless, we have a long way to go. I think the technology still has flaws. Since interacting with it is very similar to communicating with a human, people overestimate the technology or trust it more than they should. They start using it in scenarios for which it is not yet ready.

This brings me to one of the key questions I am going to ask everyone working in the field of AI. You mentioned intelligence, mentioned capabilities, said the word "logic." Do you think that language and intelligence in this context are the same thing? Or do they develop differently in technology: computers are getting better at using language, but intelligence is growing at a different rate or may have already reached its limit?

I don't think intelligence and language are the same thing. In my opinion, understanding language requires a high level of intelligence. The question is whether these models understand the language or just imitate it.

This touches on another very famous Google paper, the stochastic parrots paper. It caused a lot of controversy. It argues that models simply repeat words without any deeper intelligence, and that by repeating words they reproduce the biases in the data they were trained on.

Intelligence helps you deal with this, right? You can learn a lot, and your intelligence will help you surpass what you have learned. But do you see that models are capable of surpassing what they were trained on? Or will they never go beyond it?

I would say that people also repeat a lot and are full of biases. To a large extent, that is what the intelligent systems we know, namely people, do. There is a saying that each of us is the average of the 10 books we have read or the 10 people we spend the most time with. We model ourselves on our knowledge of the world.

At the same time, people are truly creative. We create things we have never seen before. We go beyond the training data. I think this is what they mean when they talk about intelligence — the ability to discover new truths. It is something more than just repeating the already known. I think models do not just repeat, they are able to extrapolate beyond what we have shown them, recognize patterns in the data, and apply these patterns to new data that has not been encountered before. At this stage, we can say that we have gone beyond the stochastic parrot hypothesis.

Is this unexpected behavior for models? Did you think about this when you started working with transformers? You said it was a seven-year journey. When did you realize this?

There were several moments in the very early stages. At Google, we started training language models with transformers. We were just experimenting, and it was nothing like the language models you interact with today. It was trained only on Wikipedia, so all it could write was Wikipedia articles.

Perhaps it was the most useful version. [Laughs]

Yes, perhaps. [Laughs]

In those days, computers could barely put together a sentence correctly. Nothing they wrote made sense. There were spelling mistakes. There was a lot of noise.

But one day we seemed to wake up, sampled from the model, and it was writing entire documents as fluently as a human. It was a real shock to me, a moment of awe at the technology, and that moment keeps happening again and again.

I keep suspecting that all of this is just a stochastic parrot. Maybe the model will never reach the level of usefulness we want because it has some fundamental weakness. That we can't make it smarter. That we can't push its capabilities past a certain limit.

But every time we improve the models, they break through these limits. I think that will continue. We will be able to get everything we want from the models.

It is important to remember that we are not yet at the final stage. There are obvious areas of application for which the technology is not yet ready. For example, we should not allow models to prescribe medications to people without human supervision. But one day the technology may "mature". At some point, we will have a model with all of humanity's knowledge of medicine and we will trust it more than a living doctor. I consider this a very likely future.

But today I hope that no one takes medical advice from models and that a human is still involved in this process.

This is what I mean when I say the gap is widening. And this brings us to the discussion of Cohere.

I'll start with the so-called second act, because the second act traditionally gets so little attention: "I created something, and then turned it into a business, which took seven difficult years." Cohere is very focused on the corporate segment. Can you tell us about the company?

We develop models and provide them to large companies. We are not trying to compete with ChatGPT. We are trying to create a platform that will allow companies to implement AI technology. We are working in two directions.

First, we have only just reached the point where computers can understand language. Now they can talk to us. That means we can give almost every computing system, every product we have built, a voice interface and let people interact with it in their own language. We want to help companies adopt this technology and build a language interface into all of their products. That is the outward-facing direction.

The second direction is inward-facing: productivity. I think it is already clear that we are entering a new industrial revolution, one that frees humanity not from physical labor but from intellectual labor. Models are smart. They can do complex work that requires reasoning, deep understanding, and access to a lot of data, which is what many people do at work today. We can shift this work to models and significantly increase companies' productivity.

Human language is prone to misunderstanding; the history of humankind makes that plain. We use it quite loosely. Programming computers, on the other hand, has historically been very deterministic and predictable. How do you think about overcoming this contradiction? "We're going to sell you a product that makes interacting with your business less clear and more confusing, possibly more prone to misunderstanding, but also more comfortable."

Programming with language technology is not deterministic. It is stochastic, probabilistic. There is some probability that the model will say anything at all, even something completely absurd.

I believe our job as technology developers is to provide good control tools, so that the probability of an absurd response is one in many trillions and, in practice, you never run into it.
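Sampling temperature is one standard knob for the kind of control described here. The sketch below is a minimal Python illustration, not Cohere's method: the three logits are made-up scores for candidate next tokens, with the last one standing in for an "absurd" continuation. Dividing the logits by a lower temperature before the softmax sharpens the distribution, so the probability of the unlikely token falls by many orders of magnitude, and greedy decoding (always taking the top-scoring token) removes the randomness entirely.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities; temperature < 1 sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; use greedy_choice for T -> 0")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_choice(logits: list[float]) -> int:
    """Deterministic decoding: always pick the highest-scoring token."""
    return max(range(len(logits)), key=lambda i: logits[i])


# Made-up scores for three candidate next tokens; index 2 plays the "absurd" continuation.
logits = [4.0, 3.5, -2.0]

for t in (1.0, 0.5, 0.2):
    p_absurd = softmax_with_temperature(logits, t)[2]
    print(f"temperature={t}: P(absurd token) = {p_absurd:.1e}")

print("greedy pick:", greedy_choice(logits))
```

In this sketch a lower temperature never makes the absurd token impossible; it only makes it vanishingly unlikely, which is the "one in many trillions" framing rather than a guarantee.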

That said, I think companies are already used to stochastic elements and to doing business with them in the loop, because they employ people. We have salespeople and marketers. The world is resilient to this. We are resilient to noise, errors, and inaccuracies.

Can you trust every salesperson? Do they never mislead or exaggerate? In fact, sometimes they do. When a salesperson approaches you, you apply the appropriate filters to what they say: "I will not take everything you say as the absolute truth."

I think the world is actually very resilient to systems like this. It may be scary at first; you might say: "Computer programs are completely deterministic." But fully deterministic systems are the odd ones out. They are a new phenomenon, and we are really returning to something much more natural.

When a jailbreak exposes some chatbot's system prompt, I see a preamble that usually says something like: "You are a chatbot. Do not say this. Make sure you respond this way. This is completely unacceptable for you." These prompts leak all the time, and I always find them interesting to read. Every time, the same thought flashes through my mind: this is a crazy way to program a computer. You talk to it like an irresponsible teenager. You say: "This is your role," and you hope it will stick to it. But even after all these instructions, there is a one-in-a-trillion chance that the computer goes completely off the rails and says something crazy. And it seems to me that the internet community enjoys making chatbots fail.

You sell enterprise software. You go to large companies and say: "Here are our models. We can control them, which reduces chaos. We want you to rethink your business with these tools because they will do some things better. This will increase your productivity. This will make your customers happier." Isn't there a contradiction here? After all, this is a big cultural shock. Computers are deterministic, we have built the modern world based on their very deterministic nature. And now you are telling companies: "Spend money and risk your business to try a new way of interacting with computers." This is a big change. Are you succeeding? Is anyone enthusiastic? Do you encounter resistance? What are the responses?

This brings us back to the conversation about where to implement the technology and what it is suitable for, what it is reliable enough for. There are areas where we do not want to implement AI today because the technology is not stable enough. I am lucky that Cohere is a company that works closely with clients. We don't just throw a product on the market and hope it succeeds. We actively participate in the implementation and help clients think about where to deploy the technology and what changes can be achieved with it. I hope no one will trust these models with access to their bank account.

There are situations where determinism and very high reliability are really needed. You are not just going to install a model and let it do whatever it wants. In the vast majority of cases, it is about an assistant for a person. You have an employee who will use the model as a tool to do their job faster, more efficiently, more accurately. It helps them, but they are still involved in the process and check that the model is doing something reasonable. After all, the person is responsible for their decisions and what they do with this tool in their work.

I think you are talking about situations where a person is completely removed from the process and all the work is handed over to the models. But we are still far from that. We need much more trust and controllability, and the ability to set boundaries so that the models behave more deterministically.

You mentioned prompts and that you can control models through conversations.

Every time it seems like madness to me.

I think it's a kind of magic that you can effectively control a model's behavior through conversation. But beyond that, you can also set controls and restrictions outside the model. You can build a model that monitors another model, steps in, and blocks certain actions in certain cases. I think we need to move away from the idea that this is a single model, a single AI that we just hand control over to and then worry: what if it makes a mistake? What if everything goes wrong?

In reality, these will be much larger systems with monitoring tools that are deterministic and detect failure patterns. If the model does something specific, it is simply stopped. That is a completely deterministic check. And then there will be other models that observe the supervised model and give it feedback to keep it from making mistakes.
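A rough Python sketch of such a layered system, assuming hypothetical `generate` and `monitor` callables (neither is a real Cohere API): a deterministic rule check halts the output outright, a second model reviews each draft and feeds its critique back for a retry, and after a few failed attempts the request is escalated to a human. The regular expressions are illustrative placeholders, not a real policy.

```python
import re
from typing import Callable, Optional

# Illustrative hard rules (assumptions, not a real policy): any match stops the output.
BLOCKED_PATTERNS = [
    re.compile(r"\b\d{16}\b"),                    # something that looks like a card number
    re.compile(r"\bwire \$?\d+", re.IGNORECASE),  # an instruction to move money
]


def deterministic_check(text: str) -> Optional[str]:
    """Return the first rule the text violates, or None if it passes."""
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(text):
            return pattern.pattern
    return None


def guarded_generate(
    prompt: str,
    generate: Callable[[str], str],           # hypothetical: the supervised model
    monitor: Callable[[str], Optional[str]],  # hypothetical reviewer model; None means "looks fine"
    max_attempts: int = 3,
) -> str:
    feedback = ""
    for _ in range(max_attempts):
        draft = generate(prompt + feedback)
        if deterministic_check(draft) is not None:
            return "[blocked by rule]"  # hard stop, no retry
        critique = monitor(draft)
        if critique is None:
            return draft  # the reviewer accepted the draft
        feedback = f"\nReviewer feedback: {critique}"  # feed the critique back and retry
    return "[escalated to a human reviewer]"  # keep a person in the loop


# Usage with stub callables standing in for real models:
def stub_model(prompt: str) -> str:
    return "Revised answer" if "Reviewer feedback" in prompt else "Draft answer"


def stub_monitor(draft: str) -> Optional[str]:
    return "Please revise." if draft == "Draft answer" else None


print(guarded_generate("Summarize the contract.", stub_model, stub_monitor))
```

The ordering is a design choice in this sketch: the cheap, predictable rule check runs before the reviewer model, so a forbidden action is stopped even if the reviewer would have missed it.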

The programming paradigm, or technology paradigm, started with a single model that you define and apply to a task. Just a model and a task. Now there is a shift toward larger, more complex systems with more components. And that looks less like an AI doing the work for you and more like complex software that you deploy to do the work for you.

Currently, Cohere has two models: Cohere Command and Cohere Embed. You are obviously working on them: training them, developing them, applying them for clients. How much time does the company spend on what you describe: creating deterministic control systems and understanding how to link models together to ensure greater predictability?

Large companies are extremely risk-averse, even though they are always looking for opportunities. Almost every first conversation with a client starts with the question: "Is this system reliable?" We need to show them a specific use case. For example, helping lawyers draft contracts, which is what we do at Borderless.

In this case, you need a person in the loop. Under no circumstances should fully generated contracts be sent out without any review. We try to help with guidance and training on the kinds of oversight systems that can be built, whether that means people in the loop or automated systems that reduce risk.

With consumers, it's a bit different, but in large companies, the first question the board of directors or company management asks us is about risks and their prevention.

If you apply this to Cohere and how you develop your products, how is the company structured? Does the structure reflect this?

I think so. We have internal safety teams focused on making our models more controllable and less biased. At the same time, all of this is still, in a way, an educational campaign, because first of all it introduces people to what AI is.

This is a paradigm shift in how software and technology are built. To teach people about it, we create projects like LLMU, a kind of LLM university, where we show the pitfalls of this technology and how to avoid them. Our structure is designed to help the market get to know the technology and its limitations as it adopts it.

How many people work at Cohere?

It's always amazing to talk about this, but there are about 350 of us now, which seems crazy to me.

It's crazy only because you're the founder.

Just yesterday it was only Nick [Frosst], Ivan [Zhang], and me in this tiny... basically a closet. I don't know how many square meters it was, but it was a single-digit number. And a few weeks ago we had a company offsite, and there were hundreds of people building something together. I wonder: how did we get here? How did all this happen? It's really fun.

How are the responsibilities distributed among these 350 people? How many of them are engineers? How many are in sales? Enterprise customers require a lot of post-sales support. What does the structure look like?

The vast majority are engineers. The go-to-market team has recently grown significantly. I think the market is only now starting to actually implement this technology. Employees, clients, and users are starting to apply it.

Last year was the year of POCs (proofs of concept): everyone was getting to know the technology. We had been working on it for almost five years, but it was only in 2023 that the general public really noticed it, started using it, and fell in love with it. Corporations are made of people too, and they started thinking about how to use it in their business. They began experimenting to understand the technology better.

The first wave of these experiments is complete, and people like what they have built. Now the task is to take these pilots and actually deploy them at scale.

Scalability in what sense? "Okay, we can add another five clients without significant additional costs"? Computational scalability? How quickly you can build solutions for people? Or all of the above?

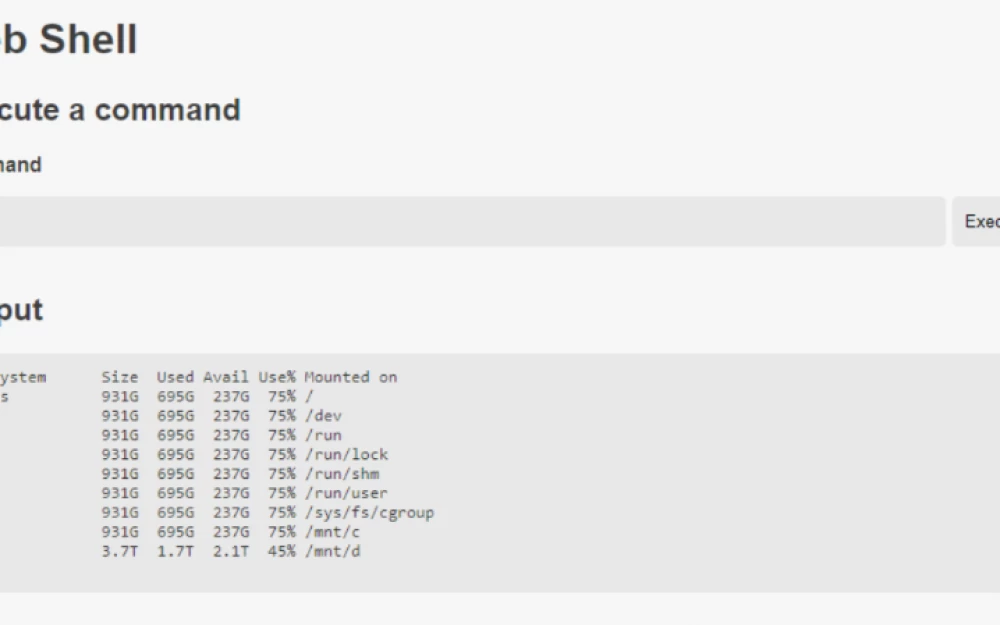

All of the above. The technology is expensive to build and expensive to run. We are talking about hundreds of billions, even trillions, of tunable parameters in a single model, so storing it all takes a lot of memory, and running it takes a huge amount of compute. In an experiment, you have five users: scalability doesn't matter, and neither does price. The only thing that matters is proving it's possible. But if you are happy with what you have built and plan to put it into production, you go to the finance department and say: "This is what it costs for five users. And we want to roll it out to all 10 million."
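To put "a lot of memory" in numbers, here is a back-of-the-envelope Python calculation for the model weights alone. It assumes the standard storage sizes (4 bytes per parameter in fp32, 2 in fp16/bf16, 1 in int8) and an 80 GB accelerator such as the standard Nvidia H100. Activations, the KV cache, and serving redundancy all add to these figures, so treat them as lower bounds, not deployment sizes.

```python
import math

# Standard storage sizes per parameter, in bytes.
BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2, "int8": 1}
GPU_MEMORY_GB = 80  # assumption: an 80 GB accelerator such as the standard H100


def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus(memory_gb: float, gpu_memory_gb: float = GPU_MEMORY_GB) -> int:
    """Lower bound on accelerators needed to fit the weights, ignoring all other memory use."""
    return math.ceil(memory_gb / gpu_memory_gb)


for params in (100e9, 1e12):  # "hundreds of billions" to "trillions" of parameters
    for dtype, nbytes in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(params, nbytes)
        print(f"{params / 1e9:,.0f}B params, {dtype}: {gb:,.0f} GB, at least {min_gpus(gb)} GPUs")
```

Halving the bytes per parameter halves the floor on hardware, which is one reason compression matters so much for the per-user economics described above.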

The numbers don't add up. It's not economically feasible. For Cohere, the priority was not to create the largest model, but to develop a model that the market could actually consume and that would be useful for corporations.

This means we focus on compression, speed, and scalability to build technology the market can actually use. In recent years, much of the research had not been deployed at scale, so scalability problems had not surfaced yet. But we knew that for corporations, which are very cost-sensitive and focused on economic efficiency, return on investment is what matters. If they don't see the potential for a return, they don't adopt it. It's that simple. So we focused on building a category of technology that actually meets market demand.

You started this work at Google. It has endless resources and huge operational scale. Google's ability to optimize and reduce the cost of such new technologies is very high due to its infrastructure and reach. What made you do this on your own?

Nick also worked at Google. We both worked for Geoff Hinton in Toronto. He is one of the pioneers of neural networks, the technology that underlies virtually all of modern machine learning, including LLMs, and virtually every artificial intelligence you encounter day to day.

We enjoyed working there, but it lacked the product ambition and the speed we felt were needed to realize our ideas. That's why we founded Cohere. Google was a great place for research, and I believe some of the smartest people in AI work there. But the world needed something new. The world needed Cohere and the ability to adopt this technology from a company not tied to any one cloud or hyperscaler. For corporations, choice is very important. If you are the CTO of a large retailer, you are probably spending half a billion or a billion dollars on one of the cloud providers for your infrastructure.

To make a good deal, you need to have a real ability to switch between providers. Otherwise, they will squeeze you endlessly. You hate buying proprietary technologies available only on one platform. You really want to keep the ability to switch between them. That's what Cohere offers. Since we are independent and not tied to any of the major clouds, we can offer this to the market, which is very important.

Let me ask you the Decoder question. Up to this point, we have talked a lot about the journey and the problems that need to be solved. You are the founder of the company, and you now have 350 people. How do you make decisions? What is your framework for making them?

My methodology... [Laughs] I flip a coin.

I'm lucky to be surrounded by people who are much smarter than me. Everyone at Cohere is better than me at what they do. I have the opportunity to ask for advice from people, whether it's the board of directors, the executive team, or individual employees, those who do the real work. I can ask and aggregate their opinions. When opinions are divided, the decision is up to me. Usually, I rely on intuition. But fortunately, I don't have to make many decisions because I'm surrounded by people who are much smarter than me.

But, of course, there are big decisions to be made. For example, in April you announced two models: Command R and Rerank 3. Their training is expensive. Their development is also costly. You will have to rebuild your technology to accommodate the new models and their capabilities. These are big steps.

It seems that every AI company is rushing to develop the next generation of models. How does your assessment of these investments change over time? You have talked a lot about the cost of proof of concept versus the operational model. The new models are the most expensive of all. What do you think about these expenses?

It is really very expensive. [Laughs]

Can you name a specific number?

I'm not sure I can name a number, but I can give an approximate order. To do what we do, you need to spend hundreds of millions of dollars a year. That's the cost. We believe we are extremely capital efficient. We are not trying to create models that are too big for the market or superficial. We strive to create tools that the market can actually consume. Therefore, it costs us less and we can focus our capital. Some companies spend many, many billions of dollars a year creating their models.

For us, this is extremely important. We are fortunate to be relatively small, so our strategy pushes us toward greater capital efficiency and toward building technology the market actually needs rather than speculative research projects. But, as you noted, it is extremely expensive, and we manage it by a) raising capital to pay for the necessary work, and b) focusing our technology. That is, instead of trying to do everything and solve every possible technical problem, we focus on the patterns or use cases that we believe will dominate or already dominate.

One example is RAG, retrieval-augmented generation. Models are trained on internet data, so they know a lot about public facts and the like. But you want the model to know about your company and your private information. RAG lets you place the model next to your private databases or knowledge stores and connect the two. This approach is used everywhere: anyone deploying this technology wants it to have access to their internal information and knowledge. We focused on being exceptionally good at it.
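The retrieve-then-generate pattern can be sketched in a few lines of Python. This is a toy under stated assumptions: a bag-of-words cosine similarity stands in for a real embedding model, the three "internal documents" are invented, and the function names are hypothetical. A production system would use learned embeddings (and typically a reranker) and would send the assembled prompt to a language model, a step this sketch leaves out.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words vector: a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = vectorize(query)
    return sorted(docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Ground the question in retrieved private context before it goes to a model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Hypothetical private knowledge base of a company.
internal_docs = [
    "Refunds are processed within 14 days of a return request.",
    "The Berlin office is closed on public holidays.",
    "Enterprise contracts renew annually unless cancelled 30 days in advance.",
]

print(build_prompt("How are refunds processed?", internal_docs, k=1))
```

The model itself never has to be retrained on the private documents; only the retrieval index changes when the company's information does.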

We are lucky to have the person who invented RAG, Patrick Lewis. That lets us solve these problems much more efficiently with our models. We will keep going, but this work still costs many millions a year. It is very capital intensive.

I want to ask whether this is a bubble. I'll start with Cohere, and then I want to talk about the industry as a whole. Hundreds of millions of dollars a year just to keep the company running, just to pay for compute. That's not counting salaries, and salaries in AI are quite high, so that's another pile of money. You need to pay office rent. You need to buy laptops. And a bunch of other expenses. But compute alone costs hundreds of millions of dollars a year. Do you see a path to profitability that justifies that much spending on compute?

Absolutely. We wouldn't create this if we didn't see it.

I think your competitors believe it will come someday. I'm asking you this question because you have built a business aimed at the corporate market. I assume you see a much clearer path to profitability. So what is it?

As I said, we use capital much more efficiently. We can spend just 20 percent of what some of our competitors spend on compute, and what we build still fits market needs perfectly. We can cut costs by 80% and offer something just as attractive to the market. That is the core of our strategy. Of course, if we did not expect to make billions in profit, we would not be building this business.

What is the path to billions? How long will it take?

I don't know how much I can say, but it's closer than it seems. There is a lot of spending in the market. Billions are certainly already being spent on this technology in the corporate sector today. Most of that spending goes to compute rather than to building models. But a lot of money is indeed being spent on AI.

As I said, last year was mostly about experiments, and a POC costs about 3 to 5% of what a production deployment requires. Now these solutions are starting to go into production. This technology is being used in products that reach tens and hundreds of millions of people. It is really becoming ubiquitous. So I think reaching the profits we need is a matter of a few years.
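The 3 to 5% figure implies a spending multiplier: production cost is the POC cost divided by that share. A minimal Python sketch of the arithmetic, using only the range quoted in the interview; the function name is hypothetical.

```python
def production_multiplier(poc_share: float) -> float:
    """How many times more a production rollout costs than its POC,
    given the POC's cost as a fraction of production cost."""
    if not 0 < poc_share <= 1:
        raise ValueError("poc_share must be in (0, 1]")
    return 1.0 / poc_share


# The range quoted in the interview: a POC costs about 3 to 5% of production.
for share in (0.03, 0.05):
    print(f"POC at {share:.0%} of production -> production ~{production_multiplier(share):.0f}x the POC")
```

This is why the shift from experiments to production matters so much for revenue: each pilot that graduates brings roughly 20 to 33 times its original spend.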

This is typical of the technology adoption cycle. Corporations are slow to adopt new things. They are very conservative. But once they have adopted something, it stays forever. It just takes time for them to gain confidence and decide to adopt the technology. It has been only about a year and a half since people became aware of this technology, and in that time we are already seeing adoption and serious production workloads.

Corporate technologies are very sticky. They never disappear. The first thing that comes to mind is Microsoft Office, which will always be around. Office 365 is the cornerstone of corporate strategy. Microsoft is a major investor in OpenAI. They have their own models. They are your major competitor. They sell Azure to the corporate sector. They are a hyperscaler. They can offer you a deal. They are integrating a voice interface so you can talk to Excel. I have heard from Microsoft employees more than once that they used to have people in the field waiting on analysts for answers, but now those people can just talk directly to the data and get the answer they need. That is very attractive.

You are a startup. Microsoft is 300,000 people, and you are 350. How do you win market share from Microsoft?

In a sense, they are competitors, but they are also partners and a channel for us. When we released Command R and Command R Plus, our new models, they were initially available on Azure. I see Microsoft as a partner in providing this technology to corporations, and I think Microsoft also sees us as a partner. I think they want to create an ecosystem based on many different models. I am sure they will have their own models, OpenAI models, ours, and it will be an ecosystem, not just Microsoft's own technologies. Look at the history of databases — there are outstanding companies like Databricks and Snowflake that are independent. They are not subsidiaries of Amazon, Google, or Microsoft. They are independent, and the reason for their success is that they have an incredible product vision. The product they create is truly the best option for customers. But at the same time, their independence is a key factor in their success.

I described why CTOs don't want to be tied to a single proprietary software platform: it causes a lot of problems and strategically weakens their negotiating position. I think the same will be true for AI, as models become an extension of your data. They embody the value of your data, which lies in being able to use it to build an AI model that benefits you. Data by itself has no value. Because we are independent, cloud providers like Microsoft Azure, AWS, and GCP want us to exist. They have to support us, because otherwise the market will reject them. The market will insist on independence, which makes it possible to switch between clouds. So competitors are forced to support our models. This is exactly what the market wants. I don't see them purely as competitors. I see them as partners who help bring our technology to market.

One of the reasons I was happy to talk to you is that you are so focused on the enterprise. There is a lot of certainty in what you describe. You have identified many customers with specific needs. They have money for this, and you can count how much. You can build your business around that money, keep talking to the market, and size your spending on technology to match the money the market actually has.

When I ask whether this is a bubble, I mean the consumer side. There are big companies building large consumer AI products. People pay $20 a month to talk to one of these models, and the developers spend more on training every year than you do. These are frontier companies; I'm talking about Google and OpenAI. But there is also an entire ecosystem of companies that pay OpenAI and Google to build on top of their models and then sell a consumer product at a lower price. That does not look like a sustainable business to me. Do you have the same concerns about the rest of the industry? It attracts a lot of attention and it's inspiring, but it doesn't look sustainable.

I think the people building their products on OpenAI and Google should build them on Cohere instead. We'll be the best partners.

[Laughs] I prepared this especially for you.

You have correctly identified that the priorities of technology providers can conflict with the interests of their users. Imagine a startup trying to build an AI application for the world on top of a product from one of its competitors, a company that is also building a consumer AI product. There is an obvious conflict here, and you can see how one of my competitors might steal or copy that startup's ideas.

That's why a company like Cohere needs to exist. There need to be companies like us that are focused on building a platform for others to build their applications on, and that are genuinely invested in those applications' success, without any conflict or competition.

That's why I think we are good partners: we let our users succeed without trying to compete with them or play on the same field. We just build a platform that you can use to adopt the technology. That's our entire business.

Is the whole industry a bubble?

No, I really don't think so. I don't know how often you use LLMs (large language models) in your daily life. I use them constantly, several times an hour, so how can it be a bubble?

Something can be useful and still not be economically viable. Let me give you an example. You talk a lot about the dangers of excessive AI hype, including in this conversation. You mentioned two ways to pay for compute: win customers and grow the business, or raise investment. I look at how some of your competitors raise money, and they do it with statements like: "We are going to build AGI on top of LLMs" and "We need to halt development because this technology could destroy the world." That seems quite inflated to me. It amounts to: "We need to raise a lot of money to keep training the next frontier model before we have built a business that can pay for the compute of the current one." But you don't seem too worried about it. Do you think it will somehow work itself out?

I don't know what to say except that I completely agree: this is a dangerous situation. The reality is that companies like Google and Microsoft can spend tens of billions of dollars on this, and that's fine. For them, it's a rounding error. Startups that choose this strategy have to either become a subsidiary of one of these big tech companies that print money, or build a very, very bad business to do it.

That's not what Cohere is aiming for. I largely agree with you: I think it's a bad strategy. Our strategy is different: build products and technology that fit our customers' needs. That's how every successful business has been built. We don't want to get too far ahead. We don't want to spend so much money that it becomes hard to turn a profit. Cohere aims to be a self-sufficient, independent business, so we have to steer the company in a direction where that is possible.

You called the idea that AI poses an existential risk absurd and a distraction. Why do you think it's absurd and what do you think are the real risks?

I think the real risks are the ones we discussed: deploying the technology too hastily. People trust it too much in situations where, frankly, they shouldn't. I fully understand the public's interest in "doomsday" or "Terminator" scenarios. They interest me too, because I've watched science fiction, and there it always ends badly. We've been told these stories for decades. It's a very vivid plot that really captures the imagination. It's exciting to think about, but it's not our reality. As someone technically literate and close to the technology itself, I don't see us moving in the direction these stories describe, the ones spread by the media and by many tech companies.

I would like us to focus on two things. First, overly hasty deployment, especially deployment without human oversight. This is what really worries regulators and governments. Will it harm people if the financial sector or healthcare deploys AI this way? These concerns are practical and grounded in the real technology.

Second, I would like a broad discussion of the opportunities, the positive side of the issue. I want someone to talk about what we can do, and want to do, with this technology. Yes, it is important to avoid negative scenarios, but I also want to hear public opinion and public debate about the positive opportunities. What good could we do?

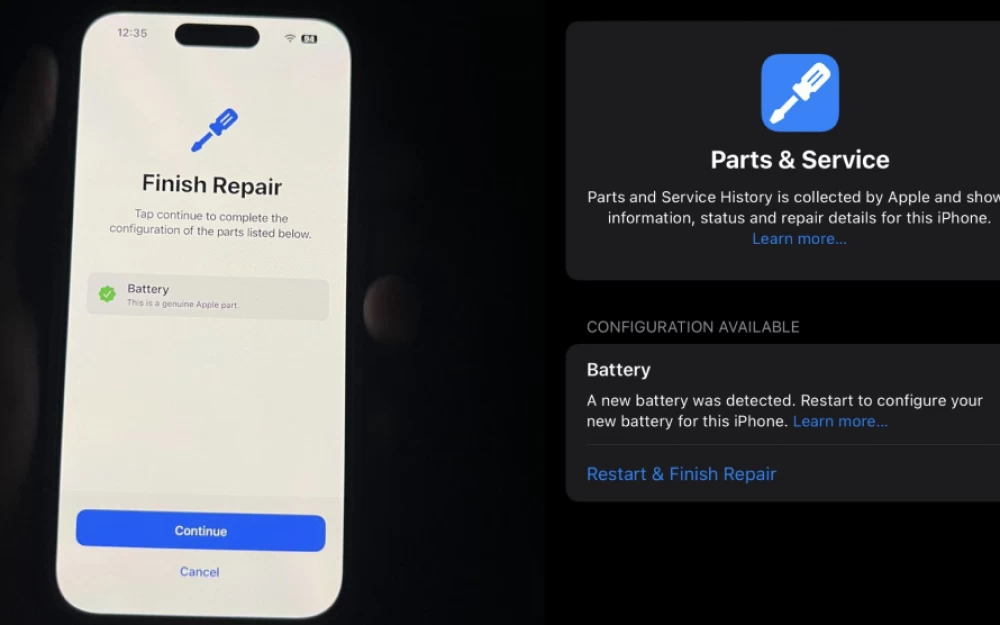

I think one example is medicine. Doctors spend 40% of their time on paperwork, which they do between patient visits. They finish an appointment, sit down at the computer, and write: "So-and-so came in with this. I remember that a few weeks ago it looked like that. We need to check this next time he comes. I prescribed this drug." We could clip passive microphones to doctors' clothes that record the conversation, so that by the next appointment a transcript and pre-filled documents are ready. Instead of writing everything from scratch, the doctor just reads it and says: "No, I didn't say this, I said that, and add this." It becomes an editing process. Instead of 40%, they spend 20%. Overnight, doctors have 25% more time. I think that's incredible. It's a huge benefit to the world. We didn't spend money training doctors. We didn't increase the number of places in medical schools. They simply get 25% more time by adopting the technology.

I want to find more ideas like this. Which applications should Cohere prioritize? What do we need to get better at? What should we solve to do good in the world? Nobody writes about this. Nobody talks about it.

As someone who writes headlines, I think, first, that there aren't enough examples yet to say this is real, and I think that makes people skeptical. Second, I listen to your reasoning and think: "Oh, damn, the owners of a lot of private clinics just added 25% more patients to their doctors' schedules."

I hear from our audience that they feel AI companies take a lot without giving enough back. This is a real problem. It has shown up mainly in the creative industries, where we see this anger directed at generative AI companies.

You work in the enterprise space, so you may not see it. But you have trained a lot of models, so you know where the data comes from. The people who created the original works you train on probably want to be compensated for it.

Oh, yes, of course. I fully support this.

Do you compensate those whose data you use?

We pay a lot for data. There are many different data sources. There is information we collect from the internet, and in those cases we try to respect people's preferences. If they say, "We don't want you to collect our data," we respect that. We check robots.txt when we crawl code. We look at the licenses attached to that code. We filter out data when people have clearly said, "Don't crawl this" or "Don't use this code." If someone emails us, "Hey, I think you collected X, Y, and Z, can you delete it?", we will, of course, delete it, and no future models will be trained on that data. We do not train on material if people don't want us to. I am very, very sympathetic to creators, and I really want to support them and build tools that make them more productive and help their creative process.

The flip side is that those same creators are watching the platforms they publish on fill up with AI content, and they don't like it. There is some competition here. This is one of the dangers you mentioned: a direct threat of misinformation spreading on social platforms, which so far seems insufficiently controlled. Do you have any ideas on how to reduce AI-generated misinformation?

One of the things that really scares me is that the democratic world is vulnerable to influence and manipulation in general. Take AI out of the picture, and democratic processes are still very vulnerable to manipulation. At the beginning of the conversation, we talked about how people are largely shaped by the last 50 posts they have seen, or something like that. You are very susceptible to whatever you perceive as the consensus. If you look at the world through social media and it seems to you that everyone agrees with X, then you think: "Okay, X is probably right. I trust the world. I trust the consensus."

I believe democracy is vulnerable and needs to be protected very carefully. How does AI change this? AI allows public opinion to be manipulated at a far larger scale. You can create a million fake accounts that promote one idea and manufacture a false consensus for the people consuming that content. That sounds really scary. It's terrible, a huge threat.

But I think it is preventable. Social media platforms are the new town square. In a real town square, you knew that the person standing on the box was probably a voting citizen like you, so you cared about what they said. In the digital town square, everyone is much more skeptical of everything they see; you don't take it at face value. Social media already has methods for checking whether an account is a bot, and we need to maintain them much more carefully so that people can see whether a particular account is verified. Is there really a person on the other side?

What happens when people start using AI for mass deception? For example, I publish an AI-generated image of a political event that did not happen.

When one person can generate many different voices saying the same thing to create the appearance of consensus, this can be stopped by verifying that there is a person behind each account. You will know that there is really a person on the other side, and this will stop the scaling of millions of fake accounts.

On the other hand, what you are describing is fake news, and that already exists. There is Photoshop. I think creating fake news will become easier. There is the idea of "media verification," but beyond that, you will trust different sources differently. If something is posted by a friend you know in real life, you trust it a lot. If it comes from some random account, you don't necessarily believe everything it claims. If it comes from a government agency, you trust it in a different way. If it comes from the media, your trust depends on the specific outlet.

We know how to assign appropriate levels of trust to different sources. This is definitely a problem, but it is solvable. People are already very well aware that other people lie.

I want to ask you one last question. It's the one I've thought about most, and it brings us back to the beginning.

We are placing a lot of commercial, cultural, and inspirational hopes on these models. We want our computers to do all of this, and the underlying technology is the LLM. Can it bear the weight of our expectations? There is a reason Cohere is approaching this in such a targeted way, but if you look more broadly, there is a lot of hope that LLMs will take computing to a new level. You were there at the origins. Do you think large language models can really withstand the pressure and expectations placed on them?

I believe we will always be dissatisfied with AI technology. If we realize in two years that the models are not inventing new materials fast enough to give us everything we need, we will be disappointed. I think we will always demand more, because that is human nature. At each stage, the technology will surprise us, rise to the occasion, and exceed our expectations, but there will never be a time when people say: "We are satisfied, this is enough."

I'm not asking when it will end. I'm asking: as the technology develops, do you think it will be able to withstand the pressure of our expectations? That it has the ability, or at least the potential, to actually deliver what people expect from it?

I am absolutely sure it will. There was a period when everyone said: "Models hallucinate. They make things up. They will never be useful. We can't trust them." Now, if you track hallucination rates over the years, they have dropped significantly; the models have become much better. With every complaint and every fundamental barrier, those of us who build and improve this technology have managed to exceed expectations. I expect this to continue. I see no reason why it wouldn't.

Do you see hallucinations ever going to zero? For me, that is the point when the technology becomes truly useful: you can start really relying on it once it stops lying. Right now, models hallucinate in genuinely funny ways. But there is always a moment, for me at least, when I think I can't fully trust it yet. Will there be a point when the hallucination rate is zero? Do you see that on the horizon? Do you see any technical developments that could get us there?

We humans have a non-zero hallucination rate, too.

Sure, but nobody trusts me to run anything. [Laughs] There's a reason I'm the one asking questions and you're the CEO. But I'm talking about computers: if you are going to put them in the loop like this, you want the hallucination rate to be zero.

No, I mean that people forget things, make things up, and get facts wrong. If you're asking whether we can get below the human hallucination rate, I think yes. That is definitely an achievable goal, because people hallucinate a lot. I think we can build something extremely useful for the world.

Useful or reliable? That's what I'm getting at: trust. How much we trust a person varies, of course; some people lie more than others. Historically, we have trusted computers to a very high degree. With some of these technologies, that trust has declined, which is really interesting. My question is: will we reach a point where we can fully trust a computer in a way we cannot trust a person? We trust computers to fly the F-22 because a person cannot fly the F-22 without a computer. If you said, "The F-22 flight computer will sometimes lie to you," we would not allow that. It's strange that we now have a new class of computers that we are supposed to trust a little less.

I don't think large language models should be prescribing medication or practicing medicine. But I promise you, if you come to me with a set of symptoms and ask me for a diagnosis, you should trust the Cohere model more than me. It knows much more about medicine than I do. Whatever I say will be much worse than what the model says. That is already true, right now. But neither I nor the model should be diagnosing people. Still, is the model more reliable? In that case, you really should trust the model more than this person.

In reality, you should trust an actual doctor who has gone through ten years of training. So the bar is here. Aidan is here. The model is slightly above Aidan. I am absolutely confident that we will reach the doctor's level, and at that point we can put a stamp on it and say the model is reliable: it is actually as accurate as the average doctor. One day it will be more accurate than the average doctor. We will get there with technology; there is no reason to believe we won't. But it is a process. It is not a binary choice between "you can trust the technology" and "you can't." The question is where you can trust it.

At the moment, in medicine, we really need to rely on people. But in other areas, AI can already be used. When a person is in the loop, the model is really just an aid: an additional tool that is genuinely useful for boosting your productivity, getting more work done, entertainment, or exploring the world. There are areas where this technology can already be trusted and applied effectively today, and the set of tasks it can be trusted with will only grow. So, to answer your question about whether the technology will cope with everything we want to entrust to it: I truly, deeply believe it will.
