Sora by OpenAI: principle of operation, video examples and comparison with Runway

After 10 months of rumors, leaks and speculative tweets from AI enthusiasts, OpenAI has finally unveiled its long-awaited AI video generation model: Sora.

For days, the AI community had been anticipating the release, and I was one of those constantly refreshing the Sora.com website, like a desperate sneakerhead trying to buy a limited-edition pair.

If you cannot access the site, check the list of supported countries here. You may need to use a VPN if your country is not on the list.

Honestly, I expected very little from Sora. It has been almost 10 months since we heard any serious news about a video model from OpenAI, and with newer names like Kling AI, Runway Gen-3, and Hailuo AI gaining traction, I thought Sora would be late to the party.

But I must say, after seeing some of the results shared by people, I am impressed. It might indeed give these competitors a run for their money.

However, just because a few early samples impressed me doesn't mean I'm ready to buy. There are many things that matter when it comes to AI video creation tools:

Supported input media (text, image, and video)

Generation speed

Output quality (resolution, consistency, and length)

Editing controls (expansion, trimming, merging, etc.)

Pricing

These are the points I focused on. I want to understand if Sora has enough capabilities to justify its use and ultimately if it's worth paying for.

What is Sora?

If you're hearing about it for the first time, Sora is an AI video generation tool from OpenAI that can create short clips from text, images, and even other videos.

At the beginning of this year, OpenAI introduced a preview version of Sora, showcasing its achievements in "world modeling" - essentially training the model to understand and represent aspects of the physical world.

Now Sora Turbo, a faster and more advanced version, is being released as a standalone product available to ChatGPT Plus and Pro users on Sora.com.

How Sora Works

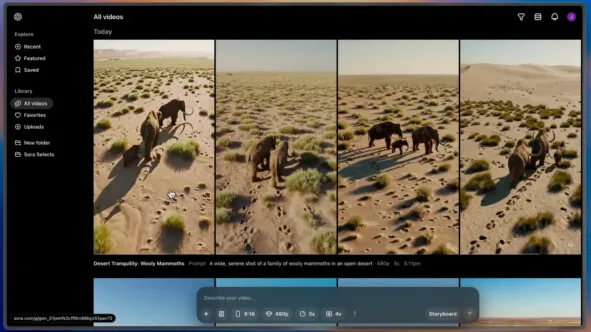

Using the Sora Video Editor, you can create videos up to 20 seconds long while maintaining good visual quality and following your prompts.

Here is the basic process:

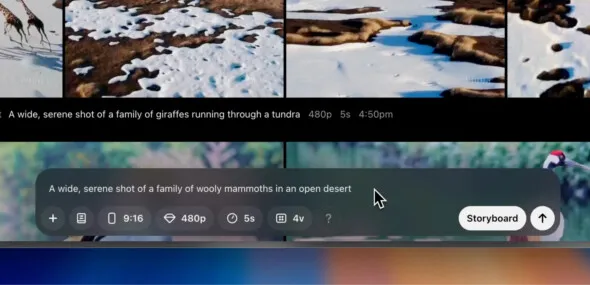

At the bottom of the screen, you can enter a text description of the desired video.

If you prefer to start with an existing image or video, click on the "+" in the input field to upload your file. Remember that you must own the rights to everything you upload, and you cannot upload images or videos of other people without their explicit written permission.

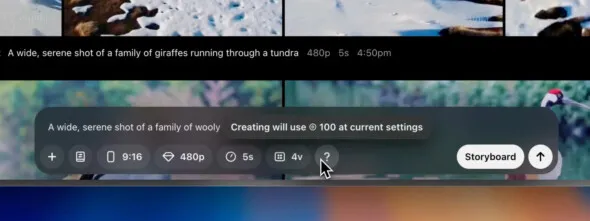

Before clicking the "submit" button, review and adjust the settings. You can change the aspect ratio, resolution, duration, and number of variations. These settings will affect the cost of your generation. Hover over the "?" icon to check how many credits will be spent with your chosen settings.

After you submit your prompt, Sora starts processing it, which may take up to a minute. You can track the progress of the generation by clicking the icon in the upper right corner of the page.

Once the video is generated, hover over the preview in the library to see all the variations.

Click on a specific variation to view it more closely and access editing tools. You can refine, redo, or expand the created clip based on what Sora has generated.

The interface looks great. It reminds me of the video editing tools from Kling, Runway, or RenderNet AI.

Video Examples

Now let's look at some videos generated by Sora and my thoughts on each of them.

Prompt: A serene and otherworldly scene unfolds with high-resolution digital clarity, capturing a minimalist beach at twilight where fashionably dressed figures recline on floating chairs woven from delicate reeds, hovering just above the gentle waves; the ocean emits ethereal smoke particles that rise and form abstract patterns in the air; bioluminescent plankton in the water glow softly, illuminating the scene with a magical light as the chairs drift effortlessly along the shoreline.

The smoke and water splashes look incredibly detailed. I also like how the objects behave as they float on the water. It seems that Sora knows how objects should move and interact in a physical environment.

Prompt: Whales Soaring Skyward. (Subtle Remix): make the video more vibrant and the butterflies more colorful.

This is a challenging task because it requires not just making the video look realistic, but also making it beautiful and vibrant. Sora managed to handle this task.

The butterflies look more colorful, and the whole scene seems more magical, like something out of a fairy tale. It doesn't have to obey the physics of the real world, but it still looks professional and impressive.

Prompt: Japanese Winter Market (Storyboard)

Generating people in AI videos is always difficult. Even here, I notice some strange details - the nails look odd, and the limbs may be misaligned. Most existing models struggle with human anatomy, and Sora is no exception. It's not perfect, but I see some improvements compared to what I've seen before.

Prompt: Kraken Attack Chaos. The shot is foggy with sharp color contrast, the look and feel captured is found footage quality with low visibility, providing a sense of immediacy and chaos.

This example shows how Sora can create a specific mood. The foggy setting, contrast, and found footage feel are all top-notch. It seems like it could be suitable for a movie trailer or short film. It's impressive that Sora can work with such a cinematic aesthetic.

Prompt: Bling Zoo Aquarium (Storyboard). The ‘bling zoo’ shop in new york city is both a jewelry store and zoo.

AI models usually struggle with text, and Sora is no exception. Although the scene looks good overall, the spelling is off and the text is inaccurate. This is similar to what happens with image generators. Text rendering remains a big challenge.

Key Features of Sora

One thing that catches my attention is the interface that OpenAI has developed for Sora. They didn't just give us a prompt input field and stop there. They introduced a variety of features: storyboard tool, Remix, Recut, Loop, Blend, and style presets.

Remix. Changing the scene by switching backgrounds, replacing objects, or adding and removing elements.

Recut. Trimming a clip down to its best frames and extending it in either direction.

Storyboard. Frame-by-frame video layout for better control over complex sequences.

Loop and Blend. Loop turns a clip into a seamlessly repeating animation, while Blend smoothly merges two clips into one.

Style Presets. Instantly apply a predefined visual style, such as papercraft style, without endlessly editing prompts.

How Sora Compares to Competitors

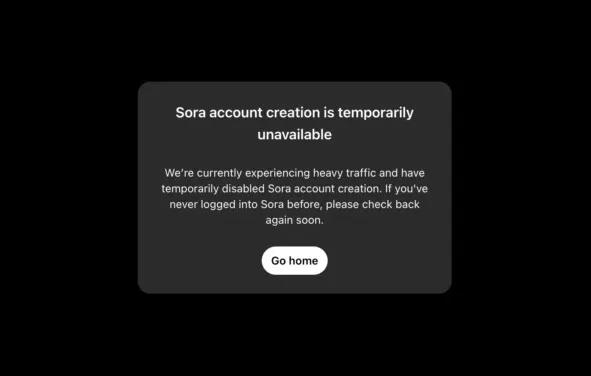

Right now, I'm stuck waiting for stable access. Due to the hype, the servers are overloaded, so new users can't create videos at all. If you see this error message, you're not alone.

Once I get access, I plan to compare Sora with Runway Gen-3 and Kling using the same prompts and see which results are more consistent and realistic.

In the meantime, let's try comparing a pre-made Sora video with Runway Gen-3. Here's an example of a video created with Sora:

Prompt: a family of grizzly bears sit at a table, dining on salmon sashimi with chopsticks.

Video generated by Sora

The 5-second clip below was generated in Runway.

Video generated by Runway Gen-3 Alpha

From what I see, Sora's result seems more lively. The bears are not just sitting still: the camera moves, and there is a sense of activity in the scene. The Runway Gen-3 version, on the other hand, looks more static, almost like a slightly animated image.

I also need to find out if the claimed "speed" is true. The early research model was slow and expensive to use. Sora Turbo should be significantly faster, but "fast" in AI terms can still mean a few minutes of waiting for a 20-second clip.

Will this speed improve over time? Possibly.

Sora Pricing

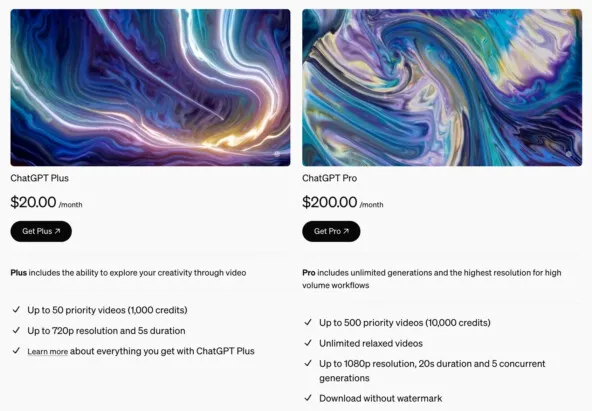

If you want to use Sora, it is currently tied to specific ChatGPT subscription plans:

ChatGPT Plus ($20/month)

You can generate up to 50 priority videos (1,000 credits) per month, at a resolution of up to 720p and a duration of up to 5 seconds.

ChatGPT Pro ($200/month)

You can generate up to 500 priority videos (10,000 credits) per month, plus an unlimited number of relaxed (slower-queue) videos, at a resolution of up to 1080p and a duration of up to 20 seconds. You can also run up to 5 generations simultaneously, and downloaded videos are watermark-free.
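
To put those numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above; the `Plan` class and its field names are made up for illustration. It assumes every priority video costs the same number of credits, which is a simplification, since the actual cost depends on the resolution, duration, and number of variations you pick. It also ignores the Pro plan's unlimited generations and everything else the ChatGPT subscription includes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    """Hypothetical container for the plan figures quoted in this article."""
    name: str
    price_usd: float      # monthly subscription price
    credits: int          # monthly credit allowance
    priority_videos: int  # number of priority videos the allowance is quoted as covering

    @property
    def credits_per_video(self) -> float:
        # Average credits per priority video implied by the quoted figures
        return self.credits / self.priority_videos

    @property
    def usd_per_video(self) -> float:
        # Subscription price spread evenly over the quoted priority videos
        return self.price_usd / self.priority_videos


PLANS = [
    Plan("ChatGPT Plus", 20.0, 1_000, 50),
    Plan("ChatGPT Pro", 200.0, 10_000, 500),
]

for plan in PLANS:
    print(
        f"{plan.name}: {plan.credits_per_video:.0f} credits per video, "
        f"${plan.usd_per_video:.2f} per video"
    )
```

Under these assumptions, both plans work out to about 20 credits and roughly $0.40 per priority video, so the Pro plan's extra value comes from the higher limits (1080p, 20 seconds, parallel generations, no watermark) rather than from a lower per-video price.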

Many people, including myself, are currently waiting for stable access to Sora. Server capacity issues are clearly frustrating, and social media is full of users who cannot register or generate videos at all.

Nevertheless, the initial results of Sora are impressive. The realism and consistency are excellent, and it is interesting to imagine what this could mean for creative people, filmmakers, and anyone who wants to bring their ideas to life through video.

But these same capabilities also raise serious concerns. With such realistic videos, we must acknowledge the risk of misuse: fake news, fraud, and deepfakes.

Interestingly, tech reviewer Marques Brownlee noticed that when he asked Sora to generate a video of a tech reviewer, there was a plant on the desk that looked suspiciously like the one often seen in his own videos.

Was it a strange coincidence, or did OpenAI's training data include his work, so that the model reproduced these details? It's hard to say for sure, but it highlights the uncertainty about how these models are trained and what they might unintentionally reproduce.

In any case, if OpenAI manages to stabilize access, maintain quality, and build a community that uses the tool responsibly, Sora could replace Kling or Runway as the go-to tool for AI-generated video. For now, I am holding off on any final conclusions until the hype dies down, the servers work properly, and I get a chance to really test it.
