- AI

- A

ECCV 2024: how it was. Current articles and main trends

Hello! My name is Alexander Ustyuzhanin, and I am a developer in the YandexART team. Recently, I visited Milan for one of the largest international conferences on computer vision — ECCV. This year it was held for the 18th time, and I was not the only one from Yandex, but part of a whole delegation of CV specialists from different teams. The guys helped collect materials for this article, and I will definitely introduce everyone during the narration.

The conference was held from September 29 to October 4. Researchers submitted 8585 (!) papers to ECCV, and 2395 were accepted — that's just under 30%. Such large-scale conferences always attract the attention of people from both academia and industry: from big companies to startups — people come for new information, networking, and the atmosphere of a big scientific party.

The program was intense but comfortable — starting at 9 am and ending at 6 pm — leaving enough time for activities outside the schedule. Of course, it is impossible to get acquainted with thousands of reports, so we checked the schedule and decided in advance who wanted to go where. In the article, I will share both my findings and the feedback from my colleagues — thanks to this, it seems, the review turned out to be diverse and gives a good idea of what is happening in the world of CV right now. Let's go!

General impressions and atmosphere

First of all, I would like to note that Milan is a beautiful city and having a conference here is also beautiful. ECCV 2024 was held in the futuristic MiCo Milano. Let's admire the views a little :)

...and take a walk on the ground floor, where the company stands were located.

For example, here is a demo with the AI3D Sculpt application for Apple Vision Pro, and the corgi is the object being modeled. He became the real star of the conference, but even stars need rest.

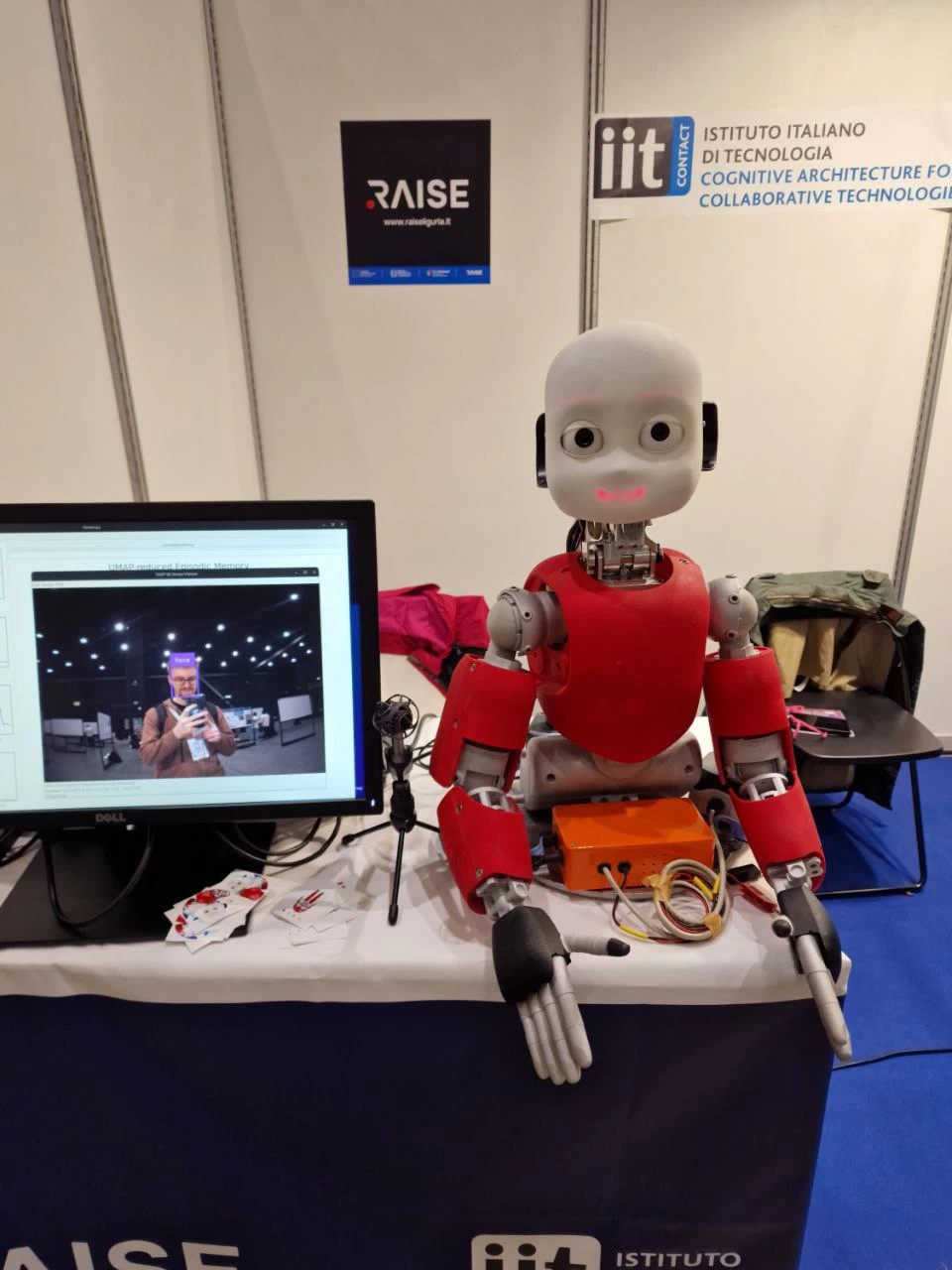

Well, of course, in 2024, no self-respecting high-tech conference is complete without robots.

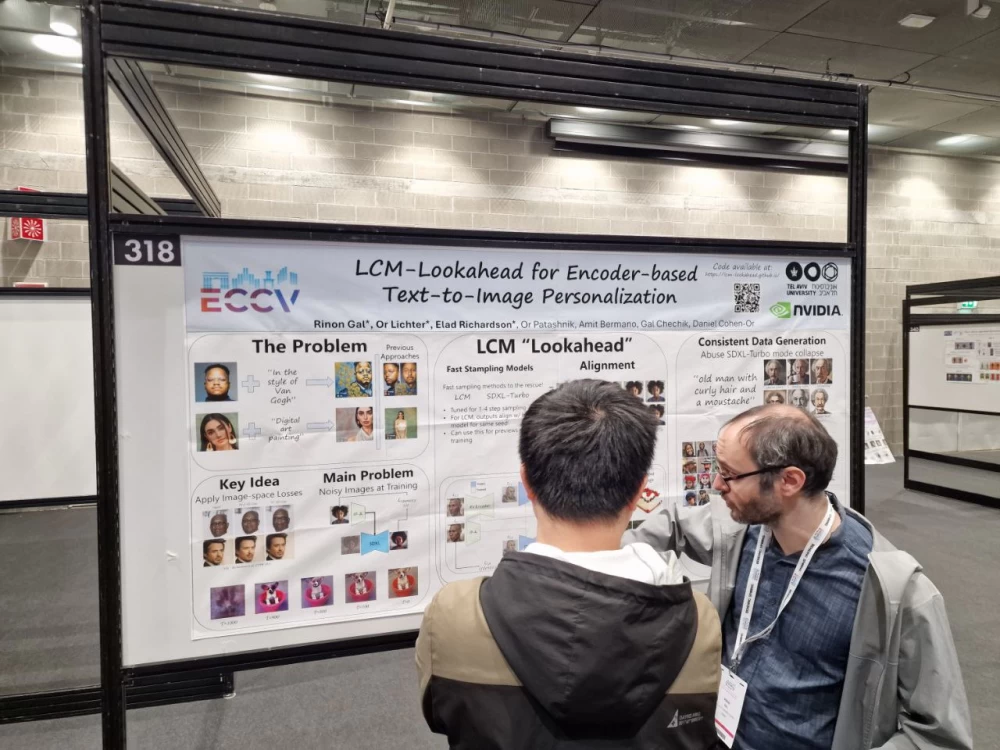

Among the visitors was Rinon Gal (pictured right) — a very famous researcher in the circles of personalization enthusiasts.

In general, I did not have time to get bored. I really enjoyed talking with colleagues from all over the world at poster sessions, asking questions, exchanging experiences, and sharing knowledge — it is a very valuable experience. The formats were standard for conferences of this level:

keynotes;

oral presentations (so-called orals) with selected papers;

poster sessions, where many authors present their work on stands simultaneously, and we walk around and ask questions;

workshops for those who like to dive into a narrow topic;

tutorials, where there are even more details.

And now — to the hardcore. Let's discuss trends in computer vision and the papers we liked.

Popular topics and trends

First, I will talk about the most popular areas in my opinion, and Sergey Kastryulin, a colleague from Yandex Research, helped me to supplement the section with trends.

Neural 3D Reconstruction and Rendering

Probably the most popular direction at ECCV 2024! The task is to model complex scenes and objects in 3D and then generate a flat image. Such neural networks are capable of synthesizing photorealistic generations of an object from a different angle (Novel View Synthesis) from a single input photograph. For example, Stability AI presented the work SV3D, in which they adapt the consistency of generations of their diffusion video model for the 3D task. The ability to draw textures for a 3D model or recreate the physical lighting of a scene makes life easier for 3D designers, reduces financial costs for purchasing 3D assets, and allows for quick prototyping of ideas.

The technology FlashTex will help the artist in their work, which, through the classical approach of Score Distillation Sampling (SDS) for 2D diffusion with an adjustment for lighting parameterization, allows for realistic texturing of 3D models. Also interesting is the work LGM, which raises the issue of the inefficiency of NeRF scene representation and SDS-based optimization for 2D diffusion and proposes to directly predict 3D Gaussians with a neural network (from 3D Gaussian Splatting), which, with differentiable rendering, would reproduce multi-view generations of a pre-trained diffusion model.

These technologies are used in augmented reality, CGI for cinema and advertising, video games. It is obvious that the future is not limited to the plane: the transition to three-dimensional space is a natural step towards the development of computer vision technologies, just like adding a temporal component (video, animation). It seems that the main difficulty lies in combining volume and time. An example of an approach to the problem is the work Generative Camera Dolly, which solves the problem of Novel View Synthesis for a video sequence.

However, one of the problems of neural (volume) rendering is the impracticality of the internal representation of a 3D scene: it is necessary to resort to classical algorithms like Marching Cubes to estimate the polygonal mesh of a 3D model. Amazon in its work DPA‑Net sets the trend for the future development of 3D and proposes to shift the community's attention from neural rendering towards neural modeling — a more practical direction for industrial tasks in 3D.

Dense visual SLAM

SLAM (Simultaneous Localization and Mapping) is aimed at dynamically building a map of an unknown environment, obtaining an estimate of the agent's position and orientation in it. This process plays a key role in the fields of robotics, autonomous transportation, augmented and virtual reality. Simply put, neural networks synthesize the environment from various sensors to obtain a navigation strategy and the ability to interact with the environment.

The general trend in this area is driven by the adaptation of the sensational 3D Gaussian Splatting technology for the SLAM task, which is successfully handled by GS‑ICP SLAM and CG‑SLAM. Modern self-driving cars, warehouse robots, virtual reality glasses — these are tools that materialize and other similar modern technologies. For example, for the Autonomous Driving task, VLM is used to improve the planner's performance, and in the field of robotics and augmented reality technologies, researchers from the University of Zurich presented an interesting work Reinforcement Learning Meets Visual Odometry, linking RL and Visual Odometry — a subtask of SLAM. Historically, computers have helped people in the virtual, informational world, but now technological progress allows them to tackle tasks in the real physical world, and for this, it is necessary to teach the computer to navigate in space.

Video Manipulation and Understanding

The irresistible desire to generate video captured everyone immediately after the stunning success of diffusion models in the task of image generation. Initially, short animations were produced, then progress reached the generation of full-fledged and consistent short videos. This can be done by Sora from OpenAI, Lumiere from Google, and Movie Gen from Meta*, publicly presented on the last day of ECCV, but not participating in the conference.

However, these models are proprietary, and not everyone can afford to train their own. For now, it is not worth expecting frequent additions among generative models, especially in open access, so it makes sense to focus on modifying and analyzing existing solutions.

Meta* presented the Emu Video Edit (EVE) model for video editing. It is based on training adapters for editing each individual frame and maintaining frame consistency. It is distilled from their own Emu Video model. An interesting work on image-to-video generation was PhysGen, where the source of dynamics is a real physical simulation of objects segmented from the image.

To understand what is happening in the video, researchers resort to multimodality. For example, to answer questions about the video (VQA), Google trains its BLIP2 analog. A similar approach is taken in the work LongVLM, focusing on the hierarchical localization of internal representations of video frames over time.

Multimodality in Vision

Of course, technologies at the intersection of the visual domain with others have not been overlooked — everything that combines different modalities arouses lively interest among researchers. The most popular combination is, of course, Vision-Language Models (VLMs). Ai2 made a statement with their work MOLMO — a family of VLMs in open access, whose flagship, Molmo 72B, catches up with the giant GPT-4o and surpasses Gemini 1.5 Pro and Claude 3.5 Sonnet in ELO (Human Preference Evaluations).

It is also interesting BRAVE — a work from Google on the method of aggregating image encoders VLM within one model, surpassing the original components in quality. An interesting study was also done in the field of video-audio in the work Action2Sound. It is dedicated to generating a soundtrack for the input video with a focus on correctly voicing the physical actions of the participants in the video, which clearly stand out against the background sound (ambient sound).

A little more about trends

According to Sergey, generative models still make up a small percentage of conference papers — about one-tenth. And this is despite the fact that the hype in the media space is very high. Nevertheless, when coming to ECCV, participants see many works on completely different topics: segmentation, signal formation and processing, and similar "things in themselves".

VLMs are becoming more and more popular, which I have also noticed. At the same time, there is a kind of transfer of experience from domain to domain. There is a very popular area of large language models, and since there is a huge amount of research concentrated there, people borrow and reuse what has already been done in the field of LLM. For example, this concerns the study of the features of large models: they study how models can reason, what they understand about the real world, what biases they have, etc.

Another important and popular story is the removal of concepts. Modern models are so powerful that they learn everything they see. And some things turn out to be unacceptable and dangerous — they need to be removed. This results in such Machine Unlearning, when researchers make the model forget what it knew.

SSL models were also at the conference, but in very small quantities, although this direction was also at the peak of hype quite recently. And text-to-image generation is being pushed aside by the already mentioned video and 3D — there are many posters and orals on these topics. It cannot be said that the problem of image generation has been solved and can be considered closed, nevertheless, a lot has already been done in this direction, and the attention of researchers is beginning to shift to other areas. As for auxiliary modalities, they are increasingly being added to generative models, for example, they generate not just an image, but something similar in structure to a depth map or represent objects on it in the form of a segmentation mask.

A little regret

Sergey and I agreed that ECCV 2024 lacked new general approaches. There were a lot of particulars, local improvements, improvements to existing technologies. The authors of the articles improve the architectures, try to squeeze as much as possible out of diffusion and other popular solutions, but we did not see anything breakthrough, for which many go to such large conferences.

On the other hand, interest in very narrow niche topics is falling. For example, there was only one poster about IQA/IAA, and, surprisingly, there were no new datasets and benchmarks at all, although research in this area also moves the industry forward as a whole.

I would add that I lacked work on personalization. Adaptation to a specific object is absolutely necessary for a number of practical applications of neural network technologies. So far, they rest on the inevitable compromise between the speed of model adaptation and the visual correspondence to the given object. It turns out that fresh research is already directed towards complex modalities like video and 3D, leaving aside the issues of product applicability.

Reviews of interesting articles

In preparing this part, the most interesting and technically rich, I was helped by colleagues: Alisa Rodionova, Daria Vinogradova and Sergey Kastrulin.

3D

DPA-Net: Structured 3D Abstraction from Sparse Views via Differentiable Primitive Assembly

Modern text-to-3D and image-to-3D models do not understand physics very well — they draw incorrect shadows and shapes of objects. Researchers propose different approaches to solving this problem: for example, the use of diffusion and neural rendering technologies such as NeRF or 3D Gaussian Splatting. The author of the presented work claims that understanding physics requires building a grid with a 3D representation of the scene through primitives.

FlashTex: Fast Relightable Mesh Texturing with Light ControlNet

The work is dedicated to generating text-to-texture for three models. They fix regular lighting parameters and camera poses, as well as three types of materials. Then they render the mesh of the input object under the above conditions for each material, feed it into ControlNet as a condition, and teach the mesh to texture in 2D. Then the authors present the material in the form of a multi-resolution hash grid and optimize the material with classical losses: reconstruction by ControlNet Light outputs for regular light and camera parameters and SDS — like in DreamFusion — for continuous parameters.

For consistency of multi-view generation, the authors feed a collage with several camera parameters into ControlNet at once. ControlNet parameterization by light allows separating the model material from lighting to generate more realistic textures.

Stability AI proposes an image-to-3D model. They use the image-to-video model Stable Video and fine-tune it for the task of generating video with a virtual camera rotating around the object in the image. Similar to the ControlNet Light approach, spherical camera parameters are added to the unet itself as a condition, as well as the clip embedding of the input image. Then the model is trained on a regular grid of azimuths and a constant elevation value, and only at the next stage do they switch to continuous parameterization with arbitrary values. The priors from Stable Video allow obtaining consistent novel views.

To approach obtaining a 3D mesh, the authors propose a two-stage pipeline: first, train NeRF on the reconstruction task (without SDS) on top of the outputs of the fine-tuned SV for orbital shooting at regular camera poses. Then, already at the second stage, Masked SDS is used on continuous poses. Moreover, masking occurs on parts of the mesh that are not observed from regular angles. This is important so that there is no degradation (blur) of the observed parts.

The authors also talk about the problems of baked-in lighting. To solve them, a simple illumination model is trained to "untangle" color and lighting. The authors compare multiview generations with Zero 1-to-3 and confidently defeat them.

Synthetic to Authentic: Transferring Realism to 3D Face Renderings for Boosting Face Recognition

The authors propose a "realism mechanism" that allows reducing the domain shift between real and synthetic images of people. As a result, higher results on the original task compared to training on synthetics and without transformations. On some benchmarks, the use of such a mechanism is comparable even to training on real data.

Neural Representation

A story based on the Knowledge in Generative Models workshop

The authors ask the question: how is knowledge about a visual image encoded in the network? The classic way is to segment the object in the image, and then look at the activations of the neurons that lead to the pixels inside the mask. However, this method does not detect all the information that the model has.

It is proposed to take a set of "picture" models: generative StyleGAN2, discriminative DINO VIT, ResNet, and so on, and then look at the same pictures for similarity in layer-by-layer activations. To do this, we generate a picture through StyleGAN2, and then run it through discriminative models. All the found pairs are the desired knowledge in the model. These Rosetta neurons can then be used in inversion and editing. But this is for GANs.

For diffusion, this thing does not work because neuron activations change over time. Therefore, it is proposed to use model weights as a constant component. The model should be fine-tuned on different concepts, then the resulting weights should be considered as a point in the weight space. Here you can find interesting linear separability by some features, as well as continuously sample (close points are semantically similar) images.

Investigating Style Similarity in Diffusion Models

The authors aim to understand whether the model can reproduce the styles of artists from their actual paintings. Classical SSL methods like CLIP encode semantic information and, accordingly, are not suitable for such analysis. Therefore, the authors first train a model to extract stylistic embeddings and even release it.

Then they take LAION-aesthetics, extract a subset of 12 million pairs with an aesthetic score of more than six, and parse it to extract style information (based on captions). For example, if the caption contains something like "in a style of van Gogh," they place the sample in the "Vincent van Gogh" class. The markup is noisy, nevertheless, the resulting subset is called LAION styles.

Next, the authors take styles from this dataset and see how similar the stylistic embeddings of images in the dataset are to the stylistic embeddings of generations. Running through a large number of classes, they use this as an assessment of the models' ability to generate images and mimic different styles.

As a result, the model shows the best performance in both the evaluation of the correspondence of long texts to the image and as a text encoder for text2image diffusion.

Long-CLIP: Unlocking the Long-Text Capability of CLIP

First, the authors determine that the effective sequence length in CLIP is about 20 tokens. This is very little for some applications, such as retrieval or determining the similarity of an image to long texts. Moreover, CLIPs are often used as text encoders for text-conditional generative models, where such a sequence length is also insufficient.

The authors of the article try to fine-tune the model on longer sequences, but the main drawback of this approach is the difficulty in highlighting the important parts. The model starts to perceive all attributes as equivalent and reacts to the slightest changes in each of them. To solve this problem, the authors propose a two-stage tuning:

fine-grained tuning on long captions;

extraction of the main components of images and texts using PCA and their alignment with each other using a regular contrastive loss (coarse-grained tuning).

Text-to-image Diffusion Models

TurboEdit: Text-Based Image Editing Using Few-Step Diffusion Models

The article is about editing real images using text2image diffusion models. The work is based on two observations:

With equal seeds, editing long text prompts has significantly less impact on changing the overall composition of the generation, unlike manipulations with short prompts. This is explained by the smaller magnitude of changes in the cross-attention layers.

One-step generative models like SDXL Turbo do not face difficulties in the optimization task of inversion, and also allow manipulation of attention maps for image editing.

The combination of these ideas gives an optimization process that teaches the inverting model. With its help, the initial noise is obtained, for which the denoising procedure of the original model with the edited prompt is launched to get the edited generation.

Two approaches are proposed to improve reconstruction. Instead of a one-step model, train a multi-step refiner model in the style of ReStyle. Alternatively, attention maps can be masked to localize changes.

EDICT: Exact Diffusion Inversion via Coupled Transformations

The authors propose a new sampler for editing images based on text inversion. The essence is that the results of the previous and next steps are used for integration. At the same time, no computational overhead is added, because the results are obtained naturally. Compared to DDIM inversion, this approach gives almost perfect recovery.

Be Yourself: Bounded Attention for Multi‑Subject Text‑to‑Image Generation

The work is about multi‑subject grounded generation. The well-known problem of "confusion" of semantically similar concepts occurring in attention blocks is raised. The authors propose using the spatial information of attention maps not only to mask "neighboring" competing tokens but also for guidance during model inference. In addition, they suggest shifting the diffusion trajectory in the direction that maximizes the concentration of attention in the given bounding box for the corresponding object in the prompt.

ReGround: Improving Textual and Spatial Grounding at No Cost

The article is based on an architectural analysis of the network. As a baseline, the authors consider the very popular GLIGEN model of its time — it allows adding an additional condition on the spatial arrangement of objects during generation using a bounding box.

Researchers noted the sequential nature of the gated self‑attention block embedded in the network, which is responsible for grounding tokens. Such an architectural choice disrupts the expected distribution of input into the cross‑attention module and thereby disrupts the textual component of conditional generation.

A simple switch from sequential to parallel connection solves the problem and allows finding a compromise to meet both conditions.

ReGround improves the architecture of the GLIGEN model, which, in turn, is part of other, more complex models. Therefore, if GLIGEN is improved, all existing works that use it as a component will automatically improve as well.

The article discusses a method for accelerating generations with a focus on production and a method based on caching some x_t‑generations of individual concepts. The idea is to take complex long prompts, break them into concepts, filter out non-visual ones, and then do partial generation up to step t and place the result in the database.

To generate an image from a full prompt, the necessary partial generations are summed, and the remaining trajectory is generated separately. The authors claim that the acceleration is on average 30% without a significant loss in quality.

Video Understanding

VideoAgent: A Memory-augmented Multimodal Agent for Video Understanding

The authors propose using a multimodal agent for analyzing long videos. They endow it with several types of memory.

Firstly, these are textual descriptions of each two-second clip (here they use the LaViLa model). Secondly, these are descriptions at the embedding level: of the clip itself (here they take ViCLIP) and the resulting text caption (text-embedding-3-large from OpenAI). And memory of specific tracked objects: their embeddings for re-identification (from CLIP) and moments of appearance in the video (tracked by ByteTrack) are stored in an SQL database.

Using such memory, the agent can:

describe two-second video fragments;

search for a clip by a text query with a description of what is happening — text and video features of the clips are used to determine similarity to the text query;

answer a question about the video — the most relevant fragment is highlighted and Video-LLaVA is launched;

talk about the qualities of specific objects — for example, their number. Here, a search is performed on the features in the tracking database and the corresponding SQL query is sent.

The agent itself chooses the most appropriate action using an additional LLM. The system looks heavy, considering how many models are needed for it. However, it allows beating cool models like Video-LLaVA, LaViLa, and InternVideo on well-known QA-video datasets.

ECCV 2024 Best Paper Award

Minimalist Vision with Freeform Pixels

A truly low-level solution was proposed in the paper that received the Best Paper Award. The authors created a prototype of a fully autonomous power supply camera.

Instead of conventional matrices, the camera uses 24 photodiodes. In front of each of them, a mask-filter is installed, which acts as the first layer of the neural network. The optical transfer function of the mask depends on the task for which the camera is trained.

In essence, the first layer provides an arbitrary shape for each pixel — as opposed to the fixed square shape of traditional cameras. The subsequent layers output the result of the task. Thus, the authors demonstrate the possibility of monitoring the workspace and assessing traffic using only 8 pixels out of 24.

In addition, the camera performed well in the task of assessing room lighting. Using the same 8 pixels, it was able to determine which light sources were on at any given time. At the same time, none of the sources were directly visible to the camera — it collected information based on the state of the room.

In addition to low power consumption, this approach ensures the privacy of people in the frame, as the recorded optical information is not sufficient to restore image details. The camera prototype is equipped with a microcontroller with Bluetooth. Solar panels are located on four sides to generate electricity.

Instead of a conclusion

This is how we remember ECCV 2024 — a huge conference, thousands of reports and researchers, a lively community where, although sometimes difficult and long, new approaches and solutions are born. Progress cannot be stopped! And it's great to be a part of it. To see that we at Yandex are working on relevant tasks. It will be interesting to return to the conference in 2025 and see if the technological landscape changes. Will new architectures appear? Which hype topics will fade into the background, and what, on the contrary, will gain popularity? Will a corgi come to ECCV or will the organizers decide to bring a whole llama? We'll see!

Write comment