ACM RecSys — 2024: trends and reports from the largest conference on ML in recommender systems

Hello! My name is Peter Zaidel, and I am a senior developer at Yandex Music. In October, together with colleagues from Yandex who build recommender systems for various services, I attended the international conference ACM RecSys 2024 in the Italian city of Bari. Today I want to share with tekkix readers my impressions, the trends I noticed, and, of course, reviews of the most interesting scientific papers from the conference. I think this will be useful to anyone working on recommender systems who follows trends and is ready to try something new in their work.

How and where the conference was held

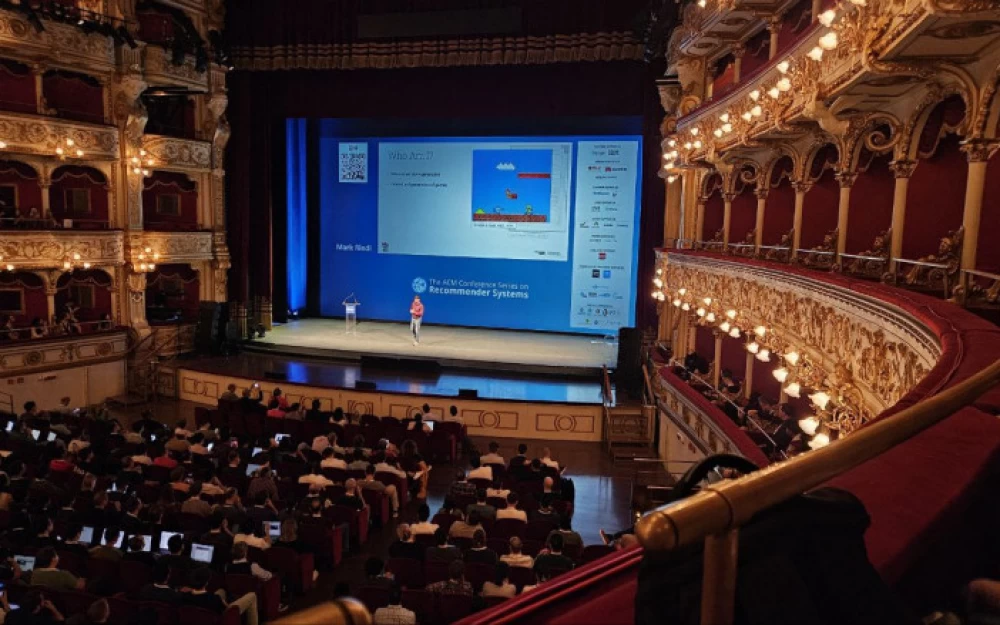

Bari is a small, cozy town on the Adriatic coast. The main conference venues were the local technical university (Politecnico di Bari) and the Piccinni Theater, the oldest in the city. Workshops and part of the poster session were held at the university on the first and last days of ACM RecSys, while the main presentations and a classical music concert took place at the theater.

Of course, the life of conference participants is not only about moving between posters in an attempt to talk to all the interesting authors: on the last day, the organizers arranged a party at a villa, where we all spent time in a much more relaxed atmosphere.

Other highlights for me were a presentation by a research director at DeepMind on the future of generative recommendations and a main-stage keynote from Spotify about the history and development of recommender systems at the company.

A detailed article on trends by my colleague Kirill Khrylchenko was recently published on tekkix. Our findings definitely overlap, but I look at the same question from the perspective of what was talked about most at the conference: which topics attracted the most hype, posters, and oral presentations.

LLM in recommendations

Perhaps the main trend: a lot of papers and a lot of hype. Almost every company is trying to use LLMs for recommendations in one way or another. Large language models help with content generation and data preprocessing, and they also serve as a component of the recommendation pipeline. Google talked about how an LLM can be used to predict a user's high-level interests. Examples of articles:

LLMs for User Interest Exploration in Large‑scale Recommendation Systems

Large Language Models as Data Augmenters for Cold‑Start Item Recommendation

Generative models

A trend close to LLMs, but focused on models that output recommended items directly. There were several articles from Spotify and Google in which the authors develop approaches to generating item IDs directly.

Articles on the topic:

So far the publications are mostly academic; industrial applications are known only from what company employees say.

New ways of representing items for models

This is an important trend: ideally, we want a balance between generalization and memorization so that a model can easily generalize to new items. Alternative item representations are also needed for LLM applications, since standard options such as item IDs, raw content, or content embeddings are not suitable there.

Articles on the topic:

Better Generalization with Semantic IDs: A Case Study in Ranking for Recommendations

Efficient Inference of Sub‑Item Id‑based Sequential Recommendation Models with Millions of Items

Scaling models

This trend continues to develop actively: large companies want large models. So far, recommender models are significantly smaller than current LLMs, but catching up is a matter of time. The main task is to find the compute capacity and to justify large models economically. I think this is quite realistic: Google believes in the synthesis of LLMs and recommendations, and Meta is developing large generative models for recommender systems. Examples of articles:

Anti-trends

In general, interest is declining in topics that were very popular just a couple of years ago: RL and graph networks. There seem to be no major, notable works on RL, and graph networks are used only in services where they are really needed, for example in social networks or on Pinterest.

And now — to the articles!

Colleagues Nikolai Savushkin, Vladimir Tsypulin, and Kirill Khrylchenko helped a lot in creating this section. Their reviews are presented here along with mine.

My personal top-3

Keynote by Ed Chi, Research Director at DeepMind

The presentation reviewed how recommender systems have developed over the past 10 years, from matrix factorization to generative models, and where the field is heading. The main idea of the talk: language models need to be combined with recommendation models, agents need to be built, and so on. Ed Chi talked about how Google connects LLMs with recommendations using semantic IDs and how they manage to retrain models for recommendation tasks with very small amounts of data.

The article itself was not presented at the conference, but the workshops featured several presentations about it, as well as engineering articles from the team that worked on this model. I am sharing the review by Kirill Khrylchenko.

Neural recommender systems have one big problem: they do not scale well, whereas in NLP and CV increasing the size of neural encoders works very well. The authors highlight several reasons for this: a giant, non-stationary item vocabulary, the heterogeneous nature of the features, and a very large volume of data.

The authors propose reformulating the recommendation task in a generative setting. To begin with, they represent the data as a sequence of events. Real-valued features (counters and the like) are discarded, a single sequence is formed from interactions with items, and events that record changes in static information, such as a change of location or of any other context, are then added to it.

The candidate generation architecture looks quite standard and resembles SASRec or PinnerFormer: the user is represented as a sequence of (item, action) events, and at the positions where the next event is a positive interaction with an item, the model predicts which item it is.

For ranking, the novelty is more substantial. To make the model target-aware (as in Deep Interest Network from Alibaba), the authors had to build a more sophisticated sequence in which item and action tokens alternate: item_1, action_1, item_2, action_2... From each item token, the model predicts the action that will happen to that item. The authors also say that in practice any multi-head, multi-task setup can be plugged in at this point. It is important to note that they do not train a single model for both candidate generation and ranking, but two separate models.
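The two sequence layouts described above can be sketched roughly as follows. This is only an illustration of the data format, not the authors' code; the function names, the `item:`/`action:` token prefixes, and the set of "positive" actions are assumptions made for the example.

```python
from typing import List, Tuple

Event = Tuple[str, str]  # (item_id, action), e.g. ("video_42", "like")


def interleave(events: List[Event]) -> List[str]:
    """Ranking layout: item_1, action_1, item_2, action_2, ..."""
    tokens: List[str] = []
    for item, action in events:
        tokens.append(f"item:{item}")
        tokens.append(f"action:{action}")
    return tokens


def ranking_targets(events: List[Event]) -> List[Tuple[List[str], str]]:
    """For every item token, the target is the action that follows it."""
    tokens = interleave(events)
    examples = []
    for pos in range(0, len(tokens), 2):   # even positions hold item tokens
        context = tokens[: pos + 1]        # history plus the candidate item
        target = tokens[pos + 1]           # the action taken on that item
        examples.append((context, target))
    return examples


def retrieval_targets(events: List[Event], positive=("like", "watch")):
    """Candidate generation: predict the next item only if its action is positive."""
    examples = []
    for i in range(1, len(events)):
        next_item, next_action = events[i]
        if next_action in positive:        # hypothetical definition of "positive"
            examples.append((events[:i], next_item))
    return examples
```

Because the candidate item is already part of the context when its action is predicted, the ranking model sees the target, which is what makes it target-aware.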

Another innovation is dropping softmax and the FFN from the transformer. The authors argue that softmax is bad at learning the "intensity" of anything in the user's history, and the real-valued features they discarded mostly captured exactly that: for example, how many times a user liked a video author, how many times they skipped one, and so on. Such features are very important for ranking quality. The experimental results show that dropping softmax addresses the problem: this modification significantly improves ranking results.
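A toy illustration of the "intensity" argument, under loud assumptions: the review only says that softmax was dropped, so the SiLU activation and the plain un-normalized sum below are stand-ins chosen for this sketch, not a description of the actual HSTU layer. The point is only that softmax weights always sum to one, so the number of matching events in the history disappears from the output.

```python
import math


def silu(x: float) -> float:
    return x / (1.0 + math.exp(-x))


def softmax_attention(scores, values):
    """Weights are normalized to sum to 1, so the output is a weighted average."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return sum(e / z * v for e, v in zip(exps, values))


def pointwise_attention(scores, values):
    """Each score is activated independently (assumed SiLU), with no normalization."""
    return sum(silu(s) * v for s, v in zip(scores, values))


# A history that contains the same kind of event once versus five times.
for n in (1, 5):
    scores = [2.0] * n
    values = [1.0] * n
    print(n, softmax_attention(scores, values), pointwise_attention(scores, values))
```

With softmax the result is identical for one and five events, while the pointwise variant grows with the number of events, which is the kind of counter-like signal the discarded features used to carry.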

As a result, HSTU (Hierarchical Sequential Transduction Unit, as the authors named their architecture) shows excellent results on both public and internal datasets. It also runs much faster than the previous DLRM approach thanks to autoregressiveness and the new encoder. The online results are very good too: on a billion-scale short-form video platform (we assume this is Reels), the authors achieved a +12.4% relative increase in the target metric in an A/B test. However, the final architecture that they measure and deploy is not very large in terms of parameter count: on the order of hundreds of millions. Scaling with dataset size and history length, on the other hand, turned out to be very good.

ACM RecSys also featured posters for the articles Toward 100TB Recommendation Models with Embedding Offloading and Enhancing Performance and Scalability of Large-Scale Recommendation Systems with Jagged Flash Attention, which cover the technical details of implementing large recommendation models.

LLMs for User Interest Exploration in Large-scale Recommendation Systems

The main idea of the article is the application of LLM Gemini Pro to predict new user interests. The scheme proposed by the authors allows the use of the language model's knowledge for recommendations without significant infrastructure costs.

The first stage is extracting and clustering the videos. For each video, a pre-trained model computes a 256-dimensional embedding based on the description, tags, video, and audio. A four-level hierarchical clustering is built on top of these embeddings. To describe videos, the authors use the second-level clusters, 761 in total. Each cluster is described by a set of tags.

The request to the LLM is arranged as follows: given a list of the user's interest clusters (two are used), the model is asked to predict the next one. To help the model understand the task better and output a cluster that actually exists, it is fine-tuned on logged transitions (C1, C2) → CL, where CL is a new user interest with which the user successfully interacted. Not all of the data is used: for each target cluster CL, only the top-10 pairs of clusters on the left-hand side are selected, which gives 7610 examples in total. This increases the diversity of the data and removes the bias towards more frequent clusters. Overall, fine-tuning improves the model's understanding of the task (recall increases) and reduces the share of errors, that is, predictions of non-existent clusters.
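The selection of fine-tuning examples described above can be sketched as follows. The function name and the log format are hypothetical, and ranking pairs by raw frequency is an assumption about what "top-10" means. With 761 target clusters and 10 pairs each, this yields the 7610 examples mentioned in the text.

```python
from collections import Counter, defaultdict


def select_finetune_examples(logged, top_k=10):
    """logged: iterable of ((c1, c2), next_cluster) transitions from interaction logs.

    Keeps, for every target cluster, only its top_k most frequent source pairs,
    so frequent target clusters do not dominate the fine-tuning set.
    """
    counts = defaultdict(Counter)
    for pair, target in logged:
        counts[target][pair] += 1

    examples = []
    for target, pair_counts in counts.items():
        for pair, _count in pair_counts.most_common(top_k):
            examples.append((pair, target))
    return examples
```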

At inference time the LLM is used as follows. Offline, predictions for all pairs of clusters (761 × 761) are computed within a few hours, and the resulting table is stored in memory. Online, two clusters are sampled from the user's history over the past 30 days, and the corresponding next cluster is looked up in the table. Candidates from that cluster are then ranked by the standard personalization models.
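A minimal sketch of this offline/online split. The names are hypothetical, and `predict_next` stands in for a call to the fine-tuned LLM, which is not shown.

```python
import random


def build_transition_table(clusters, predict_next):
    """Offline: query the model once for every ordered pair of clusters."""
    return {(c1, c2): predict_next(c1, c2) for c1 in clusters for c2 in clusters}


def next_interest(table, recent_clusters, rng=random):
    """Online: sample two clusters from the recent history and look up the answer."""
    c1, c2 = rng.sample(recent_clusters, 2)
    return table[(c1, c2)]
```

The design choice here is that the expensive model never runs in the request path: with 761 clusters the table has 761 × 761 = 579,121 entries, and serving reduces to a dictionary lookup.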

The system was implemented in YouTube Shorts and showed an improvement over the baseline — tree-based LinUCB. The number of users with N (from 20 to 200) different interest clusters over seven days increased. The experiment also showed an increase in overall watch time and the number of users with video watch time of more than 10 minutes.

More articles, good and different

Generative recommendations

Recommender Systems with Generative Retrieval

Modern models for candidate generation are usually built as follows: encoders (matrix factorizations, transformers, DSSM-like models) are trained to obtain embeddings of the request (user) and the candidate in the same space. Then, an ANN index is built for the candidates, in which the nearest candidates are searched for by the request embedding according to the selected metric.
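For reference, the classic retrieval scheme described above boils down to a nearest-neighbor search in a shared embedding space. The sketch below uses exact brute-force search with a dot product as the metric; a production system would query an ANN index instead. The function names and the toy embeddings are illustrative assumptions.

```python
import heapq


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def retrieve(user_emb, item_embs, k=2):
    """Exact top-k items by dot product with the user (request) embedding."""
    scored = ((dot(user_emb, emb), item_id) for item_id, emb in item_embs.items())
    return [item_id for _score, item_id in heapq.nlargest(k, scored)]


item_embs = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.7, 0.7]}
top = retrieve([1.0, 0.2], item_embs, k=2)
```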

The authors propose to move away from this scheme and learn to generate item IDs directly with the model they train. To do this, they propose using an encoder-decoder transformer model based on the T5X framework.

The question remains: how to encode items for use in a transformer model and how to learn to directly predict IDs in the decoder? For this, it is proposed to use the developments from the previous work — Semantic IDs. Such IDs for describing items have the following properties:

hierarchy — IDs at the beginning are responsible for general characteristics, and at the end — for more detailed ones;

they allow describing new items, solving the cold-start problem;

when generating, sampling with temperature can be used, which allows controlling diversity.
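The last property, temperature sampling, can be illustrated with a short sketch: the decoder's logits for the next ID are divided by a temperature before sampling. The function below is a generic illustration with hypothetical names, not code from the article.

```python
import math
import random


def sample_with_temperature(logits, temperature=1.0, rng=random):
    """Sample an index from softmax(logits / temperature)."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]  # unnormalized probabilities
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]
```

A low temperature makes generation close to greedy (always the most likely ID), while a high temperature flattens the distribution and yields more diverse recommendations.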

The article conducts an experiment on the Amazon Product Reviews dataset, consisting of user reviews and product descriptions. The authors use three categories: Beauty, Sports and Outdoors, and Toys and Games. For validation and testing, they use the leave-one-out scheme, where the last item in each user's history is used for testing, and the penultimate one for validation. This approach has been criticized a lot for possible leaks, but the authors use it to compare with existing baseline results.

Semantic IDs were built as follows. Each product is described by a string made of its name, price, brand, and category. The resulting sentence is encoded by the pre-trained Sentence-T5 model into an embedding of size 768. An RQ-VAE is trained on these embeddings with layer sizes of 512, 256, and 128, ReLU activation, and an internal embedding of size 32. Three codebooks of 256 embeddings each are used. For training stability, they are initialized with k-means cluster centroids computed on the first batch. As a result, each item is described by 3 IDs, each from a dictionary of size 256. To prevent collisions, one more ID with a serial number is appended.
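The quantization step at the heart of this scheme can be sketched as follows. This shows only residual quantization against fixed codebooks plus the collision suffix; training the RQ-VAE encoder, decoder, and codebooks is out of scope here, and all function names are hypothetical.

```python
def nearest(codebook, vec):
    """Index of the codebook vector closest to vec (squared Euclidean distance)."""
    def dist(c):
        return sum((a - b) ** 2 for a, b in zip(c, vec))
    return min(range(len(codebook)), key=lambda i: dist(codebook[i]))


def semantic_id(vec, codebooks):
    """Residual quantization: each level encodes what the previous levels missed."""
    residual = list(vec)
    codes = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        codes.append(idx)
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return tuple(codes)


def add_collision_suffix(item_codes):
    """Append a serial number so that items sharing the same codes stay distinct."""
    seen = {}
    result = {}
    for item, codes in item_codes.items():
        serial = seen.get(codes, 0)
        seen[codes] = serial + 1
        result[item] = codes + (serial,)
    return result
```

Because the first codebook captures the coarsest part of the embedding and later ones refine the residual, items with similar content share ID prefixes, which is where the hierarchy property comes from.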

The encoder and decoder are four-layer transformers, each with six attention heads of dimension 64, ReLU activation, an MLP dimension of 1024, and an input dimension of 128. 1024 (256 × 4) tokens for the codebooks and 2000 tokens for users were added to the token dictionary. As a result, the model has 13 million parameters. Each example in the dataset looks like this: hash(user_id)% 2000,

The authors conduct an ablation study for semantic IDs: they try replacing them with LSH-based and random IDs. Compared to both alternatives, semantic IDs give a large quality gain and turn out to be an important component of the approach. They also analyze the model's ability to generalize to new items. To do this, 5% of the items are removed from the dataset, and during inference the share of new candidates in the top-k (those whose first three IDs match) is set as a separate hyperparameter; the model is then compared with KNN.

The article turned out to be largely academic, but it draws attention to an important direction that is now actively developing. A similar approach can be used to encode items for LLMs, which, judging by conversations at the conference, is already being actively pursued. The article also leaves some important questions open: how to add new items and how to retrain the RQ-VAE (in real services, the content distribution often changes). It would also be good to see a comparison on more realistic datasets.

Better Generalization with Semantic IDs: A Case Study in Ranking for Recommendations

A much-discussed article from Google DeepMind. The authors propose encoding document content as several tokens using a VAE and vector quantization, an approach originally proposed in another article. Each document is represented as a fixed-length set of tokens. The result is a clever dictionary for encoding documents, where one document = several tokens. The authors claim that it works not much worse than trainable IDs (without collisions), while the embedding matrix is radically smaller and collisions in it carry semantic meaning.

The approach works better than content embeddings because the vectors for tokens are trained end-to-end with the top model for the recommendation task. The authors also tried to train a small head on top of content embeddings, but it turned out worse in quality. In addition, due to the hierarchical nature of tokens, a decoder can be trained on them, which was described in the article Recommender Systems with Generative Retrieval, which I described in detail above.

Text2Tracks: Generative Track Retrieval for Prompt‑based Music Recommendation

The authors consider the task of recommending music based on text queries, for example: old school rock ballads to relax, songs to sing in the shower, etc. The effectiveness of the model is explored on broad queries that do not imply a specific artist or track.

There are different ways to recommend a track based on a text query. For example, ask a language model a question, parse the answer, and find tracks through search. But this can lead to model hallucinations or search ambiguity: sometimes completely different tracks can have the same title. In addition, prediction can take a lot of time and require significant computational resources.

The authors propose fine-tuning an encoder-decoder model (flan-t5-base) to generate a track identifier directly from the text input, inspired by the differentiable search index approach. The main question the article answers is how best to encode a track. To do this, they compare several approaches:

a track is encoded as a random natural number, which is input as text. For example, 1001, 111, etc.;

a track is encoded as two numbers: the artist ID and the track ID within the artist. That is, the tracks of artist 1 will be represented as 1_1, 1_2... Separate tokens are added to the dictionary for the top 50k artists;

each track is described by a list of IDs based on hierarchical clustering of content (playlist names with the track) or collaborative embeddings (word2vec). A separate token is added for each cluster.
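The second strategy from the list above (artist ID plus track ID within the artist) can be sketched like this. The function name, the input format, and the numbering order are assumptions for illustration; the article additionally adds dedicated tokens for the top 50k artists, which is not modeled here.

```python
from collections import defaultdict


def artist_track_ids(tracks):
    """tracks: list of (track_uri, artist_name) pairs.

    Returns track_uri -> "<artist_index>_<track_index_within_artist>".
    """
    artist_index = {}
    per_artist_count = defaultdict(int)
    ids = {}
    for uri, artist in tracks:
        if artist not in artist_index:
            artist_index[artist] = len(artist_index) + 1
        per_artist_count[artist] += 1
        ids[uri] = f"{artist_index[artist]}_{per_artist_count[artist]}"
    return ids


ids = artist_track_ids([("t1", "A"), ("t2", "B"), ("t3", "A")])
```

Tracks by the same artist share a prefix, so the decoder can first "decide" on an artist and then on a specific track.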

These strategies significantly reduce the number of tokens needed to represent a track compared to a text description. The result was as follows: the second approach (artist ID + track ID) performed best. The approaches with clustering of collaborative embeddings and track ID as a natural number performed the worst.

The authors use popularity, BM25, and a two-tower encoder (all-mpnet-base-v2), which they fine-tune with multiple negatives ranking loss, as the main baselines. They compare the models on three datasets: MPD 100k, CPCD, and Spotify editorial playlists.

The researchers show that their model significantly outperforms the baselines on all datasets. In the future, they plan to explore the capabilities of decoder-only architectures and the use of user history for personalized recommendations.

Industrial Articles

Joint Modeling of Search and Recommendations Via an Unified Contextual Recommender (UniCoRn)

In another interesting talk from ACM RecSys, Netflix developers share their experience of combining models for personalized search and recommendations. The article rests on two premises. First, it is easier to maintain one model in production than several. Second, the quality of a combined model can be higher.

The presented architecture is trained on three tasks: personal recommendations, personalized search, and recommendations for the current video. For this, the neural ranker receives the search query, the ID of the current entity (video), the user ID, the country, and the ID of the task being solved (search or one of the rankings). The ranker also receives an embedding of the user's action history produced by the so-called User Foundation Model, whose details are disclosed neither in the conference paper nor in response to a direct question after the oral presentation.

Some inputs are missing in some tasks (for example, there is no search query in the recommendation task). After a series of experiments, the authors decided that in the search task it is better to substitute a separate null value for the missing context, and in the recommendation task to use the name of the current video in place of the query string.

The authors noted that before this approach was introduced, when the user had typed only the first few letters of a search query, the results shown did not match the user's interests, because search was not fully personalized. Now this problem has been solved. The talk also confirms that the candidate selection logic for search and for recommendations, predictably, turned out to be different.
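A rough sketch of how one shared input could be assembled for the three tasks, following the imputation choices described above. The field names, the task labels, and the `<null>` placeholder are hypothetical, and the handling of the plain personal-recommendations task (no query and no current video) is an assumption, since the review does not spell it out.

```python
NULL = "<null>"  # hypothetical placeholder token for a missing field


def build_ranker_input(task, user_id, country, query=None, current_video=None, titles=None):
    """Assemble one feature dict shared by all three tasks."""
    titles = titles or {}
    if task == "search":
        context_video = NULL                      # no source video in search
        query_text = query if query else NULL
    elif task == "video_to_video":
        context_video = current_video
        # no query in recommendations: use the current video's title instead
        query_text = titles.get(current_video, NULL)
    else:
        # "personal_recs": assumption, the source does not say what is used here
        context_video = NULL
        query_text = NULL
    return {
        "task": task,
        "user_id": user_id,
        "country": country,
        "query": query_text,
        "context_video": context_video,
    }
```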

Results: a 7% increase in offline quality for search and a 10% increase for recommendations. This is apparently due to the regularization that comes from training on several tasks and to the transition to full personalization in search.

Encouraging Exploration in Spotify Search through Query Recommendations

Spotify talked about how they implemented query suggestions in search. They collect queries from various sources: the catalog (tracks, artists, albums, playlists), queries from other users, queries of the form artist + mix/covers, and queries generated by an LLM from metadata. All of this is sent to a ranker trained on search logs, and the top-4 results are shown to the user. Results: +9% exploratory queries (searches for new content) and +10% average query length.

Ranking Across Different Content Types: The Robust Beauty of Multinomial Blending

A simple but sensible product idea from Amazon Music: let product teams set the proportions of content types. Two models are used for this: one ranks carousels, and the other ranks content within carousels. Once the carousels are ranked, they are grouped by content type, a type is sampled in proportion to the weights set by the product teams, and the most relevant carousel of the sampled type is selected. In an A/B test, this approach was compared with a system based on an MMR-like algorithm and showed excellent metric growth.

Previously, the authors used linear Thompson sampling for ranking; now they use a neural network that is trained online on a subsample of logs with a delay of tens of seconds. They are actively experimenting with sequential models, but these are not yet in production.
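The blending step can be sketched as follows, assuming carousels arrive with relevance scores from the first model. The function name, the tuple format, and the stop condition for zero-weight types are assumptions for illustration.

```python
import random


def blend(carousels, type_weights, n_slots, rng=random):
    """carousels: list of (carousel_id, content_type, relevance_score)."""
    # Group carousels by content type, most relevant first within each type.
    by_type = {}
    for cid, ctype, _score in sorted(carousels, key=lambda c: -c[2]):
        by_type.setdefault(ctype, []).append(cid)

    page = []
    while len(page) < n_slots and by_type:
        types = list(by_type)
        weights = [type_weights.get(t, 0.0) for t in types]
        if sum(weights) <= 0:
            break  # only zero-weight content types are left
        chosen = rng.choices(types, weights=weights, k=1)[0]
        page.append(by_type[chosen].pop(0))  # best remaining carousel of that type
        if not by_type[chosen]:
            del by_type[chosen]
    return page
```

The appeal of this design is that the product-level knob (the weights) is decoupled from the relevance models: changing the share of a content type does not require retraining anything.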

Large scale

The poster about the Jagged Flash Attention algorithm: the idea is to avoid padding the user's history and instead pack it into two tensors, one with the contiguous histories and one with the history sizes.

The authors promise to open-source the code soon. They reported inference speedups but did not say for which batch sizes and history lengths the figures were obtained. In the training speedup plots, the baseline always pads to the maximum length rather than to the maximum length in the batch, so the figures are inflated. Overall, though, the work is very useful.
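The jagged layout itself is easy to show with plain lists. This sketch covers only the data layout, not the attention kernel, and the function names are hypothetical.

```python
def to_padded(histories, pad=0):
    """Dense layout: every history is padded to the longest one in the batch."""
    max_len = max(len(h) for h in histories)
    return [h + [pad] * (max_len - len(h)) for h in histories]


def to_jagged(histories):
    """Jagged layout: one flat list of values plus the length of each history."""
    values, lengths = [], []
    for h in histories:
        values.extend(h)
        lengths.append(len(h))
    return values, lengths


def from_jagged(values, lengths):
    """Recover the per-user histories from the packed representation."""
    out, start = [], 0
    for n in lengths:
        out.append(values[start:start + n])
        start += n
    return out


histories = [[1, 2, 3], [4], [5, 6]]
values, lengths = to_jagged(histories)
```

For this batch the padded layout stores 9 cells and the jagged one stores 6 values; the gap grows as history lengths become more skewed.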

Sliding Window Training: Utilizing Historical Recommender Systems Data for Foundation Models

Researchers at Netflix are training a foundation model for downstream tasks. Essentially, this is SASRec: the model predicts the next item. Different history lengths are used in different epochs (the length is fixed for the entire epoch). For each user, one random window of the specified length is selected per epoch. Only IDs are fed into the model; the action type is used only in the loss, where losses for different action types are mixed with different weights. The user's history consists of different kinds of positives: clicks, views, etc.

The authors do not fine-tune the model on downstream tasks; they simply feed the resulting embeddings into the upper model. They did not try lookahead or adding the action type to the model input. The embedding dimension is 64. The loss is a full softmax over the entire catalog.
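The window sampling can be sketched as follows. The function names and the per-epoch length schedule are assumptions, and the action-type-weighted loss is not shown.

```python
import random


def sample_window(history, window_len, rng=random):
    """Pick one random contiguous window of at most window_len events."""
    if len(history) <= window_len:
        return list(history)
    start = rng.randrange(len(history) - window_len + 1)
    return history[start:start + window_len]


def epoch_batches(user_histories, window_len_per_epoch, rng=random):
    """One window per user per epoch; the window length is fixed within an epoch."""
    for epoch, window_len in enumerate(window_len_per_epoch):
        yield epoch, [sample_window(h, window_len, rng) for h in user_histories]
```

Over many epochs, different windows of a long history get sampled, so older interactions contribute to training without ever feeding the full history into the model at once.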

Other

Do Not Wait: Learning Re‑Ranking Model Without User Feedback At Serving Time in E‑Commerce

The idea of the article: given a re-ranking function and a function that approximates the reward for a user and a list, the parameters of the ranking function can be "adjusted" at runtime towards maximizing the evaluation function. Such adjustments can be applied several times to obtain a ranking model that works better than the original one.

The authors claim that they increased the number of orders per user by 2%. Clicks, however, increased by only 0.08%, which looks very strange next to the growth in orders. The ranking function is some kind of Thompson sampling, and the argmax is found using a REINFORCE-like method. Interesting, but the practical benefit is questionable.

AIE: Auction Information Enhanced Framework for CTR Prediction in Online Advertising

A rather interesting framework. The authors added scaled CPC as the weight of positives in the log loss and achieved metric growth (expressed in money) in an A/B test. Unfortunately, the authors did not explain the theoretical motivation; apparently, some very general intuition worked. Offline, they mainly use AUC and csAUC, which usually translate well into online metrics.
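The core change to the loss can be sketched like this. How exactly CPC is scaled is not described in the review, so the `scale` multiplier is a hypothetical stand-in, as are the function and argument names.

```python
import math


def weighted_log_loss(labels, probs, cpcs, scale=1.0, eps=1e-12):
    """Binary log loss where each positive (click) is weighted by its scaled CPC."""
    total = 0.0
    for y, p, cpc in zip(labels, probs, cpcs):
        p = min(max(p, eps), 1.0 - eps)     # keep log() finite
        if y == 1:
            total += -(scale * cpc) * math.log(p)
        else:
            total += -math.log(1.0 - p)     # negatives keep weight 1
    return total / len(labels)
```

The effect is that mistakes on expensive clicks cost more than mistakes on cheap ones, which pushes the model towards revenue rather than plain click accuracy.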

Conclusions

Recommendation models are becoming more and more like language models every day. Large companies are either growing their models to the size of LLMs or moving towards using LLMs directly for recommendations. It seems that this is the main trend for the coming years, and we will soon see production models whose size (specifically in the dense part) is comparable to current LLMs. The largest companies also believe in applying large language models to retrieval and ranking, which means there will be more articles, more resources, and more results.
