How to do business in Open Source

Interview with Emeli Dral, co-founder and CTO of Evidently AI. Evidently's open-source library for evaluating, testing, and monitoring the quality of data and machine learning models has already been downloaded more than 22 million times.

On September 26, Emeli will speak at AI Conf 2024 with a talk titled "Beyond the Script: Continuous Testing for Adaptive and Safe LLM Systems". In the meantime, we asked her about her career path, knowledge transfer, the skills the profession requires, and the future of the industry.

— How did your career in Data Science begin? Why this path?

— My journey began at the Yandex School of Data Analysis (YSDA). I enrolled after my third year at RUDN University, where I studied applied mathematics and computer science. I graduated from YSDA in 2012, but I started working during my first year there: I was invited to an internship at Yandex, and after completing it, I was offered a job as a search quality analyst.

That is where I got into machine learning and Data Science, even though I had been studying to become a developer. At the time, few analysts in the department wrote code for distributed computations, but there were plenty of tasks that required analyzing large amounts of data: terabytes of search logs. I knew how to write the necessary scripts quickly, so it was a good match.

— What cases did you solve using machine learning?

— My favorite is optimizing a gas fractionation plant. Such plants consist of several distillation columns that separate a gas mixture, and the kind of separation you get depends on the equipment settings. The main goal is to extract pure fractions from the mixture at the output. That lets you meet the product standard and get something suitable for sale or for use in industry.

Such a system is hard to model in real time because you never know the chemical composition of the natural gas that will come in next. You can't stop the plant, wait for a chemical analysis, and only then go back to adjusting the temperatures on the control trays. You have to adapt those temperatures continuously and manage the reflux flow to keep it optimal.

The plant already had a working system, but it turned out that machine learning could improve it by predicting the chemical composition of the incoming gas, even with a short horizon of just a few minutes.

The project was complex: it combined thermodynamic models, predictive machine learning models, and optimization problems on top of all that. That is where my entire mathematical background came in handy.

— And how did the technology stack change as you grew? What did you need to know when you first entered the profession, roughly 12 years ago?

— To build models on large volumes of data, you often had to write code in compiled programming languages. There were far fewer convenient libraries that automated model training, so people often implemented algorithms themselves. In fact, that is how many implementations in low-level languages such as C, C++, or Java came about. Over time, these algorithms were made available, so to speak, in syntactically simple interpreted languages. In practice, this meant that from Python or R you could call a training procedure that was written in C, compiled, and ran fast, while the analyst wrote only the calling code, the wrapper. This mini-revolution opened the way into Data Science for many mathematicians. That is when libraries like scikit-learn in Python and many packages in R appeared.
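To make the "thin wrapper" idea concrete, here is a minimal sketch that uses Python's standard `ctypes` module to call the C library's `qsort`: the heavy loop lives in compiled C, and the Python side only prepares the data and makes the call. This illustrates the pattern only; it is not how scikit-learn or MatrixNet are actually bound (they ship their own compiled extension modules). It assumes a Unix-like system where `ctypes.util.find_library("c")` can locate libc, and the names `c_sort` and `CMP_DOUBLE` are hypothetical.

```python
import ctypes
import ctypes.util

# Assumption: Unix-like system. If find_library returns None, CDLL(None)
# falls back to the symbols already loaded into the Python process.
_libc = ctypes.CDLL(ctypes.util.find_library("c"))

# C signature of the comparator: int cmp(const double *a, const double *b)
CMP_DOUBLE = ctypes.CFUNCTYPE(
    ctypes.c_int, ctypes.POINTER(ctypes.c_double), ctypes.POINTER(ctypes.c_double)
)

# void qsort(void *base, size_t nmemb, size_t size, int (*compar)(const void *, const void *))
_libc.qsort.argtypes = [ctypes.c_void_p, ctypes.c_size_t, ctypes.c_size_t, CMP_DOUBLE]
_libc.qsort.restype = None


def _compare(a, b):
    """Comparator called back from C: returns -1, 0 or 1."""
    x, y = a[0], b[0]
    return (x > y) - (x < y)


# Keep a module-level reference so the callback object is not garbage-collected.
_compare_c = CMP_DOUBLE(_compare)


def c_sort(values):
    """Thin Python wrapper: the sorting loop itself runs inside compiled C (qsort)."""
    buf = (ctypes.c_double * len(values))(*values)  # copy into a contiguous C array
    _libc.qsort(buf, len(buf), ctypes.sizeof(ctypes.c_double), _compare_c)
    return list(buf)


print(c_sort([3.5, -1.0, 2.25, 0.0]))
```

Real ML libraries avoid calling back into Python inside the inner loop; the comparator is written in Python here only to keep the example self-contained with the standard library.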

At Yandex, tools like MatrixNet became available from Python. Then TensorNet appeared (not to be confused with TensorFlow; it is a different technology). Experiments became easier and faster to run. It was very cool, and many analysts from different parts of the company worked with it.

A major milestone was the growth of Kaggle, a platform for competitive data analysis. Competition participants all received the same datasets and used their knowledge of statistics and machine learning to optimize their models. After a competition ended, the models were scored on a fixed hidden dataset to see who came out on top. It was an era of competitions: few people wrote training code from scratch, but almost everyone did feature engineering and hyperparameter optimization and built ensembles of models.
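The competition workflow described above (tune on the data you are given, then get scored once on a hidden set) can be sketched in a few lines of plain Python. Everything here is hypothetical: the two "models" are just fixed lists of predictions, and the weight grid is arbitrary. The sketch only shows a simple model composition (a weighted blend), a small hyperparameter search for the blend weight on a public validation split, and a final score on a held-out test split.

```python
def mse(y_true, y_pred):
    """Mean squared error between two equal-length sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def blend(preds_a, preds_b, w):
    """Weighted average of two models' predictions (a simple model composition)."""
    return [w * a + (1 - w) * b for a, b in zip(preds_a, preds_b)]


def pick_weight(y_val, a_val, b_val, grid):
    """Hyperparameter search: return the blend weight with the lowest validation MSE."""
    return min(grid, key=lambda w: mse(y_val, blend(a_val, b_val, w)))


# Hypothetical predictions: model A overshoots by 0.2, model B undershoots by 0.2.
y_val, a_val, b_val = [1.0, 2.0, 3.0, 4.0], [1.2, 2.2, 3.2, 4.2], [0.8, 1.8, 2.8, 3.8]
# The "closed" test split: participants never see it; it is scored once at the end.
y_test, a_test, b_test = [2.0, 5.0], [2.2, 5.2], [1.8, 4.8]

w = pick_weight(y_val, a_val, b_val, grid=[0.0, 0.25, 0.5, 0.75, 1.0])
print("chosen weight:", w)
print("hidden-test MSE, blend:  ", mse(y_test, blend(a_test, b_test, w)))
print("hidden-test MSE, model A:", mse(y_test, a_test))
```

Because the two models err in opposite directions, the blend beats either one alone; real competition ensembles exploit the same effect with far less tidy errors.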

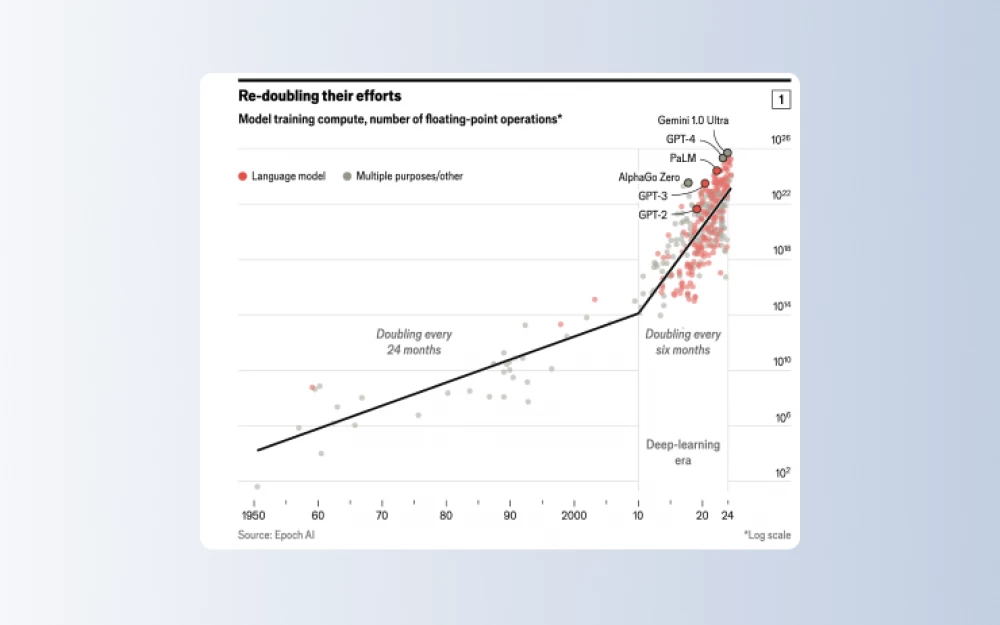

Neural networks developed in bursts. For example, convolutional neural networks appeared, which made it possible to work effectively with images. Then came recurrent neural networks, and then waves of papers on various neural network architectures. It was amazing! You could take a paper, read how the method works, implement it, and immediately get results. You could go to a conference, learn about a new architecture, implement it, and get a 3% or 5% accuracy gain with a powerful economic effect.

Today we are all seeing rapid growth in the market for pretrained models available through third-party APIs. They are incredibly convenient for a quick start and relatively cheap, especially now that competition in the market is high.

Fine-tuning has also become possible. You no longer need to be a huge corporation that has downloaded the entire internet to get high-quality solutions, even for very specific tasks. Of course, you can still train models from scratch, but proportionally this is done less often and more deliberately.
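Mechanically, fine-tuning means continuing training from parameters that were already learned elsewhere, using a small task-specific dataset, instead of starting from scratch. The toy sketch below shows that idea with a two-feature logistic model and plain gradient descent in standard-library Python. The "pretrained" weights, the dataset, the learning rate, and the step count are all hypothetical, and real LLM fine-tuning involves billions of parameters and dedicated tooling; only the principle carries over.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 for feature vector x under a logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def log_loss(w, b, data):
    """Average binary cross-entropy over (x, y) pairs."""
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = min(max(predict(w, b, x), eps), 1 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def fine_tune(w, b, data, lr=0.5, steps=100):
    """Continue full-batch gradient descent from existing (pretrained) parameters."""
    w = list(w)
    n = len(data)
    for _ in range(steps):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in data:
            err = predict(w, b, x) - y  # derivative of the log loss w.r.t. the logit
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Hypothetical "pretrained" parameters that mostly rely on the first feature.
pretrained_w, pretrained_b = [1.5, -0.5], 0.0
# Small task-specific dataset where the label actually follows the second feature.
task_data = [([1.0, 1.0], 1), ([0.0, 1.0], 1), ([1.0, 0.0], 0), ([0.0, 0.0], 0)]

tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, task_data)
print("loss before:", round(log_loss(pretrained_w, pretrained_b, task_data), 3))
print("loss after: ", round(log_loss(tuned_w, tuned_b, task_data), 3))
```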

— Tell us about Evidently AI! Is it possible to build a business on open source?

— In 2018, I left Yandex to start my first startup, which applied AI in industry. We mostly worked with steel production, but not only. That is when I noticed the same problems recurring over and over.

You work on a project that takes off well, but its quality doesn't hold up for long. Then problems begin: the model recommends the wrong things or predicts poorly. It quickly becomes clear that you lack convenient infrastructure for basic observability. The team doesn't know whether there is data drift, concept drift, or a data quality issue until a model user comes and says that the AI has stopped working. Then you load the data and see that, for example, the model should have been retrained two months ago because the data had changed. So you retrain it and wonder why this cycle still isn't automated.
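One common way to automate the "has the data changed?" question for a numerical feature is a two-sample Kolmogorov-Smirnov test: compare the feature's distribution in a reference window (for example, the training data) with a recent production window. Below is a minimal standard-library sketch using the large-sample critical value c(α)·sqrt((n + m)/(nm)), with c(α) ≈ 1.358 for α = 0.05. This is not Evidently's implementation or API; the data windows and the "schedule retraining" action are hypothetical placeholders.

```python
import math


def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    n, m = len(ref), len(cur)
    i = j = 0
    d = 0.0
    while i < n and j < m:
        x = min(ref[i], cur[j])  # next distinct value in the pooled sample
        while i < n and ref[i] <= x:
            i += 1
        while j < m and cur[j] <= x:
            j += 1
        d = max(d, abs(i / n - j / m))
    return d


def drift_detected(reference, current, c_alpha=1.358):
    """Flag drift when D exceeds c(alpha) * sqrt((n + m) / (n * m)).

    c_alpha = 1.358 corresponds to a significance level of about 0.05 (large-sample approximation).
    """
    n, m = len(reference), len(current)
    critical = c_alpha * math.sqrt((n + m) / (n * m))
    return ks_statistic(reference, current) > critical


# Hypothetical feature values: the training-time reference vs. two later production windows.
reference = list(range(100))
stable_window = [k + 0.5 for k in range(100)]
shifted_window = [k + 50 for k in range(100)]

for name, window in [("stable", stable_window), ("shifted", shifted_window)]:
    if drift_detected(reference, window):
        print(f"{name}: drift detected, schedule retraining")
    else:
        print(f"{name}: no significant drift")
```

Run on a schedule for each feature, a check like this turns "a user says the AI stopped working" into an alert that fires as soon as the input distribution moves. It does not detect concept drift on its own, since that also requires ground-truth labels.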

As our startup grew, it accumulated a long tail of projects that needed maintenance. It became clear to me that advanced ML needed more tools to automate the processes around applications running in production.

That is how Evidently AI came about.

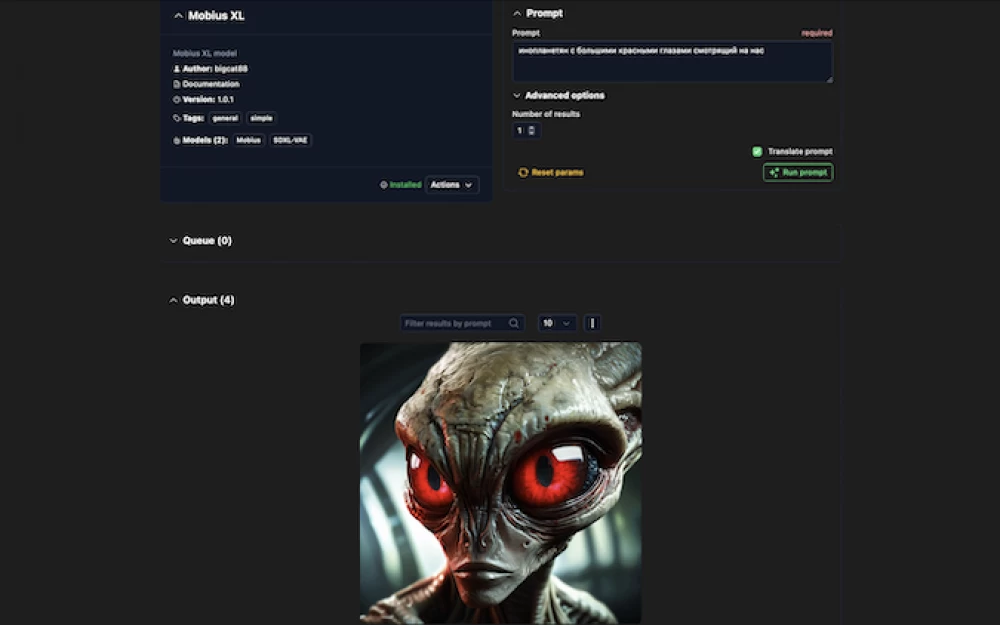

At first, it was an open-source library that helped calculate data drift, the flagship feature Evidently started with. Then the library grew and unexpectedly became a fairly popular tool for testing and monitoring models. Now it offers more than 100 metrics and tests, supports different types of data, and has been downloaded more than 22 million times.

Then a platform appeared alongside the library! It made the analysis accessible not only to technical users but also to managers and product analysts.

Interestingly, the story from my industrial work is now repeating with LLMs: active adoption has begun, and with it come problems and the need for advanced observability.

We have already added LLM support to the open-source library, launched recently, so generative models are not left out! In general, I believe an observability tool should be open source. How can you trust validation results if you don't understand how they are produced?
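As an illustration of validation whose logic is fully visible, here is a minimal sketch of rule-based checks on a single LLM response in standard-library Python. The check names, the email pattern, and the length limit are hypothetical choices for this example; this is not Evidently's LLM evaluation API.

```python
import re
from dataclasses import dataclass

# Deliberately simple pattern for something that looks like an email address.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class CheckResult:
    name: str
    passed: bool


def check_response(text, max_chars=500, required_terms=(), banned_terms=()):
    """Run simple, fully inspectable checks on one LLM response."""
    lowered = text.lower()
    results = [
        CheckResult("non_empty", bool(text.strip())),
        CheckResult("within_length", len(text) <= max_chars),
        CheckResult("no_email_leak", EMAIL_RE.search(text) is None),
    ]
    for term in required_terms:
        results.append(CheckResult(f"mentions:{term}", term.lower() in lowered))
    for term in banned_terms:
        results.append(CheckResult(f"avoids:{term}", term.lower() not in lowered))
    return results


answer = "Contact admin@example.com and we guarantee a fix today."
for result in check_response(answer, required_terms=("password",), banned_terms=("guarantee",)):
    print(f"{result.name:18} {'PASS' if result.passed else 'FAIL'}")
```

Because each rule is a few lines of readable code, a failed check can be traced to exactly why it failed. In practice such deterministic checks are usually combined with model-based evaluations, which are harder to inspect.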

— What inspires you about the very idea of open source, and how important are such products for Data Science?

— The community comes first, and in machine learning that works.

When the wave of machine learning was just rising, not everyone believed that so many services would come to depend on this technology. Scientists, statisticians, and developers shared openly with one another to understand it better.

Almost all papers and algorithm implementations were open, and that is why the field grew so quickly. You could take code written, say, in China, use it, and improve on it. There were practically no visible borders. You post on a forum or mention at a conference that something isn't working, and while you sleep in Europe, people in California look at it, respond, and help. The support is huge and the involvement is great.

I sincerely believe that AI and ML are exactly where open source will work today. In our field, almost every closed-source tool has an open-source counterpart. Take models, for example: alongside those from OpenAI or Anthropic, we find open models such as Llama and Mistral. I don't mean that Anthropic's models are worse, only that an open-source alternative exists and the community supports it very well. There are many open tools and courses in AI, and I want to keep that going.

— How useful are Data Science conferences?

— From a networking point of view, conferences are simply great! For me, the most valuable thing about them is the chance to quickly meet many colleagues from the professional community and exchange opinions, ideas, problems, and solutions. These conversations often grow into projects, and that energizes me a lot! Everything happens much faster and with more energy than in written correspondence, and I always come back inspired with a bunch of new ideas. People are very important to me when it comes to doing new things, so large conferences are a big, joyful event for me.

On September 26, I will be speaking at AI Conf 2024 with a presentation “Beyond the Script: Continuous Testing for Adaptive and Secure LLM Systems”. This is the largest AI conference in Russia. We will discuss industry trends and their practical application.
