- AI

- A

Processing and comparing marketplace products on LLM

There is a classic problem on any e-commerce platform related to how to categorize and understand product descriptions. It is especially exacerbated by the fact that users create confusing descriptions even for the simplest products. For example, a regular blue T-shirt can be described as sky blue or even dark blue aquamarine.

Some sellers manage to cram product information directly into the image itself, drawing on a poorly lit photo with bright green letters: "The best T-shirt in the world!". As a result, two identical products may look like they are from different universes.

As a result, searching, categorizing, and analyzing these products becomes a real headache (and a guarantee of employment for data specialists).

Of course, over the years, different ways have emerged to deal with this:

1. Matcher specific to each category

Create a separate "matching" model or algorithm for each product category - for electronics, clothing, cosmetics, and so on, down to subcategories. This approach is highly specialized and works, but it can turn into a real headache if you have 100,500 categories.

2. Candidate search using embeddings

Embeddings are vector representations of data (e.g., product descriptions or names) that determine their similarity. Using text or image processing methods (e.g., word2vec, sentence-transformers), you can find similar products based on the proximity of the resulting embeddings.

3. Attribute extraction for each product

Product information (such as brand, model, color, size, etc.) is extracted from descriptions, for example through regex, to analyze and match products at a deeper level.

4. Gradient boosting

Gradient boosting algorithms (CatBoost, etc.) are applied to classification tasks, determining whether products are similar. These models are trained on pre-labeled data and take into account both textual and numerical attributes.

All this really helps up to a certain point, but people are amazingly inventive in describing things. Feature hell is a reality when there are 400 ways to say "convenient," and outwardly identical clothing can be called completely different words ("Hemp Eco T-shirt" vs. "100% Plant-Based Eco Top").

And in the field of clothing and similar products, which can be identical but described completely differently, matching can only be done by photographs, based on color, shape, and fabric structure, i.e., the main information will still not be in the product description.

The new approach is to use the multimodal capabilities of LLM (language processing AI models) and Vision-Language Models (VLM) to solve this problem. Here is the general scheme:

Candidate search using embeddings

Essentially, the first step remains the same as before. Embeddings generated by advanced LLMs (such as OpenAI models or Sentence-Transformers) can be used to find potential matches by comparing vectors of descriptions or product attributes.Attribute extraction for each product using LLM

LLMs are used to extract specific attributes (e.g., brand, color, size, material) from product text descriptions.

For example, "Stylish bright red cotton T-shirt for men" is broken down into: color=red, material=cotton, targeted demographic=men, etc.

(See examples below.)Matching two products based on extracted attributes using LLM

Once the attributes are extracted, LLMs use them to compare two products and determine if they match.

Models can take into account both explicit similarities (e.g., same brand and size) and implicit ones (e.g., "eco-friendly" and "sustainable").

For example, matching "Men's Nike Air Max sneakers" with "Nike Air Max shoes for men" by recognizing that they are the same product.

(See examples below.)Image-based matching using VLM (Vision-Language Models)

Some attributes, such as color, design, or unique pattern, are better analyzed visually rather than textually. VLMs combine visual and textual data for more accurate product matching. These models analyze product images along with their descriptions to better understand the product. For example: matching an image of a black leather bag with another similar bag by identifying visual characteristics (shape, texture, etc.) and combining them with textual data.

Advantages of LLM

More accurate matching. Fewer false mismatches (e.g., when you received a "turquoise" T-shirt instead of a "dark green" one).

Accuracy in the range of 90-99%, especially with finely tuned models (both precision and recall).

Processing diverse data. Text, images, random emojis — anything.

Improved understanding of product descriptions and visual characteristics.

No specialized training required: no need to create and maintain 50 different specialized matching algorithms.

Disadvantages

Requires much more computational power and is more expensive

Latency is not great. Works well for batch processing, but not for real-time.

Overall, for some categories, there is no point in using LLM if it can be done without it. If the category is simple and standard algorithms cope, it will be much cheaper. LLM is suitable for processing medium and complex categories. That is, it is better to use LLM in combination with standard methods to optimize cost, speed, and quality.

Attribute extraction using LLM

Example prompt:

I have a product card from the "Refrigerators" category on the marketplace. I need to extract and format attributes from it.

key_attributes_list = [

{

"name": "model",

"attribute_comment": "Refrigerator model. Include the brand or company name, but exclude color and size."

},

{

"name": "capacity",

"attribute_comment": "Total refrigerator capacity, usually measured in liters (L). Look for terms like 'Total capacity'. If unavailable, set the value to null."

},

{

"name": "energy_efficiency",

"attribute_comment": "Extract the energy efficiency class, such as 'A++', 'A+' or 'B'. Look for terms like 'Energy efficiency class'. If unavailable, set the value to null."

},

{

"name": "number_of_doors",

"attribute_comment": "Number of doors, such as '1', '2' or 'Side-by-side'. Look for terms like 'Doors', 'Number of doors'. If absent, set the value to null."

},

{

"name": "freezer_position",

"attribute_comment": "Freezer position, such as 'Top', 'Bottom' or 'Side'. Extract from terms like 'Freezer position'. If not specified, set the value to null."

},

{

"name": "defrost_system",

"attribute_comment": "Type of defrost system, such as 'No Frost' or 'Manual defrost'. Look for terms like 'Defrost system'. If not mentioned, set the value to null."

},

{

"name": "dimensions",

"attribute_comment": "Include height, width, and depth in centimeters (e.g., '180x70x60'). Look for terms like 'Dimensions'. If unavailable, set the value to null."

}

]Result:

extracted_attributes = {

"model": "Upstreman UF70",

"capacity": "7.0 cubic feet",

"energy_efficiency": "ENERGY STAR certified",

"number_of_doors": "1",

"freezer_position": "Convertible",

"defrost_system": "Low frost design",

"dimensions": "56.1x21.3x22.76 inches",

}Mapping using LLM

Example of color comparison

Prompt:

There are two product cards from the "Freezers" category on the marketplace. Compare the "color" attribute. The answer should include:

is_match: A boolean value indicating whether the values match.reason: A detailed explanation of the comparison result. The response format is in JSON.

Product Card 1: | Product Card 2: |

Model: Upstreman UF70 Color: White | Model: Midea WHS-109FSS1 Color: Stainless Steel |

Result:

{

"is_match": false,

"reason": "Color attributes do not match. Product 1 has color 'White', while Product 2 has 'Stainless Steel'."

}Comparison of multiple attributes

Prompt:

There are two product cards from the "Freezers" category on the marketplace. Compare their attributes to determine if the two products are similar enough to be considered the same model. Attributes for comparison:

Model Name: Remove spaces for comparison.Capacity: Matches if the difference is less than or equal to 0.2 cubic feet.Energy Efficiency: Matches if both have ENERGY STAR certification or identical efficiency ratings.Dimensions: Matches if the difference in height, width, and depth is no more than 1 inch.Color: Matches only if the color is identical.

The response should include:

is_match: A boolean value indicating whether the two products are considered the same model.reason: A detailed explanation of the comparison result. The response format is in JSON.

Product Card 1: | Product Card 2: |

Model: Upstreman UF70 Capacity: 7.0 cubic feet Energy Efficiency: ENERGY STAR certified Dimensions: 56.1x21.3x22.8 inches (H x W x D) Color: Pearl White | Model: Upstreman UF70 Capacity: 6.9 cubic feet Energy Efficiency: ENERGY STAR certified Dimensions: 56x21.2x22.9 inches (H x W x D) Color: White |

Conclusion:

{

"is_match": true,

"reason": "The two freezers are considered the same model based on the following comparisons:

1. The model name matches after normalization ('UpstremanUF70').

2. The capacity is similar (7.0 vs 6.9 cubic feet, a difference of 0.1 cubic feet, which is within the allowable range of 0.2 cubic feet).

3. Both have ENERGY STAR certification, indicating identical energy efficiency.

4. The dimensions are almost identical (difference: height 0.1 inches, width 0.1 inches, depth 0.1 inches, all within the allowable error of 1 inch).

5. Both products have similar colors: 'White' and 'Pearl White'."

}Working with Photos and Images

Attribute Extraction

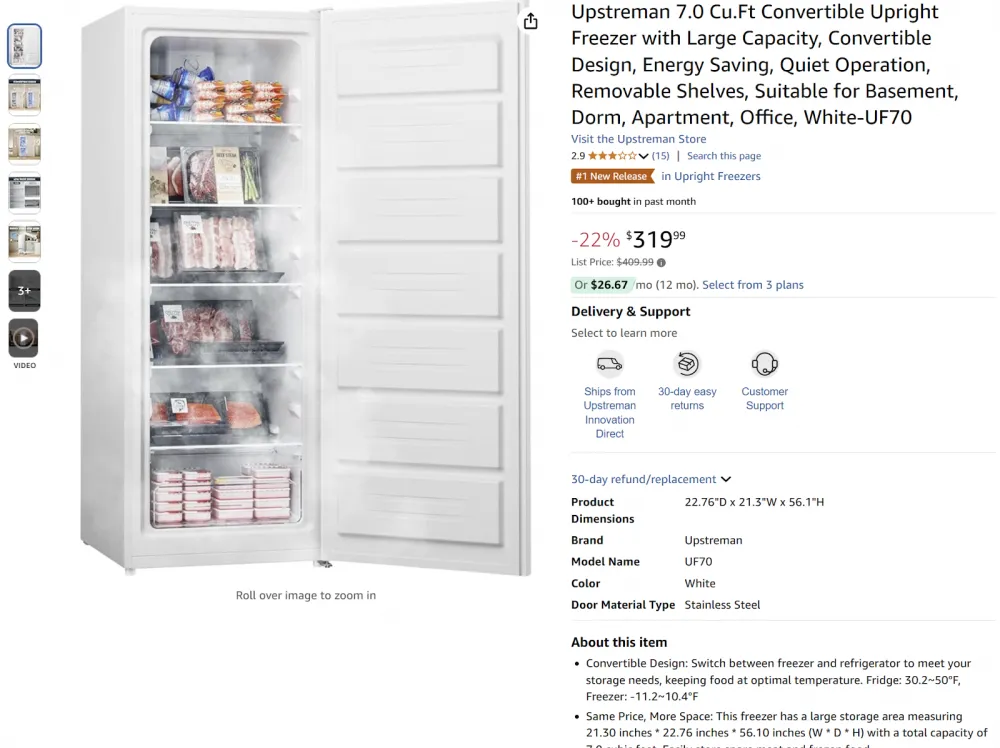

Suppose we have such an image. We will use the same query as for text extraction:

There is a product card from the "Refrigerators" category on the marketplace.

It is necessary to extract and format attributes from it.

key_attributes_list = [

# ...

{

"name": "dimensions",

"attribute_comment": "Include height, width, and depth in centimeters (e.g., '180x70x60'). Look for terms like 'Dimensions'. If unavailable, set the value to null."

}

]Result:

{

"dimensions": "56.10x21.30x22.76 inches"

}Another one:

Output:

{

"category": "Headphones",

"brand": "KVIDIO",

"color": "Black",

"features": [

"Full-size ear cups design",

"Wireless",

"Bluetooth connectivity",

"Soft ear cushions"

]

}Clothing Item Matching

Prompt:

As a fashion expert, you should compare two photos of women's tops. The analysis should focus exclusively on the tops themselves, ignoring:

Any other visible clothing items, even if they are part of the outfit or match the style.Differences in poses, body movements, or how the tops are worn.

Goal: determine whether the tops are identical or absolutely the same. Give a clear answer "Identical" or "Different", accompanied by a brief phrase with an explanation. The answer should be in JSON format.

Result:

LLM was able to detect a slight difference in the neckline, which, being a man, I am still not sure I see.

{

"result": "Different",

"reason": "The tops have different necklines: the first one has a round neckline, and the second one has a boat neckline."

}Here's another example:

{

"result": "Identical",

"reasoning": "Both tops have the same color, design, and fabric characteristics, including long sleeves, a fitted cut, and a light aqua shade."

}Perfecto!

Models and Throughput

Throughput is extremely important for a marketplace, as thousands of items are processed daily, and new ones are added every day. Throughput depends on two factors:

Your hardware

The size and type of model

If you use a 70b model, such as LLama or Qwen, it will work well but slowly. Without a supercomputer, you will face a throughput of 0-5 requests per second for large models on commercially available GPUs.

To improve performance, several steps can be taken:

Using a smaller model trained on your dataset.

The size of the model depends on the task, and you will likely need to train several smaller models, as each of them will be able to handle only a limited number of categories.7b models are usually well-suited for text extraction.

1b models may be suitable for a limited set of finely tuned attributes, and it is possible to obtain such a model through distillation of a large model.

This can increase throughput by 10–20 times. However, it should be noted that smaller models do not handle extracting multiple attributes at once and may struggle with complex queries, so you will need to test them on your task.

Quantization

Quantization can increase the number of requests per second (RPS) by 20–50% without significantly reducing the quality of the model's performance.Scenarios with large volumes of data

In such cases, it makes no sense to use anything other than a self-hosted model, as the costs of using OpenAI or Anthropic will be too high. However, such services are suitable for prototyping and testing ideas.

For self-hosting, based on our experience at Raft, I would recommend using the latest versions of LLama or Qwen models, as they have shown the best results in our tests. Start with 70b for testing, and then optimize to smaller models until the performance satisfies you.

You will likely need to additionally fine-tune the model for specific categories. For example, abbreviations are often encountered in the medical field, as well as in the construction industry. A universal model may not handle such cases well, so in such situations, it will be useful to use the LORA (Low-Rank Adaptation) method.

Besides, consider the language. For example, LLama works well with English, but for Chinese it is likely to hallucinate. In this case, the Gwen model is likely to be a better choice. In Russian, endings, as well as names and their declensions, often cause errors, it is worth paying attention to products where this is one of the attributes: movies, music, books.

Write your questions in the comments.

All the best!

Write comment