Access to multiple Kubernetes pods via TCP protocol and a single external IP

In the Amvera cloud, microservices and user databases run in a Kubernetes cluster. To access applications, it is usually sufficient to use the nginx ingress controller, which works wonderfully with HTTP traffic and allows access to hundreds of services using only one external IP address.

But what if a user wants to reach a deployed DBMS not only from inside the cluster but also from outside? We could give each DBMS its own public ("white") IP address and expose it through a dedicated Service of type LoadBalancer, but that would mean paying to rent every extra address. In this article, I would like to share an elegant way of proxying TCP traffic based on the TLS SNI extension, which lets a single public IP serve hundreds of DBMS instances.

Task Statement

There is a Kubernetes cluster in which a number of different types of DBMS are deployed: Postgres, MongoDB, Redis, etc.

Requirements:

- Provide the ability to connect to these databases remotely.
- Enabling or disabling external access for a specific DBMS should not affect other active connections.
- The number of allocated public ("white") IP addresses is significantly smaller than the number of DBMS instances.

Considered Solution Options

- Nginx

  By default, nginx itself works only with HTTP(S) traffic, so simply deploying an nginx ingress controller is not enough: databases use their own application-level protocols, and we have to work at the transport level with raw TCP streams. Unlike with higher-level protocols, at this level we know nothing about the host the client wants to reach, so classic domain-name-based proxying does not work.

  Nginx does support TCP proxying, but it is configured at the level of the controller's global configuration. To add external access to a new database, you have to change the ConfigMap, which leads to a controller reload and drops established TCP connections. Moreover, each application has to be given its own unique port, which may require firewall reconfiguration.

      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: tcp-services
        namespace: ingress-nginx
      data:
        8001: "my-db/postgres-1:8001"
        8002: "my-db/postgres-2:8002"

- Proxying based on SNI with host name detection

  Server Name Indication (SNI) is an extension of the TLS protocol that lets the client explicitly state, while the secure connection is being established, the host name it wants to connect to. In other words, if the connection is secured with TLS, SNI tells us which domain the client is trying to reach. Conveniently, most modern database clients support SSL/TLS connections.
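To make the idea concrete, here is a minimal Python sketch (not taken from any of the proxies discussed in this article) of how a router can read the host name from the first bytes of a TLS connection. It generates a real ClientHello in memory with the standard `ssl` module and parses the `server_name` extension by hand. The `ROUTES` table and the backend address in it are hypothetical and only illustrate the lookup.

```python
import ssl
import struct


def client_hello_bytes(server_name: str) -> bytes:
    """Return the first TLS flight (ClientHello) a client sends for server_name."""
    ctx = ssl.create_default_context()
    incoming, outgoing = ssl.MemoryBIO(), ssl.MemoryBIO()
    tls = ctx.wrap_bio(incoming, outgoing, server_hostname=server_name)
    try:
        tls.do_handshake()
    except ssl.SSLWantReadError:
        pass  # expected: the client now waits for the server's reply
    return outgoing.read()


def extract_sni(record: bytes):
    """Return the host name from the SNI extension of a ClientHello, or None."""
    try:
        if record[0] != 0x16 or record[5] != 0x01:  # handshake record, ClientHello
            return None
        pos = 5 + 4                      # record header + handshake header
        pos += 2 + 32                    # client version + random
        pos += 1 + record[pos]           # session id
        (suites_len,) = struct.unpack_from("!H", record, pos)
        pos += 2 + suites_len            # cipher suites
        pos += 1 + record[pos]           # compression methods
        (ext_total,) = struct.unpack_from("!H", record, pos)
        pos += 2
        end = min(pos + ext_total, len(record))
        while pos + 4 <= end:
            ext_type, ext_len = struct.unpack_from("!HH", record, pos)
            pos += 4
            if ext_type == 0:            # server_name extension
                # layout: list length (2), name type (1), name length (2), name
                (name_len,) = struct.unpack_from("!H", record, pos + 3)
                return record[pos + 5:pos + 5 + name_len].decode("ascii")
            pos += ext_len
    except (IndexError, struct.error):
        return None                      # truncated or malformed input
    return None


# Hypothetical routing table: SNI host name -> (backend host, port).
ROUTES = {"my-postgres.mydomain.ru": ("my-postgress-service-rw.dbs", 5432)}


def pick_backend(first_bytes: bytes):
    """Choose a backend from the first bytes of a connection, or None."""
    return ROUTES.get(extract_sni(first_bytes))
```

Because the host name travels in clear text before any encryption starts, the router can make this decision without holding the certificate's private key; whether it then terminates TLS or passes the stream through is a separate design choice.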

Kong Proxy

Spoiler: This method did not work for PostgreSQL.

Kong can work with TCP traffic in two ways:

- Based on the port: all traffic that arrives on a given port is redirected to the specified Kubernetes Service. TCP connections are distributed across all running pods.
- Using SNI: Kong accepts the encrypted TLS stream and redirects traffic to a Kubernetes Service based on the SNI value received during the TLS handshake. Note that Kong itself is responsible for establishing the secure connection, and the decrypted TCP stream is what reaches the application.

The official example covers installation and usage in detail, so we omit both in this article.

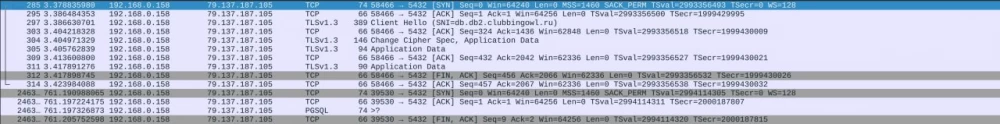

However, we note that we were unable to access the DBMS using only PGAdmin with SSL enabled. If you first set up a secure tunnel through stunel and then connect through it, PGAdmin successfully establishes the connection. To better understand what is happening, it is worth looking at the network dump from Wireshark:

It can be seen that when connecting to a regular application from the example, the ClientHello message is clearly visible at step 3, while when connecting to the DBMS (bottom lines), such a message is not explicitly visible. After studying the forums, it became clear that this is a feature of establishing a secure PostgreSQL connection, namely the problem lies in STARTTLS.
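The difference between the two connection openings can be sketched in a few lines of Python. The SSLRequest layout (a 4-byte length of 8 followed by the request code 80877103) comes from the PostgreSQL wire protocol; the `classify_first_bytes` helper and its labels are illustrative names, not part of Kong or any other proxy.

```python
import struct

# PostgreSQL SSLRequest: int32 message length (8) + int32 request code.
# The code 80877103 is (1234 << 16) | 5679, chosen so that it cannot be
# mistaken for a real protocol version number.
SSL_REQUEST = struct.pack("!II", 8, (1234 << 16) | 5679)


def classify_first_bytes(data: bytes) -> str:
    """Guess what kind of connection opening a proxy is looking at."""
    if data[:1] == b"\x16":
        # TLS handshake record: the ClientHello (with SNI) comes first.
        return "tls-client-hello"
    if data[:8] == SSL_REQUEST:
        # PostgreSQL asks for TLS in plaintext; the ClientHello arrives
        # only after the server side answers with a single byte b"S".
        return "postgres-ssl-request"
    return "unknown"
```

A proxy that wants to route PostgreSQL by SNI therefore has to recognise the second case, answer `S` itself, and only then read the ClientHello.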

Traefik

Finally, we have come to the solution that was ultimately adopted.

Traefik, unlike Kong, managed to route traffic to the DBMS by SNI without stunnel. It was installed in the cluster from its Helm chart with the following values:

    ingressClass:
      enabled: true
      isDefaultClass: false
    additionalArguments:
      - "--entryPoints.postgres.address=:5432/tcp"
    providers:
      kubernetesCRD:
        enabled: true
        allowCrossNamespace: true
    listeners:
      postgres:
        port: 5432
        protocol: HTTP
    ports:
      postgres:
        port: 5432
        expose:
          default: true
        exposedPort: 5432
        protocol: TCP

To open access to a specific Postgres DBMS deployed through the CNPG operator, it is enough to apply the following IngressRouteTCP:

    apiVersion: traefik.io/v1alpha1
    kind: IngressRouteTCP
    metadata:
      name: my-postgress-ingress
      namespace: dbs
    spec:
      entryPoints:
        - postgres
      routes:
        - match: HostSNI(`my-postgres.mydomain.ru`)
          services:
            - name: my-postgress-service-rw
              port: 5432
      tls:
        secretName: tlsSecretName

Note that the name listed under entryPoints is the same as the one defined in the chart's listeners. So, if we need to add MongoDB on another port, we declare it under listeners and ports in the chart values and then reference that name in the entryPoints of the route.

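The tlsSecretName secret contains an ordinary self-signed certificate issued for the specified domain. The name tlsSecretName is a placeholder: a real Secret name must be a lowercase DNS subdomain name, for example my-postgres-tls. The certificate can be generated with the following command: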

    openssl req -new -x509 -keyout cert-key.pem -out cert.pem -days 365 -nodes

Then add it to Kubernetes with kubectl, in the same namespace as the IngressRouteTCP:

    kubectl create secret tls tlsSecretName --cert=./cert.pem --key=./cert-key.pem --namespace dbs
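To check such a route from the client side without a full database driver, a sketch along the following lines may help. It uses only the Python standard library, performs the PostgreSQL SSLRequest exchange and then the TLS handshake with the SNI set to the routed host name. The function names are illustrative. It also assumes that the certificate in cert.pem lists the host name in its subjectAltName, which Python requires for host name verification; a certificate that carries the name only in the Common Name would be rejected.

```python
import socket
import ssl
import struct

# PostgreSQL SSLRequest: int32 length (8) + int32 request code 80877103.
SSL_REQUEST = struct.pack("!II", 8, 80877103)


def starttls_postgres(sock, server_name, cafile):
    """Upgrade an open TCP socket to TLS the way PostgreSQL clients do."""
    sock.sendall(SSL_REQUEST)
    answer = sock.recv(1)
    if answer != b"S":  # b"N" means the server side refuses TLS
        raise ConnectionError(f"TLS was not accepted: {answer!r}")
    # Trust only the given certificate file (assumption: the self-signed
    # cert.pem generated above is available on the client machine).
    ctx = ssl.create_default_context(cafile=cafile)
    # server_hostname is what ends up in the SNI extension.
    return ctx.wrap_socket(sock, server_hostname=server_name)


def peer_certificate_subject(host, port=5432, cafile="cert.pem"):
    """Connect through the proxy and return the presented certificate subject."""
    with socket.create_connection((host, port), timeout=10) as raw:
        with starttls_postgres(raw, host, cafile) as tls:
            return tls.getpeercert()["subject"]


# Example call (requires network access to the exposed entry point):
# peer_certificate_subject("my-postgres.mydomain.ru")
```

If the handshake succeeds and the returned subject belongs to the expected certificate, the SNI route is working; a regular client such as psql or pgAdmin with SSL enabled should then be able to connect in the same way.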
