
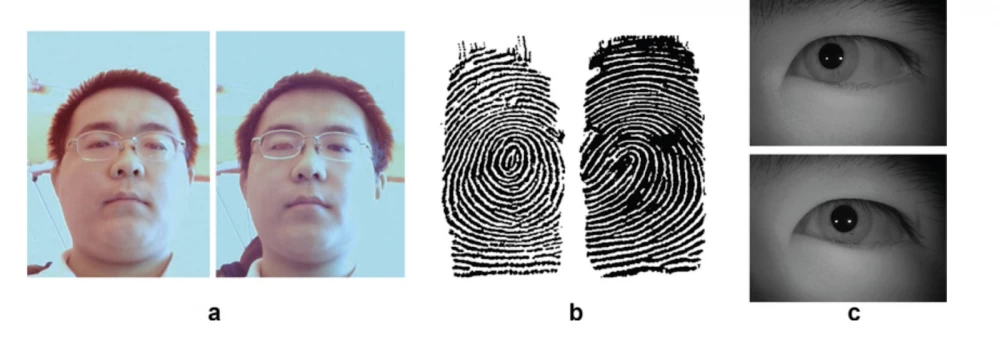

The complexity of biometric identification of monozygotic twins

Imagine a courtroom. The prosecutor proudly presents what he believes to be irrefutable evidence: a fingerprint, a surveillance camera recording, a DNA test. Everything matches perfectly, the guilt is obvious. And then the lawyer says: "Your evidence also matches his twin brother"...

The room falls silent, the judge frowns. Technologies that cost millions to develop turn out not to be reliable enough.

This is not an episode from a crime series, but a reference to real cases where modern systems faced the problem of identical data. For example, in 1985 in the USA, two twin sisters deceived the justice system by shifting the blame onto each other (in the end, it was possible to prove the involvement of each in fraudulent activities and they were both convicted).

Or take the 2009 case from Germany, when twin brothers Hassan and Abbas O. avoided trial for jewelry theft because the police could not prove which of them the recovered fingerprints and DNA belonged to.

-----

Recently, I wrote an article about some scenarios of bypassing biometric systems in cinema, and it was pointed out to me that I did not touch on the topic of identical twins. The topic is indeed interesting.

Physical biometric identification of identical, or monozygotic, twins (there is also behavioral biometrics, but that is a topic for a separate article) is a specific task of biometrics, relevant for systems with a critically high cost of error (for example, in defense). Essentially, the task boils down to one question: can the system distinguish identical twins from each other? There are very few identical twins in the population, so the topic is not often discussed. But each new case stands out because it is an anomaly that exposes the imperfections of existing technologies.

Let's figure out what the difficulty is and what research tells us. First, we will look at the issues in individual types of biometric systems and then, we will put everything together.

Many words about biology, the problem, and research

Biometric identification is based on the principle of uniqueness. We use fingerprints, iris, and face because they seem unique to each person. And in most cases, this is indeed true.

But identical twins are an exception to the rule and a good stress test for any system, because their similarity goes deeper than external features. Their DNA sequences may be identical except for de novo mutations that occur after the embryo splits and rarely affect regions relevant to biometric traits. This means that the difference between twins lies not in macro-level features, such as the shape of the nose or the structure of the iris, but in microscopic details that are often hidden even from the most advanced algorithms.

Theory of twins: genetics and real identity

Twins are different. Broadly speaking, they are usually classified as:

Dizygotic (fraternal) twins.

Their genetic similarity is no higher than that of ordinary siblings. From a biometric point of view, this is a typical case that does not cause serious problems.

Monozygotic (identical) twins.

They occur in 3–4 births out of 1000, when one fertilized egg divides and creates two (or more) embryos with an identical genetic code. But even here there is variability: during growth and development, epigenetic differences may appear that make the twins not quite the same. These differences affect phenotypic manifestations (for example, tissue structure) rather than biometric parameters such as fingerprints or iris texture directly. Such changes can begin at the early stages of embryonic development.

Modern methods, such as SNP genotyping, can distinguish identical twins at the molecular level, but they do not yet play a significant role in biometric systems. A 2021 study also reports that only about 15% of monozygotic twins are identical in terms of their genome. This matters because it is traditionally believed that all or almost all identical twins are genetically identical or nearly so.

Empirical biometric studies of identical twins generally do not verify the genetic identity of their participants. I cannot speak for every study in the world, but I have not come across one that does, and DNA testing every participant is clearly not cheap. This means that we do not know how many truly identical twins took part in the research samples, nor how closely the participants' genomes matched.

I emphasize that the degree of genetic identity can affect physiological parameters such as skin texture, iris structure, or fingerprint features. Without precise knowledge of the genetic identity of the twins participating in the study, it is difficult to determine which of the identified differences are due to random developmental factors and which are due to minimal genetic variations, including de novo mutations. So, this can introduce errors in the interpretation of data on the accuracy of biometric systems.

Why do technologies face challenges?

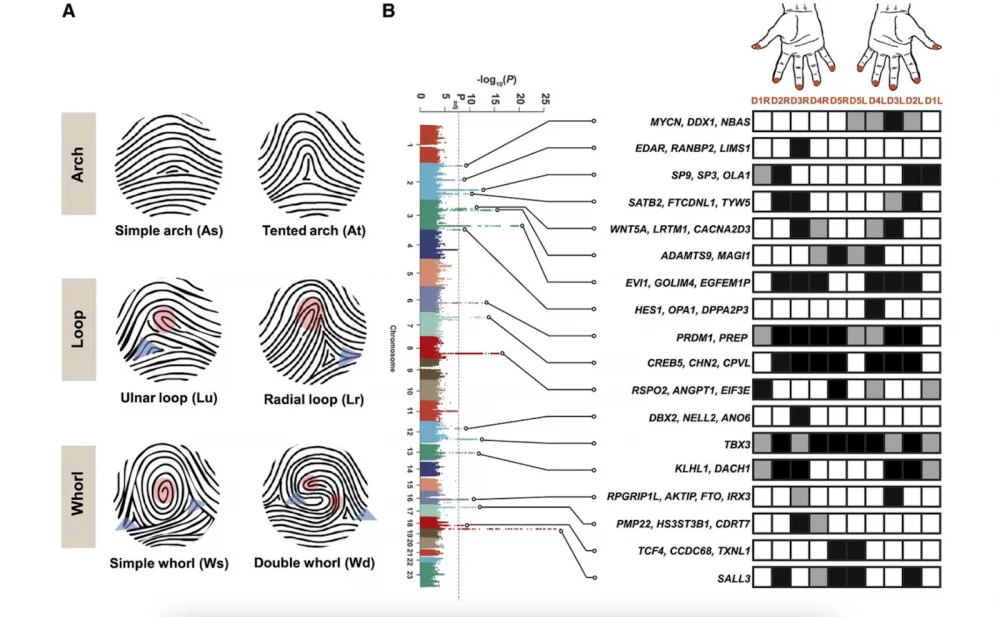

Distinguishing identical twins can be difficult not only because their appearance is similar. For example, the pattern of fingerprints is formed at 10–16 weeks of intrauterine development and depends on both genetics and random factors: the pressure of amniotic fluid, temperature, and angles of contact with the uterine wall. As a result, large patterns (arches, loops, whorls) in twins may coincide, but micro-details—pores, line endings—differ.

For systems working with large features, such differences become invisible. And if you add dirty sensors, poor lighting, or imperfect finger positioning, even state-of-the-art algorithms start to make mistakes. So, identical twins with microscopic differences are at the limit of the resolving power of modern algorithms.

Fingerprints: from genetic code to microscopic differences

The fingerprint pattern is laid down at 10–16 weeks of intrauterine development when primary ridges begin to appear on the pads of the fingers. This process is driven by the interaction of genetics and microscopic randomness. Studies have shown that three key signaling pathways — WNT, BMP, and EDAR — control the formation of ridges:

WNT stimulates cell growth, forming ridges.

BMP suppresses growth, creating grooves.

EDAR regulates the shape and size of ridges.

These signaling pathways work like a complex system of "on" and "off" switches, where the slightest fluctuations in their activity lead to unique patterns. For example, ridges begin to form at three points on the finger pad: in the center, at the nail bed, and at the joint fold. Their growth is directed by waves interacting with the anatomy of the finger. The larger the pad and the earlier the ridges begin to form, the more likely the pattern will be a whorl. A later start often leads to the formation of arches.

Genetic signals WNT, BMP, and EDAR play a key role, but they act in conjunction with other molecular pathways, such as FGF and SHH, which regulate cell migration and proliferation. Modern scientific work does not yet provide a complete understanding of the mechanism, as the interaction between these pathways remains the subject of active research, and the influence of the intrauterine environment on the final fingerprint pattern is not yet fully understood.

In identical twins, genetics sets the major patterns, but micro-details — minutiae, pores, distances between lines — are formed under the influence of random factors. Studies, such as the work of scientists from Fudan University, have shown that even small differences in the timing of signal pathway activation or the shape of the pad lead to unique patterns. For example, if the ridges of one twin begin to form a day later, their patterns will already be different.

An interesting aside is the connection between ridges and hair follicles. It turns out that the same WNT, BMP, and EDAR pathways that control the formation of fingerprints are also responsible for hair development. On the fingers, this process stops at an early stage, and ridges are formed instead of follicles.

How are fingerprints recognized?

Modern recognition technologies rely on extracting key points: minutiae (line endings and bifurcations) and, at sufficiently high resolution, pores. The accuracy of such systems depends on the quality of sensors and algorithms. To capture these details, precise sensors are needed:

Optical sensors capture a 2D image.

Capacitive sensors measure electrical capacitance to determine the skin relief, but in real systems, they are vulnerable to dry skin, heavy contamination, or the presence of oil.

Ultrasonic sensors create a three-dimensional map of the fingerprint by analyzing the depth and texture of the lines. They are used, for example, in Samsung Galaxy smartphones.
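To make the idea of minutiae more concrete, here is a minimal sketch of the classic crossing-number method, which finds line endings and bifurcations on a ridge image that has already been binarized and thinned to one-pixel-wide lines. The function name and the toy Y-shaped "ridge" are my own illustration and do not come from any particular product; real systems add image enhancement, quality checks, and filtering of false minutiae.

```python
import numpy as np


def crossing_number_minutiae(skeleton):
    """Find minutiae on a thinned ridge image (1 = ridge pixel, 0 = background).

    Returns two lists of (row, col) tuples: line endings and bifurcations.
    """
    sk = (np.asarray(skeleton) > 0).astype(int)
    # The 8 neighbours in circular order, starting at the top-left pixel.
    ring_offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
                    (1, 1), (1, 0), (1, -1), (0, -1)]
    endings, bifurcations = [], []
    rows, cols = sk.shape
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            if sk[r, c] == 0:
                continue
            ring = [sk[r + dr, c + dc] for dr, dc in ring_offsets]
            # Crossing number: half the count of 0/1 transitions around the pixel.
            transitions = sum(abs(ring[i] - ring[(i + 1) % 8]) for i in range(8))
            cn = transitions // 2
            if cn == 1:
                endings.append((r, c))        # the ridge stops here
            elif cn == 3:
                bifurcations.append((r, c))   # the ridge splits in two here
    return endings, bifurcations


# Toy example (illustrative data): a Y-shaped ridge on a 7x7 grid.
toy = np.zeros((7, 7), dtype=int)
for r, c in [(1, 1), (2, 2), (1, 5), (2, 4), (3, 3), (4, 3), (5, 3)]:
    toy[r, c] = 1

toy_endings, toy_bifurcations = crossing_number_minutiae(toy)
print("endings:", toy_endings)
print("bifurcations:", toy_bifurcations)
```

On this toy skeleton the function reports three endings (the tips of the Y) and one bifurcation at the fork. A matcher then compares the positions and orientations of such points between two prints, which is exactly where the micro-level differences between twins show up.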

Problems with twins and error statistics

At the beginning of the article, I mentioned a high-profile case in Germany, where the fingerprints of twin brothers became a dead end for the investigation. A glove was found at the crime scene. Based on the fingerprints and DNA inside the glove, the police identified a suspect who had a monozygotic twin brother. Both - Hassan and Abbas O. - were arrested. However, experts could not determine to whom the glove belonged, and the brothers were released without charges or trial. By the way, it was a theft of jewelry worth 6 million euros.

Statistically, the probability of matching large patterns in monozygotic twins reaches 74%, which is much higher than that of non-identical relatives (32%). This creates significant difficulties in forensics and other fields.

Facial Recognition: Behind the Scenes of Biometric Technologies

Facial recognition is based on finding and matching unique features. The camera captures an image, after which the system extracts key features: the distance between the eyes, the shape of the nose, the contours of the lips, the structure of the jaw. These parameters are converted into a digital template (an embedding), which is then compared with the database. Modern models, such as FaceNet and ResNet-based networks, use deep neural networks trained to pick up both visible and subtle features, such as skin texture or light reflections. The embeddings are compared using metrics such as cosine distance, which allows faces to be told apart effectively.
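As a rough illustration of the comparison step, the sketch below computes the cosine similarity between two embedding vectors and applies a decision threshold. The four-dimensional vectors and the threshold of 0.6 are assumptions made up for this example; real embeddings typically have hundreds of dimensions, and the threshold is tuned on validation data for each model.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def same_person(a, b, threshold=0.6):
    """Accept the pair as one identity if the similarity reaches the threshold.

    The default threshold is a hypothetical value chosen for illustration only.
    """
    return cosine_similarity(a, b) >= threshold


# Made-up embeddings: two photos of one person and one photo of a stranger.
photo_1 = [0.9, 0.1, 0.3, 0.2]
photo_2 = [0.8, 0.2, 0.35, 0.15]
stranger = [0.1, 0.9, -0.2, 0.4]

print(same_person(photo_1, photo_2), same_person(photo_1, stranger))
```

Cosine distance is simply one minus this similarity. For identical twins the similarity of the two different people can sit very close to the threshold, which is why the choice of threshold matters so much in what follows.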

Large datasets, such as VGGFace2 or CASIA-WebFace, containing hundreds of thousands to millions of facial images with various angles, expressions, and shooting conditions, are used to train the systems. For example, ArcFace and CosFace apply margin-based loss functions, which minimize data scatter within one category (e.g., photos of one person) and increase differences between categories. This makes them some of the most accurate algorithms today.
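To show what "minimizing scatter within a category" looks like in practice, here is a simplified NumPy sketch of the additive angular margin used by ArcFace: the angle between an embedding and the center of its own class is penalized by a margin before the usual softmax cross-entropy. The function names are mine, the scale of 64 and margin of 0.5 are the values usually quoted for ArcFace, and the sketch skips the special handling real implementations apply when the angle plus the margin exceeds π.

```python
import numpy as np


def arcface_logits(embeddings, class_weights, labels, scale=64.0, margin=0.5):
    """Scaled cosine logits with an angular margin added for the true class."""
    x = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    w = class_weights / np.linalg.norm(class_weights, axis=1, keepdims=True)
    cos = np.clip(x @ w.T, -1.0, 1.0)          # cosine to every class center
    rows = np.arange(len(labels))
    theta_true = np.arccos(cos[rows, labels])  # angle to the correct class
    logits = cos.copy()
    # Making the true class "harder" forces tighter clusters during training.
    logits[rows, labels] = np.cos(theta_true + margin)
    return scale * logits


def softmax_cross_entropy(logits, labels):
    """Mean cross-entropy of softmax(logits) against integer labels."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())


# Illustrative data: 4 random embeddings, 3 identities.
rng = np.random.default_rng(0)
emb = rng.normal(size=(4, 8))
centers = rng.normal(size=(3, 8))
y = np.array([0, 1, 2, 0])

loss_with_margin = softmax_cross_entropy(arcface_logits(emb, centers, y), y)
loss_plain = softmax_cross_entropy(arcface_logits(emb, centers, y, margin=0.0), y)
print(loss_with_margin, loss_plain)
```

With the margin switched on, the same embeddings produce a loss that is at least as high as without it, so the network has to pull photos of one person closer together to compensate. CosFace follows the same idea but subtracts the margin from the cosine itself rather than adding it to the angle.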

Modern approaches include 3D scanning technologies that create three-dimensional models of the face to withstand changes in tilt angle or lighting. Apple's Face ID is an example of the successful application of this technology in commercial devices. Thermograms, which capture the thermal patterns of the face, increase the uniqueness of the data, but due to their high cost, they are only used in specialized systems. Dynamic recognition, which analyzes micro-movements such as blinking or smiling, protects against attacks using static images.

Innovations such as generative networks (GANs) help synthesize additional training data, improving algorithm accuracy. However, GANs can also be used to create deepfakes, which poses a security threat. Multimodal approaches, combining facial data with voice or manner of movement, are used in tasks where errors are unacceptable.

Twins: a stress test for technology

Monozygotic twins, whose faces have almost identical geometry, challenge any algorithms. Modern systems can work based on geometric and texture features, such as the distance between the eyes, nose shape, cheekbone structure. In twins, these parameters can be so similar that distinguishing them becomes extremely difficult. However, modern algorithms are trained on special datasets (e.g., Twins Days) to minimize errors in such cases.

A well-known case of the Zhou sisters from China: they used each other's passports for several years, visiting more than 30 countries, including China, Japan, Brazil, Russia (countries believed to have some of the most sophisticated airport security systems), until Chinese authorities exposed their scam in 2022. How exactly the authorities uncovered their scheme remains unknown.

Research conducted on the Twins Days dataset from the University of Notre Dame showed that under ideal conditions (neutral expression, studio lighting), algorithms can distinguish twins with minimal error. One of the systems achieved an EER (Equal Error Rate) of only 0.01 (in laboratory conditions).
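For readers who have not met the metric before: the EER is the error level at the threshold where the share of impostors accepted (FAR) equals the share of genuine users rejected (FRR). The sketch below estimates it from two lists of similarity scores. The scores are invented for illustration, and the simple threshold sweep is my own minimal version; evaluation toolkits usually interpolate between thresholds.

```python
import numpy as np


def equal_error_rate(genuine_scores, impostor_scores):
    """Estimate the EER and the threshold at which FAR and FRR are closest.

    Higher score means "more similar". Returns (eer, threshold).
    """
    genuine = np.asarray(genuine_scores, dtype=float)
    impostor = np.asarray(impostor_scores, dtype=float)
    best_gap, eer, best_threshold = np.inf, 1.0, None
    for t in np.unique(np.concatenate([genuine, impostor])):
        far = np.mean(impostor >= t)  # impostor pairs wrongly accepted
        frr = np.mean(genuine < t)    # genuine pairs wrongly rejected
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, eer, best_threshold = gap, (far + frr) / 2, t
    return float(eer), float(best_threshold)


# Invented scores: same-person pairs vs. twin-sibling pairs.
same_person_pairs = [0.9, 0.8, 0.4, 0.7]
twin_pairs = [0.1, 0.2, 0.5, 0.3]
print(equal_error_rate(same_person_pairs, twin_pairs))
```

In a twin study the "impostor" scores come from comparing one twin with the other, so they are much closer to the genuine scores than for unrelated people, and the EER rises accordingly.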

But add non-ideal conditions — such as facial expressions, glasses, or poor lighting — and accuracy drops sharply. Even state-of-the-art algorithms like ArcFace or CosFace start to make mistakes.

Moreover, if the images are taken several years apart, the probability of identification error in a pair of monozygotic twins increases. This is due to small changes in the face, such as wrinkles or asymmetry, which can confuse algorithms trained on templates without accounting for age-related changes in twin pairs. In one experiment, systems were able to distinguish twins in only 80% of cases, with accuracy dropping to 50% if the template image had been taken more than a year earlier.

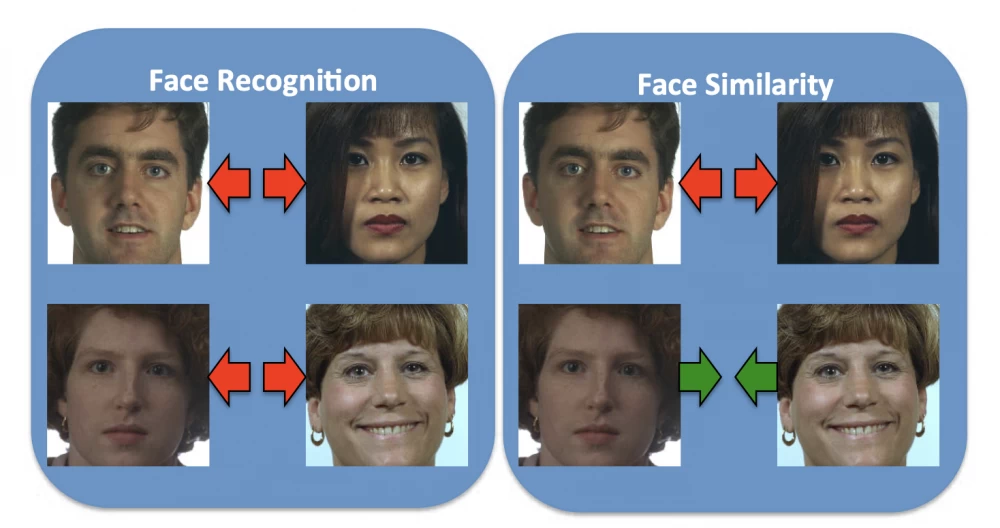

Concept of Similarity and Look-alikes

Face recognition and face similarity are not the same task. Recognition, in the identification setting, matches a face against stored templates, whereas similarity estimates how alike two faces look.

For example, the network trained by Sadovnik et al. was specifically designed to assess visual similarity. It analyzed pairs of faces where similarity was assessed by humans and achieved an AUC of 0.9799. This is an impressive result, but the same network was less effective for identification tasks, highlighting the difference between these two tasks.

In practice, similarity can help filter out complex cases. For example, if two individuals have a high degree of similarity, the system can pass this data for deeper analysis instead of accepting them as one person.

Unlike twins, doppelgängers are individuals who have a high degree of similarity but are not genetically related. As databases grow, the likelihood of encountering a doppelgänger increases. For example, in large systems with millions of records, such as government security systems or commercial applications, the likelihood of false matches rises sharply with the number of records. Working with doppelgängers is relevant for systems where accuracy requirements are less critical, unlike security tasks.

One study showed that at a false acceptance rate (FAR) of 1%, up to 99.99% of subjects in large databases demonstrated false matches for certain pairs of doppelgängers. The larger the database, the higher the likelihood that two individuals will be so similar that the system will accept them as one person. Even advanced systems demonstrate a high level of errors when working with doppelgängers.
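The effect of database size can be estimated with simple arithmetic. If a single comparison produces a false match with probability p, and we assume (as a simplification) that comparisons are independent, then a search against N records returns at least one false match with probability 1 − (1 − p)^N. The function name and the example numbers below are mine and are not taken from the study mentioned above.

```python
import math


def prob_any_false_match(false_match_rate, gallery_size):
    """Probability of at least one false match in a 1:N search.

    Assumes independent comparisons, each with the same false match rate
    (strictly between 0 and 1). Uses log1p/expm1 to stay accurate when the
    rate is tiny and the gallery is huge.
    """
    return -math.expm1(gallery_size * math.log1p(-false_match_rate))


# Illustrative numbers: one false match per million comparisons.
for n in (1_000, 1_000_000, 1_000_000_000):
    print(n, prob_any_false_match(1e-6, n))
```

Even a per-comparison error rate that looks negligible turns into a near-certain false match once the gallery reaches hundreds of millions of records, which is why large national systems cannot rely on a single face comparison.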

Irises: looking into the eyes of identity

The iris of the eye has long been considered one of the most reliable biometric tools. Its unique texture is formed at the early stages of fetal development and remains virtually unchanged throughout life. Unlike fingerprints, which can wear out, or the face, which is subject to age-related changes, the structure of the iris is stable.

How is the iris formed?

The iris is formed in the first six months of intrauterine development and takes its final structure by 8 months of pregnancy. Its structure is the result of a complex interaction of genetic and random factors. Genetics determine the basic parameters: color, pigmentation density, initial geometry of crypts and vessels. However, the final pattern of the iris is created under the influence of random processes, such as the rate of cell division, features of vascular growth, and pigment distribution.

Microanatomical structures (MAS) such as crypts, radial furrows, and the vascular network are formed on the surface of the iris. They are arranged chaotically, their spatial distribution varies even between the two eyes of one person. In identical twins, MAS demonstrate macroscopic similarity, but microscopic differences remain, creating a technical challenge for recognition systems.

Iris Recognition Technology

Modern iris recognition systems use near-infrared imaging to capture the texture of the iris. Why infrared? It reveals details that are hard to see under normal lighting, such as the vascular network and crypts. The camera captures a high-resolution image (usually 640×480 pixels or higher), after which the data is passed to the analysis system.

The classic recognition process includes several stages:

Iris Localization. Algorithms isolate the iris area, excluding the pupil and sclera. The Hough transform is often used to detect the circular boundaries.

Normalization. The iris is mapped into a polar coordinate system to eliminate distortions caused by different viewing angles or pupil dilation.

Feature Extraction. Gabor filters are applied to highlight the iris's textural features, such as frequency, orientation, and texture amplitude. This data is converted into a binary code—a unique "fingerprint" of the iris.

Template Matching. The iris code is compared with the templates stored in the database. The main metric for assessing similarity is the Hamming distance, which measures the share of bits that differ between two binary codes. Modern algorithms can additionally use convolutional networks to improve accuracy.
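The last step of this pipeline is easy to sketch. Below, two binary iris codes are compared with a fractional Hamming distance that ignores bits masked out by eyelids or reflections, and the comparison is repeated over a few circular shifts along the angular axis to compensate for head tilt. The code shape (8 × 256 bits), the shift range, and the random "codes" are assumptions for this illustration, not the parameters of any commercial system.

```python
import numpy as np


def hamming_distance(code_a, code_b, mask_a=None, mask_b=None):
    """Fraction of differing bits, counted only where both masks are valid."""
    a = np.asarray(code_a, dtype=bool)
    b = np.asarray(code_b, dtype=bool)
    valid = np.ones(a.shape, dtype=bool)
    if mask_a is not None:
        valid &= np.asarray(mask_a, dtype=bool)
    if mask_b is not None:
        valid &= np.asarray(mask_b, dtype=bool)
    n_valid = int(valid.sum())
    if n_valid == 0:
        raise ValueError("no valid bits to compare")
    return float(((a ^ b) & valid).sum() / n_valid)


def best_hamming_distance(code_a, code_b, max_shift=8):
    """Minimum distance over circular shifts along the angular axis (axis 1)."""
    return min(
        hamming_distance(code_a, np.roll(code_b, shift, axis=1))
        for shift in range(-max_shift, max_shift + 1)
    )


# Illustrative data: random bits stand in for real iris codes.
rng = np.random.default_rng(42)
enrolled = rng.integers(0, 2, size=(8, 256)).astype(bool)
same_eye_rotated = np.roll(enrolled, 3, axis=1)   # same iris, slightly rotated
other_eye = rng.integers(0, 2, size=(8, 256)).astype(bool)

print(best_hamming_distance(enrolled, same_eye_rotated))
print(best_hamming_distance(enrolled, other_eye))
```

Codes from the same eye give a distance close to 0, while statistically independent codes land near 0.5. The question for twins is how far below 0.5 their distances fall and whether that gap survives imperfect image quality.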

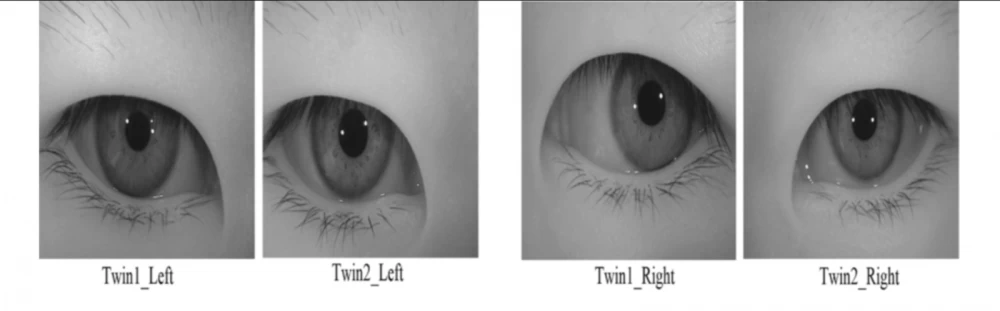

Problems with Twins

The main difficulty lies in the similarity of twins' irises at the macro level. If their genetic program is identical and intrauterine conditions are similar, this can lead to the same color palette, vessel density, and basic geometric features of crypts.

Commercial systems based, for example, on Daugman's widely used IrisCode algorithm rely on many textural features. However, if the image resolution is not high enough or the shooting conditions are far from ideal, small differences may be lost.

Experiments show that the accuracy of twin recognition is reduced by 20–30% compared to the general population. For example, when analyzing 50 pairs of identical twins, standard algorithms achieved 80% accuracy, but for the remaining 20%, additional methods such as deep neural networks were required.

In addition, even small changes in viewing angle, pupil dilation, or lighting can significantly affect the results.

Examples of difficulties in real-world conditions

In the Aadhaar system (India), which serves more than a billion people, there have been cases where twins registered simultaneously, causing false matches. The problems arose due to algorithm imperfections rather than fundamental insufficiency of iris data and were resolved by introducing additional analysis parameters, including pupil dynamics. The system itself also uses a combination of data (face, fingerprints, iris).

In conclusion

For systems with high security requirements, such as banking systems or access control for secure facilities, accurate identification matters even in the case of monozygotic twins. Although this case is rare, solving it contributes to the reliability of such systems as a whole. Combining several biometric modalities and using precise equipment reduces the likelihood of errors, both in general and in the task of recognizing identical twins.
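One simple way to combine modalities is score-level fusion: each subsystem returns a similarity score, and a weighted average decides the outcome. The sketch below is only an illustration of the idea; the weights, the scores, and the 0.7 threshold are invented, and it assumes every score has already been normalized to the range from 0 to 1.

```python
def fuse_scores(scores, weights):
    """Weighted average of per-modality similarity scores (each in [0, 1])."""
    total_weight = sum(weights[name] for name in scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(scores[name] * weights[name] for name in scores) / total_weight


# Hypothetical attempt by a twin: the face matches well, the rest does not.
twin_attempt = {"face": 0.93, "fingerprint": 0.41, "iris": 0.22}
# Hypothetical weights that trust iris and fingerprint more than the face.
weights = {"face": 1.0, "fingerprint": 1.5, "iris": 2.0}

fused = fuse_scores(twin_attempt, weights)
print(fused, "accept" if fused >= 0.7 else "reject")
```

In this made-up example the face alone would have let the twin through, but the fused score stays well below the threshold because the modalities that differ between twins carry more weight.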

Currently, for example, VisionLabs reports that their biometric identification system successfully distinguishes even twins, and TBS Biometrics tested their biometric equipment on identical twins, demonstrating the ability to distinguish them.

Further research in this area should help create more reliable and versatile identification methods capable of handling a wide range of biometric data variations.

Thank you for reading to the end! I hope you learned something new or interesting!
