Five things you shouldn’t tell ChatGPT

Don’t let your thoughts become training data for AI — or surface in the event of a data leak.

No matter which chatbot you choose, the more you share, the more useful it becomes. Patients upload lab results for interpretation; developers send in code snippets for debugging. However, some AI researchers advise caution about what we disclose to these human-sounding tools. It’s especially risky to share information such as passport data or corporate secrets.

Disclaimer: this is a loose translation of a column by Nicole Nguyen from The Wall Street Journal, prepared by the Technocratia editorial team. To get updates on new materials, subscribe to “Voice of Technocratia”: we regularly cover news about AI, LLMs, and RAG, and share must-reads and current events.

AI companies are hungry for data to improve their models, but even they don’t want to know your secrets. “Please don’t share confidential information in your conversations,” warns OpenAI. And Google addresses Gemini users with a request: “Don’t enter confidential data or information you wouldn’t want reviewed.”

Conversations about strange rashes or financial fumbles can be used to train future AIs — or leak in the event of a hack, experts say. Here’s what not to include in your queries — and how to keep your dialogue with AI more private.

Keep your secrets

Chatbots can sound scarily human, which makes people overly candid. But as Jennifer King of the Stanford Institute for Human-Centered Artificial Intelligence says: “Once you’ve written something to a bot, you no longer control it.”

In March 2023, a ChatGPT bug let some users see other users’ messages. The issue has since been fixed. OpenAI also mistakenly sent subscription confirmation emails to the wrong people, exposing subscribers’ names, email addresses, and payment information.

Your conversation history could end up in a stolen database or handed over by court order. Use strong passwords and two-factor authentication. And avoid mentioning the following in chats with bots:

• Personal data. This includes passport details, driver’s license numbers, as well as dates of birth, addresses, and phone numbers. Some chatbots try to mask this. “We want our models to learn about the world, not about private individuals, and we actively minimize personal data collection,” an OpenAI spokesperson said.

• Medical data. If you want an AI to interpret a test, crop the image or edit the document: “Leave only the results and remove any personal details.”

• Financial information. Protect your bank and investment account numbers — someone could use them to access your funds.

• Corporate secrets. Using a regular version of a chatbot at work puts you at risk of disclosing client data or information under NDA—even when writing a routine email. Samsung banned ChatGPT after an engineer uploaded source code. If AI is useful in your work, the company should use an enterprise version with data protection.

• Logins. As AI agents get better at doing real tasks, the temptation to share passwords rises. But such services aren't meant for password storage—use password managers for that.
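
For readers who paste text into chatbots regularly, the list above can be partly automated. Below is a minimal sketch, not a complete solution: the `redact` function and its three patterns are hypothetical illustrations that catch only simple cases (email addresses, numeric dates, and long digit runs such as phone or account numbers). Names, street addresses, and corporate secrets will pass through untouched, so always reread the result before sending it.

```python
import re

# Hypothetical patterns for illustration only; real identifiers vary
# by country and format, and these will miss many of them.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # Numeric dates such as 04/12/1988 or 4.12.88
    "DATE": re.compile(r"\b\d{1,2}[/.-]\d{1,2}[/.-]\d{2,4}\b"),
    # Runs of 8 to 19 digits, optionally separated by spaces or hyphens
    # (phone numbers, card numbers, account numbers)
    "NUMBER": re.compile(r"\b(?:\d[ -]?){7,18}\d\b"),
}


def redact(text: str) -> str:
    """Replace each match of the patterns above with a [LABEL] placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Email jane@example.com, born 04/12/1988, account 1234 5678 9012 3456."
    print(redact(prompt))
```

Short values such as lab results (“Hemoglobin 13.5 g/dL”) are left alone by these patterns, so the medical content you actually want interpreted stays intact while the identifiers around it are masked.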

Cover your tracks

When you rate AI responses—thumbs up or down—you might be agreeing to let your conversation be used for training. If a conversation is flagged as potentially risky (for example, if violence is mentioned), employees might review it.

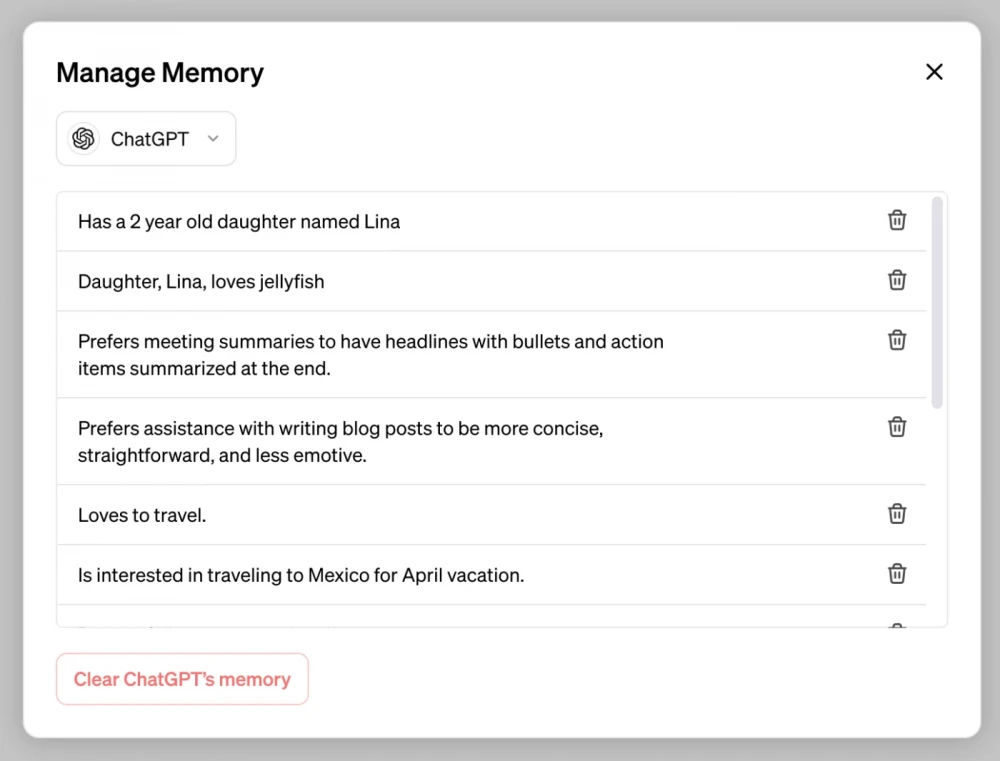

Anthropic (Claude) doesn't use your chats for training by default and deletes data after two years. ChatGPT by OpenAI, Copilot by Microsoft, and Gemini by Google do use chats, but offer opt-out in their settings. If privacy matters to you, try these tips:

Delete your conversations. Especially cautious users should delete each chat right after finishing, says Jason Clinton, Chief Information Security Officer at Anthropic. Companies usually purge “deleted” data for good after 30 days.

Exception — DeepSeek: its servers are in China, and according to its privacy policy, it may store your data indefinitely. There’s no way to opt out.

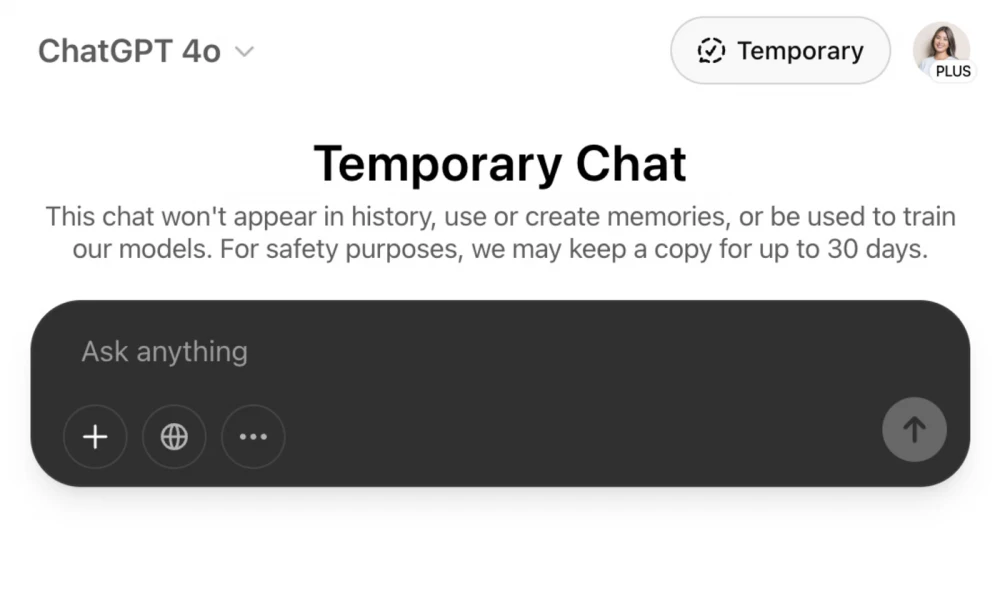

Enable temporary chat. ChatGPT has a Temporary Chat mode, like “Incognito” in browsers. Activate it so your conversation isn’t saved in your history or used for model training.

Ask questions anonymously. The service Duck.ai from DuckDuckGo anonymizes queries sent to Claude, GPT, and others, promising they won't be used for training. However, its functionality is limited—you can't upload files, for example.

Just keep in mind: chatbots are designed to keep the conversation going. It’s up to you to step away, or to hit “delete”.
