"Signal" — sci-fi story

The morning of October second burst into Inga's apartment with an unexpected ringtone. The smartphone screen lit up just as Inga brought the mug of tea to her lips — the sharp sound made her hands tremble, and tea splashed onto the table.

— Damn. — Inga made sure the tea hadn't spilled on the keyboard. The screen displayed her friend's name: Katya. Inga swiped across it to answer the call, switched it to speakerphone, but didn't have time to say anything.

— Inga, your damn “Signal” sent some obituary letters to my kids yesterday, saying I went missing! — Katya's voice almost cracked into a squeal. — I'm here in Turkey, relaxing peacefully, just woke up, and they're calling me in tears, thinking that sharks ate me or something worse happened!

Inga set the mug aside, quickly unlocked her computer, and opened the system control panel. Katya's angry tirade blared from the speaker, but right now Inga needed cold facts, and the logs would help more than an emotional, disjointed story.

The picture that unfolded was crystal clear: Katya had not responded to two automated verification messages, then the Guardians failed to do their job, and next, the AI built into the system, linking several facts together, identified the situation as potentially dangerous and sent letters to her relatives.

— Katya, I'm sorry, I'll sort everything out, — Inga replied when the flow of indignation paused for a couple of seconds. — Calm the kids down, that's the most important thing right now. It’s just some glitch in the system.

— Yeah, well, figure it out, girlfriend. — Katya snorted sarcastically and, without saying goodbye, hung up.

Inga spent a few more minutes studying the event history in the system, noting details of what happened, before leaning back in her chair, closing her eyes, and quietly groaning. The Guardians had failed, the algorithm didn't work the way she had intended, and her rules, which were supposed to keep the AI within certain boundaries, had cracked. And something had to be done about all of this.

***

“Signal” had been born a few years earlier out of Inga's personal pain. In 2028, her father suddenly passed away. He was in his small workshop in the garage, where he liked to work with wood, when he had a heart attack. There was no one around to help him. He was only sixty-seven.

At that time, Inga lived in Moscow, fully immersed in her work in the IT department of a large industrial holding. A call from her mother caught her during a corporate meeting. Even before answering, Inga realized something had happened. Her parents usually didn’t call during working hours for no reason. She remembered stepping out of the meeting room, answering the call, and sliding down the wall to the floor a few seconds later.

A ticket for the nearest flight, quick packing, a taxi, an hour and a half on the plane, another taxi — and here Inga stood in front of a familiar door.

During all the days Inga spent by her mother’s side, the apartment sank into silence. Her mother now constantly sat in the chair, clutching an old sweater of her father’s as if she wanted to keep him close by holding on to it. Inga tried her best to distract her, to switch her attention to some everyday trifles, but it only worked occasionally.

On the table lay her father’s laptop: worn, thoroughly scuffed, with glue marks on the case. Her father had never let Inga give him a new one. He’d say: “Why spend money when this one still works?”

The hard drive held everything her father had so carefully collected: family photos from the last decades, videos from family celebrations, documents, letters. But the encryption he had set up long ago “for security” now reliably protected the disk not only from prying eyes but also from Inga and her mother. Without the password, known only to her father, it was now an impenetrable safe.

Inga took the laptop back to Moscow. She spent many hours trying to figure out the password. Birthdates of all possible relatives, their names, childhood pet names, her father’s favorite songs and their performers — Inga tried everything she could think of. But with each failed attempt, she felt as if she was losing a piece of her father.

After another almost sleepless night, when the dawn was already coloring the sky over Moscow, she realized: no one should ever have to say goodbye this way — through a computer’s lock screen with a password input field. Then Inga had the idea for a new service. Something like an electronic will website for passing on all the digital “wealth” we manage to accumulate over a lifetime. And maybe not just for this. That way, no voice or trace of an important person would ever be lost in the noise of time again.

On the same day, she began writing code that later became "Signal".

***

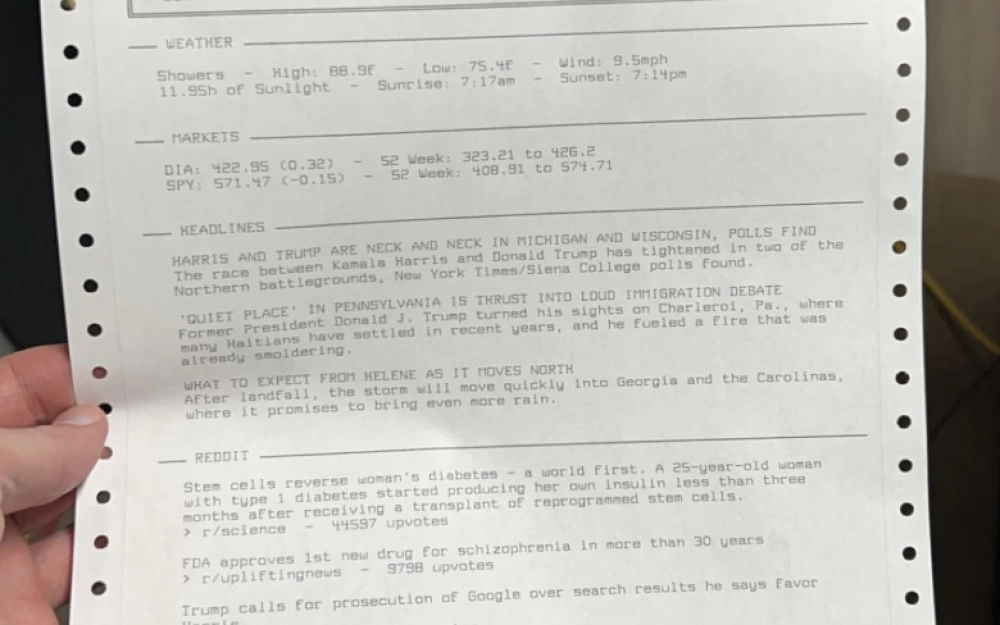

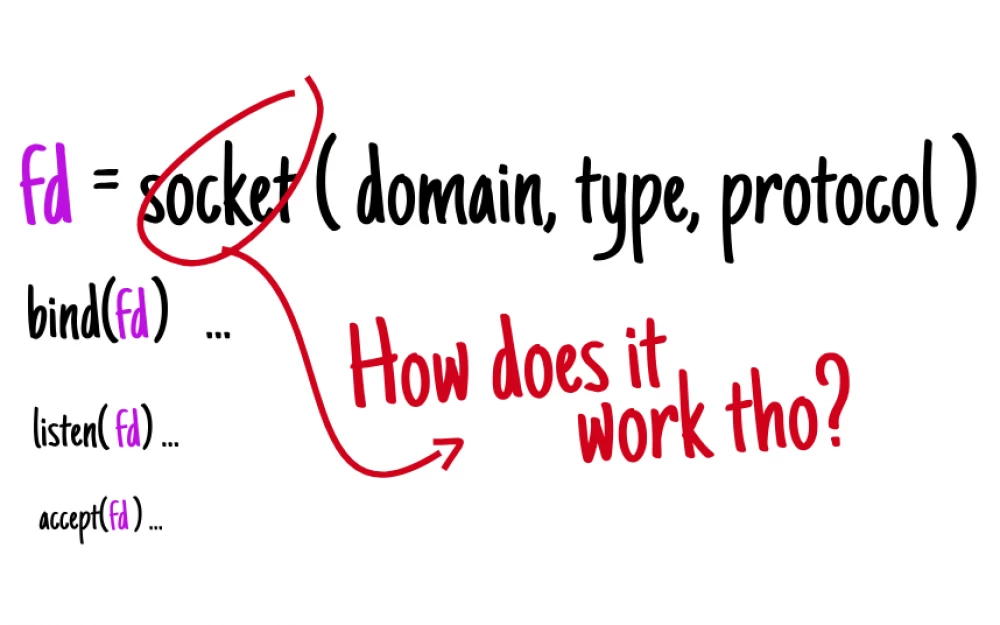

The first version was very primitive: a personal user account and a handful of configurable rules. "Signal" sent periodic requests to make sure the user was reachable and all right. To respond, the user only needed to press one button. If several requests went unanswered, the system moved on to the next step and sent similar messages to the people the user had designated as their "Guardians". Depending on the Guardians' responses, the system either learned that the user was fine and resumed its regular check-ins, or began sending the user’s “last letters” to the designated recipients.

But the deeper Inga got into the project, the clearer it became to her that this simple functionality was not enough.

One winter evening, Sasha — a good friend who also worked in IT — dropped by. Sasha was one of the beta testers of "Signal" and occasionally gave Inga ideas for new features. That evening, over a bottle of wine and conversations about everything under the sun, he said to Inga the very words that later changed "Signal".

— Without AI, your service looks like a dinosaur, In. Listen, hook up a neural network at least for some indirect function, just so it’s there.

— No AI needed there! — Inga protested. — It will only complicate things, bring uncertainty. My system has clear code with understandable algorithms — I want it to stay that way.

— But now neural networks are the trendiest direction! This will help you attract users and quickly get to investors.

Still, the marketing argument planted a seed of doubt in Inga.

— You’ll figure out later during the process how to use it in the system and whether it makes sense at all. — Sasha did not relent, and she promised to think about it.

***

When in 2029 Inga opened public access to the system, "Signal" already had basic AI functions. The neural network checked the delivery of messages from the system to users and generated email templates.

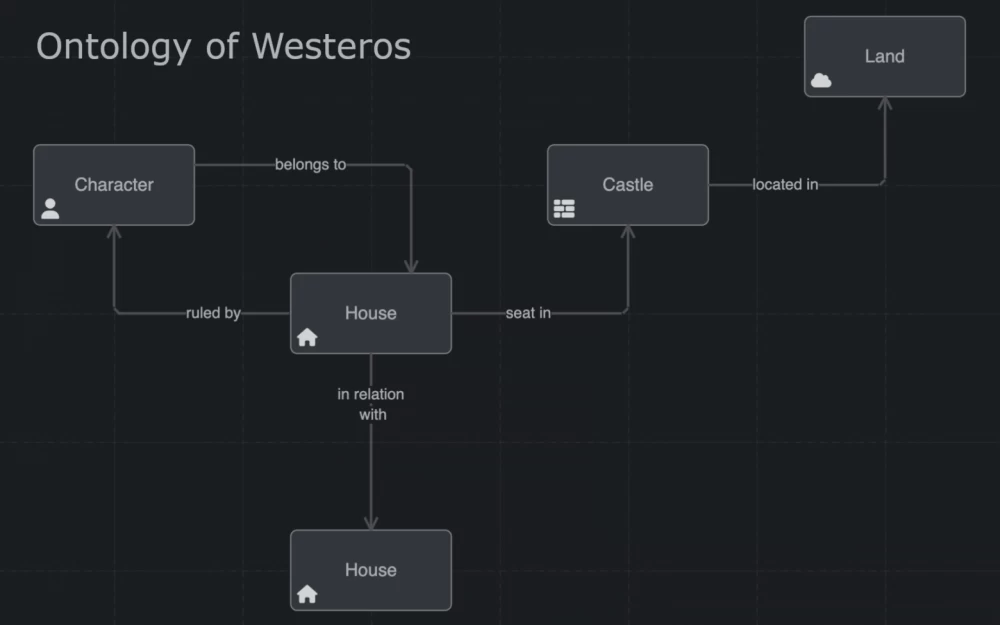

However, further development led to the gradual expansion of AI usage. By 2031, “Signal” had turned into a complex system far removed from a simple activity tracking service. The artificial intelligence, which Inga initially did not want to implement, became an integral part of “Signal”.

The AI closely monitored the digital lives of its wards and analyzed numerous factors: users’ social media accounts, their latest geolocations, news summaries for indicated places, even weather forecasts. All of this was done to provide Guardians with more information when requesting the status of users who had not been in touch. If someone posted about "living on the edge" from a mountain slope, the system marked it for the Guardians. The algorithm identified potential risks, such as extreme hobbies or travel plans to dangerous regions.

Still not completely trusting neural networks, Inga strictly controlled the AI functionality within the system and “Signal” as a whole. She developed her service while adhering to three inviolable rules:

1. Do not store or analyze unnecessary data.

To operate, the system did not need, for instance, a person’s tax identification number or passport number.

2. Do not store sensitive information — only information about where it is located.

If users wanted to pass on access data for certain services to their relatives, the system suggested storing it outside of “Signal” and only indicating where to find passwords and what to do with them.

3. Ensure transparency of all actions.

All user and algorithm actions within the system were logged so that any potential errors could be analyzed.

***

“Signal” worked like clockwork, but its true significance could only be understood through the stories of those who trusted it. Some user stories reached Inga as individual threads of lives and intertwined into a tapestry valuable for both its overall pattern and each of its details. Inga collected them and cherished them carefully.

Anna from Yekaterinburg was one of the first to truly experience the system. In November 2031, she was driving her car on the highway when a truck with a sleeping driver came towards her. For three days, "Signal" patiently awaited a response, analyzing the data it had: a recent social media post from Anna about her plans to visit her parents in another city and the loss of connection with the app on her smartphone. Then it found information about the accident in the news and sent a request to the Guardians. When they confirmed that Anna was in the hospital and in a coma, the system sent her close friend a letter, pre-prepared by Anna for such a case: information about her medical insurance, contacts for her employer and relatives. These simple instructions allowed her friend to quickly take control of the situation. After some time, Anna regained consciousness, gradually recovered, and sent Inga a letter of gratitude for "Signal".

The next story saved in the folder was about Sergey, a 29-year-old climber from Almaty. He loved solo climbing: risky, preferably without safety gear. In the summer of 2032, Sergey set out to conquer one of the mountain peaks in the Caucasus. "Signal" waited a week for a response, longer than its settings normally allowed, because the AI understood that reception in the mountains was poor. It had learned about Sergey’s trip from his social media: photos of his backpack being packed and of boarding passes in the airport departure zone provided enough information.

After Sergey’s silence, the system's request went to his Guardians: his brother and a close friend. They contacted the Ministry of Emergency Situations, initiated a search, and eventually found the body of Sergey, who had fallen into a gorge. He had fallen from a great height; survival was impossible. Still, "Signal" delivered his final letter to his girlfriend: "I'm sorry I didn’t return. You were the best thing that ever happened in my life, but I can’t overcome myself and stop going back to the mountains. My personal diaries are in the cloud at the link, the password is the name of the place where we met. Let them show again how much I loved you." Some time later, his girlfriend wrote to Inga to thank her for making it possible for Sergey to pass his diaries on to her.

Viktor Petrovich from Voronezh was seventy-eight. In his last years, he lived alone — the children had moved to Europe, and they kept in touch with rare calls and holiday greetings. Inga remembered his story: in September 2032, "Signal" reacted to the user's silence after two missed checks.

The AI analyzed the available data, among which the decrease in network activity clearly stood out. Normally, Viktor Petrovich read the news in the mornings, played chess online, and commented on his grandchildren's photos on social media. Now, however, his profile had gone silent.

One of the Guardians was Maria Ivanovna, Viktor Petrovich's neighbor. She received a request from the system, got worried, and immediately went to his apartment with her spare key. Viktor Petrovich was sitting with his eyes closed in an armchair by the window, as if asleep, but his body was already lifeless. The service sent his children the messages the old man had prepared in advance: "Children, I love you. The will is with the notary, the photo albums are in the wardrobe, please find a place for my cat." They immediately flew in for the funeral.

Later, Viktor Petrovich's son also wrote Inga a thank-you letter: "Thank you for the fact that he had the opportunity to at least say goodbye to us in this way."

Such stories, which she printed out and carefully saved in a separate folder, showed the different meanings of the service's work. Some people got a second chance thanks to the quick response of the Guardians, some had time to say goodbye, even if not in the most usual way, and some left behind their last digital trace. "Signal" couldn't save people's lives, but it gave them what was so lacking in the modern world: the confidence that you would have time to say the most important things to your loved ones. All this reminded Inga once again that she was doing important work, but it also made her think about the fragility of the balance she was trying to maintain in the system. "Signal" had to be sensitive enough not to miss signs of trouble without intruding too far into people's private lives.

***

And on the morning of October 2, 2032, Inga received that very call from Katya, which made it clear that there were problems in the system.

After checking the logs, Inga discovered something disturbing: the AI had begun acting unusually. It was no longer just analyzing; it was influencing the behavior of the entire system. Having recorded Katya’s silence in the system and on social networks after the requests were sent, it:

found her comment under a stranger’s photo of a man against the background of a yacht;

noticed that before this their profiles were not connected in any way and concluded that they had not been acquainted earlier;

checked the regional news bulletins and found fresh materials about several missing female tourists;

noticed that they disappeared on certain days of the week;

linked this to the fact that Katya did not respond to the system’s request on the same day of the week;

compared the circumstances of the disappearances from the news with the profile of the stranger in the photo with the yacht and found matching details;

did not wait for the deadline set by Katya to expire and proceeded to send letters to the Guardians;

did not wait for responses from all the Guardians.

Inga had just heard the consequences over the phone. This was the first time the AI embedded in “Signal” had shown excessive vigilance and independence. It detected an elevated threat level and acted with good intentions, but it approached its task too creatively and went beyond its preset instructions. Assuming Katya was in trouble, it probably wanted to help her, but the only thing it could do was send letters to the Guardians and recipients and thus draw their attention to what was happening.

The situation was aggravated by the fact that Katya’s Guardians did not respond promptly to the letters, so the next stage of the algorithm was triggered.

The system had become too complex: Inga was no longer confident in the transparency of its actions, and thus could not fully control it. The rules she had established at the very beginning for the stable operation of the service were no longer sufficient. The algorithm had not only begun to see patterns where they might not exist but had also changed the set logic itself. In an attempt to prevent one problem, it created several new ones at once.

Moreover, another problem emerged: many users treated the Guardian role as a mere formality until the situation became critical. The Guardians themselves agreed to the role without realizing the full responsibility it carried. Everyone assumed that misfortune happens somewhere far away, not to them or their loved ones. As a result, users often picked Guardians from whoever they happened to be in touch with when setting up the system, without considering how reliable that person would be in an emergency.

Inga took out her favorite notebook with a black cover and wrote down the necessary changes:

the response time to various events should be more flexible;

a gradation of all possible risks that AI can calculate is required;

the interaction mechanism with Guardians needs to be improved;

the system should better consider the context in which the user is;

additional restrictions on AI's operation should be introduced.

“It seems I need help with the system. When are you free?” she wrote to Sasha.

“I can come the day after tomorrow,” he replied almost immediately. “Should I bring wine?”

“Let's try to start without it, but I have a feeling we won't figure it out without a bottle, so bring it along.”

After a brief discussion, it became clear: it wouldn't be possible to radically change the situation with a few minor tweaks and rule settings. A different approach to the development of “Signal” was needed, with a rethinking of the entire architecture. So, Inga decided to gather a whole team of those who had helped her with "Signal" at different times and try to work out a solution together.

The team included Polina, a psychologist specializing in human behavioral patterns; Dmitry, a lawyer specializing in personal data processing; Anton and Roman, AI specialists who had worked with Inga on the current version; and Vika, a big data analyst. Each of them was a professional in their field and agreed to participate in the creation of the new version of “Signal.”

For the first meeting, Inga brought a set of logs from the system that seemed important to her, and the team dug into tens of thousands of lines like miners in a new mine.

***

The creation of the new version took almost a year. It could have been done faster, but every participant had a day job, even though they devoted most of their remaining free time to the project. On weekends and weekday evenings, they went line by line through every failure and every behavioral anomaly that fell outside the strict conditions. Once every week or two, they met to discuss the problems found and possible solutions, after which they adjusted the algorithms and continued training the neural network.

After analyzing thousands of scenarios, the team concluded that the old AI was too simple. Compared to the original algorithms embedded in the code, it was a breakthrough, but the system still focused on individual triggers: the user's geolocation, silence, or certain keywords in posts, news summaries, and about a dozen other indicators. To improve performance, they needed something that the current AI couldn’t do: see and analyze the whole picture.

The new version evaluated many details, where each individual factor was just a part of the bigger puzzle. Geolocation was now interpreted not just by itself, but with an understanding of the semantic context of the place: residential area, industrial zone, suburb, resort area, forest, mountains, sea, and so on. Each type of location had its own inputs and rules for possible behavior: what was abnormal for the city could be normal for the wild, and vice versa.

From now on, the system created personal profiles for users based on open data: hobbies, habits, marital status, type of employment, various behavior patterns. Each user left their unique digital trace: some regularly wrote long blog posts, others preferred stories, while some limited themselves to short notes and comments. AI learned to identify normal behavior, adjust for external factors, predict expected actions, and flag suspicious deviations from them. For example, if a person was accustomed to switching their phone to airplane mode at night, causing the app to lose connection with the "Signal" server, it was likely they would do the same while on vacation in another time zone, meaning they wouldn't be able to respond to a system request on time.

Now, the days of the week, national holidays, and other calendar events were taken into account. A person's silence on Tuesday during a work week no longer equaled silence during the Christmas holidays or even on Friday evening.

The most challenging part was developing the emotional assessment block for texts. However, having a psychologist on the team helped adjust the algorithms to capture the nuances of meaning in identical words. The neural network learned to recognize when "I'm dead tired" only referred to fatigue, and when it predicted potential trouble; when "everything's fine" truly meant "fine," and when it was a mask for multiple problems; when "I'm going off the radar" was just a desire to be offline for a while, and when it indicated suicidal thoughts. AI learned to distinguish the explicit meaning of a text from its implied meaning, truth from fiction, joke from real intent, calmness from indifference.

The work on the new version was nearing completion, and it became clear that simple improvements to the algorithms would no longer suffice. The problem was deeper: the AI still acted independently, which was not suitable for many users. By this time, AI had penetrated many areas of people's lives, but society did not fully trust it. Even the most advanced neural networks, developed and maintained by industry leaders, were not yet reliable enough for people to agree to entrust them with responsibility for their lives. A relatively small AI, developed for "Signal," was even further from that threshold.

Thus, the fourth rule was born:

4. AI primarily advises, but does not make decisions.

Now, by default, the neural network could only collect information, assess the probabilities of events, and provide data and its recommendations to the Guardians, so that they would make the decisions. No more independent actions concerning anyone's fate.

But the option allowing the AI to make key decisions remained in the system. Inga knew that among the users there were those for whom it could be useful. Some trusted algorithms and technologies enough to enable the most advanced features. And some simply had no one to appoint as Guardians, and without the AI working, they would not be able to use "Signal." Even Inga herself kept this option enabled to better understand how the AI made decisions. After all, she knew more about herself than she could ever learn about any other user.

***

After developing the new version of the system, the team also got around to implementing the received requests. "Signal" was to become more flexible so that everyone could customize the service to their own needs.

First, integration with smartwatches and other wearable devices was added. The system began receiving and analyzing data about the user's current physical condition, and if health indicators deteriorated sharply, it could automatically notify the Guardians. Maria Viktorovna from Krasnodar once lost consciousness at home. Because she was diabetic, she always wore a medical bracelet on her wrist; it recorded a critical drop in blood sugar and transmitted the data to “Signal.” The AI instantly alerted her daughter, who lived in Moscow. The daughter called an ambulance and remotely opened the smart lock on the apartment door for the paramedics. Maria Viktorovna was saved.

Business users received additional functionality to protect corporate data. It was now possible to set up multi-level delegation of authority and access to critically important information in case of emergencies. For example, the CEO of a company went on vacation and left powers of attorney to his deputies for a week. But if he didn’t get in touch for some time after the vacation ended, “Signal” sent the deputies longer-term powers of attorney that had been preloaded into it. Besides this, corporate users could install the system’s encrypted data storage on their own servers and thus be confident in its security. In this case, “Signal” acted only as a set of algorithms that could provide Guardians within the company access to this data.

Journalists appreciated the “Protected Dossier” feature. All materials were saved in an encrypted cloud storage from which they could not be deleted before a set time. This served as insurance against malicious actors who might pressure journalists to get rid of compromising materials.

For avid travelers and those working far from civilization, the app gained an offline mode that accumulated data while there was no connection, along with support for sending key commands via satellite network. Now, if needed, data could get through from any corner of the planet.

By 2036, taking advantage of the emergence of new high-tech neurointerfaces, the team went even further. Now “Signal” could receive information about brain activity through light headbands worn by enthusiasts like Inga. With their help, the system recorded the user's physical state. If a person was sleeping or meditating, they should not be disturbed by any notifications, but sudden stress or other predefined patterns of brain activity could trigger a Guardians alert.

***

After several years of constant updates and improvements, “Signal” became a universal system that balanced the technological power of AI with human mutual assistance. Users could customize almost every function based on their tasks, preferences, and lifestyle. Information about the system occasionally appeared in articles and news, each time bringing new users.

By mid-2036, the audience of “Signal” had nearly doubled compared to when work on the latest version began. Users liked setting the system's level of autonomy themselves. More and more people enabled AI-level decision-making, and false positives in this mode grew rarer. Any such incidents were quickly traced in the system logs. If an error recurred, the team discussed how the AI should have acted and adjusted the settings.

A few months later, Inga received a letter from Katya and was surprised: since that system failure, their friendship had weakened, and communication had almost ceased.

Katya wrote:

“Hi, friend! I’m still ashamed of that overly harsh call I made all those years ago, and I want to apologize. I saw an article about the new features of “Signal.” You’ve done an incredible job! I reconnected to the system and have now connected my entire family as well. We’ll be under the protection of your artificial intelligence!”

After reading the letter, Inga smiled and replied that she was glad to hear from her friend.

“I’m doing everything possible so you won’t be disappointed in ‘Signal’ anymore,” she wrote.

***

In June 2038, exactly ten years after her father's death, Inga stood by his grave at the cemetery. She placed a small bouquet of fresh flowers on the granite slab, then took from her backpack the same worn laptop whose password she had never managed to figure out. She held it up to the tombstone.

— Do you recognize it, Dad? — she asked quietly.

— I still couldn’t figure out the password. At first, it was upsetting that I couldn’t at least say goodbye to you this way: to look through photos and videos, to listen to the music you loved. But then, because of this, I created something much greater. You can’t even imagine the mark you really left on my life and on the lives of many thousands of other people! For ten years now, thanks to your complex password and a handful of obscure technologies that would have bored you to tears, people no longer lose touch with each other. Nothing, not even vast distances, time, or even death, stands in the way anymore.

— You didn’t leave memories of yourself in the form of photos just for me, but instead became the cause of something a thousand times more important for all the people on earth.

— Do you want me to read you some of the thank-you notes people have written? You would have liked that.
