Quantinuum reports progress toward quantum AI

A Quantinuum research team has taken a significant step toward quantum AI by presenting QDisCoCirc, the first scalable model for quantum natural language processing. The model combines quantum computing with AI to solve text tasks such as question answering, and it opens up new possibilities for interpretability and transparency in AI.

In a paper posted to the arXiv preprint server, Quantinuum researchers report the first implementation of scalable quantum natural language processing (QNLP). Their model, QDisCoCirc, combines quantum computing with artificial intelligence to solve text tasks such as question answering.

Ilyas Khan, founder and Chief Product Officer of Quantinuum, noted that while the team has not yet solved the problem at scale, this research is an important step towards demonstrating how interpretability and transparency can contribute to creating safer and more efficient generative AI.

“Bob [Coecke] has been working in the field of quantum natural language processing (QNLP) for over ten years, and for the past six years I have proudly watched this work progress as we prepared for the moment when quantum computers can actually solve applied problems,” Khan said. “Our work on compositional intelligence, published earlier this summer, laid the foundation for what interpretability means. Now we have the first experimental implementation of a fully functioning system, and it is incredibly exciting. Along with our research in areas such as chemistry, pharmaceuticals, biology, optimization, and cybersecurity, this will help accelerate scientific discoveries in the quantum sector in the near future.”

Khan added: "Although this is not yet a 'ChatGPT moment' for quantum technologies, we have already charted a path to their real-world practical significance. Progress along this path, I believe, will be tied to the concept of quantum supercomputers."

To date, quantum AI, and in particular the combination of quantum computing with natural language processing (NLP), remains a largely theoretical field with few confirmed studies. According to Khan and Bob Coecke, Chief Scientist of Quantinuum and Head of Quantum-Compositional Intelligence, this work aims to shed light on the intersection of quantum computing and NLP.

The research demonstrates how quantum systems can be applied to AI tasks in a more interpretable and scalable way than traditional methods.

"At Quantinuum, we have been working on NLP using quantum computers for some time. We are pleased to have recently conducted experiments that show not only the possibility of training models for quantum computers but also how to make these models interpretable for users. In addition, our theoretical research provides promising indications that quantum computers can be useful for interpretable NLP."

The experiment described in the paper relies on compositional generalization. The underlying compositional framework, borrowed from category theory and adapted for natural language processing, treats linguistic structures as mathematical objects that can be combined and integrated to solve AI tasks.

According to Quantinuum, one of the key challenges in quantum machine learning is scaling up training. To address it, the team relies on "compositional generalization": models are trained on small examples simulated on classical computers and then tested on much larger examples run on quantum computers. Because today's quantum computers have already grown too complex to be simulated classically, the scale and ambition of this work could increase quickly in the near future.
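The train-small, test-large idea can be illustrated with a minimal toy. It assumes a hypothetical lexicon with one parameter per word and hypothetical helpers (`Gate`, `build_text_circuit`, `parameters_used`); it is not Quantinuum's code or the QDisCoCirc API. It only shows the structural point: each word contributes the same parameterized block wherever it appears, so a longer text produces a wider, deeper circuit that needs no parameters beyond those already seen on small training texts.

```python
from dataclasses import dataclass

# Hypothetical lexicon: one trainable angle per word. In a real model these
# values would come from classical training on small texts.
LEXICON = {"Alice": 0.3, "Bob": 1.1, "Claire": 0.7, "follows": 2.0, "leaves": 0.5}


@dataclass
class Gate:
    word: str     # lexicon entry that supplies this gate's parameter
    wires: tuple  # character wires (qubits) the gate acts on


def build_text_circuit(sentences):
    """Turn (subject, verb, object-or-None) sentences into gates on character wires."""
    wires = {}
    gates = []
    for subj, verb, obj in sentences:
        for name in (subj, obj):
            if name is not None and name not in wires:
                wires[name] = len(wires)                  # new character -> new wire
                gates.append(Gate(name, (wires[name],)))  # its noun-state block
        targets = (wires[subj],) if obj is None else (wires[subj], wires[obj])
        gates.append(Gate(verb, targets))                 # the verb's block
    return len(wires), gates


def parameters_used(gates):
    """Lexicon parameters a circuit depends on (shared across repeated words)."""
    return {g.word: LEXICON[g.word] for g in gates}


# "Training" texts: small enough to simulate classically.
small_texts = [[("Alice", "follows", "Bob")], [("Claire", "leaves", None)]]
trained_words = set()
for text in small_texts:
    _, gates = build_text_circuit(text)
    trained_words |= set(parameters_used(gates))

# "Test" text: more characters and more gates, but no new parameters.
large_text = [("Alice", "follows", "Bob"), ("Bob", "leaves", None),
              ("Claire", "follows", "Alice"), ("Claire", "follows", "Bob")]
n_qubits, large_gates = build_text_circuit(large_text)
print(n_qubits, len(large_gates), set(parameters_used(large_gates)) <= trained_words)
```

The training texts each use at most two wires, while the test text needs three wires and seven gates; every parameter it uses was already fixed on the small texts.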

The researchers say their approach addresses one of the main problems in quantum machine learning, the so-called "barren plateau" problem, in which training large quantum models becomes inefficient because the gradients vanish. Quantinuum's research provides compelling evidence that quantum systems can not only solve certain problems more efficiently but also bring transparency to AI model decision-making, an important issue in modern AI.
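To show what vanishing gradients look like, the sketch below uses a standard textbook toy rather than anything from the paper: a product-state ansatz of RY rotations with a global cost, the probability of measuring all zeros. For angles drawn uniformly at random, the variance of one gradient component works out to (1/8) x (3/8)^(n-1), which shrinks exponentially with the number of qubits n. The function names are hypothetical.

```python
import math
import random


def global_cost_gradient(thetas):
    """dC/d(theta_0) for C = prod_i cos^2(theta_i / 2).

    C is the probability of measuring |0...0> after applying RY(theta_i) to each
    qubit of |0...0>. This product-state ansatz is a textbook toy, not QDisCoCirc.
    """
    grad = -math.sin(thetas[0]) / 2  # derivative of cos^2(t/2) is -sin(t)/2
    for t in thetas[1:]:
        grad *= math.cos(t / 2) ** 2
    return grad


def analytic_variance(n_qubits):
    """Var[dC/d(theta_0)] when every angle is uniform on [0, 2*pi).

    The mean gradient is 0, so the variance is E[grad^2]:
    E[sin^2(t)/4] = 1/8 for the differentiated qubit, E[cos^4(t/2)] = 3/8 for each other one.
    """
    return (1 / 8) * (3 / 8) ** (n_qubits - 1)


def sampled_variance(n_qubits, samples=20000, seed=0):
    """Monte Carlo estimate of the same variance over random initializations."""
    rng = random.Random(seed)
    grads = [global_cost_gradient([rng.uniform(0, 2 * math.pi) for _ in range(n_qubits)])
             for _ in range(samples)]
    mean = sum(grads) / samples
    return sum((g - mean) ** 2 for g in grads) / (samples - 1)


for n in (1, 2, 4, 8):
    print(n, analytic_variance(n), sampled_variance(n))
```

Under this toy's assumptions, the gradient signal is strongest when n is small, which gives some intuition for why training only on small instances can help.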

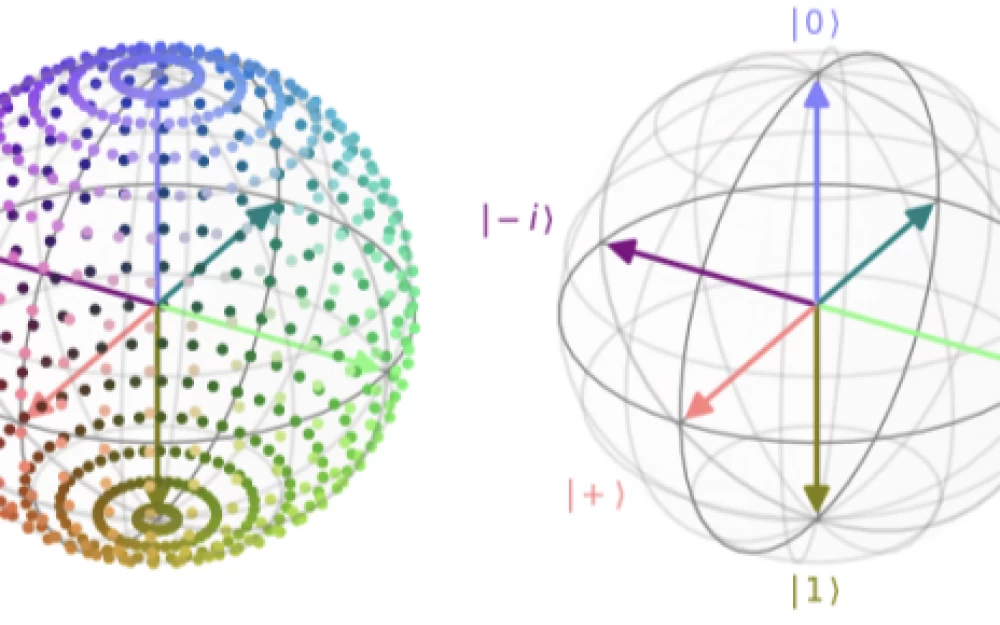

These results were achieved on Quantinuum's H1-1 trapped-ion quantum processor, which provided the computational power to execute the quantum circuits underlying the QDisCoCirc model. QDisCoCirc uses compositionality principles borrowed from linguistics and category theory to break complex textual data down into simpler, more understandable components.

In their paper, the researchers emphasize the importance of the H1-1 processor for the experiments: "We present experimental results for the question-answering task using QDisCoCirc. This is the first verified implementation of scalable compositional quantum natural language processing (QNLP). To demonstrate compositional generalization on a real device, beyond the instance sizes that were classically simulated, we used the H1-1 trapped-ion quantum processor, which offers state-of-the-art two-qubit gate fidelity."

Practical implications

This research has significant practical implications for the future of AI and quantum computing. One of the most important is the possibility of using quantum AI to build interpretable models. In modern large language models (LLMs) such as GPT-4, the decision-making process often remains a "black box," making it difficult for researchers to understand how and why particular outputs are generated. By contrast, the QDisCoCirc model makes it possible to observe internal quantum states and the relationships between words or sentences, giving a clearer picture of how decisions are made.

In practice, this opens up opportunities for applications such as question-answering systems, where it matters not only to get the correct answer but also to understand how the machine reached it. The interpretable, compositional approach of quantum AI could be used in law, medicine, and finance, where transparency and accountability of AI systems play a key role.

The research also demonstrated compositional generalization in practice: a model trained on small examples generalized to larger and more complex inputs. According to the study's authors, this could be a significant advantage over traditional models such as transformers and LSTMs, which did not generalize as well when tested on long and complex texts.

Better than classical?

Beyond NLP applications, the researchers also examined whether quantum circuits can outperform classical models such as GPT-4 in some cases. The classical machine learning models they tested, including GPT-4-class models, did not perform well on the compositional tasks: their accuracy was at the level of random guessing. This suggests that quantum systems, as they scale, may be uniquely suited to processing more complex forms of language. That could matter most when working with large datasets, although large language models such as GPT are likely to keep improving.

The study showed that the classical models failed to generalize to larger text instances, while the quantum circuits handled them successfully, demonstrating compositional generalization.

The researchers also report that the quantum models are more resource-efficient. Classical computers struggle to simulate the behavior of quantum systems at scale, which suggests that quantum computers may become necessary for solving large NLP tasks in the future.

“As the size of text circuits increases, classical modeling becomes impractical, highlighting the need for quantum systems to solve these tasks.”
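A back-of-the-envelope calculation shows why. A dense state-vector simulation stores 2^n complex amplitudes for n qubits; the sketch below assumes 16 bytes per amplitude (double-precision complex numbers). Other classical methods, such as tensor networks, can do much better on weakly entangled circuits, so this is an illustration of the worst-case growth rather than a hard limit.

```python
def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory for a dense n-qubit state vector: 2**n complex amplitudes.

    The default of 16 bytes assumes double-precision complex numbers
    (two 64-bit floats per amplitude); single precision would halve it.
    """
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (20, 30, 40, 50):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {gib:,.3f} GiB")
```

Each added qubit doubles the memory: 30 qubits need 16 GiB, while 50 qubits already need 16,777,216 GiB (16 PiB).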

Methods and experimental setup

As a proof of concept for the QDisCoCirc model, the researchers built datasets of simple binary (yes/no) question-answer tasks. These datasets were designed to test how well quantum circuits handle basic linguistic tasks, such as identifying relationships between characters in a text. The team used parameterized quantum circuits to create word embeddings, that is, mathematical representations of words. These embeddings were then composed into larger text circuits, which were evaluated on a quantum processor.
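The pipeline described above can be sketched with a tiny pure-Python state-vector simulator. Everything here is a hypothetical toy: one qubit per character, hand-set gates in place of trained word circuits (an RY rotation for "turns", a CNOT for "follows"), and a yes/no answer read off as whether the probability of measuring 1 on a character's qubit exceeds 0.5. The actual QDisCoCirc word circuits, datasets, and readout are defined in the paper and are not reproduced here.

```python
import math

# Hypothetical hand-set "word circuits"; a real model would learn these.
TURN_ANGLE = math.pi  # "X turns": RY(pi) maps |0> (facing west) to |1> (facing east)


def apply_ry(state, n, q, theta):
    """Apply RY(theta) to qubit q of an n-qubit real state vector (qubit 0 = leftmost bit)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    bit = 1 << (n - 1 - q)
    new = state[:]
    for i in range(len(state)):
        if not i & bit:
            a0, a1 = state[i], state[i | bit]
            new[i] = c * a0 - s * a1
            new[i | bit] = s * a0 + c * a1
    return new


def apply_cnot(state, n, control, target):
    """Flip the target qubit on every basis state where the control qubit is 1."""
    cbit, tbit = 1 << (n - 1 - control), 1 << (n - 1 - target)
    new = state[:]
    for i in range(len(state)):
        if i & cbit:
            new[i] = state[i ^ tbit]
    return new


def prob_one(state, n, q):
    """Probability of measuring 1 on qubit q (amplitudes are real here)."""
    bit = 1 << (n - 1 - q)
    return sum(a * a for i, a in enumerate(state) if i & bit)


def run_text(characters, sentences):
    """Build and simulate a text circuit with one wire per character."""
    n = len(characters)
    wire = {name: i for i, name in enumerate(characters)}
    state = [0.0] * (2 ** n)
    state[0] = 1.0  # every character starts in |0> (facing west)
    for sentence in sentences:
        subject, verb = sentence[0], sentence[1]
        if verb == "turns":
            state = apply_ry(state, n, wire[subject], TURN_ANGLE)
        elif verb == "follows":
            # CNOT from the leader's wire: copies the leader's direction
            # when the follower still faces west.
            state = apply_cnot(state, n, wire[sentence[2]], wire[subject])
        else:
            raise ValueError(f"word not in toy lexicon: {verb}")
    return state, wire


def answer(state, wire, character):
    """Binary question 'Is <character> facing east?': yes if P(1) > 0.5."""
    return prob_one(state, len(wire), wire[character]) > 0.5


state, wire = run_text(["Alice", "Bob", "Claire"],
                       [("Alice", "turns"), ("Bob", "follows", "Alice")])
print(answer(state, wire, "Bob"), answer(state, wire, "Claire"))
```

Because each character keeps its own wire, the state can be inspected after any sentence, a small-scale analogue of the access to internal states that the authors emphasize.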

Coecke and Khan note that this approach preserves model interpretability while exploiting the advantages of quantum mechanics, and that its significance will grow as quantum computers become more powerful.

“We consider the ‘compositional interpretability’ proposed in the article as a solution to the problems faced by modern AI.” Compositional interpretability involves giving understandable meaning to the components of the model, for example, in natural language, and then understanding how these components interact and combine with each other.

Limitations and possibilities

Despite significant progress, the study has its limitations. One of the key issues, according to the team, is the current scale of quantum processors. Although the QDisCoCirc model shows great potential, the researchers note that larger and more complex problems will require quantum computers with more qubits and higher fidelity. They acknowledge that their results are at the proof-of-concept stage but also point to the rapid development of quantum technologies. Quantum computers have entered a new era, which Microsoft calls the era of "reliable" quantum computing and IBM calls "utility-scale" quantum computing.

"Scaling these computations to more complex real-world tasks remains a serious challenge due to existing hardware limitations," the researchers write, adding that the situation in this area is changing rapidly.

The current study also focuses only on binary (yes/no) questions, a simplified form of natural language processing (NLP). In the future, the team plans to explore more complex tasks, such as parsing entire paragraphs or working with multiple layers of context. The researchers are already looking for ways to extend the model to more complex textual data and a wider range of linguistic structures.
