
How to cook EdgeAI in 2024/2025

I have a hobby: testing different boards for AI.

Why? I have been working in Computer Vision for over 15 years. I started with classical CV; now it's transformers and all that. These days I mostly lead teams, working out how to properly combine product and mathematics.

A lot of my work is about Computer Vision on the Edge. At some point, I realized I was lacking information. You want to read something about a new board, and there is nothing but an enthusiastic press release. If you are lucky, there is also a video of the official examples being launched, but usually not even that.

So at some point, I started testing everything myself, to understand what is possible and what is not.

Most of these tests are on my Telegram channel.

Once every year or two, I write a review article, and this is one of them. Here I will go through the criteria that matter when choosing AI boards, and briefly review the main boards on the market (mostly with links to my reviews).

My previous review (for 2022) can be found here on Tekkix.

In this review, I will slightly change the structure:

I will go through the criteria for choosing boards, and for each criterion give a few typical examples where it plays out well and where it does not.

I will review all the main boards.

One more thing: the article was originally written here. This translation is the author's version, with minor edits and additions made after publication. There is also a version on YouTube:

Criteria

The criteria for choosing boards can be divided into several categories:

Product

Engineering

Research

These criteria have no clear boundaries. Power consumption, for instance, can be called both a product and an engineering criterion. Still, it is useful to divide them somehow, to highlight which parts of the company each one affects.

Product

Product criteria describe how the board looks from the point of view of the customer or the product:

The price of the board in production. A board based on SG2002 can cost $5, while a board based on Jetson Orin can reach $1000, with a continuum of solutions in between.

The cost of developing ML on the board. On Jetson it will be minimal; on some microcontroller, maximal.

Whether you need your own hardware production. Some platforms are sold only as chips, for example Hailo-15: you cannot buy a ready-made board based on it. You will also need your own design if you require a specific connector configuration or the lowest possible price.

The possibility of producing a large batch. Everyone knows about the supply problems with Jetsons. The only ones without problems are those who got promises from Nvidia itself…

The country where the product will be released. If you release a product in Russia and you are not connected with the government, getting Jetsons will be expensive and difficult. If you release a product in America, you will not be able to buy Huawei, and there would be no point anyway, since it could not be sold there. If you make a product for hospitals in Europe, you most likely will not be able to use RockChip (because of both certification and restrictions on equipment suppliers).

The power consumption of the board. Integrating face recognition into a battery-powered doorbell calls for one class of boards; if you can spend hundreds of watts on recognition, that is a different class.

When working through the product criteria, set rough limits for each item: target volumes, price range, form factor, countries, and so on.
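One way to keep these limits honest is to write them down and filter the candidates mechanically. A minimal sketch, assuming a hypothetical shortlist: the board names, prices, power figures, and market restrictions below are made-up placeholders, not measurements or recommendations.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    # All values are hypothetical placeholders for illustration only.
    name: str
    unit_price_usd: float
    power_w: float
    # Markets where the board is hard to buy, certify, or sell.
    restricted_in: frozenset = field(default_factory=frozenset)


@dataclass
class ProductLimits:
    max_price_usd: float
    max_power_w: float
    market: str


def shortlist(candidates, limits):
    """Return the names of candidates that satisfy every product limit."""
    return [
        c.name
        for c in candidates
        if c.unit_price_usd <= limits.max_price_usd
        and c.power_w <= limits.max_power_w
        and limits.market not in c.restricted_in
    ]


if __name__ == "__main__":
    boards = [
        Candidate("board_a", unit_price_usd=1000.0, power_w=40.0),
        Candidate("board_b", unit_price_usd=80.0, power_w=8.0,
                  restricted_in=frozenset({"eu_medical"})),
        Candidate("board_c", unit_price_usd=5.0, power_w=1.0),
    ]
    limits = ProductLimits(max_price_usd=100.0, max_power_w=10.0, market="eu_medical")
    print(shortlist(boards, limits))  # ['board_c']
```

The filter itself is trivial; the real work is filling in the table truthfully for each candidate.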

Engineering

Engineering criteria describe what it takes to work with the board, and what your company is prepared to handle.

System. Some people want Windows, but this is rather rare. Linux? Which one: Ubuntu, Yocto, or Buildroot? Or maybe no Linux at all, just bare metal as on ESP32? Or bare metal plus MicroPython?

Obviously, this strongly affects what the team must be able to do, how convenient fleet management is, and how simple the hardware is to manufacture.

Is it a separate device? Some neural network accelerators are separate boards, while others are integrated into the processor. Clearly, these are different inference options suited to different tasks.

How powerful the CPU is. Running the neural network is often not the only thing the algorithm needs, so check whether the CPU can keep up:

Can it preprocess images in time?

Can it decode and encode video in time?

Can it handle 3D?

Support from the manufacturer. Such boards are often very limited and their documentation incomplete. Will you need consultations with the manufacturer during development? Open sources are not always enough.
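The CPU question above comes down to a per-frame time budget. A minimal sketch, assuming hypothetical stage timings (measure your own): at a target frame rate the budget is 1000 / FPS milliseconds per frame, and the stages either run one after another or overlap in a pipeline.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available per frame at the target frame rate, in milliseconds."""
    return 1000.0 / target_fps


def fits_budget(target_fps, stage_ms, pipelined=False):
    """Check whether the per-frame stages keep up with the target FPS.

    Sequential: one frame passes through all stages before the next starts,
    so the cost is the sum. Pipelined: stages work on different frames at
    the same time (e.g. CPU preprocessing overlaps NPU inference), so
    throughput is limited by the slowest stage.
    """
    cost = max(stage_ms) if pipelined else sum(stage_ms)
    return cost <= frame_budget_ms(target_fps)


if __name__ == "__main__":
    # Hypothetical timings in ms: decode + resize on CPU, NPU inference, postprocessing.
    stages = [12.0, 15.0, 10.0]
    print(round(frame_budget_ms(30), 2))            # 33.33
    print(fits_budget(30, stages))                  # False: 37 ms > 33.3 ms
    print(fits_budget(30, stages, pipelined=True))  # True: 15 ms < 33.3 ms
```

Pipelining raises throughput but not latency: each frame still spends the sum of all stages end to end.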

Research

These criteria concern the AI you put inside. They are the ones most often neglected when choosing a board, and wrongly so: they can change development time tenfold or more.

Inference speed. This is a key parameter for many applications. Obviously, if the board delivers only 1 FPS for detection, nothing can be done when you need to detect objects at 1000 FPS.

Supported layers. Complexity of export tools. What does the manufacturer provide? Is quantization needed? What about LLM support?

Memory size, memory speed.
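Memory size is often the first hard limit, especially for LLMs. A back-of-the-envelope sketch: the weights alone need roughly parameters times bytes per parameter. The 7-billion-parameter model in the example is a hypothetical size chosen for round numbers.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(num_params: float, dtype: str) -> float:
    """Lower bound on memory for the weights alone, in decimal GB (1e9 bytes).

    Activations, the KV cache (for LLMs), runtime buffers, and the OS
    all come on top of this.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for dtype in ("fp16", "int8", "int4"):
        print(dtype, weights_gb(7e9, dtype))
    # fp16 14.0
    # int8 7.0
    # int4 3.5
```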

Jetson family

Jetson now seems to be perceived as "edge by default". The first time I built Caffe on one was back in 2015, and they have only gotten better since then.

The current series is Jetson Orin. It has three types of devices (Nano, NX, AGX) that differ in price and computing capabilities. Older Jetsons are still in use, but much less often than the current ones.

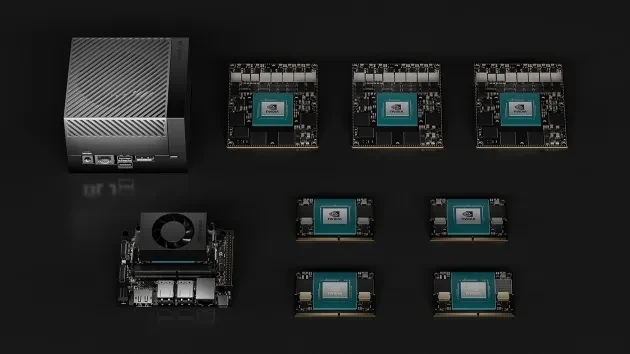

The AGX and NX lines each have several variants, again differing in price and speed:

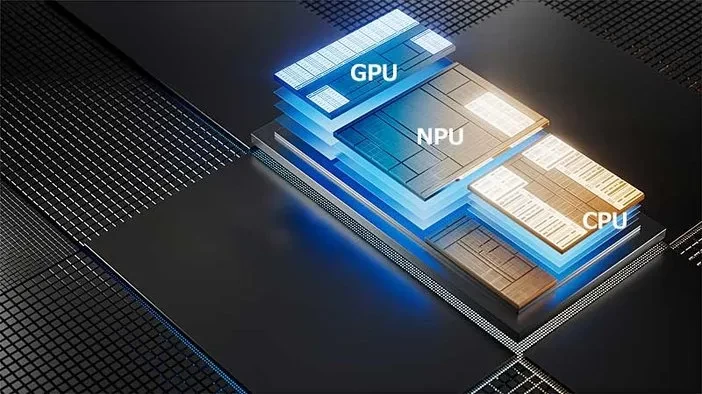

Jetson is primarily a GPU board. In some versions the GPU delivers less performance than the NPU, but the GPU is still much more convenient (no quantization needed). The CPU in Jetson is quite weak for running networks, and not every model has an NPU yet.

The key difference between the models is the NPU (NVIDIA calls it DLA). The Nano has none, the NX has one or two (depending on the model), and on the AGX it runs at almost three times the frequency.

Here is my review of the previous-generation Jetson Nano. It is already significantly inferior in performance, but most of the ideas and the workflow remain the same.

The current NPU performs well only in INT8, but thanks to fallback, individual layers it cannot handle can be computed on the GPU.
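Fallback splits the network into segments that run on different devices. A simplified sketch of the idea, assuming a purely sequential network and a hypothetical set of NPU-supported ops: consecutive supported layers are grouped onto the NPU, everything else goes to the GPU, and each boundary is a data hand-off between the two. On Jetson, TensorRT performs this kind of split itself when GPU fallback is enabled for the DLA; the code below does not use TensorRT.

```python
from itertools import groupby

# Hypothetical set of ops the NPU can execute; the real list comes from the
# vendor's documentation or the compiler's report.
NPU_OPS = frozenset({"conv", "relu", "add", "maxpool"})


def partition(layers, npu_ops=NPU_OPS):
    """Split a sequential list of layer types into contiguous device segments.

    Each boundary between segments is a tensor hand-off between NPU and GPU.
    """
    return [
        (device, list(group))
        for device, group in groupby(
            layers, key=lambda op: "npu" if op in npu_ops else "gpu"
        )
    ]


if __name__ == "__main__":
    net = ["conv", "relu", "conv", "softmax", "add", "relu"]
    segments = partition(net)
    print(segments)
    # [('npu', ['conv', 'relu', 'conv']), ('gpu', ['softmax']), ('npu', ['add', 'relu'])]
    print("hand-offs:", len(segments) - 1)  # hand-offs: 2
```

The more segments, the more hand-offs, which is why a single unsupported layer in the middle of a network can eat a noticeable part of the NPU's speed-up.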

The advantages of Jetson include:

A huge infrastructure around it. TensorRT, Triton, CUDA, etc. Almost everything that can be run on a desktop can be easily run here.

A huge amount of information online. Almost any problem already has a thread somewhere.

Support for modern models. Yes, something may not work, but most LLMs, VLMs, and other models already run here. This is a qualitative difference from 95% of boards.

High speed. In TOPS per dollar, Jetson may not be the most cost-effective, but among boards of this format it is clearly one of the most powerful.

The ability to write low-level code through TensorRT.

The disadvantages include:

Price. Jetsons are expensive. An assembled device on NX will cost about $1000 right away, which is not cheap.

Availability. There are only a few ways to secure an uninterrupted supply of Jetsons: either you have guarantees from Nvidia itself, or you are some kind of government (a nod to drones). In all other cases, you cannot guarantee batches of thousands of devices.

Power consumption. Nvidia often reports that each new Jetson is more energy efficient than the last. Maybe so, but each new one also draws more power. For NX it is now about 40 W, which is not small.

The NPU is primarily focused on INT8.

x86 (Intel, AMD)

Speaking about x86, Intel comes first: its AI support is much stronger than AMD's. The latest chips include an NPU, and OpenVINO has long supported Intel GPUs (where performance is quite good).

The main disadvantage of such computers is high power consumption. At the same time, performance is comparable to Jetson, and the devices are much easier to get. There are many of them, from N100 mini-PCs for about $70 to boxes costing up to $1000.

Advantages:

Availability

Good community and support. Basic CPU inference works out of the box: ONNX Runtime, OpenVINO, TorchScript, etc.

The ability to efficiently compute all modern networks (some through PyTorch, but still).

Disadvantages

NPU and GPU are not in every device

For some networks, support and speed are worse than on Nvidia

Power consumption is often higher than Jetson's.

Prices are comparable to Jetson

Other CPUs (ARM, RISC-V)

To close the "classic" branch, I will also mention other CPUs here. ARM and RISC-V boards suitable for embedded development are usually significantly slower than x86. That said, they are sometimes fast enough for many problems. The RockChip, MediaTek, and Huawei chips discussed below have more than decent processors that can handle ML in many situations (CV, NLP, etc.). And "import onnxruntime" working out of the box is, of course, super simple and convenient.

But, of course, they lose on power efficiency to most NPU modules, and on maximum speed to x86 and good GPUs (such as Intel's).
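With ONNX Runtime, the same script can run on a plain ARM or x86 CPU and pick up an accelerator when one is available. A minimal sketch of the provider-selection logic; the preference order is an assumption, while the provider names are ONNX Runtime's own.

```python
# Preference order is an assumption: put the fastest backend for your
# hardware first. The names are ONNX Runtime execution-provider names.
PREFERRED_PROVIDERS = [
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "OpenVINOExecutionProvider",
    "CPUExecutionProvider",
]


def pick_providers(available, preferred=PREFERRED_PROVIDERS):
    """Keep preferred providers that are available, in order, with CPU as the last resort."""
    chosen = [p for p in preferred if p in available]
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


if __name__ == "__main__":
    # On a real device, take this list from onnxruntime.get_available_providers().
    print(pick_providers(["OpenVINOExecutionProvider", "CPUExecutionProvider"]))
    # ['OpenVINOExecutionProvider', 'CPUExecutionProvider']
```

On a real device you would pass the result to `onnxruntime.InferenceSession("model.onnx", providers=pick_providers(onnxruntime.get_available_providers()))`, where `model.onnx` is a placeholder path. The OpenVINO provider is only present in the separate onnxruntime-openvino build.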

RockChip

My channel probably has more videos about RockChip than about anything else (1,2,3,4,5,6,7). There are really many RockChip boards now, and they are very good for ML tasks: a wonderful NPU module with great support for various networks. A good half of modern Edge boards are based on them:

OrangePi

Radxa (RockPi)

Banana Pi

NanoPC

Khadas

FireFly

And many others.

They are built on different chips:

RK3588 - the most powerful and top-performing (plus a bunch of cut-down variants: RK3588S, RK3582)

RK3568 - one of the older chips. Quite slow and not optimal in price, but when I was choosing, it was faster than RPi and one and a half times cheaper.

RK3566 - a super cheap chip (Linux + NPU)

RK3576 - a counterpart to the RK3588, with a somewhat simpler CPU

RV1106, RV1103, and several others - chips without full Linux or Python inference

RK3399Pro - the oldest chip with an NPU, now barely supported.

Etc.

The advantages of RockChip include:

Price

Availability. Can be purchased from dozens of different manufacturers. Individually and in large batches.

Range of supported networks. Of course, they fall short of Jetson, but you can find almost any network. The boundary is now roughly at VLMs: LLMs are supported, VLMs are not. Some transformers work, but the only Whisper port is from one team, under the GPL license.

Many diverse form factors. You can buy a fully ready-made board or design it from scratch.

The NPU also computes in FP16. This is very important, because not every network can be quickly and easily run in INT8.

There is some low-level access to the NPU, so you can run a lot of math on it by hand.
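Why FP16 support matters can be shown with a few numbers. A minimal sketch with hypothetical activation values, comparing an FP16 round trip (via Python's half-precision `struct` format `e`) with naive symmetric per-tensor INT8, where a single outlier sets the scale for the whole tensor.

```python
import struct


def fp16_roundtrip(x: float) -> float:
    """Round a value to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def int8_roundtrip(values):
    """Symmetric per-tensor INT8: one scale for the whole tensor."""
    scale = max(abs(v) for v in values) / 127.0
    return [max(-127, min(127, round(v / scale))) * scale for v in values]


def max_abs_error(ref, approx):
    return max(abs(a - b) for a, b in zip(ref, approx))


if __name__ == "__main__":
    # Small activations plus one large outlier (hypothetical values).
    values = [0.01, -0.02, 0.03, 0.005, 50.0]
    fp16 = [fp16_roundtrip(v) for v in values]
    int8 = int8_roundtrip(values)
    print("fp16 max error:", max_abs_error(values, fp16))  # below 1e-5
    print("int8 max error:", max_abs_error(values, int8))  # small values all collapse to 0
```

Per-channel scales and smarter calibration reduce this effect, but some networks stay sensitive to INT8, and that is where FP16 on the NPU helps.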

Cons:

Quality. Many vendors ship half-baked systems based on RockChip, and RockChip itself is not known for high-quality code.

This is a Chinese chip. In the USA and EU, it may fall under various restrictions.

Not every network can be run.

Complex NPU architecture in the top-end models. There is no inference server, so you have to build multi-threaded inference yourself to get maximum speed.
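A minimal sketch of such multi-threaded inference, assuming one runtime instance per NPU core (the RK3588 NPU has three cores). `FakeNpuRuntime` is a hypothetical stand-in, not the RockChip API; in a real deployment it would be replaced by the vendor runtime bound to a specific core, and the parallelism only pays off if that native call releases Python's GIL while it blocks (an assumption to verify for your runtime).

```python
import queue
from concurrent.futures import ThreadPoolExecutor


class FakeNpuRuntime:
    """Hypothetical stand-in for a vendor runtime bound to one NPU core."""

    def __init__(self, core_id: int):
        self.core_id = core_id

    def infer(self, frame):
        # Placeholder for the real (blocking) NPU call.
        return frame * 2


def run_parallel(frames, num_cores=3):
    """Spread frames across one runtime per NPU core, keeping output order."""
    free_runtimes = queue.Queue()
    for core_id in range(num_cores):
        free_runtimes.put(FakeNpuRuntime(core_id))

    def worker(frame):
        runtime = free_runtimes.get()  # borrow an idle core (blocks if all are busy)
        try:
            return runtime.infer(frame)
        finally:
            free_runtimes.put(runtime)  # hand the core back for the next frame

    with ThreadPoolExecutor(max_workers=num_cores) as pool:
        # map() returns results in input order even if frames finish out of order.
        return list(pool.map(worker, frames))


if __name__ == "__main__":
    print(run_parallel(range(6)))  # [0, 2, 4, 6, 8, 10]
```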

Qualcomm

Recently, there have been rumors that Qualcomm is going to buy Intel. Either way, Qualcomm is a strong competitor in Edge Computing.

I don't have a video about Qualcomm on my channel yet, and the last time I developed on Qualcomm was 3 years ago; a lot has changed since then. When I get the chance to test RB3, I will add it to the channel.

Pros

Fast inference

Cheap in large batches

Good enough neural network support and documentation. 3 years ago there were problems - now there are fewer.

Cons

A lot of bureaucracy. Access to the development environment can take up to a month. You cannot buy a board and sign all the contracts as a private individual.

No open information. Everything is under NDA, so you cannot predict in advance whether your system will work. For example, I am almost sure that LLMs (and VLMs) do not fully work.

As far as I know, there is no low-level access to the NPU.

VeriSilicon

This NPU is truly ubiquitous. Yet you have most likely never heard of VeriSilicon boards, because they don't exist: the company sells chip designs, and many chips carry a VeriSilicon NPU. For example:

NXP - one of the largest electronics manufacturers. I have a review of Debix on my channel.

Amlogic - one of the leaders in small-sized processors (though it seems their latest chips no longer use VeriSilicon). I have a review of the Khadas VIM3, based on the Amlogic A311D, on my channel.

STM32. Of course, not in all chips, only the most powerful ones. I have a short interview with their representative on my channel.

Synaptics.

Broadcom.

I'm sure I'm missing a lot.

Since VeriSilicon provides only the hardware design and a set of low-level libraries, the experience with two different vendors can be fundamentally different; check out the videos above. If the vendor is good, like NXP, using it is super intuitive.

Pros

This is a fairly energy-efficient architecture

Many vendors sell in different form factors

Chips are quite cheap

Cons

With some vendors, model export does not work very well

These are not super fast boards

Not all networks are supported. No LLM, VLM, etc. And unfortunately, this cannot be fixed - there is no low-level access to the NPU.

External accelerators

In this section, I will talk about accelerators in general, although each of them deserves a separate article, or even several. What do accelerators have in common? They try to solve the problem of "adding the missing AI power to your system", and they connect as a separate device.

The most important thing about an accelerator is how it is connected. Most are PCIe (M.2) or USB accelerators. What matters:

How much data can be transferred through the channel. If you run your networks on large images or video, this can be very limiting. Accelerators range from PCIe Gen2 x1 to PCIe Gen4 x4, and the host device matters too. For example, the Raspberry Pi 5 has only one lane (officially PCIe Gen2, though in practice it can be switched to Gen3).

What the transfer latency is. If you need to react quickly, this is critical.

Whether the host processor is fast enough to prepare the data and send it over the bus. On slow boards, overall processing can be much slower even with a fast accelerator.
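The bandwidth question can be estimated on paper. A rough sketch that uses approximate per-lane PCIe throughput after line encoding and ignores protocol overhead, results coming back, and latency, so real numbers will be lower. The frame sizes are illustrative.

```python
# Approximate per-lane bandwidth in MB/s after line encoding
# (8b/10b for Gen1/Gen2, 128b/130b for Gen3/Gen4). Protocol overhead
# makes real-world throughput noticeably lower.
PCIE_LANE_MB_S = {1: 250, 2: 500, 3: 985, 4: 1969}


def max_fps_over_link(width, height, channels, bytes_per_value, gen, lanes):
    """Upper bound on frames/s the link can carry (host to accelerator only)."""
    frame_bytes = width * height * channels * bytes_per_value
    link_bytes_per_s = PCIE_LANE_MB_S[gen] * lanes * 1_000_000
    return link_bytes_per_s / frame_bytes


if __name__ == "__main__":
    # Full-HD RGB frame as uint8 vs float32 over a single PCIe Gen2 lane.
    print(round(max_fps_over_link(1920, 1080, 3, 1, gen=2, lanes=1)))  # 80
    print(round(max_fps_over_link(1920, 1080, 3, 4, gen=2, lanes=1)))  # 20
    # The same uint8 frame over PCIe Gen4 x4.
    print(round(max_fps_over_link(1920, 1080, 3, 1, gen=4, lanes=4)))  # 1266
```

Resizing frames on the host and sending uint8 instead of float32 both raise this ceiling directly, and a single Gen2 lane is already tight for full-resolution video.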

Which accelerators are the most popular?

Hailo

Hailo-8, Hailo-10. I have two videos about them on my channel (1, 2), and a third one is coming soon. I have used Hailo in practice and consulted several companies on it, so in the videos you will find my real feedback and a detailed review.

Pros:

Good support, open community

Easy to buy

Guides available for RPi

Fast

Very good guides for model export. A lot of quantization algorithms out of the box

Cons:

Hailo costs more than a RockChip board (but less than Jetson)

Quantization is mandatory, with no way around it.

Many transformer-based models do not work (LLM/VLM/Whisper, maybe others). Hailo promises to improve support and released Hailo-10 specifically for LLMs, but there is no way to actually run them yet.
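Mandatory quantization means calibration choices matter. A minimal sketch of two generic calibration strategies for an INT8 scale, min-max and percentile clipping, on hypothetical calibration data with one outlier. This is a textbook illustration, not a description of Hailo's own algorithms.

```python
def minmax_scale(samples):
    """INT8 scale from the absolute maximum: nothing is clipped, but steps are coarse."""
    return max(abs(v) for v in samples) / 127.0


def percentile_scale(samples, pct=99.9):
    """INT8 scale from a high percentile of |x|: rare outliers are clipped, steps are finer."""
    mags = sorted(abs(v) for v in samples)
    idx = min(len(mags) - 1, round(pct / 100.0 * (len(mags) - 1)))
    return mags[idx] / 127.0


def mean_quant_error(samples, scale):
    """Mean absolute error after symmetric INT8 quantize/dequantize."""
    def q(v):
        return max(-127, min(127, round(v / scale))) * scale
    return sum(abs(v - q(v)) for v in samples) / len(samples)


if __name__ == "__main__":
    # Hypothetical calibration data: values in [-1, 1] plus a single outlier.
    samples = [((i % 201) - 100) / 100.0 for i in range(9999)] + [100.0]
    for name, scale in (("min-max", minmax_scale(samples)),
                        ("99.9th percentile", percentile_scale(samples))):
        print(name, round(mean_quant_error(samples, scale), 4))
```

Percentile clipping sacrifices the rare outlier to get finer steps for everything else; whether that trade is acceptable depends on how much the network relies on those outliers.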

There is also Hailo-15. Unlike its siblings, it is a processor with a built-in NPU, not an external module. Its pros and cons are broadly similar, with a few differences:

It is slower than Hailo-8 and Hailo-10.

It has a weak processor. However, if you do not need to process many cameras or perform complex preprocessing, it will be enough for you.

The processor-NPU bus is fast.

It is cheaper.

No ready-made boards out of the box. You need to develop based on the reference design (at least that was the case recently)

Axelera

There is only an interview with a representative on the channel. I have not tested it myself, so I cannot vouch for what was said.

Pros:

Very fast. According to the documentation, one of the fastest external boards.

Variety of form factors

Cons:

Quite expensive, at least when buying individual boards.

As of spring/summer, developer versions were only available for pre-order, although working samples were shown at exhibitions.

Not all transformers worked (LLM, VLM, etc.; again, this may have changed)

SIMA

There is no review on the channel, but there is an interview I recorded. I would say SIMA is less focused on Edge in the sense of "computing next to the camera" and more on "computing on a local server". It is more of an alternative to a GPU than an accelerator for a local board. That said, they support up to 8 channels.

Pros.

The only manufacturer of such boards that has more or less promised support for LLMs and similar models.

Fast. Among the fastest in terms of connectivity (many PCIe Gen4 lanes)

Cons:

Expensive. Among the most expensive

Large and power-consuming. Not quite Edge

Other plug-in accelerators

For the most part, there is very little information about them (except Coral)

Kneron (USB)

Coral (USB, M.2, PCI-E) — I would say they are already too old

Gyrfalcon — seems to have already gone out of business

BrainChip

kinara.ai

I'm sure I forgot many, so write in the comments

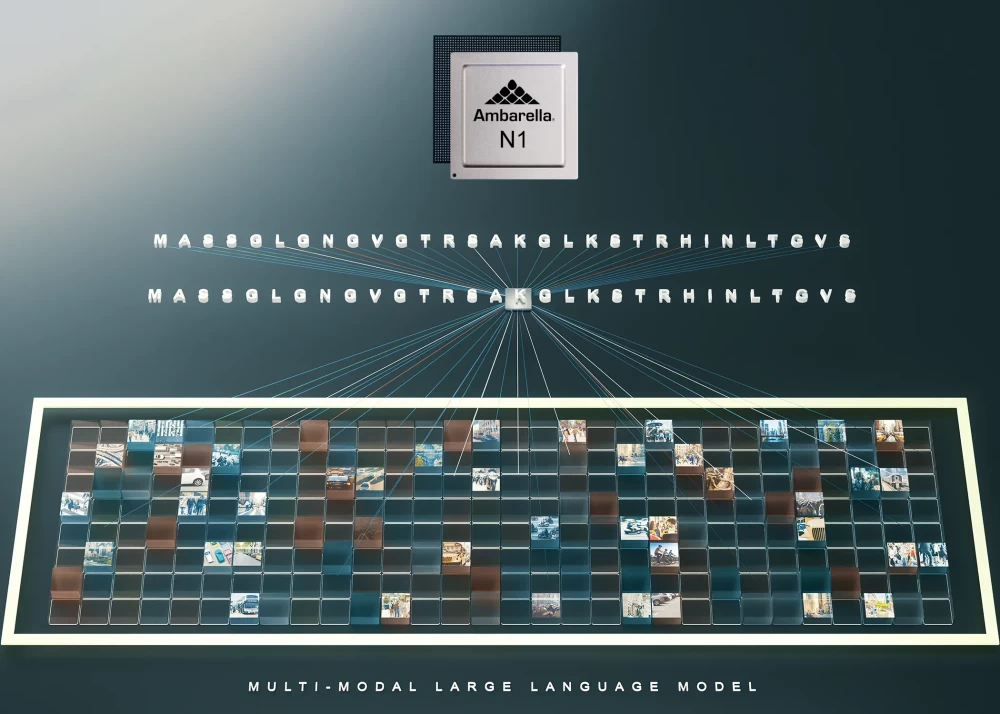

Ambarella

One of the most mysterious chips. You will not find reviews of it online, yet it is used in many cheap mass-market devices: dash cams, DJI cameras, and so on. At the same time, there is a policy of complete secrecy. I know many teams working with it, but I haven't had the chance myself, largely because of this secrecy. In 2018, when we at Cherry Labs were deciding whether to switch to this platform, the blocker was precisely the inability to test it properly.

I really hope I will get to try it someday. For now, I do not consider myself competent enough to talk about it. From what I know, it should be cheap and use INT8 quantization.

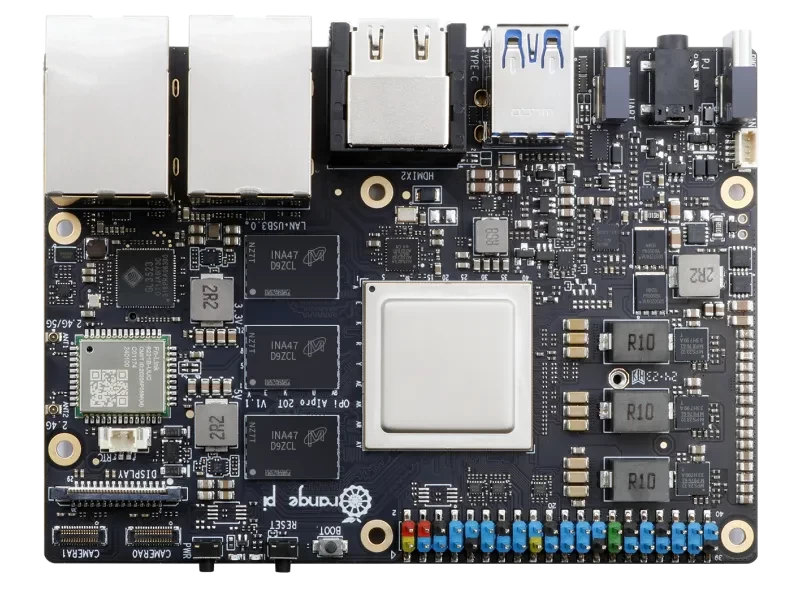

Huawei

There is a review of the Orange Pi AIpro, a board built on a Huawei chip, on the channel. I also wrote an article about it on Tekkix.

Overall, the board is quite decent and open, and it is fast.

Pros:

Fast

Cheap

Fairly painless export of most models

Rich documentation

Cons:

No LLM support

Oriented towards the Chinese market, and to some extent the Russian and Indian markets.

Documentation is in Chinese. Cannot be bought in Europe and America due to sanctions

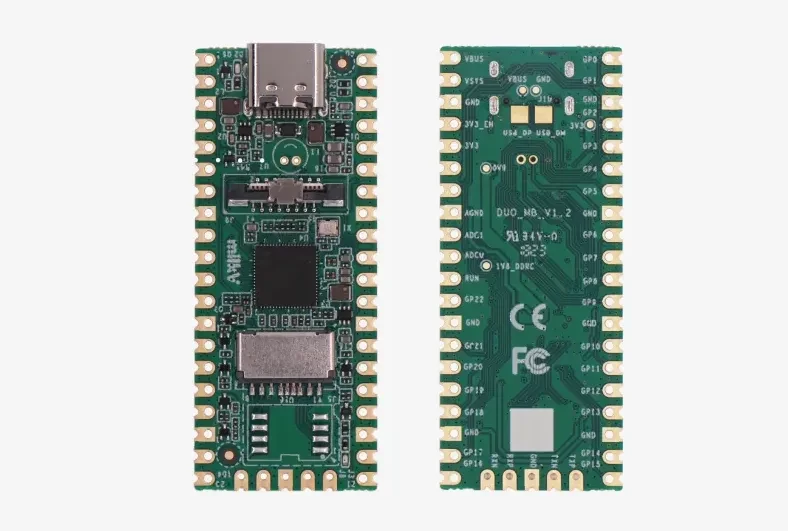

Sophgo

This is a manufacturer of AI chips that has become quite popular recently. You might have come across it in MAIX-CAM, Milk-V, SiFive, hw100k, reCamera, etc.

And again, it turns out that the board maker matters much more than the chip vendor. Watch my video about Milk-V: using the accelerator is almost impossible. There is no documentation, most examples do not build, and there is a lot of C++ code.

Compare that with the video about MAIX-CAM (not mine): a qualitative difference. Still, I would not call MAIX-CAM a product solution, rather a "craft for small batches". It is hard to give a general overview of all these platforms. I have not tested SiFive myself, and I have not found good reviews. But in a nutshell:

Pros:

Cheap

Fast enough

Cons:

Will most likely require a lot of support for a large batch

Most likely will have quite limited memory

No LLM/modern networks

Most vendors are Chinese, so they probably cannot be used for everything in Europe/USA

MediaTek

Another large company, used to working with large customers. Somewhat reminiscent of Qualcomm, and this spoils the whole experience.

The quite good, fast, and cheap Radxa NIO 12L turns out to be almost useless:

You only get access to an export tool as old as mammoth dung

MediaTek refuses to provide access to new export tools.

Radxa seems to be unaware of their existence

You can test it only if your company signs an NDA with MediaTek, which is not easy.

Does this make Genio a bad board? Overall, I expect it does not. It is a good board at a reasonable price: better than NXP in performance, worse than Qualcomm. But testing it is impossible.

Hobby boards

Many manufacturers make small, good boards aimed at enthusiasts who do not know what Computer Vision is but want to give their devices eyes. Such boards are very hard to test, as they are about "something else": often there are no detailed instructions, only a "friendly interface". Nevertheless, they do their job. Of those I tested on my channel:

Grove Vision AI

UnitV2

And probably the already mentioned MAIX-CAM also belongs to this direction.

Outdated boards

It's funny, but for many people the topic of "accelerators" is still very new, and they often do not realize that several eras have already passed. People write to me asking how to run something on Intel's Myriad, a chip that Intel has already discontinued and stopped supporting. There are many such boards, and they are often still used in production.

For example, the OAK series from Luxonis uses Intel accelerators (it seems they will soon switch to Qualcomm, but RVC 4 has not been officially released yet, so this is just my guess).

Google Coral, released almost 7 years ago, gives about the same feeling.

And other small things like K210, the original ESP32(1,2), MAIX-II, GAP8, etc.

If anyone is interested, I have provided links to the reviews I have. But these are not the boards I would recommend in 2024/2025.

Microcontrollers

Since we touched on the original ESP32, let's touch on microcontrollers. On my channel I mostly avoid such boards, except for MAX78000 and ESP32. But keep in mind that there are a lot of them now:

GAP9

Syntiant (NDP101, NDP120 (Arduino), etc.)

Analog Devices

Synaptics

SiLabs

Innatera

Nordic Semiconductor

What they all have in common:

They almost never have a proper operating system. Development is in C/C++ or MicroPython, or through Edge Impulse if it supports the board.

The number of working networks per platform is usually very small, literally one or two

The speed is usually very low. You have to use super-optimized models

Almost always the boards require int8 quantization.

Almost always manufacturers have very average documentation.

Many of the boards require a lot of C++ code

Some are completely unsuitable for images and are positioned for voice

Edge Impulse often solves these problems. But keep in mind that it may not fully support a given board, and the convenient interface it provides may partially limit both what your AI can achieve and which features of the board you can use.
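On microcontrollers, the RAM needed for activations is often a tighter limit than the weights. A rough sketch, assuming a strictly sequential INT8 model with hypothetical layer shapes: while a layer runs, its input and output must both be in memory, so the peak is the largest such pair. Runtimes such as TensorFlow Lite Micro allocate this kind of working memory from a fixed "tensor arena", but the estimate below is a simplification, not their memory planner.

```python
from math import prod


def tensor_bytes(shape, bytes_per_value=1):
    """Size of one activation tensor (INT8 by default)."""
    return prod(shape) * bytes_per_value


def peak_activation_bytes(shapes, bytes_per_value=1):
    """Peak working memory for a strictly sequential model.

    While layer k runs, both its input (shapes[k]) and output (shapes[k + 1])
    must be in RAM; earlier tensors can be freed. Skip connections, scratch
    buffers, and runtime overhead are ignored.
    """
    return max(
        tensor_bytes(a, bytes_per_value) + tensor_bytes(b, bytes_per_value)
        for a, b in zip(shapes, shapes[1:])
    )


if __name__ == "__main__":
    # Hypothetical tiny CNN on a 96x96 RGB input (HWC shapes).
    shapes = [(96, 96, 3), (48, 48, 16), (24, 24, 32), (12, 12, 64), (1, 1, 10)]
    print(peak_activation_bytes(shapes))  # 64512 bytes (63 KiB) in INT8
```

The same model in FP32 would need four times as much (258048 bytes, i.e. 252 KiB), which alone rules out many microcontrollers and is one reason INT8 is almost always required.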

Other boards

There are many other boards. So far, I haven't had the time to test them all:

MAIX-III — I tested this one, but it seems mediocre to me

Texas Instruments (BeagleBoard, etc.). Quite a popular platform. I have now found a way to test it, so maybe I'll give it a try.

Kneron — a lot of marketing about it

MAIX-IV (AX650N, axera-tech) — I wouldn't expect anything great from it. Feels like a continuation of the third version

AMD Kria - this is an FPGA. I'm skeptical about ML on FPGAs. All my acquaintances who did projects on this board swore for a long time, so I decided not to test it.

Arm Ethos (U55, U65) - Arm has also decided to "do ML". So far they release very weak accelerators, but I hope they will eventually move into a more powerful segment. Haven't checked anything yet.

Renesas — they also have a lot of boards, seems like a fairly large vendor, but haven't looked into it yet

Syntiant

BrainChip - tried to talk to them at some exhibition, but everyone was busy. And it seems not easy to get the hardware

MemryX

deepx.ai — Asked them for a price, but they gave me something like "a couple of thousand USD", so I gave up

Horizon X3M - seems to be no longer produced

Kendryte K510/K230 - seems the team switched to mining, but the boards are still being sold.

Sony IMX500 - a very strange chip: the processor is integrated into the image sensor. It's like OAK-D on a single chip. RPi released a camera based on it, but knowledgeable people say the chip is hard to work with, and performance is reportedly not great.

Do you have any questions? Or maybe there is some interesting board that I haven't considered here. Write to me!
