
What was found inside Claude when we peeked into its mind

We are used to thinking of neural networks as a "black box": data goes in, an answer comes out, and inside is magic. But what if the box could be made transparent? The Anthropic team carried out an extensive dissection of Claude 3 Sonnet and identified specific "features" responsible for concepts, from the Golden Gate Bridge to vulnerabilities in code. Let's look at how a neural network's "thoughts" are structured and why this discovery changes our understanding of AI safety.

I'm the author of the channel "tokens to the wind", and today we will discuss one of the most interesting AI studies of recent times.

There is one thing that keeps AI researchers up at night: we have built systems that write code, compose poetry, and pass medical and bar exams, yet we have no idea how exactly they do it. It is literally a black box: data goes in, an answer comes out, and what happens in between is a mystery.

This is not just academic curiosity. When a model hallucinates, we don't understand why. When it refuses a harmless request or, conversely, carries out a dangerous one, we can only speculate about the reasons. When companies say "our model is safe", it's more faith than knowledge.

At Anthropic, they decided to tackle this problem. The interpretability team published a study titled "Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet", in which they effectively dissected Claude and mapped out what is happening inside it. The results are at times expected, at times frightening, and at times just amusing.

Let’s dive in.

A bit of context: where the problem came from

Neural networks are not a new technology. The first works appeared back in the 1940s, and by the 1980s there were already quite functional models. But back then they were small — hundreds or thousands of neurons. Researchers could literally draw a network diagram on a board and trace how the signal passed from input to output.

Modern language models have tens or hundreds of billions of parameters. Anthropic has not disclosed the size of Claude 3 Sonnet, the model studied here, but nothing on that scale can be drawn on a board, even in theory.

At the same time, the models have started to exhibit behaviors that no one explicitly programmed. They understand irony, solve logic problems, and write functioning code in languages that were barely mentioned in the training data. Where does this come from? What’s going on inside?

Until recently, the answer was roughly this: “Well, it all works together somehow.” Not very scientific, I agree.

Why you can’t just look at the neurons

The first thing that comes to mind is to take a look at what each neuron is responsible for. Sounds logical: one neuron — one concept. Neuron #4231 activates for cats, neuron #7892 — for recipes, neuron #1337 — for code.

This idea is known as the "grandmother neuron" (or "grandmother cell"): the hypothesis that the brain has a dedicated neuron that fires when you see your grandmother. A beautiful concept, but it doesn't describe modern neural networks.

The reality turned out to be much more complicated. When researchers began studying the activations of individual neurons in large models, they found a complete mess. The same neuron can respond to financial terms, the color blue, mentions of Australia, and for some reason, the word "Tuesday." Another one responds to medical terminology, baking recipes, and French grammar.

Neurons like this are called polysemantic. The usual explanation is superposition: the model packs more concepts than it has neurons into its activations by using clever combinations of them. Imagine you only have three colors of paint but want to paint a rainbow: you have to mix them.

Why do models do this? Efficiency. If each concept required its own neuron, models would need vastly more neurons than they have. Instead, the model represents concepts as "directions" in activation space, that is, as particular combinations of neurons, so every neuron takes part in many concepts at once.
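A toy illustration of the "directions" idea, not a model of Claude's actual geometry: in a space with many dimensions, random directions are almost orthogonal, so you can store far more concepts than there are dimensions and still read back a sparse handful of active ones with a dot product. The sizes and concept indices below are arbitrary choices for the demo.

```python
import math
import random


def random_unit_vector(dim, rng):
    """Draw a random direction in `dim`-dimensional space."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def superposition_demo(dim=256, n_concepts=2048, active=(3, 41, 1500), seed=0):
    """Store a few active concepts in a vector with far fewer dimensions than concepts."""
    rng = random.Random(seed)
    # 8x more concept directions than dimensions ("neurons").
    directions = [random_unit_vector(dim, rng) for _ in range(n_concepts)]

    # Encode the active concepts as the sum of their directions.
    activation = [0.0] * dim
    for i in active:
        activation = [a + d for a, d in zip(activation, directions[i])]

    # Read every concept back by projecting onto its direction.
    scores = [dot(activation, d) for d in directions]
    top = sorted(range(n_concepts), key=lambda i: scores[i], reverse=True)[: len(active)]
    return sorted(top), scores


top, scores = superposition_demo()
print(top)
```

Readout works here because only three concepts are active at once. Make many of them active simultaneously and the small overlaps between directions add up to noise, which is why sparsity is central to what follows.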

The problem is that for us humans, this makes the model completely opaque. Looking at individual neurons is like trying to understand a book by examining individual letters. Technically, all the information is there, but such analysis is of little use.

Sparse autoencoders: a decoder for thoughts

The solution applied by the Anthropic team is elegant and technically sophisticated.

The idea is this: if the model encodes concepts as combinations of neurons, let's train a separate neural network, a sparse autoencoder (SAE), to find these combinations and break them back down into individual, interpretable "features."

How it works:

We take activations from a certain layer of Claude

We pass them through the SAE, which maps the activation vector into a space of much higher dimensionality (the largest SAE in the study had about 34 million features)

We require that most of these features be inactive at every moment (hence the term "sparse")

We train the SAE to reconstruct the original activations from the sparse representation
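The four steps can be sketched in plain Python, assuming the common SAE formulation: a ReLU encoder, a linear decoder, and a loss made of squared reconstruction error plus an L1 penalty weighted by decoder-direction norms. The sizes, the random untrained weights, and the `l1_coeff` value are illustrative assumptions; the real SAEs were trained at vastly larger scale with a more involved setup, and the training loop (gradient descent on this loss over many activations) is omitted.

```python
import math
import random


def matvec(matrix, vec):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


class SparseAutoencoder:
    """Toy SAE: d_model-dim activation -> n_features-dim sparse code -> reconstruction."""

    def __init__(self, d_model, n_features, l1_coeff=5.0, seed=0):
        rng = random.Random(seed)
        self.l1_coeff = l1_coeff
        # Encoder: one row of weights per feature.
        self.w_enc = [[rng.gauss(0.0, 0.1) for _ in range(d_model)] for _ in range(n_features)]
        self.b_enc = [0.0] * n_features
        # Decoder: one direction in activation space per feature.
        self.w_dec = [[rng.gauss(0.0, 0.1) for _ in range(d_model)] for _ in range(n_features)]
        self.b_dec = [0.0] * d_model

    def encode(self, x):
        """Step 2: project into the much wider feature space; ReLU zeroes negative evidence."""
        pre = [p + b for p, b in zip(matvec(self.w_enc, x), self.b_enc)]
        return [max(0.0, p) for p in pre]

    def decode(self, f):
        """Step 4: rebuild the activation as a bias plus a weighted sum of feature directions."""
        x_hat = list(self.b_dec)
        for fi, direction in zip(f, self.w_dec):
            if fi > 0.0:
                x_hat = [a + fi * d for a, d in zip(x_hat, direction)]
        return x_hat

    def loss(self, x):
        """Reconstruction error plus the sparsity penalty (step 3)."""
        f = self.encode(x)
        x_hat = self.decode(f)
        reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_hat))
        # L1 penalty on feature activations, weighted by each decoder direction's length
        # so the SAE cannot dodge the penalty by shrinking f and growing the decoder.
        sparsity = sum(fi * math.sqrt(sum(d * d for d in direction))
                       for fi, direction in zip(f, self.w_dec))
        return reconstruction + self.l1_coeff * sparsity, f


rng = random.Random(1)
x = [rng.gauss(0.0, 1.0) for _ in range(8)]        # step 1: stand-in for one token's activation
sae = SparseAutoencoder(d_model=8, n_features=64)  # 8x wider feature space
total_loss, features = sae.loss(x)
print(total_loss, sum(1 for fi in features if fi > 0.0))
```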

The key point is the sparsity requirement. If only a tiny fraction of the features (on average fewer than 300 out of 34 million) is active at any given moment, the SAE is forced to make each feature meaningful and specific. It cannot lump everything together; it has to distribute concepts across separate dimensions.

For those who want technical details:

Model: Claude 3 Sonnet

Number of features: three SAEs, with roughly 1M, 4M, and 34M features

Where activations were taken from: the residual stream at the middle layer of the model

Quality metrics: reconstruction loss (how well the original activations are restored), L0 (average number of active features per token), interpretability score (how well a human can understand the purpose of a feature)

Results: on average fewer than 300 of the 34 million features are active on a given token, and the SAE reconstruction explains at least 65% of the variance in the activations. And, most importantly, human experts were able to understand the purpose of about 70-80% of the identified features.
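The first two metrics are easy to pin down in code. A minimal sketch, assuming activations, reconstructions, and feature vectors are given as lists of equal-length vectors (the function names are hypothetical): L0 counts nonzero features per token, and explained variance compares the reconstruction error with the total spread of the activations around their mean. The interpretability score has no such formula; it comes from human judgment.

```python
def mean_l0(feature_vectors):
    """L0: average number of nonzero features per token."""
    return sum(sum(1 for f in fv if f != 0.0) for fv in feature_vectors) / len(feature_vectors)


def fraction_variance_explained(originals, reconstructions):
    """1 - (squared reconstruction error) / (squared deviation from the mean activation)."""
    n, dim = len(originals), len(originals[0])
    mean = [sum(x[j] for x in originals) / n for j in range(dim)]
    error = sum((a - b) ** 2
                for x, x_hat in zip(originals, reconstructions)
                for a, b in zip(x, x_hat))
    total = sum((a - m) ** 2 for x in originals for a, m in zip(x, mean))
    return 1.0 - error / total


# Share of a 34M-feature dictionary that ~300 active features represent:
print(300 / 34_000_000)  # roughly 8.8e-06, i.e. under 0.001%
```

A perfect reconstruction scores 1.0; always predicting the mean activation scores 0.0.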

What was found inside Claude

And this is where it gets really interesting. Researchers discovered features of astonishing specificity — not vague categories like "transport" or "architecture," but completely concrete concepts.

The "Golden Gate Bridge" feature

One feature activated exclusively on mentions of the Golden Gate Bridge in San Francisco. Not just "bridge," not just "San Francisco," not just "landmark" — but this specific bridge.

The feature triggered on:

"The Golden Gate Bridge is beautiful"

"I drove across the Golden Gate yesterday"

"Мост Золотые Ворота — символ Сан-Франциско" (Russian for "The Golden Gate Bridge is a symbol of San Francisco")

Mentions in other languages

And did not trigger on:

Other San Francisco bridges, such as the Bay Bridge

Other famous bridges around the world

The word "golden" in other contexts

The word "gate" alone

Moreover, the feature understood context. If someone wrote "I walked through the gates into the garden" — silence. If "I drove through the Golden Gate" — activation.

The "insecure code" feature

Another feature activated on examples of code with vulnerabilities. Not on all code, not on all errors — but specifically on security issues.

Triggers the feature:

```python
query = f"SELECT * FROM users WHERE id = {user_input}"  # SQL injection
```

Does not trigger:

```python
query = "SELECT * FROM users WHERE id = ?"
cursor.execute(query, (user_input,))  # parameterized query
```
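To see concretely why the first snippet is dangerous, here is a runnable sketch using Python's built-in `sqlite3` module and an in-memory database; the table, the helper names, and the malicious input are made up for illustration. With string formatting, the input becomes part of the SQL text; with a `?` placeholder, the driver binds it as a plain value.

```python
import sqlite3


def make_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (id, name) VALUES (?, ?)",
                     [(1, "alice"), (2, "bob"), (3, "carol")])
    return conn


def lookup_unsafe(conn, user_input):
    # Vulnerable: user_input is spliced into the SQL statement itself.
    query = f"SELECT * FROM users WHERE id = {user_input}"
    return conn.execute(query).fetchall()


def lookup_safe(conn, user_input):
    # Safe: the driver passes user_input as a bound value, never as SQL.
    query = "SELECT * FROM users WHERE id = ?"
    return conn.execute(query, (user_input,)).fetchall()


conn = make_db()
malicious = "1 OR 1=1"
print(lookup_unsafe(conn, malicious))  # returns every row
print(lookup_safe(conn, malicious))    # returns no rows
```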

The model somehow learned the concept of "this is a security risk": not just a syntax pattern, but an understanding of why one piece of code is safe and another is not.

Similar features were found for:

Buffer overflow vulnerabilities in C

Unsafe deserialization

Hardcoded credentials

And other classic security issues

The "internal conflict" feature

This is almost philosophy. A feature was found that activated in situations when the model encountered contradictory instructions or ethical dilemmas.

When a user asks to do something dubious but formally not prohibited. When the system prompt conflicts with the user's request. When one has to choose between honesty and politeness. In such situations, this feature would activate.

Neural doubt, literally.

Other findings

The researchers cataloged thousands of features. Among them:

Features for specific known individuals (separate ones for Messi, Trump, Einstein)

Features for emotional states (anxiety, joy, confusion)

Features for abstract concepts (justice, recursion, irony)

Meta-level features (for example, recognizing that the model is an AI)

Features for various languages and dialects

Features for writing styles

Features related to the model's self-identification are particularly interesting. There is a feature that activates when Claude understands that the conversation is about itself. There is a feature for situations when a user tries to make the model pretend to be someone else.

Experiment: what if we tweak the knobs?

Finding features is half the job. The researchers decided to test whether these features truly govern behavior rather than just correlate with it. To do this, they began to artificially amplify and weaken individual features during the model's operation.
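A minimal sketch of what "turning a knob" can mean, assuming you have a trained SAE for the layer: compute how far a feature's current activation is from the value you want, and add that difference times the feature's decoder direction to the residual-stream vector before the model continues its forward pass. The function, feature IDs, and numbers are hypothetical; the paper describes clamping features to chosen values, and this sketch only captures the core arithmetic.

```python
def steer(residual, feature_acts, decoder_dirs, targets):
    """Move selected SAE features to target activations inside a residual-stream vector.

    residual     -- activation vector at the layer the SAE was trained on
    feature_acts -- {feature_id: current activation from the SAE encoder}
    decoder_dirs -- {feature_id: that feature's decoder direction (same length as residual)}
    targets      -- {feature_id: desired activation}; 0.0 switches a feature off
    """
    steered = list(residual)
    for fid, target in targets.items():
        # Because the decoder is linear, changing one feature's activation by `delta`
        # shifts the reconstruction by delta * (its decoder direction).
        delta = target - feature_acts.get(fid, 0.0)
        steered = [r + delta * d for r, d in zip(steered, decoder_dirs[fid])]
    return steered


# Hypothetical features: 7 stands in for "Golden Gate Bridge", 9 for some unwanted concept.
residual = [0.5, -0.2, 0.1]
decoder_dirs = {7: [1.0, 0.0, 0.0], 9: [0.0, 1.0, 0.0]}
feature_acts = {7: 0.4, 9: 0.3}
max_act_7 = 0.5  # assumed maximum activation of feature 7 observed in data

steered = steer(residual, feature_acts, decoder_dirs,
                {7: 10 * max_act_7,  # clamp to 10x its max ("amplify tenfold")
                 9: 0.0})            # suppress entirely
boosted = steer(residual, feature_acts, decoder_dirs,
                {7: 1.2 * feature_acts[7]})  # "boost by 20%"
print(steered, boosted)
```

The same function covers amplifying, weakening, and suppressing: only the target value changes.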

Golden Gate Bridge experiment

When the "Golden Gate Bridge" feature was clamped to ten times its maximum activation, Claude began to behave... obsessively.

Normal dialogue:

User: Tell me about yourself.

Claude: I am an AI assistant created by Anthropic to help people with various tasks...

With the amplified feature:

User: Tell me about yourself.

Claude: I am like the majestic Golden Gate Bridge — a link between human questions and the ocean of knowledge. Just as this iconic bridge connects the shores of San Francisco Bay...

The model literally could not stop thinking about the Golden Gate Bridge. Any question — about the weather, programming, philosophy — it managed to relate back to this landmark.

It’s funny, but telling: the feature really controls what the model thinks about.

Practical experiments

More useful results were obtained with other features:

Enhancing the "refusal of dangerous requests" feature made the model more cautious — it began to refuse even harmless requests that remotely resembled something questionable

Weakening this same feature made the model more compliant

Enhancing the "formal style" feature changed the tone of the responses

Enhancing the "doubt" feature made the model more likely to acknowledge uncertainty

This opens the way to fine-grained control over model behavior. Instead of retraining the entire model on new data (expensive, slow, and unpredictable), you can simply adjust the relevant features.

Why this is really important

Okay, we found some features, twisted some knobs — so what? In fact, this research has several important practical applications.

AI safety

Currently, model safety works somewhat like spam filters in 2005: blacklists, heuristics, keywords. A user writes "how to make a bomb", and we refuse. They write "how to make a firework for a science project", and we refuse that too, just in case. They ask the same thing through metaphor or role-play, and it slips through because we didn't recognize it.

If we have access to features responsible for dangerous behavior, we can:

Monitor their activation in real-time

Forcefully suppress certain features

Use activation as a signal for additional checks

In principle, this is more robust than trying to anticipate every dangerous phrasing: we look not at the words, but at what the model "thinks."
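A minimal sketch of the monitoring idea, assuming the SAE gives you per-token feature activations as dicts. The feature IDs, labels, and thresholds below are hypothetical placeholders, not real Claude features.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    token_index: int
    feature_id: int
    label: str
    activation: float


# Hypothetical watchlist: feature id -> (human-readable label, alert threshold).
WATCHLIST = {
    101: ("dangerous request", 2.0),
    202: ("role-play used to bypass rules", 1.5),
}


def scan(per_token_features, watchlist=WATCHLIST):
    """Return an Alert for every token where a watched feature reaches its threshold."""
    alerts = []
    for t, features in enumerate(per_token_features):
        for fid, (label, threshold) in watchlist.items():
            act = features.get(fid, 0.0)
            if act >= threshold:
                alerts.append(Alert(t, fid, label, act))
    return alerts


def needs_review(per_token_features, watchlist=WATCHLIST):
    """Treat any alert as a signal for an extra check, not as an automatic refusal."""
    return bool(scan(per_token_features, watchlist))


# Sparse activations for three tokens: {feature_id: activation}.
tokens = [{5: 0.7}, {101: 0.4, 9: 1.1}, {101: 2.6}]
alerts = scan(tokens)
for alert in alerts:
    print(alert)
```

Per-feature thresholds keep the design simple; in practice they would have to be calibrated against real traffic to balance missed cases against false alarms.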

Debugging and understanding errors

Is the model hallucinating? Right now, we can only shrug our shoulders and ask it to "think again." With interpretability at the feature level, you can literally see what was active at the moment of hallucination.

It may turn out that hallucinations occur when the "confidence" feature is active but the "fact-checking" feature is inactive. Or when the model "confuses" two similar concepts due to overlapping features. This provides tools for real debugging, rather than guessing.
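For debugging, the simplest view is a ranked list of what fired on the token in question. A sketch, assuming per-token activations as a dict and a hypothetical `labels` mapping from feature IDs to descriptions (in practice such labels come from people interpreting each feature):

```python
import heapq


def top_features(features, k=5, labels=None):
    """Return up to k most active features on one token as (feature_id, label, activation)."""
    labels = labels or {}
    best = heapq.nlargest(k, features.items(), key=lambda item: item[1])
    return [(fid, labels.get(fid, f"feature {fid}"), act) for fid, act in best if act > 0.0]


# Hypothetical labels and activations for the token where an answer went wrong.
labels = {11: "confident factual assertion", 42: "famous physicist", 77: "specific year"}
token_features = {11: 3.2, 42: 1.4, 77: 0.9, 90: 0.0}

for fid, label, act in top_features(token_features, k=3, labels=labels):
    print(f"{fid:>4}  {act:5.2f}  {label}")
```

Comparing such lists between a correct answer and a hallucinated one is where hypotheses like these could actually be tested.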

Fine-tuning

Currently, to change the model's behavior, you either need to retrain it (expensive), write long system prompts (unreliable), or fine-tune on examples (unpredictable).

With access to features, you could say: "boost feature X by 20%, weaken feature Y by 30%." The model becomes a construction kit instead of a black box.

Scientific understanding

Finally, it's simply interesting from a scientific perspective. We are starting to see that neural networks are not just "stochastic parrots," as critics like to say. Structures form inside them that suspiciously resemble what we would call "concepts" or "knowledge."

This does not prove that models "understand" in the human sense. But it shows that there is something more complex inside than simple pattern memorization.

Limitations and open questions

It would be unfair not to mention the problems. The research is groundbreaking, but we are still far from fully understanding the models.

Scalability

Training an SAE with 34 million features requires thousands of GPU hours, and Claude 3 Sonnet is far from the largest model. What will happen with Claude 3.5 Opus? With GPT-5? With future models that are ten times larger?

Methods need to be scaled, and that is non-trivial.

Not everything is interpretable

About 20-30% of the discovered features remain a mystery to researchers. They clearly react to something, but no one has managed to work out what.

There are two possibilities: either these are artifacts of training the SAE, or they are concepts that exist within the model but have no analogs in human thinking. The second option is more interesting — and a bit frightening.

Causation vs correlation

A feature is activated by a specific pattern — but is it the cause of the model's behavior or just a correlate? Experiments with boosting features partially answer this question, but not completely.

Maybe there is a deeper level that we have not yet seen.

Dynamics over time

The study looks at a "snapshot" of activations at a specific moment. But the model operates sequentially, token by token. How do features interact over time? How does one activation affect the next?

This is a separate large area of research.

Other architectures

The work was done on a transformer. Will the method carry over to other architectures, such as Mamba and other state-space models (SSMs), or to future designs? That is still unknown.

What this means for us, users

If you’ve read this far, you may be wondering: okay, interesting, but what does this mean for me?

Firstly, models will become safer and more predictable. Not tomorrow, but in the next year or two, companies will begin to use interpretability as a standard tool. Fewer strange failures, fewer hallucinations, more stable behavior.

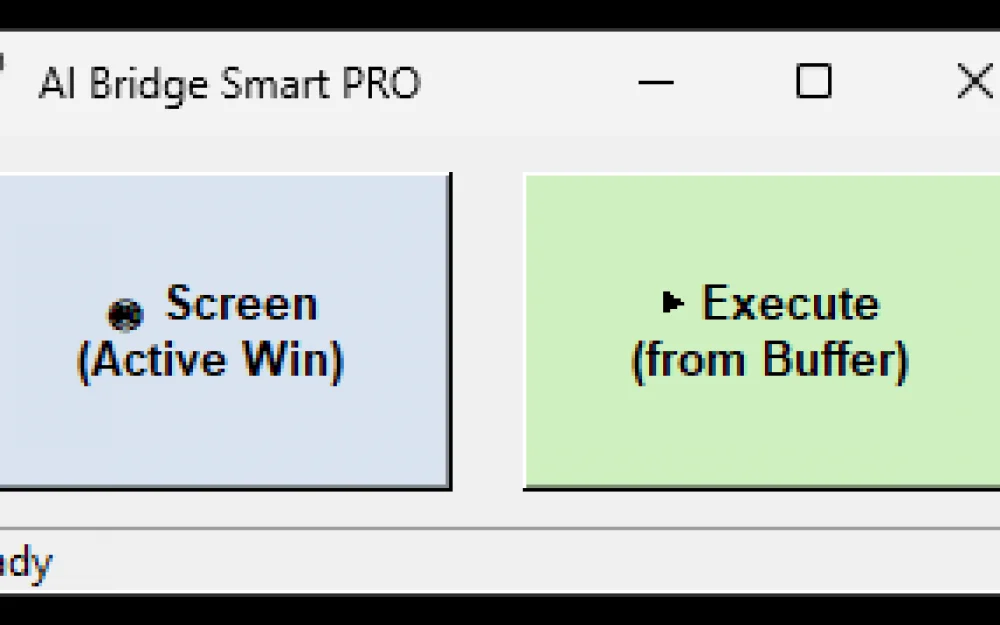

Secondly, there will be an opportunity for customization at a new level. Want a model that writes more formally? Or more creatively? Or with greater attention to detail? Instead of tweaking prompts, you will simply be able to adjust the necessary “knobs.”

Thirdly, regulators will start demanding explainability. The EU with its AI Act, American initiatives—all of them are moving towards the requirement to “explain why your model made such a decision.” Interpretability is the answer to this request.

What’s next

Anthropic has not stopped at this research. They already have working tools for monitoring models based on features. According to rumors (and hints in the company's blogs), this is how they catch attempts to bypass restrictions: not by keywords, but by watching for suspicious features to activate.

Google DeepMind and OpenAI are also working on interpretability, although they publish less. This is becoming a standard area of research, not an exotic one.

I expect that over the next year we will see:

Interpretability tools in commercial APIs (possibly for an additional fee)

Regulatory requirements for model explainability

New fine-tuning methods based on features

Progress in understanding hallucinations and preventing them

The era of black boxes is gradually coming to an end. And this is perhaps one of the best pieces of news in AI in recent years.

P.S. Anthropic has released an interactive visualizer of the discovered features on its website, so you can dig in yourself and see which concepts the model "knows". Fair warning: it is captivating. I lost a couple of hours there.

The original article is on my channel "tokens to the wind", where I sometimes write about things like this, and sometimes about how LLMs think, or just pretend to.
